Kubernetes Persistent Storage for Containers

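Earlier we used volumes for both local and network storage. For example, an NFS mount required the NFS server IP, the exported directory to mount, and the mount path inside the container. This section introduces two objects, PersistentVolume (PV) and PersistentVolumeClaim (PVC). They are designed to let storage resources be managed as part of the cluster.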

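You can think of a PV as an interface: to mount NFS, for example, you simply consume it through a PVC. So we define the PV's size and its access mode, and that is all a consumer needs to know. That is the design idea behind PV and PVC. The main job of a PV is to abstract your backend storage so the cluster can manage it. Provisioning comes in two forms: static, where you create PVs by hand, and dynamic, where a PV is created for you automatically. Both are covered below.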

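A PVC spares users from caring how the storage is implemented; they only state how much capacity they need. A PV is a persistent volume: a Pod using a PV backed by network storage such as NFS can reach its data from any node, even after the Pod is deleted and recreated. PVCs make storage simpler to use and part of the orchestration. So the relationship is simple: the PV is the provider, supplying storage capacity, and the PVC is the consumer. Pairing the two is called binding.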

PV Static Provisioning

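Using a PV involves three parts: defining the container application, defining the volume requirement, and defining the data volume. Start with the container definition.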

Define the PVC

The volume's source now only needs to name a PVC. Think of the PVC as a matchmaker between the application and the storage.

```
[root@k8s01 yml]# cat nginx-pvc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: web
spec:
  selector:
    matchLabels:
      app: web
  replicas: 3
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
      volumes:
      - name: wwwroot
        persistentVolumeClaim:
          claimName: nginx-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```

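The access mode defined here is ReadWriteMany: the volume can be mounted read-write by many nodes, abbreviated RWX. There are two other modes:

- ReadWriteOnce: the volume can be mounted read-write by a single node, abbreviated RWO.
- ReadOnlyMany: the volume can be mounted read-only by many nodes, abbreviated ROX.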

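This amounts to a requirement: 5Gi of space with access mode ReadWriteMany. The PVC carries this requirement, and Kubernetes looks for a PV that satisfies it.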
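The request is written as 5Gi, a Kubernetes resource quantity with a binary suffix (Gi = 2^30 bytes), which is not the same amount as the decimal 5G (10^9 bytes). A minimal Python sketch of how the common storage suffixes translate to bytes; parse_quantity is a hypothetical helper, not part of any Kubernetes client library, and it assumes an integer followed by an optional suffix (real quantities also allow fractions, milli-units and exponents).

```python
# Binary (power-of-two) and decimal (power-of-ten) suffixes used in
# Kubernetes resource quantities. Only the common storage suffixes are covered.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def parse_quantity(text: str) -> int:
    """Convert a quantity such as '5Gi' or '5G' to a byte count (hypothetical helper).

    Simplification: accepts only an integer followed by an optional suffix.
    """
    text = text.strip()
    # Try binary suffixes first, then decimal ones.
    for suffixes in (BINARY, DECIMAL):
        for suffix, factor in suffixes.items():
            if text.endswith(suffix):
                return int(text[: -len(suffix)]) * factor
    return int(text)  # no suffix: plain bytes


if __name__ == "__main__":
    for q in ("5Gi", "5G", "512Mi"):
        print(q, "=", parse_quantity(q), "bytes")
```

So the 5Gi requested here is 5,368,709,120 bytes, about 7% more than 5G.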

Define the PV

Finally, define the data volume itself.

```
[root@k8s01 yml]# cat pv.yml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nginx-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /data/
    server: 192.168.10.41
```

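The access mode here matches the PVC's. Neither the PV nor the PVC names a storage class, so the two can be matched. If your cluster defines a default StorageClass, a PVC without storageClassName is assigned that class and would be dynamically provisioned instead; set storageClassName: "" on the PVC to force static binding. Now the resources can be created.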

Create the resources

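Create the PV first. When you create a PV you don't actually know whether anyone will need it, so typically many PVs are created in advance and left waiting. Once you publish a storage requirement as a PVC, it is matched against the existing PVs: if a suitable one exists, the two are bound right away; if not, the PVC stays Pending and any Pod that uses it cannot start. So let's create the PV now.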

```
[root@k8s01 yml]# kubectl create -f pv.yml
persistentvolume/nginx-pv created
[root@k8s01 yml]# kubectl get pv
NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
nginx-pv   5Gi        RWX            Retain           Available                                   5s
```

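kubectl get pv shows which PVs in the cluster are available. Only the one just created is listed; its status is Available and its access mode is RWX, and whoever claims it may use it freely. Now create the application. Its PVC requirement is already written, so if nothing is mistyped, the claim will bind as soon as it is created.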
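The STATUS column is the PV's phase (Available here, Bound once claimed), and RECLAIM POLICY decides what happens after the claim is deleted: Retain keeps the PV and its data, leaving the PV Released until an administrator cleans it up, while Delete removes the PV and asks its provisioner to delete the backing storage. The sketch below is a toy state model of those transitions, assuming only the Available, Bound and Released phases and the two policies that appear in this article; next_phase is a hypothetical function, not how the controller is implemented.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    AVAILABLE = "Available"  # created, not yet bound to any claim
    BOUND = "Bound"          # bound to exactly one PVC
    RELEASED = "Released"    # claim deleted, data kept, not re-bound automatically


def next_phase(phase: Phase, event: str, reclaim_policy: str) -> Optional[Phase]:
    """Toy transition function; returns None when the PV object is removed."""
    if phase is Phase.AVAILABLE and event == "claim_bound":
        return Phase.BOUND
    if phase is Phase.BOUND and event == "claim_deleted":
        if reclaim_policy == "Retain":
            return Phase.RELEASED  # an administrator must clean up manually
        if reclaim_policy == "Delete":
            return None            # PV and its backing storage are removed
    return phase  # anything else leaves the phase unchanged in this model


if __name__ == "__main__":
    # nginx-pv in this article uses the Retain policy.
    p = next_phase(Phase.AVAILABLE, "claim_bound", "Retain")
    print(p, next_phase(p, "claim_deleted", "Retain"))
```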

```
[root@k8s01 yml]# kubectl create -f nginx-pvc.yaml
deployment.apps/nginx-deployment created
persistentvolumeclaim/nginx-pvc created
[root@k8s01 yml]# kubectl get pods,pv,pvc
NAME                                    READY   STATUS    RESTARTS   AGE
pod/nginx-deployment-7b77d54576-6bnpc   1/1     Running   0          44s
pod/nginx-deployment-7b77d54576-6fwz4   1/1     Running   0          44s
pod/nginx-deployment-7b77d54576-8cx4w   1/1     Running   0          44s

NAME                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   REASON   AGE
persistentvolume/nginx-pv   5Gi        RWX            Retain           Bound    default/nginx-pvc                           9m51s

NAME                              STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/nginx-pvc   Bound    nginx-pv   5Gi        RWX                           44s
[root@k8s01 yml]#
```

No problems: the Pods started normally and the PVC is bound to the PV. Let's try accessing the application through a Service.

```
[root@k8s01 yml]# cat services.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  labels:
    app: web
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: web
[root@k8s01 yml]# kubectl create -f services.yaml
service/nginx-service created
[root@k8s01 yml]# kubectl get svc
NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP        13h
nginx-service   NodePort    10.0.0.188   <none>        80:49302/TCP   61s
[root@k8s01 yml]# curl 192.168.10.92:49302
hello world
[root@k8s01 yml]#
```

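That works. The "hello world" response comes from an index.html that was already in the NFS export /data, which is mounted over /usr/share/nginx/html in every replica. This is a deployment using a persistent volume in static mode. When matching a PVC to a PV, Kubernetes mainly looks at storage capacity and access mode (the storage class must agree as well), and a PV must satisfy both to be bound. If several PVs qualify, one of them is bound (the controller favors the smallest one that fits); if none does, no binding happens. You may find it tedious to create PVs by hand every time. Can a PV be created automatically once the requirement is published? Yes: that is dynamic PV provisioning. Creating PVs manually is fine for a small deployment with only a few applications, but at scale it becomes a burden. Let's look at dynamic provisioning next.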
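The matching rule described above can be modeled in a few lines. This is a simplified Python sketch, not the persistent-volume controller's actual code: it assumes a PV qualifies when it is Available, has the same storage class, offers every access mode the claim asks for, and has at least the requested capacity, and it then picks the smallest qualifying PV. The PV and PVC dataclasses and find_match are hypothetical names; label selectors, volume modes and node affinity are ignored, and big-pv and rwo-pv are made-up volumes for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

GI = 2**30  # bytes per GiB


@dataclass
class PV:
    name: str
    capacity: int                 # bytes
    access_modes: set
    storage_class: str = ""       # "" means no class, as with nginx-pv
    phase: str = "Available"


@dataclass
class PVC:
    name: str
    request: int                  # bytes
    access_modes: set
    storage_class: str = ""


def find_match(claim: PVC, volumes: List[PV]) -> Optional[PV]:
    """Return the smallest Available PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if pv.phase == "Available"
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes  # PV must offer every requested mode
        and pv.capacity >= claim.request
    ]
    # No candidate means the claim stays Pending (and so do Pods that use it).
    return min(candidates, key=lambda pv: pv.capacity, default=None)


if __name__ == "__main__":
    pvs = [
        PV("nginx-pv", 5 * GI, {"ReadWriteMany"}),
        PV("big-pv", 50 * GI, {"ReadWriteMany", "ReadWriteOnce"}),  # hypothetical
        PV("rwo-pv", 5 * GI, {"ReadWriteOnce"}),                    # hypothetical
    ]
    claim = PVC("nginx-pvc", 5 * GI, {"ReadWriteMany"})
    match = find_match(claim, pvs)
    print(match.name if match else "Pending")
```

Preferring the smallest fit keeps large volumes free for claims that actually need them.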

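The YAML files below are adapted from the upstream example (that kubernetes-incubator/external-storage repository has since been archived; the NFS client provisioner is now maintained as kubernetes-sigs/nfs-subdir-external-provisioner):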

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs/docs/demo

PV Dynamic Provisioning (StorageClass)

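Kubernetes provides a mechanism called Dynamic Provisioning, built around the StorageClass. A StorageClass is an API object that names a provisioner and its parameters; that provisioner operates your backend storage automatically and creates the corresponding PV for you. Below we define a StorageClass, still using NFS as the backend.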
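A StorageClass does not provision anything by itself: it names a provisioner (fuseim.pri/ifs in this article), and only a provisioner process registered under exactly that name acts on claims of that class. A rough Python sketch of that hand-off, assuming a claim with an empty class only binds statically (no default StorageClass); StorageClass and responsible_provisioner are illustrative names, not a real client API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class StorageClass:
    name: str
    provisioner: str
    parameters: Dict[str, str] = field(default_factory=dict)


def responsible_provisioner(claim_class: str, classes: Dict[str, StorageClass],
                            running: set) -> Optional[str]:
    """Return the provisioner that should handle a claim, or None if nothing will.

    claim_class: the PVC's storageClassName ("" = no class, static binding only).
    running: names of provisioners actually deployed (their PROVISIONER_NAME).
    """
    if not claim_class:
        return None                 # static binding, no provisioner involved
    sc = classes.get(claim_class)
    if sc is None or sc.provisioner not in running:
        return None                 # the claim would stay Pending
    return sc.provisioner


if __name__ == "__main__":
    classes = {
        "managed-nfs-storage": StorageClass(
            "managed-nfs-storage", "fuseim.pri/ifs", {"archiveOnDelete": "false"}),
    }
    # PROVISIONER_NAME in nfs-deployment.yaml must equal the class's provisioner.
    print(responsible_provisioner("managed-nfs-storage", classes, {"fuseim.pri/ifs"}))
```

A mismatch between the two names is a common reason a dynamically provisioned PVC never leaves Pending.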

Define the StorageClass

```
[root@k8s01 yml]# cat storageclass-nfs.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs
parameters:
  archiveOnDelete: "false"
```

Define the NFS client provisioner plugin

```
[root@k8s01 yml]# cat nfs-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: lizhenliang/nfs-client-provisioner:latest
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: fuseim.pri/ifs
        - name: NFS_SERVER
          value: 192.168.10.41
        - name: NFS_PATH
          value: /data
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.10.41
          path: /data
```

Define the RBAC authorization

```
[root@k8s01 yml]# cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

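Kubernetes has no built-in dynamic provisioner for NFS, so a plugin (the nfs-client-provisioner above) has to be deployed. Change the NFS server address and export path in it to match your environment, and keep PROVISIONER_NAME identical to the provisioner field of the StorageClass (fuseim.pri/ifs here). The plugin talks to the API server, so it must be authorized, which is what rbac.yaml does. Everything is defined in the files above, so just create the objects and make sure the Pod starts without errors.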
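The ClusterRole in rbac.yaml works as a whitelist: a request is allowed only if some rule lists its API group, resource and verb. A simplified Python sketch of that check using the rules from the file, assuming no wildcards, resourceNames or subresources (real RBAC supports all of these); allowed is a hypothetical helper. It shows, for example, that the provisioner may create and delete PVs but may only update, never create, PVCs.

```python
from typing import Dict, List

# Rules copied from the nfs-client-provisioner-runner ClusterRole in rbac.yaml.
RULES: List[Dict[str, List[str]]] = [
    {"apiGroups": [""], "resources": ["persistentvolumes"],
     "verbs": ["get", "list", "watch", "create", "delete"]},
    {"apiGroups": [""], "resources": ["persistentvolumeclaims"],
     "verbs": ["get", "list", "watch", "update"]},
    {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["events"],
     "verbs": ["create", "update", "patch"]},
]


def allowed(api_group: str, resource: str, verb: str, rules=RULES) -> bool:
    """RBAC is additive: a single matching rule is enough to grant the request."""
    return any(
        api_group in r["apiGroups"] and resource in r["resources"] and verb in r["verbs"]
        for r in rules
    )


if __name__ == "__main__":
    print(allowed("", "persistentvolumes", "create"))       # provisioner creates PVs
    print(allowed("", "persistentvolumeclaims", "create"))  # not granted by any rule
```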

```
[root@k8s01 yml]# kubectl create -f storageclass-nfs.yaml
storageclass.storage.k8s.io/managed-nfs-storage created
[root@k8s01 yml]# kubectl get storageclasses.storage.k8s.io
NAME                  PROVISIONER      AGE
managed-nfs-storage   fuseim.pri/ifs   37s
[root@k8s01 yml]#
[root@k8s01 yml]# kubectl create -f rbac.yaml
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
[root@k8s01 yml]#
[root@k8s01 yml]# kubectl create -f nfs-deployment.yaml
deployment.apps/nfs-client-provisioner created
[root@k8s01 yml]#
[root@k8s01 yml]# kubectl get pods nfs-client-provisioner-c46c684fd-dz4vp
NAME                                     READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-c46c684fd-dz4vp   1/1     Running   0          48s
[root@k8s01 yml]#
```

Using dynamic provisioning

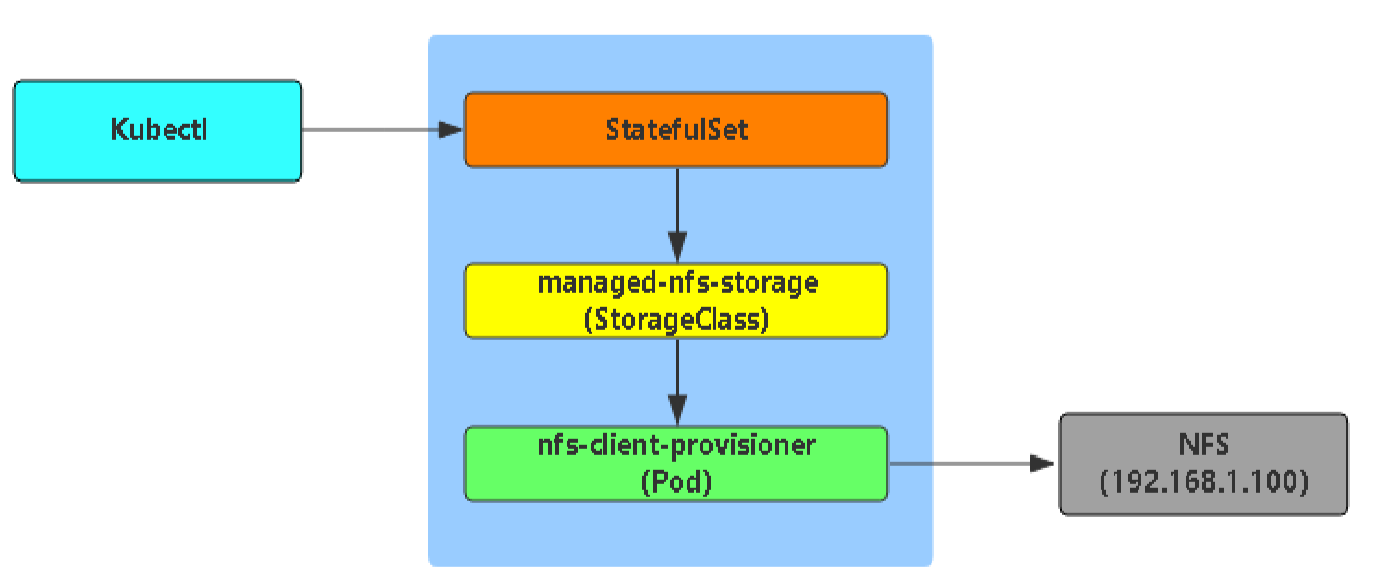

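When a workload's PVC requests the managed-nfs-storage class, the provisioner named by that class (nfs-client-provisioner) creates a directory on the NFS server and a matching PV, and the PVC is bound to that PV.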
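The outputs later in this article show what this flow produces: the dynamically created PV is named pvc-<claim UID> (pvc-43bff7ce-bbdf-483a-8359-f03419d196ff), and on the NFS export it gets a directory named <namespace>-<pvc name>-<pv name>. A minimal Python sketch of the PV a provisioner would produce for a claim, assuming that naming scheme and the defaults seen in the kubectl output (reclaim policy Delete, class managed-nfs-storage); provision and on_claim_deleted are hypothetical functions, and the dict only mimics the shape of a PV manifest. The cleanup rule for archiveOnDelete is an assumption based on the upstream provisioner's README: with "false" the directory is removed, otherwise it is renamed with an archived- prefix.

```python
from typing import Dict, List, Tuple


def provision(namespace: str, pvc_name: str, claim_uid: str, request: str,
              access_modes: List[str], nfs_server: str, nfs_path: str,
              storage_class: str = "managed-nfs-storage") -> Dict:
    """Return a PV manifest (as a dict) for a claim, mimicking the observed naming."""
    pv_name = f"pvc-{claim_uid}"
    # Per-claim sub-directory created inside the NFS export.
    directory = f"{namespace}-{pvc_name}-{pv_name}"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": request},           # PV size = requested size
            "accessModes": access_modes,
            "persistentVolumeReclaimPolicy": "Delete",  # StorageClass default
            "storageClassName": storage_class,
            "nfs": {"server": nfs_server,
                    "path": f"{nfs_path.rstrip('/')}/{directory}"},
        },
    }


def on_claim_deleted(directory: str, archive_on_delete: bool) -> Tuple[str, str]:
    """Assumed cleanup behavior: remove the directory, or rename it to archived-<dir>."""
    if archive_on_delete:
        return ("rename", f"archived-{directory}")
    return ("remove", directory)


if __name__ == "__main__":
    pv = provision("default", "mysql-pvc", "43bff7ce-bbdf-483a-8359-f03419d196ff",
                   "5Gi", ["ReadWriteMany"], "192.168.10.41", "/data")
    print(pv["metadata"]["name"])     # PV name shown by kubectl get pv
    print(pv["spec"]["nfs"]["path"])  # directory listed on nfs01
```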

Deploying MySQL

Next, deploy MySQL. It needs persistent storage and a stable, unique network identity, so we write a StatefulSet together with a headless Service.
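The stable network identity comes from the headless Service (clusterIP: None): each StatefulSet Pod is named <statefulset>-<ordinal> and gets the DNS name <pod>.<service>.<namespace>.svc.<cluster domain>, which is why the client below connects to mysql-db-0.mysql. A small Python sketch of that naming, assuming the common cluster domain cluster.local (it is configurable per cluster); pod_dns_names is a hypothetical helper.

```python
from typing import List


def pod_dns_names(statefulset: str, service: str, replicas: int,
                  namespace: str = "default",
                  cluster_domain: str = "cluster.local") -> List[str]:
    """Fully qualified DNS names for StatefulSet Pods behind a headless Service."""
    # Ordinals start at 0, so mysql-db with one replica yields mysql-db-0.
    return [
        f"{statefulset}-{i}.{service}.{namespace}.svc.{cluster_domain}"
        for i in range(replicas)
    ]


if __name__ == "__main__":
    # The StatefulSet in mysql-demo.yaml sets no replicas, so it defaults to 1.
    for name in pod_dns_names("mysql-db", "mysql", replicas=1):
        print(name)
```

From a Pod in the same namespace, the DNS search path also lets the short form mysql-db-0.mysql resolve.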

```
[root@k8s01 yml]# cat mysql-demo.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
    name: mysql
  clusterIP: None
  selector:
    app: mysql-public
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-db
spec:
  selector:
    matchLabels:
      app: mysql-public
  serviceName: "mysql"
  template:
    metadata:
      labels:
        app: mysql-public
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        ports:
        - containerPort: 3306
        volumeMounts:
        - mountPath: "/var/lib/mysql"
          name: mysql-data
      volumes:
      - name: mysql-data
        persistentVolumeClaim:
          claimName: mysql-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 5Gi
```

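The volume's data source is a PVC satisfied by dynamic provisioning. The relevant parts are the volume reference and the PVC below. (The StatefulSet sets no replicas, so it runs a single Pod and one shared PVC is sufficient; with several replicas, volumeClaimTemplates is the usual way to give each Pod its own PVC.)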

```
volumes:
- name: mysql-data
  persistentVolumeClaim:
    claimName: mysql-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 5Gi
```

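The storage class used is managed-nfs-storage, the one defined above. The volume is referenced much as before; the difference is that the PVC names a storage class, and the provisioner behind that class creates the PV. That provisioner is this Pod: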

```
[root@k8s01 yml]# kubectl get pod nfs-client-provisioner-c46c684fd-dz4vp
NAME                                     READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-c46c684fd-dz4vp   1/1     Running   0          36m
[root@k8s01 yml]#
```

Now the MySQL service can be created.

```
[root@k8s01 yml]# kubectl create -f mysql-demo.yaml
service/mysql created
statefulset.apps/mysql-db created
persistentvolumeclaim/mysql-pvc created
[root@k8s01 yml]# kubectl get pods mysql-db-0
NAME         READY   STATUS    RESTARTS   AGE
mysql-db-0   1/1     Running   0          33s
[root@k8s01 yml]# kubectl get pv,pvc
NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS          REASON   AGE
persistentvolume/nginx-pv                                   5Gi        RWX            Retain           Bound    default/nginx-pvc                                  114m
persistentvolume/pvc-43bff7ce-bbdf-483a-8359-f03419d196ff   5Gi        RWX            Delete           Bound    default/mysql-pvc    managed-nfs-storage            54s

NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
persistentvolumeclaim/mysql-pvc   Bound    pvc-43bff7ce-bbdf-483a-8359-f03419d196ff   5Gi        RWX            managed-nfs-storage   54s
persistentvolumeclaim/nginx-pvc   Bound    nginx-pv                                   5Gi        RWX                                  105m
[root@k8s01 yml]#
```

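That is the result: the PVC asked for the managed-nfs-storage class, and a PV named pvc-43bff7ce-bbdf-483a-8359-f03419d196ff was created and bound to it automatically, with reclaim policy Delete. Now check that MySQL is usable. It has to be reached by its DNS name, so start a client container to try it. The first command below binds the user kubernetes (presumably the identity this cluster's API server uses when calling kubelets) to the system:kubelet-api-admin role, so that the interactive session opened by kubectl run -it is not rejected.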

```
[root@k8s01 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes
clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver:kubelet-apis created
[root@k8s01 ~]# kubectl run -it --image=mysql:5.7 mysql-client --restart=Never --rm /bin/bash
If you don't see a command prompt, try pressing enter.
root@mysql-client:/# mysql -uroot -p123456 -hmysql-db-0.mysql
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 3
Server version: 5.7.30 MySQL Community Server (GPL)

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| sys                |
+--------------------+
4 rows in set (0.00 sec)

mysql>
```

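Now look at what ended up on the NFS server. The provisioner created a sub-directory named after the namespace, the PVC and the PV, and MySQL's data files are inside it: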

```
[root@nfs01 data]# ll default-mysql-pvc-pvc-43bff7ce-bbdf-483a-8359-f03419d196ff/
total 188484
-rw-r----- 1 polkitd ssh_keys       56 Jun  7 13:47 auto.cnf
-rw------- 1 polkitd ssh_keys     1680 Jun  7 13:47 ca-key.pem
-rw-r--r-- 1 polkitd ssh_keys     1112 Jun  7 13:47 ca.pem
-rw-r--r-- 1 polkitd ssh_keys     1112 Jun  7 13:47 client-cert.pem
-rw------- 1 polkitd ssh_keys     1676 Jun  7 13:47 client-key.pem
-rw-r----- 1 polkitd ssh_keys     1343 Jun  7 13:47 ib_buffer_pool
-rw-r----- 1 polkitd ssh_keys 79691776 Jun  7 13:47 ibdata1
-rw-r----- 1 polkitd ssh_keys 50331648 Jun  7 13:47 ib_logfile0
-rw-r----- 1 polkitd ssh_keys 50331648 Jun  7 13:47 ib_logfile1
-rw-r----- 1 polkitd ssh_keys 12582912 Jun  7 13:48 ibtmp1
drwxr-x--- 2 polkitd ssh_keys     4096 Jun  7 13:47 mysql
drwxr-x--- 2 polkitd ssh_keys     8192 Jun  7 13:47 performance_schema
-rw------- 1 polkitd ssh_keys     1676 Jun  7 13:47 private_key.pem
-rw-r--r-- 1 polkitd ssh_keys      452 Jun  7 13:47 public_key.pem
-rw-r--r-- 1 polkitd ssh_keys     1112 Jun  7 13:47 server-cert.pem
-rw------- 1 polkitd ssh_keys     1680 Jun  7 13:47 server-key.pem
drwxr-x--- 2 polkitd ssh_keys     8192 Jun  7 13:47 sys
[root@nfs01 data]#
```