Docker Networking

Understanding docker0

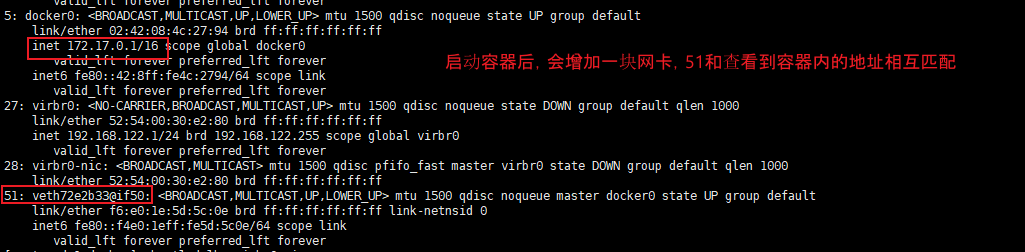

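After installing Docker, running ifconfig shows a new network interface, docker0.

Question: how does Docker handle network access for containers?

# Run a tomcat container directly, pulling the latest image from the official Docker registry and publishing it on host port 8082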

[root@node1 docker]# docker run --name tomcat01 -p 8082:8080 -d tomcat:latest

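# Check the addresses inside the container with ip addr: on startup the container gets an eth0@if51 interface whose IP address is allocated from the docker0 subnet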

[root@node1 docker]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

50: eth0@if51: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe11:2/64 scope link

valid_lft forever preferred_lft forever

# The host can reach this address

[root@node1 docker]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.206 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.130 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.122 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.193 ms

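How it works

1. Every time a container starts, Docker assigns it an IP address.

As long as Docker is installed, the host has a docker0 interface. Containers use bridge mode by default, and the underlying technology is the veth pair.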

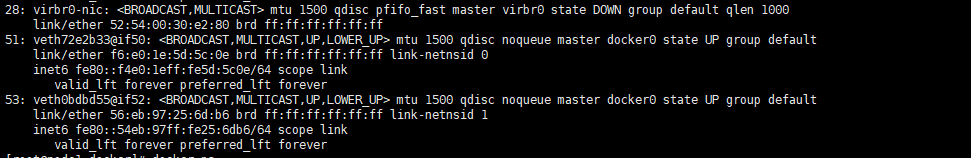

2. Start another container to verify

[root@node1 docker]# docker run --name tomcat02 -p 8083:8080 -d tomcat

6b528e53c64aaa747cf97107141522770267801a4c873a1cbe42a87ef5682b24

[root@node1 docker]# docker exec -it tomcat02 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

52: eth0@if53: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe11:3/64 scope link

valid_lft forever preferred_lft forever

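# A new interface pair appears here: 52: eth0@if53

3. Findings:

The interfaces created when a container starts always come in pairs.

A veth pair is a pair of virtual device interfaces: one end attaches to the protocol stack, and the two ends are connected to each other.

A veth pair acts as a bridge that connects virtual network devices.

4. Test whether tomcat01 can ping tomcat02.

[root@node1 docker]# docker exec -it tomcat01 ping 172.17.0.3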

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.059 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.106 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.086 ms

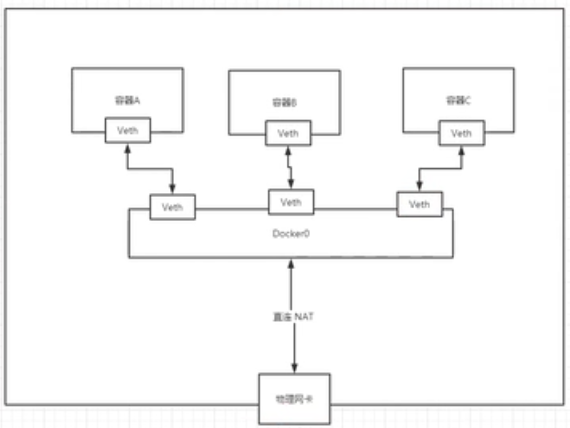

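The ping succeeds. Here docker0 plays a role similar to a router (more precisely, it is a Linux bridge that also serves as the containers' gateway), so containers on the same host can communicate with each other.

tomcat01 and tomcat02 share docker0.

Unless a network is specified, every container is attached to docker0, and Docker assigns it a free IP address from the default range.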
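The addressing seen so far can be checked with a small Python sketch. It is not part of the original walkthrough: the function names are hypothetical, and the subnet 172.17.0.0/16 is simply read off the `inet 172.17.0.2/16` line in the output above. The MAC decoding reflects a pattern visible in this particular output (`02:42:ac:11:00:02` next to `172.17.0.2`) and should not be taken as a guarantee for every Docker version.

```python
import ipaddress

# Assumption: the default bridge subnet, as shown by "inet 172.17.0.2/16" above.
DOCKER0_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def on_docker0(*addresses):
    """Return True if every address belongs to the docker0 subnet."""
    return all(ipaddress.ip_address(a) in DOCKER0_SUBNET for a in addresses)


def mac_tail_to_ip(mac):
    """Decode the last four octets of a MAC address as a dotted IPv4 address."""
    octets = mac.split(":")[-4:]
    return ".".join(str(int(octet, 16)) for octet in octets)


if __name__ == "__main__":
    # tomcat01 and tomcat02 sit in the same subnet, so they reach each other via the bridge.
    print(on_docker0("172.17.0.2", "172.17.0.3"))
    # In the output above, the MAC suffix mirrors the container's IP address.
    print(mac_tail_to_ip("02:42:ac:11:00:02"))
```

Two containers whose addresses pass `on_docker0` are on the same layer-2 segment, which is consistent with the successful ping between 172.17.0.2 and 172.17.0.3.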

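Summary

Docker uses Linux bridging; on the host, docker0 is the bridge that the containers attach to.

All network interfaces in Docker are virtual.

When a container is removed, its veth pair is removed with it (the docker0 bridge itself remains).

Testing the network after removing a container

With tomcat01 and tomcat02 running, both veth pairs are present.

Remove tomcat02, then check the interfaces again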

[root@node1 docker]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6b528e53c64a tomcat "catalina.sh run" 35 minutes ago Up 35 minutes 0.0.0.0:8083->8080/tcp tomcat02

88e4de225cea tomcat:latest "catalina.sh run" 58 minutes ago Up 58 minutes 0.0.0.0:8082->8080/tcp tomcat01

[root@node1 docker]# docker rm -f 6b528e53c64a

6b528e53c64a

[root@node1 docker]# ip addr

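Linking Docker containers

--link

After a container is restarted it may be assigned a different IP address, so if services are configured to talk to each other by IP, communication can break after a restart.

Start tomcat02 again and try to ping tomcat02 by name from tomcat01

# Start tomcat02 again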

[root@node1 docker]# docker run --name tomcat02 -p 8083:8080 -d tomcat

[root@node1 docker]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7f0115d955d7 tomcat "catalina.sh run" 8 hours ago Up 8 hours 0.0.0.0:8083->8080/tcp tomcat02

88e4de225cea tomcat:latest "catalina.sh run" 9 hours ago Up 9 hours 0.0.0.0:8082->8080/tcp tomcat01

# Pinging tomcat02 by name from tomcat01 fails. How can this be solved?

[root@node1 docker]# docker exec -it tomcat01 ping tomcat02

ping: tomcat02: Temporary failure in name resolution

# Start tomcat03 and link it to tomcat02 with --link

[root@node1 docker]# docker run --name tomcat03 -p 8084:8080 --link tomcat02 -d tomcat

82deda8e1be4c760ad4270a0db77d96ab7d6b0226c80504e62530a1a3f2ee910

# Pinging tomcat02 from tomcat03 now succeeds

[root@node1 docker]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=1.07 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.147 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.122 ms

# Can tomcat02 ping tomcat03, i.e. does the reverse direction work? A direct test fails, because tomcat02 has no entry for tomcat03

[root@node1 docker]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Temporary failure in name resolution

Examining the network configuration:

# List the existing networks

[root@node1 docker]# docker network ls

NETWORK ID NAME DRIVER SCOPE

9b937167a279 bin_default bridge local

6aae8ccbba9d bridge bridge local

e118e3656a14 docker_default bridge local

98096ab0c7e7 harbor_harbor bridge local

201680a6b52b host host local

6da132137731 none null local

# Inspect the docker0 network (listed as "bridge"); its ID is 6aae8ccbba9d

[root@node1 docker]# docker inspect 6aae8ccbba9d

# Inspect the configuration of tomcat03

[root@node1 docker]# docker inspect 82deda8e1be4

# Look at the hosts file of tomcat03 directly

[root@node1 docker]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 7f0115d955d7

172.17.0.4 82deda8e1be4

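# The address of tomcat02 is already present here, so the name tomcat02 is resolved locally

# tomcat02, by contrast, has no such entry, which is why tomcat03 can ping tomcat02 but not the other way round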

[root@node1 docker]# docker exec -it tomcat02 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 7f0115d955d7

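Conclusion:

--link tomcat02 simply adds a mapping for tomcat02 to the hosts file of tomcat03: 172.17.0.3 tomcat02 7f0115d955d7

However, Docker no longer recommends using --link.

Use a custom network instead; docker0 is not suited to this.

The problem with docker0: it does not support access by container name.

Characteristics of docker0: container names cannot be resolved by default, and --link is needed to connect containers by name.

A custom network is recommended.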
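The one-directional behaviour of --link follows directly from the two hosts files shown above. The Python sketch below is only an illustration (the `parse_hosts` helper is hypothetical and not part of Docker); it parses abbreviated copies of both files and looks up the names the way a hosts-file resolver would.

```python
def parse_hosts(text):
    """Map each host name in hosts-file text to the first IP address listed for it."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


# Abbreviated from the `cat /etc/hosts` output of tomcat03 above.
TOMCAT03_HOSTS = """
127.0.0.1 localhost
172.17.0.3 tomcat02 7f0115d955d7
172.17.0.4 82deda8e1be4
"""

# Abbreviated from the `cat /etc/hosts` output of tomcat02 above.
TOMCAT02_HOSTS = """
127.0.0.1 localhost
172.17.0.3 7f0115d955d7
"""

if __name__ == "__main__":
    print(parse_hosts(TOMCAT03_HOSTS).get("tomcat02"))  # entry written by --link
    print(parse_hosts(TOMCAT02_HOSTS).get("tomcat03"))  # no entry in the reverse direction
```

Only the container started with --link receives the extra entry, so name resolution works in one direction only.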

Custom networks

List all Docker networks

[root@node1 docker]# docker network ls

NETWORK ID NAME DRIVER SCOPE

9b937167a279 bin_default bridge local

6aae8ccbba9d bridge bridge local

e118e3656a14 docker_default bridge local

98096ab0c7e7 harbor_harbor bridge local

201680a6b52b host host local

6da132137731 none null local

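Network modes:

bridge: bridged networking (the default)

none: no networking configured

host: share the host's network stack

container: share another container's network stack (rarely used, quite limited)

Test:

# A container started this way implicitly uses --net bridge, which is docker0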

docker run --name tomcat03 -p 8084:8080 --link tomcat02 -d tomcat

# It is equivalent to the command below

docker run --name tomcat03 --net bridge -p 8084:8080 --link tomcat02 -d tomcat

# First remove all containers so that the test environment is clean

[root@node1 docker]# docker rm -f $(docker ps -aq)

# View the help for the network command

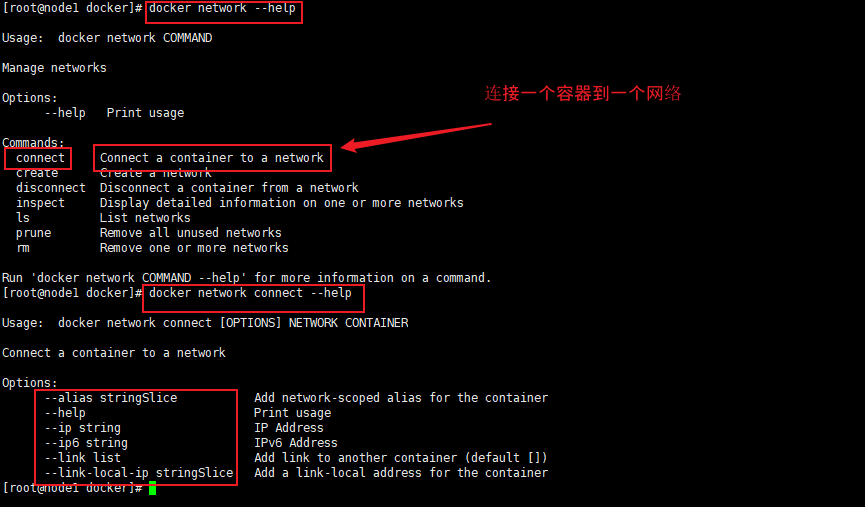

[root@node1 docker]# docker network --help

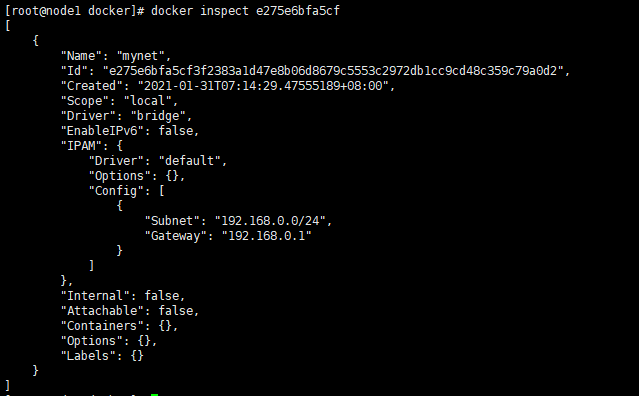

# Create a custom network

# --driver bridge

# --subnet 192.168.0.0/24 (256 addresses, 254 of them usable host addresses)

# --gateway 192.168.0.1

[root@node1 docker]# docker network create --driver bridge --subnet 192.168.0.0/24 --gateway 192.168.0.1 mynet

e275e6bfa5cf3f2383a1d47e8b06d8679c5553c2972db1cc9cd48c359c79a0d2
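The capacity of the subnet passed to `docker network create` can be worked out with Python's standard `ipaddress` module. This is a minimal sketch with a hypothetical helper name. It only does the address arithmetic and makes no claim about the order in which Docker hands out addresses.

```python
import ipaddress


def describe_subnet(cidr, gateway):
    """Summarise how many addresses a subnet offers once the gateway is taken."""
    network = ipaddress.ip_network(cidr)
    hosts = list(network.hosts())  # excludes the network and broadcast addresses
    gateway_ip = ipaddress.ip_address(gateway)
    if gateway_ip not in hosts:
        raise ValueError(f"{gateway} is not a usable host address in {cidr}")
    return {
        "total_addresses": network.num_addresses,
        "usable_hosts": len(hosts),
        "left_for_containers": len(hosts) - 1,  # the gateway occupies one address
        "first_free_ip": str(next(h for h in hosts if h != gateway_ip)),
    }


if __name__ == "__main__":
    # Values taken from the `docker network create` command above.
    print(describe_subnet("192.168.0.0/24", "192.168.0.1"))
```

For 192.168.0.0/24 with gateway 192.168.0.1 this leaves 253 addresses for containers, starting at 192.168.0.2.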

Attach containers to the custom network, then inspect mynet

# Run two containers, tomcat-net-01 and tomcat-net-02, on the mynet network

[root@node1 docker]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

761791ee09f3e143c0f2187c97b2354ae99d14e2542a9a6b41c08881502a9243

[root@node1 docker]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

9572ef21c2a85120f6045a8d79ed41230cc828720ebebe198e773e08f751301f

[root@node1 docker]# docker inspect e275e6bfa5cf

[

{

"Name": "mynet",

"Id": "e275e6bfa5cf3f2383a1d47e8b06d8679c5553c2972db1cc9cd48c359c79a0d2",

"Created": "2021-01-31T07:14:29.47555189+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/24",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Containers": {

"761791ee09f3e143c0f2187c97b2354ae99d14e2542a9a6b41c08881502a9243": {

"Name": "tomcat-net-01",

"EndpointID": "86b876f36638d1645ebe2d8361791909fb0f43455388c1cf0329f44843eac2b0",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/24",

"IPv6Address": ""

},

"9572ef21c2a85120f6045a8d79ed41230cc828720ebebe198e773e08f751301f": {

"Name": "tomcat-net-02",

"EndpointID": "6b80369a3166cfa66c88937a40ddb7741121dc43f26d8a77e97d564dd7c19ede",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/24",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

# Test ping by IP address

[root@node1 docker]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.150 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.064 ms

# Test ping by container name: it works without --link

[root@node1 docker]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.056 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.355 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.115 ms

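On a custom network Docker maintains the name-to-address mapping itself, so custom networks are the recommended approach.

Benefits:

redis: different clusters use different networks, which keeps each cluster healthy and isolated

mysql: different clusters use different networks, which keeps each cluster healthy and isolated

Connecting networks

View the help for the network command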

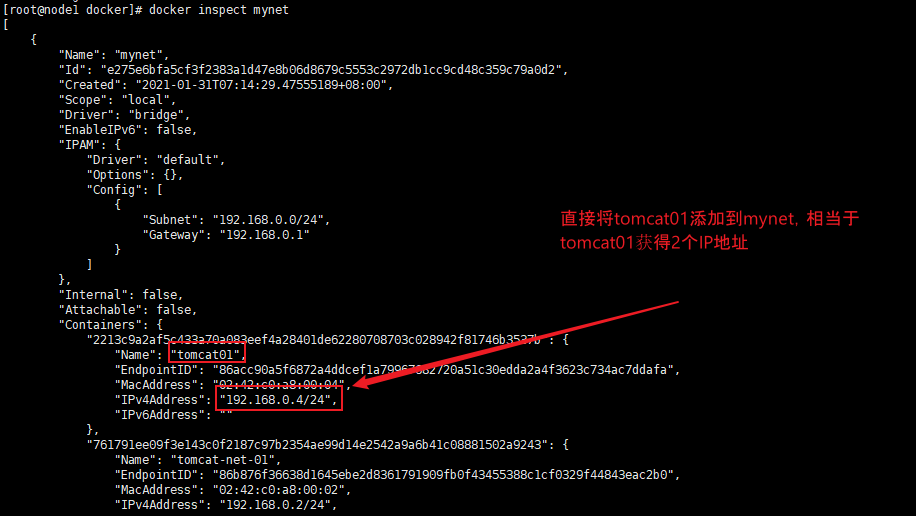

Start two more containers, tomcat01 and tomcat02, on the default network, then add them to the mynet network

# Start two containers, tomcat01 and tomcat02, on the default network

[root@node1 docker]# docker run -d -P --name tomcat01 tomcat

670139405f504601e446dae843288b2ddae1cb4cf6b68b19825ee33917a92f73

[root@node1 docker]# docker run -d -P --name tomcat02 tomcat

784c986a8e988270d709e409ca84284281453c4bd4d849589bbacbdda435881a

# From tomcat01, pinging tomcat-net-01 by name and pinging 192.168.0.2 both fail

[root@node1 docker]# docker exec -it tomcat01 ping tomcat-net-01

ping: tomcat-net-01: Temporary failure in name resolution

[root@node1 docker]# docker exec -it tomcat01 ping 192.168.0.2

PING 192.168.0.2 (192.168.0.2) 56(84) bytes of data.

# Connect tomcat01 to the mynet network

[root@node1 docker]# docker network connect mynet tomcat01

# Ping again: the connection succeeds

[root@node1 docker]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.285 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.152 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.144 ms

# Inspect mynet

[root@node1 docker]# docker inspect mynet

# After connecting, tomcat01 has been placed on the mynet network as well; tomcat02 can be connected in the same way

# tomcat01 is connected

[root@node1 docker]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.196 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.115 ms

# tomcat02 still cannot connect

[root@node1 docker]# docker exec -it tomcat02 ping tomcat-net-01

ping: tomcat-net-01: Temporary failure in name resolution

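Conclusion: to reach containers across networks, connect the container to the other network with docker network connect.

Hands-on: deploying a Redis cluster

# First create the redis network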

[root@node1 docker]# docker network create --subnet 172.38.0.0/16 redis

3c72a2b7dd46f17816f590b4d159b4c75ec4979c0fac8551b8b8a9868c7365db

[root@node1 docker]# docker network ls

NETWORK ID NAME DRIVER SCOPE

9b937167a279 bin_default bridge local

6aae8ccbba9d bridge bridge local

e118e3656a14 docker_default bridge local

98096ab0c7e7 harbor_harbor bridge local

201680a6b52b host host local

e275e6bfa5cf mynet bridge local

6da132137731 none null local

3c72a2b7dd46 redis bridge local

# Create the configuration files for the six Redis nodes

[root@node1 docker]# for port in $(seq 1 6);

> do

> mkdir -p /mydata/redis/node-${port}/conf

> touch /mydata/redis/node-${port}/conf/redis.conf

> cat << EOF > /mydata/redis/node-${port}/conf/redis.conf

> port 6379

> cluster-enabled yes

> cluster-config-file nodes.conf

> cluster-node-timeout 5000

> cluster-announce-ip 172.38.0.1${port}

> cluster-announce-port 6379

> cluster-announce-bus-port 16379

> appendonly yes

> EOF

> done

Script that creates the Redis configuration files

for port in $(seq 1 6);

do

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF > /mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done

Script that starts the Redis containers in bulk

for port in $(seq 1 6);

do

docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \
-v /mydata/redis/node-${port}/data:/data \
-v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.1${port} redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf;

done
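The naming and port scheme used by the two scripts above can be summarised in a short Python sketch. The `node_plan` helper is hypothetical and purely illustrative. It restates the values from the scripts (IP 172.38.0.1N, host ports 637N and 1637N) and relies on the fact that the Redis cluster bus port defaults to the client port plus 10000, which is why 16379 appears next to 6379.

```python
CLIENT_PORT = 6379                 # port inside every container (from redis.conf above)
BUS_PORT = CLIENT_PORT + 10000     # cluster bus port, 16379


def node_plan(n):
    """Return the addressing plan for Redis node n (1 to 6), mirroring the scripts above."""
    if not 1 <= n <= 6:
        raise ValueError("this walkthrough only defines nodes 1 to 6")
    return {
        "name": f"redis-{n}",
        "ip": f"172.38.0.1{n}",
        "host_port": int(f"637{n}"),
        "host_bus_port": int(f"1637{n}"),
        "container_port": CLIENT_PORT,
        "container_bus_port": BUS_PORT,
        "config": f"/mydata/redis/node-{n}/conf/redis.conf",
    }


if __name__ == "__main__":
    for n in range(1, 7):
        print(node_plan(n))
```

Every node listens on the same ports inside its container; only the container IP and the published host ports differ from node to node.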

Then start the Redis containers; six of them are needed

# Start the first Redis container with a direct command

[root@node1 docker]# docker run -p 6371:6379 -p 16371:16379 --name redis-1 \
> -v /mydata/redis/node-1/data:/data \
> -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \
> -d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

c8d26544ad5d22401beb82f2f4fc0a2d9a6a4ebd4d3410625c998cc701cab028

# Started successfully; check the status

[root@node1 docker]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c8d26544ad5d redis:5.0.9-alpine3.11 "docker-entrypoint..." 5 seconds ago Up 4 seconds 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1

# Start containers two through six with a script

[root@node1 docker]# for port in $(seq 2 6);

> do

> docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \
> -v /mydata/redis/node-${port}/data:/data \
> -v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \
> -d --net redis --ip 172.38.0.1${port} redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf;

> done

b9818abea6c4663f8e8f3573b56dd2207d0e91d887096237ec8692f4b7de811c

24ef6937a686354495eea557bc82642b75a98823e7b9f1301864ac1cdb50c442

20ff7556686b1a2d78339cca23b3af52f2db0357386e9363525b30a8c01d5c63

e0d00235f2c5bb35e615f0a8c645cd410f691f0435518704f6550653faa23b2f

9ffb0b18abcfa81f0ac342175e8b973bc2cb38e69ce73b4a425aa386cddcd46a

# Check the status: all containers started successfully

[root@node1 docker]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9ffb0b18abcf redis:5.0.9-alpine3.11 "docker-entrypoint..." 2 seconds ago Up 2 seconds 0.0.0.0:6376->6379/tcp, 0.0.0.0:16376->16379/tcp redis-6

e0d00235f2c5 redis:5.0.9-alpine3.11 "docker-entrypoint..." 3 seconds ago Up 2 seconds 0.0.0.0:6375->6379/tcp, 0.0.0.0:16375->16379/tcp redis-5

20ff7556686b redis:5.0.9-alpine3.11 "docker-entrypoint..." 3 seconds ago Up 2 seconds 0.0.0.0:6374->6379/tcp, 0.0.0.0:16374->16379/tcp redis-4

24ef6937a686 redis:5.0.9-alpine3.11 "docker-entrypoint..." 3 seconds ago Up 3 seconds 0.0.0.0:6373->6379/tcp, 0.0.0.0:16373->16379/tcp redis-3

b9818abea6c4 redis:5.0.9-alpine3.11 "docker-entrypoint..." 4 seconds ago Up 3 seconds 0.0.0.0:6372->6379/tcp, 0.0.0.0:16372->16379/tcp redis-2

c8d26544ad5d redis:5.0.9-alpine3.11 "docker-entrypoint..." 38 seconds ago Up 37 seconds 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1

# Enter any of the Redis containers; note that the shell in this image is /bin/sh, not bash

[root@node1 docker]# docker exec -it redis-1 /bin/sh

# The default directory is /data

/data # ls

appendonly.aof nodes.conf

# Create the Redis cluster

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: 7e593624e546539f83c844a5d0014fc5687aa6c4 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: 586370b79f1be2968d847f5947819e632da66aa1 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 32026a285df860992a1a7dac4e9e1d60f253decd 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 21ea6b6ac1b6e238bebad3ac54ac72a0274ecae0 172.38.0.14:6379

replicates 32026a285df860992a1a7dac4e9e1d60f253decd

S: 383e7f0fd8aab86bd45740de3a1a2ad5681eb591 172.38.0.15:6379

replicates 7e593624e546539f83c844a5d0014fc5687aa6c4

S: d37dd99e5ddb19d9961ce1d613a93adbf00e25d0 172.38.0.16:6379

replicates 586370b79f1be2968d847f5947819e632da66aa1

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: 7e593624e546539f83c844a5d0014fc5687aa6c4 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 383e7f0fd8aab86bd45740de3a1a2ad5681eb591 172.38.0.15:6379

slots: (0 slots) slave

replicates 7e593624e546539f83c844a5d0014fc5687aa6c4

S: d37dd99e5ddb19d9961ce1d613a93adbf00e25d0 172.38.0.16:6379

slots: (0 slots) slave

replicates 586370b79f1be2968d847f5947819e632da66aa1

S: 21ea6b6ac1b6e238bebad3ac54ac72a0274ecae0 172.38.0.14:6379

slots: (0 slots) slave

replicates 32026a285df860992a1a7dac4e9e1d60f253decd

M: 32026a285df860992a1a7dac4e9e1d60f253decd 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 586370b79f1be2968d847f5947819e632da66aa1 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# Connect to the Redis cluster and view the cluster info

/data # redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:236

cluster_stats_messages_pong_sent:233

cluster_stats_messages_sent:469

cluster_stats_messages_ping_received:228

cluster_stats_messages_pong_received:236

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:469

# View the cluster nodes

127.0.0.1:6379> cluster nodes

383e7f0fd8aab86bd45740de3a1a2ad5681eb591 172.38.0.15:6379@16379 slave 7e593624e546539f83c844a5d0014fc5687aa6c4 0 1612059041000 5 connected

d37dd99e5ddb19d9961ce1d613a93adbf00e25d0 172.38.0.16:6379@16379 slave 586370b79f1be2968d847f5947819e632da66aa1 0 1612059041568 6 connected

21ea6b6ac1b6e238bebad3ac54ac72a0274ecae0 172.38.0.14:6379@16379 slave 32026a285df860992a1a7dac4e9e1d60f253decd 0 1612059041266 4 connected

7e593624e546539f83c844a5d0014fc5687aa6c4 172.38.0.11:6379@16379 myself,master - 0 1612059041000 1 connected 0-5460

32026a285df860992a1a7dac4e9e1d60f253decd 172.38.0.13:6379@16379 master - 0 1612059040057 3 connected 10923-16383

586370b79f1be2968d847f5947819e632da66aa1 172.38.0.12:6379@16379 master - 0 1612059040763 2 connected 5461-10922

# Cluster availability test

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
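The redirect to slot 15495 can be reproduced by hand. Redis Cluster maps a key to a slot as CRC16(key) mod 16384, using the XMODEM variant of CRC16, and honours hash tags in braces. The Python sketch below is an independent re-implementation for illustration, not code taken from Redis. The function names are hypothetical, and the slot ranges are copied from the cluster creation output above.

```python
def crc16_xmodem(data):
    """CRC16 with polynomial 0x1021 and initial value 0 (the XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key):
    """Return the cluster hash slot (0 to 16383) for a key, honouring {hash tags}."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty tag replaces the hashed part
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Slot ranges of the three masters, from the `redis-cli --cluster create` output above.
MASTERS = [
    (0, 5460, "172.38.0.11"),
    (5461, 10922, "172.38.0.12"),
    (10923, 16383, "172.38.0.13"),
]


def owner(key):
    """Return the IP of the master that owned the key's slot when the cluster was created."""
    slot = key_slot(key)
    return next(ip for low, high, ip in MASTERS if low <= slot <= high)


if __name__ == "__main__":
    print(key_slot("a"), owner("a"))
```

The key `a` hashes to slot 15495, which lies in the range 10923-16383 owned by 172.38.0.13, matching the redirect printed by redis-cli.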

# The data is stored on node 172.38.0.13

# get returns the data correctly

172.38.0.13:6379> get a

"b"

# Stop node 172.38.0.13 to verify the cluster's high availability

[root@node1 docker]# docker stop redis-3

redis-3

# Reconnect to the cluster and test again

/data # redis-cli -c

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

# The data is now served by node 172.38.0.14; cluster nodes shows 172.38.0.13 as failed and 172.38.0.14 promoted to master

172.38.0.14:6379> cluster nodes

21ea6b6ac1b6e238bebad3ac54ac72a0274ecae0 172.38.0.14:6379@16379 myself,master - 0 1612059556000 7 connected 10923-16383

383e7f0fd8aab86bd45740de3a1a2ad5681eb591 172.38.0.15:6379@16379 slave 7e593624e546539f83c844a5d0014fc5687aa6c4 0 1612059555890 5 connected

32026a285df860992a1a7dac4e9e1d60f253decd 172.38.0.13:6379@16379 master,fail - 1612059496236 1612059494000 3 connected

7e593624e546539f83c844a5d0014fc5687aa6c4 172.38.0.11:6379@16379 master - 0 1612059555000 1 connected 0-5460

586370b79f1be2968d847f5947819e632da66aa1 172.38.0.12:6379@16379 master - 0 1612059555000 2 connected 5461-10922

d37dd99e5ddb19d9961ce1d613a93adbf00e25d0 172.38.0.16:6379@16379 slave 586370b79f1be2968d847f5947819e632da66aa1 0 1612059556594 6 connected

172.38.0.14:6379>

The Redis cluster setup is now complete.