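Prometheus officially offers several high-availability approaches:

- HA: two Prometheus instances scrape exactly the same data, with a load balancer in front of them.

- HA + remote storage: on top of the basic multi-replica Prometheus setup, data is also written to remote storage through Remote Write, which solves storage persistence.

- Federated cluster: Federation partitions by function. Different shards scrape different data, and a Global node stores it centrally, which addresses the scale of the monitoring data.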
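As a rough illustration of the second and third options, the two fragments below sketch what the relevant parts of `prometheus.yml` might look like. The remote storage URL, the shard addresses, and the `match[]` selector are placeholders chosen for this example, not values from the setup described here.

```yaml
# Sketch 1: HA + remote storage.
# Each Prometheus replica carries this block; the URL is a hypothetical endpoint.
remote_write:
  - url: "http://remote-storage.example.com/api/v1/write"
---
# Sketch 2: federation, placed in the Global node's prometheus.yml.
scrape_configs:
  - job_name: 'federate'
    honor_labels: true           # keep the labels already attached by the shards
    metrics_path: '/federate'    # federation endpoint exposed by every Prometheus
    params:
      'match[]':
        - '{job="node"}'         # hypothetical selector: which series to pull
    static_configs:
      - targets:                 # hypothetical shard addresses
          - 'shard-1.example.com:9090'
          - 'shard-2.example.com:9090'
```

The Global node only pulls the series matched by `match[]`, so a narrow selector keeps its data volume manageable.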

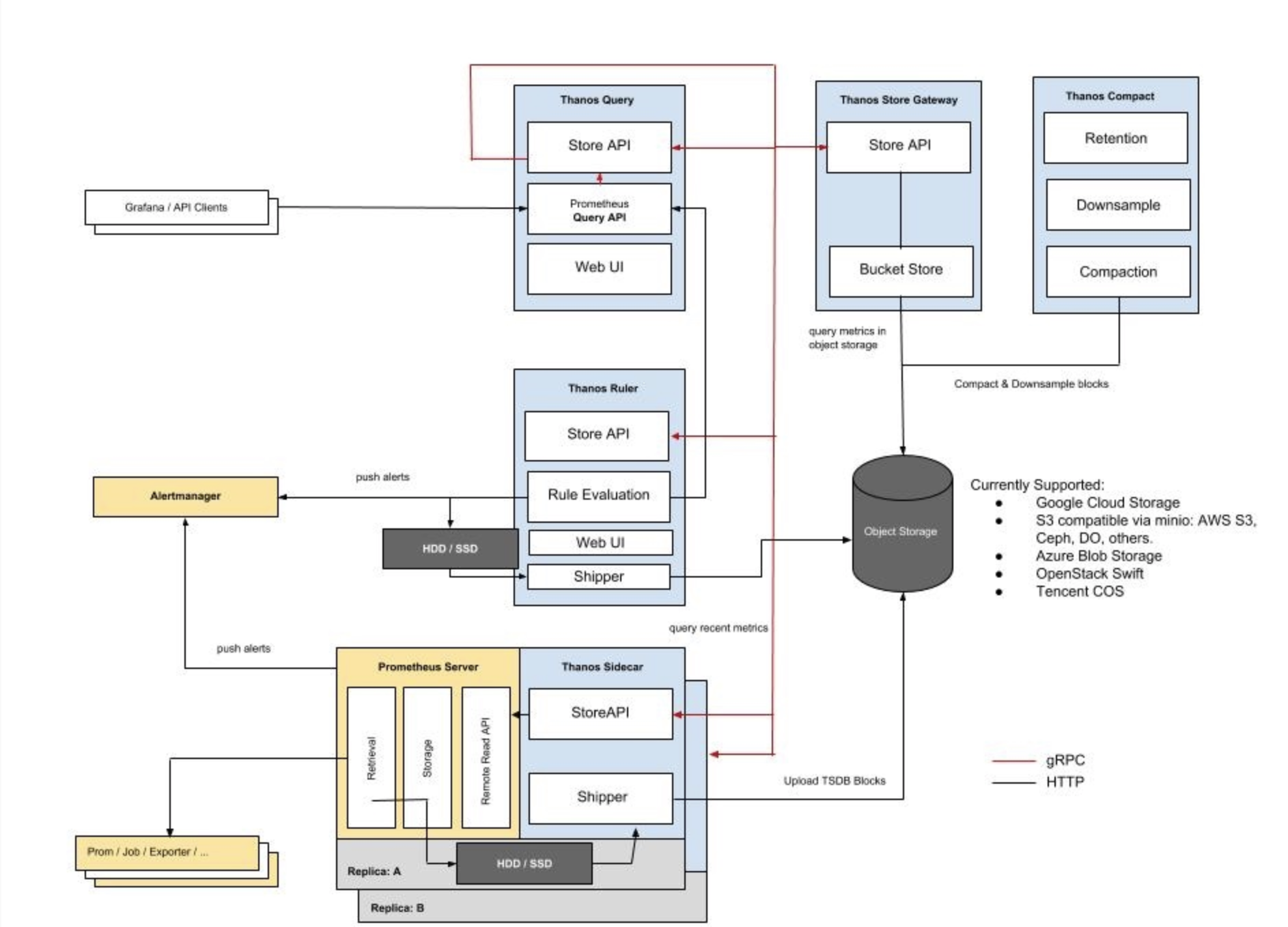

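Thanos runs in Sidecar mode by default; Receiver mode is the alternative. The components involved are:

- Thanos Query: merges the data gathered from the Prometheus Pods and exposes a query interface to clients.

- Thanos Sidecar: wraps the data of the Prometheus container and exposes it to Thanos Query.

- Prometheus container: scrapes the data and serves it to the Thanos Sidecar through the Remote Read API.

- Thanos Store Gateway: exposes the data held in object storage to Thanos Query.

- Thanos Compact: compacts and downsamples the data in object storage, which speeds up queries over long time ranges.

- Thanos Ruler: evaluates rules and fires alerts against the monitoring data. It can also compute new series, which are served to Thanos Query and/or uploaded to object storage for long-term retention.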
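To make the two roles of Thanos Ruler concrete, here is a minimal sketch of a rule file in the standard Prometheus rule format, which Thanos Ruler also reads. The group name, rule names, threshold, and label values are illustrative, and `node_cpu_seconds_total` assumes node_exporter metrics are being scraped.

```yaml
groups:
  - name: example-rules            # hypothetical group name
    rules:
      # Recording rule: computes a new series that can then be queried.
      - record: instance:node_cpu_utilisation:rate5m
        expr: 1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m]))

      # Alerting rule: fires once the condition has held for 5 minutes.
      - alert: InstanceDown
        expr: up == 0
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "Instance {{ $labels.instance }} is down"
```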

Thanos primary node:

    thanos compact --data-dir ./thanos/comp --http-address 0.0.0.0:19192 --objstore.config-file ./bucket_config.yaml
    thanos store --data-dir ./thanos/store --objstore.config-file ./bucket_config.yaml --http-address 0.0.0.0:19191 --grpc-address 0.0.0.0:19090
    thanos query --http-address 0.0.0.0:8080 --grpc-address 0.0.0.0:8081 --query.replica-label slave --store 172.16.10.11:10901 --store 172.16.10.10:10901 --store 127.0.0.1:19090
    thanos sidecar --tsdb.path /data/ --prometheus.url http://localhost:9090 --objstore.config-file ./bucket_config.yaml --shipper.upload-compacted

Thanos standby node:

    thanos sidecar --tsdb.path /data/ --prometheus.url http://localhost:9090 --objstore.config-file ./bucket_config.yaml --shipper.upload-compacted
    thanos query --http-address 0.0.0.0:8080 --grpc-address 0.0.0.0:8081 --query.replica-label slave --store 172.16.10.10:10901 --store 172.16.10.11:10901 --store 172.16.10.10:19090

Note: bucket_config.yaml holds the cloud object storage configuration.
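The contents of `bucket_config.yaml` are not shown here. As a minimal sketch, assuming an S3-compatible backend, the file might look like the following; the bucket name, endpoint, and credentials are placeholders, and other providers use a different `type` and their own `config` keys.

```yaml
# Hypothetical bucket_config.yaml for an S3-compatible object store.
type: S3
config:
  bucket: "thanos-metrics"        # placeholder bucket name
  endpoint: "s3.example.com"      # placeholder endpoint, without the scheme
  access_key: "REPLACE_ME"        # placeholder credential
  secret_key: "REPLACE_ME"        # placeholder credential
  insecure: false                 # set to true only for plain-HTTP endpoints
```

The sidecar, store gateway, and compactor all point at the same file, so they read and write the same bucket.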

Prometheus configuration:

    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).
      external_labels:
        slave: "02" # replica label; matches --query.replica-label in thanos query

    # Alertmanager configuration
    #alerting:
    #  alertmanagers:
    #  - static_configs:
    #    - targets: ['127.0.0.1:9093']
    alerting:
      alertmanagers:
      - scheme: http
        static_configs:
        - targets:
          - "172.16.10.10:9093"

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      - "/data/alert/etc/*.rule"
      # - "first_rules.yml"
      # - "second_rules.yml"

    # A scrape configuration containing exactly one endpoint to scrape:
    # Here it's Prometheus itself.
    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        static_configs:
        - targets: ['localhost:9090']

      - job_name: 'Node_Exporter'
        consul_sd_configs:
        - server: '172.16.10.10:8500'
        relabel_configs:
        - source_labels: ["__meta_consul_service_address"]
          regex: "(.*)"
          replacement: $1
          action: replace
          target_label: "address"
        - source_labels: ["__meta_consul_service"]
          regex: "(.*)"
          replacement: $1
          action: replace
          target_label: "hostname"
        - source_labels: ["__meta_consul_service_address"]
          regex: "10.2.*"
          action: drop
        - source_labels: ["__meta_consul_service_address"]
          regex: "10.3.*"
          action: drop
        - source_labels: ["__meta_consul_service_address"]
          regex: "10.7.*"
          action: drop
        # Drop targets tagged "测试环境" (test environment).
        - source_labels: ["__meta_consul_tags"]
          regex: ".*测试环境.*"
          action: drop
        # Drop targets tagged "安全组" (security group).
        - source_labels: ["__meta_consul_tags"]
          regex: ".*安全组.*"
          action: drop
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(.*),(.*),(.*),(.*),(.*),"
          replacement: $1
          action: replace
          target_label: "department"
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(.*),(.*),(.*),(.*),(.*),"
          replacement: $2
          action: replace
          target_label: "group"
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(prod|dev|pre|test),"
          replacement: $2
          action: replace
          target_label: "env"
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(.*),(.*),(.*),(.*),(.*),"
          replacement: $3
          action: replace
          target_label: "application"
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(.*),(.*),(.*),(.*),(.*),"
          replacement: $4
          action: replace
          target_label: "type"
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(.*),(.*),(.*),(.*),(.*),"
          replacement: $5
          action: replace
          target_label: "dc"
        - source_labels: ["__meta_consul_tags"]
          regex: ",(.*),(.*),(.*),(.*),(.*),(.*),"
          replacement: $6
          action: replace
          target_label: "appCode"
        - source_labels: ["__meta_consul_service_id"]
          regex: "(.*)"
          replacement: $1
          action: replace
          target_label: "id"

      - job_name: kubetake
        kubernetes_sd_configs:
        - role: node
          api_server: 'https://172.16.10.11:6443'
          tls_config:
            ca_file: /data/alert/etc/ca.crt
          bearer_token_file: /data/alert/etc/token
        relabel_configs:
        - action: labelmap
          regex: (.+)
        - target_label: __address__
          source_labels: [__meta_kubernetes_node_address_InternalIP]
          regex: (.+)
          replacement: ${1}:9100