The k-Nearest Neighbors (kNN) Algorithm

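We start with a set of sample data in which every item carries a label, so we know which class each sample belongs to. When new, unlabeled data comes in, each of its features is compared with the corresponding features of the samples, and the algorithm extracts the class labels of the most similar (nearest) samples. Only the k nearest samples are considered, and the class that occurs most often among them becomes the prediction.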

A kNN model is determined by three basic elements: the distance metric, the choice of k, and the classification decision rule.
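To make the first of these elements concrete, the sketch below compares two common distance metrics on one pair of points. The function names `euclidean` and `manhattan` are illustrative and are not part of the code in this article, which uses the Euclidean distance throughout.

```python
import numpy as np

def euclidean(a, b):
    """Straight-line (L2) distance between two points."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.sqrt(np.sum((a - b) ** 2)))

def manhattan(a, b):
    """City-block (L1) distance between two points."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.sum(np.abs(a - b)))

p, q = [0.0, 0.0], [3.0, 4.0]
print(euclidean(p, q))  # 5.0
print(manhattan(p, q))  # 7.0
```

The metric changes which samples count as "nearest", so the same data and the same k can give different predictions under different metrics.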

1. Importing data with Python

from numpy import *
import operator  # provides the functions needed for sorting

def createDataSet():
    group = array([[1.0, 1.1], [1.0, 1.1], [0, 0], [0, 0.1]])
    labels = ['A', 'A', 'B', 'B']
    return group, labels

group, labels = createDataSet()

group

array([[1. , 1.1],

[1. , 1.1],

[0. , 0. ],

[0. , 0.1]])

labels

['A', 'A', 'B', 'B']

2. Implementing the kNN algorithm

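The pseudocode is as follows:

- Compute the distance between every point in the labeled dataset and the current point
- Sort the points in order of increasing distance
- Take the k points closest to the current point
- Count how often each class occurs among those k points
- Return the most frequent class among the k points as the predicted class of the current point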

def classify0(inX, dataSet, labels, k):  # inX is the input vector to classify
    dataSetSize = dataSet.shape[0]  # shape[0] gives the number of samples
    diffMat = tile(inX, (dataSetSize, 1)) - dataSet
    sqDiffMat = diffMat**2
    sqDistances = sqDiffMat.sum(axis=1)
    distances = sqDistances**0.5  # Euclidean distance to every sample
    sortedDistIndicies = distances.argsort()  # indices that sort the distances in ascending order
    classCount = {}
    for i in range(k):  # take the k nearest samples
        voteIlabel = labels[sortedDistIndicies[i]]  # label of the i-th nearest sample
        classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1  # tally the label
    # sort the labels by count in descending order and return the most frequent one
    sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]

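Notes:

1. numpy.tile() repeats an array vertically or horizontally; the direction and the number of copies are set by the second argument. Here inX is stacked four times (once per sample) and dataSet is subtracted from the result, so a single subtraction computes the difference between inX and every sample. For example, inX = [x1, x2] becomes [[x1, x2], [x1, x2], [x1, x2], [x1, x2]] after tile, while dataSet has the form [[a1, b1], [a2, b2], [a3, b3], [a4, b4]]. Squaring the differences, summing each row, and taking the square root then gives the Euclidean distance to each sample.

2. argsort() returns the indices that would sort the array values in ascending order.

3. classCount.get(voteIlabel, 0) uses the dictionary method get(), whose syntax is dict.get(key, default=None). key is the key to look up; if it is not in the dictionary, the value of default is returned.

4. The label counts are sorted with Python's built-in sorted(), which differs from list.sort(): the latter works only on lists and sorts in place, while the former accepts any iterable and returns a new list. In Python 2 this call was written with classCount.iteritems(), which returns an iterator over the dictionary's (key, value) pairs; it was removed in Python 3, so the code above uses classCount.items(). key=operator.itemgetter(1) picks the element at index 1 of each (label, count) pair, that is, the count. itemgetter() returns a function, which fits because the key argument accepts either a lambda expression or a function.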
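The four helpers described in these notes can be tried in isolation. The following is a minimal sketch on the toy dataset from section 1; the intermediate variable names (`tiled`, `order`, `ranked`) are chosen here for illustration.

```python
import operator
import numpy as np

inX = [0, 0]
dataSet = np.array([[1.0, 1.1], [1.0, 1.1], [0, 0], [0, 0.1]])
labels = ['A', 'A', 'B', 'B']

# tile: repeat inX once per sample row so the shapes match for subtraction
tiled = np.tile(inX, (dataSet.shape[0], 1))
print(tiled.shape)  # (4, 2)

# argsort: indices that would sort the distances in ascending order
distances = (((tiled - dataSet) ** 2).sum(axis=1)) ** 0.5
order = distances.argsort()
print(order[:2])  # the two nearest samples are rows 2 and 3

# dict.get with a default, then sorted() with itemgetter(1) on (label, count) pairs
classCount = {}
for i in order[:3]:
    classCount[labels[i]] = classCount.get(labels[i], 0) + 1
ranked = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
print(ranked)  # [('B', 2), ('A', 1)]
```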

classify0([0,0], group, labels, 3)

'B'
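The same vote can be written more compactly. The sketch below is an alternative, not the version used in this article: it relies on NumPy broadcasting instead of tile() and on collections.Counter instead of a hand-built dictionary. The name `knn_classify` is hypothetical.

```python
from collections import Counter
import numpy as np

def knn_classify(in_x, data_set, labels, k):
    """Return the majority label among the k samples nearest to in_x."""
    data_set = np.asarray(data_set, dtype=float)
    in_x = np.asarray(in_x, dtype=float)
    # Broadcasting subtracts in_x from every row without an explicit tile()
    distances = np.sqrt(((data_set - in_x) ** 2).sum(axis=1))
    nearest = distances.argsort()[:k]
    votes = Counter(labels[i] for i in nearest)
    # most_common(1) returns a list holding the (label, count) pair with the highest count
    return votes.most_common(1)[0][0]

group = np.array([[1.0, 1.1], [1.0, 1.1], [0, 0], [0, 0.1]])
labels = ['A', 'A', 'B', 'B']
print(knn_classify([0, 0], group, labels, 3))  # B
```

Broadcasting avoids building the tiled copy of the query point, which matters little for four samples but saves memory on larger datasets.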

3. Example 1: Using kNN to improve matches on a dating site

Step 1: Prepare the data: parse it from a text file

def file2matrix(filename):
    labels = {'didntLike': 1, 'smallDoses': 2, 'largeDoses': 3}  # text label -> integer class
    with open(filename) as fr:  # the file is closed automatically
        arrayOLines = fr.readlines()  # list containing every line of the file
    numberOfLines = len(arrayOLines)  # number of lines
    returnMat = zeros((numberOfLines, 3))  # NumPy matrix to return
    classLabelVector = []  # class labels
    index = 0
    for line in arrayOLines:
        line = line.strip()  # strip the trailing newline
        listFromLine = line.split('\t')  # fields are assumed to be tab-separated
        returnMat[index, :] = listFromLine[0:3]  # fill returnMat with the three features
        classLabelVector.append(labels[listFromLine[-1]])  # label of this sample
        index += 1
    return returnMat, classLabelVector

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

datingDataMat

array([[4.0920000e+04, 8.3269760e+00, 9.5395200e-01],

[1.4488000e+04, 7.1534690e+00, 1.6739040e+00],

[2.6052000e+04, 1.4418710e+00, 8.0512400e-01],

...,

[2.6575000e+04, 1.0650102e+01, 8.6662700e-01],

[4.8111000e+04, 9.1345280e+00, 7.2804500e-01],

[4.3757000e+04, 7.8826010e+00, 1.3324460e+00]])

datingLabels[0:20]

[3, 2, 1, 1, 1, 1, 3, 3, 1, 3, 1, 1, 2, 1, 1, 1, 1, 1, 2, 3]

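Notes:

1. readlines() is a method of Python file objects; it returns a list containing every line of the file.

2. split() is a string method that cuts a string at the given separator and returns a list of strings. Its Python 3 signature is str.split(sep=None, maxsplit=-1). sep is the separator, for example ' ' or '\t'; maxsplit is the maximum number of splits and defaults to -1, meaning no limit. If maxsplit is given, the result contains at most maxsplit + 1 substrings.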
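As a small illustration of split(), the sketch below parses one line in the way file2matrix does. The sample line is reconstructed from the first row of datingDataMat and the first label shown above (3, i.e. 'largeDoses'); that the file is tab-separated is an assumption.

```python
# Sample line rebuilt from the first row of the output above (assumed tab-separated)
line = "40920\t8.326976\t0.953952\tlargeDoses\n"

fields = line.strip().split('\t')
print(fields)  # ['40920', '8.326976', '0.953952', 'largeDoses']

# maxsplit=1 performs at most one split, so at most two substrings come back
head_tail = line.strip().split('\t', 1)
print(len(head_tail))  # 2

features = [float(x) for x in fields[0:3]]  # the three numeric features
label = fields[-1]                          # the text label in the last column
print(features, label)
```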

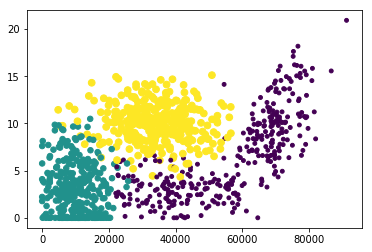

Step 2: Analyze the data: create a scatter plot with Matplotlib

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111)

ax.scatter(datingDataMat[:,0], datingDataMat[:,1],15.0*array(datingLabels),15.0*array(datingLabels))

plt.show()

Step 3: Normalize the feature values

def autoNorm(dataset):
    minVals = dataset.min(0)
    maxVals = dataset.max(0)  # minimum and maximum of each column
    ranges = maxVals - minVals  # range of each column
    m = dataset.shape[0]  # number of samples
    normDataSet = dataset - tile(minVals, (m, 1))
    normDataSet = normDataSet / tile(ranges, (m, 1))  # (value - min) / range, using tile() again
    return normDataSet, ranges, minVals

normMat, ranges, minVals = autoNorm(datingDataMat)

normMat

array([[0.44832535, 0.39805139, 0.56233353],

[0.15873259, 0.34195467, 0.98724416],

[0.28542943, 0.06892523, 0.47449629],

...,

[0.29115949, 0.50910294, 0.51079493],

[0.52711097, 0.43665451, 0.4290048 ],

[0.47940793, 0.3768091 , 0.78571804]])

ranges

array([9.1273000e+04, 2.0919349e+01, 1.6943610e+00])

minVals

array([0. , 0. , 0.001156])
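autoNorm returns ranges and minVals alongside the normalized matrix because any new point has to be scaled with the same values before it is classified. The sketch below shows this on made-up numbers, using broadcasting instead of tile(); the name `min_max_normalize` is hypothetical, and the code assumes no column is constant (a zero range would cause a division by zero).

```python
import numpy as np

def min_max_normalize(data):
    """Scale each column of data to the range [0, 1]."""
    data = np.asarray(data, dtype=float)
    min_vals = data.min(axis=0)
    ranges = data.max(axis=0) - min_vals
    # Broadcasting applies the per-column min and range to every row
    return (data - min_vals) / ranges, ranges, min_vals

toy = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])  # made-up values
norm, ranges, min_vals = min_max_normalize(toy)
print(norm)

# A new query point must be scaled with the same min_vals and ranges
query = np.array([2.5, 25.0])
scaled_query = (query - min_vals) / ranges
print(scaled_query)  # [0.25 0.75]
```

Without this step the first feature of the dating data, whose range is about 91273, would dominate the Euclidean distance over the other two features.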

Step 4: Test the classifier

def datingClassTest():
    hoRatio = 0.10  # fraction of the data held out for testing
    datingDataMat, datingLabels = file2matrix('datingTestSet.txt')  # read the file
    normMat, ranges, minVals = autoNorm(datingDataMat)  # normalize
    m = normMat.shape[0]  # total number of samples
    numTestVecs = int(m*hoRatio)  # the first numTestVecs samples are for testing, the rest for training
    errorCount = 0.0
    for i in range(numTestVecs):
        classifierResult = classify0(normMat[i,:], normMat[numTestVecs:m,:],
                                     datingLabels[numTestVecs:m], 3)  # classify test sample i
        print("the classifier came back with: %d, the real answer is: %d"
              % (classifierResult, datingLabels[i]))
        if classifierResult != datingLabels[i]:
            errorCount += 1.0  # record a misclassification
    print("the total error rate is: %f" % (errorCount/float(numTestVecs)))  # error rate

datingClassTest()

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 3

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 3, the real answer is: 3

the classifier came back with: 2, the real answer is: 2

the classifier came back with: 1, the real answer is: 1

the classifier came back with: 3, the real answer is: 1

the total error rate is: 0.050000
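datingClassTest() always holds out the first 10% of the rows, which gives a fair estimate only if the file is already in random order. As a hedged sketch, assuming that shuffling the rows before the split is acceptable, the hold-out test could be written as below. The names `knn_predict` and `holdout_error_rate` are hypothetical, the data is made up, and the features are assumed to be normalized already.

```python
from collections import Counter
import numpy as np

def knn_predict(x, train_x, train_y, k):
    """Majority label among the k training rows nearest to x (Euclidean distance)."""
    distances = np.sqrt(((train_x - x) ** 2).sum(axis=1))
    nearest = distances.argsort()[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

def holdout_error_rate(features, labels, ho_ratio=0.10, k=3, seed=0):
    """Shuffle the rows, hold out ho_ratio of them, and return the error rate."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    order = np.random.default_rng(seed).permutation(len(features))
    features, labels = features[order], labels[order]
    num_test = int(len(features) * ho_ratio)
    train_x, train_y = features[num_test:], labels[num_test:]
    errors = sum(
        knn_predict(features[i], train_x, train_y, k) != labels[i]
        for i in range(num_test)
    )
    return errors / num_test

# Made-up, well-separated clusters, so the held-out points are easy to classify
rng = np.random.default_rng(1)
cluster_a = rng.normal(loc=0.0, scale=0.1, size=(50, 2))
cluster_b = rng.normal(loc=5.0, scale=0.1, size=(50, 2))
data = np.vstack([cluster_a, cluster_b])
target = [1] * 50 + [2] * 50
print(holdout_error_rate(data, target))
```

Fixing the seed keeps the split reproducible, so error rates from different values of k can be compared on the same held-out rows.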