scrapy

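Scrapy is a framework for crawling websites and extracting structured data. Note the key word: framework. What does that tell you? It ships with a rich set of components. Scrapy's design borrows from Django, which gives you an idea of its scope. Unlike Django, though, Scrapy is also highly extensible. So if you say you can write crawlers in Python but don't know at least a little Scrapy...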

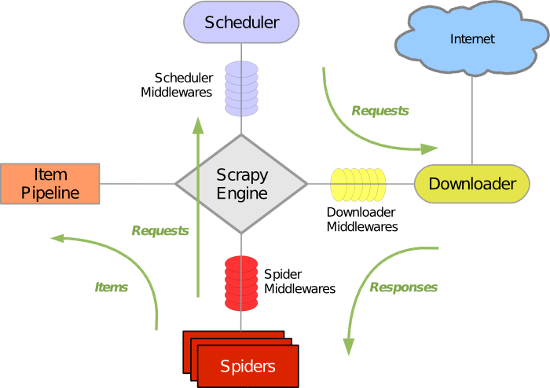

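Scrapy uses the Twisted asynchronous networking library to handle network communication. The overall architecture is shown in the diagram.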

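Scrapy mainly consists of the following components:

- Engine (Scrapy Engine)

  Handles the data flow of the whole system and triggers events (the core of the framework).

- Scheduler

  Accepts requests sent by the engine, pushes them onto a queue, and returns them when the engine asks again. Think of it as a priority queue of URLs (the addresses of the pages to crawl): it decides which URL is crawled next and also removes duplicate URLs.

- Downloader

  Downloads page content and returns it to the spiders (the Scrapy downloader is built on Twisted's efficient asynchronous model).

- Spiders

  Spiders do the main work: they extract the information you need from specific pages, the so-called items. They can also extract links so that Scrapy goes on to crawl the next page.

- Item Pipeline

  Processes the items that spiders extract from pages. Its main jobs are persisting items, validating them, and removing unwanted information. Once a page has been parsed by a spider, its items are sent to the item pipeline and processed in a specific order.

- Downloader Middlewares

  A layer between the Scrapy engine and the downloader that processes the requests and responses passing between them.

- Spider Middlewares

  A layer between the Scrapy engine and the spiders that processes the spiders' response input and request output.

- Scheduler Middlewares

  Middleware between the Scrapy engine and the scheduler that handles the requests and responses sent from the engine to the scheduler.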

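OK, that's enough of an introduction. Here comes the interview question: describe your understanding of Scrapy's architecture.

The above is my understanding. Yours may differ, so go with your own. If you are asked this in an interview, make sure you say something, because almost any answer works. If you say nothing, the interviewer is bound to follow up with: "Is there anything you'd like to add?"...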

Installation:

pass

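Let's go straight to the code.

The hands-on project: crawling Chouti with Scrapy. Are you a little disappointed at this point? Why not Taobao, Tencent Video, or Zhihu? Chouti is small, but it has everything we need. The goal is to show basic Scrapy usage, so there is no point spending effort on anti-crawling measures.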

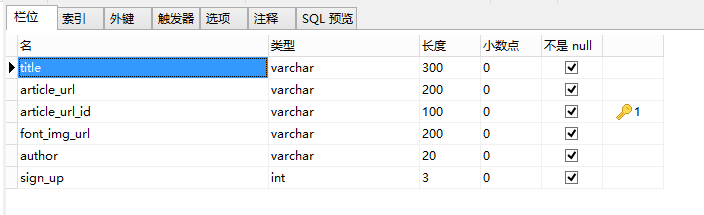

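Before starting the project, we need to settle one thing: what data do we want to crawl, and how will we store it? This example uses MySQL as the data store. The table structure is designed as follows:

With these fields in place we can start writing the spider. The first step is the item class.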

```python
import datetime
import re

import scrapy
from scrapy.loader import ItemLoader
from scrapy.loader.processors import Join, MapCompose, TakeFirst


def add_title(value):
    """Append a signature to the field value."""
    return value + 'Pontoon'


class ArticleItemLoader(ItemLoader):
    """ItemLoader subclass that keeps the first value of each extracted list."""
    default_output_processor = TakeFirst()


class ChouTiArticleItem(scrapy.Item):
    """Fields of one Chouti article."""
    title = scrapy.Field(
        input_processor=MapCompose(add_title)
    )
    article_url = scrapy.Field()
    article_url_id = scrapy.Field()
    font_img_url = scrapy.Field()
    author = scrapy.Field()
    sign_up = scrapy.Field()

    def get_insert_sql(self):
        """Return the INSERT statement and its parameters for this item."""
        insert_sql = '''
            insert into chouti(title, article_url, article_url_id, font_img_url, author, sign_up)
            VALUES (%s, %s, %s, %s, %s, %s)
        '''
        params = (self["title"], self["article_url"], self["article_url_id"], self["font_img_url"],
                  self["author"], self["sign_up"],)
        return insert_sql, params
```

The next step is parsing the data (pagination is not handled here, to keep things simple).

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.http import Request

from ..items import ChouTiArticleItem, ArticleItemLoader
from ..utils.common import get_md5


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    # allowed_domains takes bare domain names, without a trailing slash
    allowed_domains = ['dig.chouti.com']
    start_urls = ['https://dig.chouti.com/']
    header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                      "Chrome/71.0.3578.98 Safari/537.36"
    }

    def parse(self, response):
        article_list = response.xpath("//div[@class='item']")
        for article in article_list:
            # Note: the loader works on the article selector here, not on the response
            item_load = ArticleItemLoader(item=ChouTiArticleItem(), selector=article)
            article_url = article.xpath(".//a[@class='show-content color-chag']/@href").extract_first("")
            item_load.add_xpath("title", ".//a[@class='show-content color-chag']/text()")
            item_load.add_value("article_url", article_url)
            item_load.add_value("article_url_id", get_md5(article_url))
            item_load.add_xpath("font_img_url", ".//div[@class='news-pic']/img/@src")
            item_load.add_xpath("author", ".//a[@class='user-a']//b/text()")
            item_load.add_xpath("sign_up", ".//a[@class='digg-a']//b/text()")
            article_item = item_load.load_item()  # populate the fields defined above
            yield article_item
```

OK, at this point the data has been parsed into a dict-like item and yielded to the pipeline.

```python
import MySQLdb
import MySQLdb.cursors
from twisted.enterprise import adbapi  # makes database writes asynchronous


class MysqlTwistedPipleline(object):
    """Pipeline that stores Chouti items in MySQL."""
    def __init__(self, db_pool):
        self.db_pool = db_pool

    @classmethod
    def from_settings(cls, settings):
        """Hook that Scrapy calls automatically with the project settings."""
        db_params = dict(
            host=settings["MYSQL_HOST"],
            db=settings["MYSQL_DBNAME"],
            user=settings["MYSQL_USER"],
            password=settings["MYSQL_PASSWORD"],
            charset="utf8",
            cursorclass=MySQLdb.cursors.DictCursor,
            use_unicode=True,
        )
        db_pool = adbapi.ConnectionPool("MySQLdb", **db_params)

        return cls(db_pool)

    def process_item(self, item, spider):
        """Insert the item into the database asynchronously through Twisted."""
        query = self.db_pool.runInteraction(self.do_insert, item)  # runs do_insert in a pool thread
        query.addErrback(self.handle_error, item, spider)  # handles failures asynchronously
        return item  # pass the item on to any later pipeline

    def handle_error(self, failure, item, spider):
        """Handle a failed asynchronous insert."""
        print(failure)

    def do_insert(self, cursor, item):
        """Perform the actual insert."""
        insert_sql, params = item.get_insert_sql()
        # insert the Chouti row using the SQL and parameters built by the item
        cursor.execute(insert_sql, params)
```

Then add the configuration to settings.py and you are done.

```python
ITEM_PIPELINES = {
    'ChoutiSpider.pipelines.MysqlTwistedPipleline': 3,

}
...

MYSQL_HOST = "127.0.0.1"
MYSQL_DBNAME = "article_spider"  # database name
MYSQL_USER = "xxxxxx"
MYSQL_PASSWORD = "xxxxx"
```
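The pattern used above, where the item builds its own SQL and parameters and the pipeline only executes them, can be tried without a MySQL server. The sketch below is a stand-in under stated assumptions: it uses the standard-library `sqlite3` module instead of MySQLdb (so the placeholders are `?` rather than `%s`), a three-column table instead of the full one, and the names `FakeItem` and `save_item` are hypothetical.

```python
import sqlite3


class FakeItem(dict):
    """Hypothetical stand-in for a Scrapy item; just a dict subclass."""

    def get_insert_sql(self):
        # sqlite3 uses "?" placeholders; MySQLdb uses "%s" as in the post.
        insert_sql = "INSERT INTO chouti (title, article_url, sign_up) VALUES (?, ?, ?)"
        params = (self["title"], self["article_url"], self["sign_up"])
        return insert_sql, params


def save_item(conn, item):
    """Execute the item's own INSERT with bound parameters, then commit."""
    insert_sql, params = item.get_insert_sql()
    conn.execute(insert_sql, params)
    conn.commit()


# In-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE chouti (title TEXT, article_url TEXT, sign_up TEXT)")
save_item(conn, FakeItem(title="hello", article_url="https://example.com/1", sign_up="12"))
rows = conn.execute("SELECT title, article_url, sign_up FROM chouti").fetchall()
```

Passing the values as bound parameters rather than formatting them into the SQL string keeps quoting and escaping in the driver's hands, which is the same reason `cursor.execute(insert_sql, params)` is used in the pipeline.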

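OK, the warm-up project is done. Now for the main topic: following Scrapy's architecture diagram, I will briefly go through each component and take a look at its source code.

1. Spiders

Scrapy gives us five kinds of spider for building requests, parsing data, and returning items. The two in common use are scrapy.Spider and CrawlSpider. Below are the attributes and methods of a spider that come up most often.

scrapy.Spider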

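| Attribute / method | Purpose | Notes |
| --- | --- | --- |
| name | Name of the spider | Used when launching the spider |
| start_urls | Start URLs | A list; consumed by start_requests by default |
| allowed_domains | Simple URL filtering | When a request URL is not matched by allowed_domains you hit a very nasty error; see my post on distributed crawlers |
| start_requests() | The first requests | Can be overridden in your own spider to get past simple anti-crawling measures |
| custom_settings | Per-spider settings | Lets each spider customize its own settings |
| from_crawler | Instantiation entry point | In the source of every Scrapy component, this is what runs first |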

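With a spider we can customize start_requests, set custom_settings for it alone, and set request headers, proxies, and cookies. Earlier posts covered these basics, so the one extra thing I want to talk about is page parsing.

Page parsing comes in two flavors. In the first, the article list carries little information in its titles (news and information sites), and you only need to visit the article itself; in the loop you extract the URL from the href attribute of the a tag. The second is typical of e-commerce sites: the product list already contains a lot of information, so you need both the product data from the list and the user reviews from the detail page. In that case the loop extracts each li tag and then the a tag inside it to follow the link.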

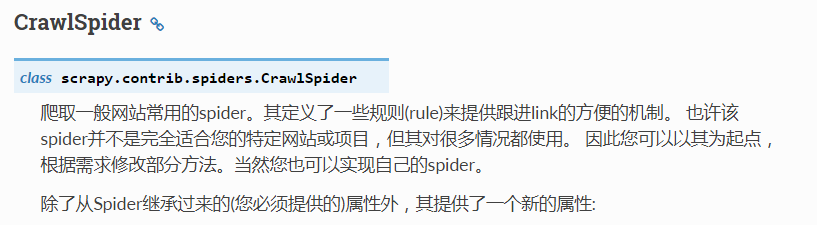

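CrawlSpider

The documentation explains it. In essence it takes the rules you define and matches each request URL against regular expressions, complementing allowed_domains, and it is commonly used to crawl an entire site. You can also crawl a whole site by finding patterns in its URLs yourself: the Sina and Dangdang Books crawlers on this blog both use URL matching rules that I defined myself.

For concrete usage, see the examples in the documentation and my post on crawling Lagou with CrawlSpider.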
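The idea behind rule-based URL matching can be sketched with the standard library alone. This is not how CrawlSpider is implemented; it only illustrates filtering extracted links against regular expressions. The names `ALLOW_PATTERNS` and `links_to_follow` are hypothetical, and the pattern is modeled on the Chouti pagination paths such as `/all/hot/recent/2`.

```python
import re

# Hypothetical allow-list: only pagination paths like /all/hot/recent/<number>.
ALLOW_PATTERNS = [re.compile(r"^/all/hot/recent/\d+$")]


def links_to_follow(hrefs, patterns=ALLOW_PATTERNS):
    """Keep only the hrefs that match at least one allowed pattern."""
    return [href for href in hrefs if any(p.search(href) for p in patterns)]


hrefs = ["/all/hot/recent/2", "/login", "/all/hot/recent/3", "/about"]
followed = links_to_follow(hrefs)
```

Here `followed` keeps the two pagination links and drops `/login` and `/about`, which is the effect a rule with a matching allow pattern is meant to have.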

OK, that's it for spiders for now. If I come across anything else I will come back and add it.

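2. Deduplication, depth, and priority in Scrapy

Scrapy: URL deduplication strategy

Scrapy's built-in deduplication works like this: it first computes a SHA-1 hash of the requested URL (a hash, not encryption) and then stores that hash in a set(), which is all it takes to deduplicate. (The distributed crawler covered later also deduplicates URLs, using a Redis set.)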

```python
import scrapy
from scrapy.http import Request


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['https://dig.chouti.com/']
    header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                      "Chrome/71.0.3578.98 Safari/537.36"
    }

    def parse(self, response):
        # The item parsing from the earlier spider is disabled here;
        # this version only follows the pagination links.
        print(response.request.url)

        page_list = response.xpath("//div[@id='dig_lcpage']//a/@href").extract()
        # page_list = response.xpath("//div[@id='dig_lcpage']//li[last()]/a/@href").extract()
        for page in page_list:
            page = "https://dig.chouti.com" + page
            yield Request(url=page, callback=self.parse)
```

```text
>>>https://dig.chouti.com/
https://dig.chouti.com/all/hot/recent/2
https://dig.chouti.com/all/hot/recent/3
https://dig.chouti.com/all/hot/recent/7
https://dig.chouti.com/all/hot/recent/6
https://dig.chouti.com/all/hot/recent/8
https://dig.chouti.com/all/hot/recent/9
https://dig.chouti.com/all/hot/recent/4
https://dig.chouti.com/all/hot/recent/5
```

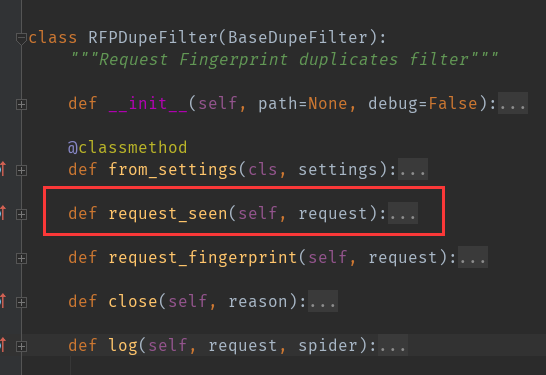

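The output shows that Scrapy deduplicates URLs for us internally: every page is visited once, even though each page links to all the others. Let's look at the source.

```python
from scrapy.dupefilters import RFPDupeFilter  # entry point into the source
```

Start with this method: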

```python
def request_seen(self, request):
    fp = self.request_fingerprint(request)  # SHA-1 fingerprint of the request (hashlib.sha1)
    if fp in self.fingerprints:  # self.fingerprints = set()
        return True  # already visited, so do not visit again
    self.fingerprints.add(fp)  # otherwise remember the fingerprint
    if self.file:
        self.file.write(fp + os.linesep)  # the fingerprint can also be written to a file
```

Once you understand how the source works, you can write your own URL deduplication rule.

```python
from scrapy.dupefilters import BaseDupeFilter


class ChouTiDupeFilter(BaseDupeFilter):

    @classmethod
    def from_settings(cls, settings):
        return cls()

    def request_seen(self, request):
        return False  # never treat a URL as seen, i.e. no deduplication

    def open(self):  # can return deferred
        pass

    def close(self, reason):  # can return a deferred
        pass

    def log(self, request, spider):  # log that a request has been filtered
        pass
```

Add the setting to settings.py:

```python
# custom URL deduplication
DUPEFILTER_CLASS = 'JobboleSpider.chouti_dupefilters.ChouTiDupeFilter'
```

Run the spider again now and you will see the same URLs being visited repeatedly.

```python
from scrapy.dupefilters import BaseDupeFilter
# request_fingerprint is defined in scrapy.utils.request in the Scrapy versions of this era
from scrapy.utils.request import request_fingerprint


class ChouTiDupeFilter(BaseDupeFilter):
    def __init__(self):
        self.visited_fd = set()

    @classmethod
    def from_settings(cls, settings):
        return cls()  # instantiate

    def request_seen(self, request):
        # return False  # would disable deduplication
        fd = request_fingerprint(request)  # request_fingerprint deserves a closer look
        if fd in self.visited_fd:
            return True
        self.visited_fd.add(fd)
        return False  # first time this request is seen

    def open(self):  # can return deferred
        print('start')

    def close(self, reason):  # can return a deferred
        print('close')

    def log(self, request, spider):  # log that a request has been filtered
        pass
```

```python
import hashlib
from scrapy.utils.request import request_fingerprint
from scrapy.http import Request

# Note: the query string must start with a question mark
url1 = "http://www.baidu.com/?k1=v1&k2=v2"
url2 = "http://www.baidu.com/?k2=v2&k1=v1"
ret1 = hashlib.sha1()
fd1 = Request(url=url1)
ret2 = hashlib.sha1()
fd2 = Request(url=url2)
ret1.update(url1.encode('utf-8'))
ret2.update(url2.encode('utf-8'))

# "fd" lines: plain SHA-1 of the raw URL string; "re" lines: Scrapy's request fingerprint
print("fd1:", ret1.hexdigest())
print("fd2:", ret2.hexdigest())
print("re1:", request_fingerprint(request=fd1))
print("re2:", request_fingerprint(request=fd2))
```

```text
>>>fd1: 1864fb5c5b86b058577fb94714617f3c3a226448
fd2: ba0922c651fc8f3b7fb07dfa52ff24ed05f6475e
re1: de8c206cf21aab5a0c6bbdcdaf277a9f71758525
re2: de8c206cf21aab5a0c6bbdcdaf277a9f71758525

# sha1 -> 40 hexadecimal characters
# md5  -> 32 hexadecimal characters
```
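The output above shows that two URLs whose query parameters are merely reordered hash differently as raw strings but get the same request fingerprint. A rough way to see why is to normalize the URL before hashing. The sketch below is a simplified stand-in, not Scrapy's actual fingerprint code: `canonical_url`, `simple_fingerprint`, and `SeenFilter` are hypothetical names, and only the method, the query-sorted URL, and the body are hashed.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonical_url(url):
    """Sort the query parameters and drop the fragment so equivalent URLs compare equal."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


def simple_fingerprint(method, url, body=b""):
    """SHA-1 over method, canonical URL, and body; returns 40 hex characters."""
    h = hashlib.sha1()
    h.update(method.upper().encode("utf-8"))
    h.update(canonical_url(url).encode("utf-8"))
    h.update(body)
    return h.hexdigest()


class SeenFilter:
    """Set-based duplicate filter in the spirit of request_seen()."""

    def __init__(self):
        self.fingerprints = set()

    def request_seen(self, method, url, body=b""):
        fp = simple_fingerprint(method, url, body)
        if fp in self.fingerprints:
            return True  # already seen
        self.fingerprints.add(fp)
        return False  # first time


dupefilter = SeenFilter()
first = dupefilter.request_seen("GET", "http://www.baidu.com/?k1=v1&k2=v2")
second = dupefilter.request_seen("GET", "http://www.baidu.com/?k2=v2&k1=v1")
```

With this filter `first` is `False` and `second` is `True`: the second URL is recognized as a duplicate because both normalize to the same string before hashing.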

Scrapy: depth and priority

Depth and priority are two concepts you hardly notice in everyday use, depth especially. You can read the depth of a request like this:

```python
response.request.meta.get("depth", None)
```

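In the execution flow of the source code, however, depth and priority matter a great deal: both are set on the requests in the spider output, travel with them through the engine, and end up stored in the scheduler. Let's look at the source.

```python
from scrapy.spidermiddlewares import depth  # entry point into the source, located under spidermiddlewares
```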

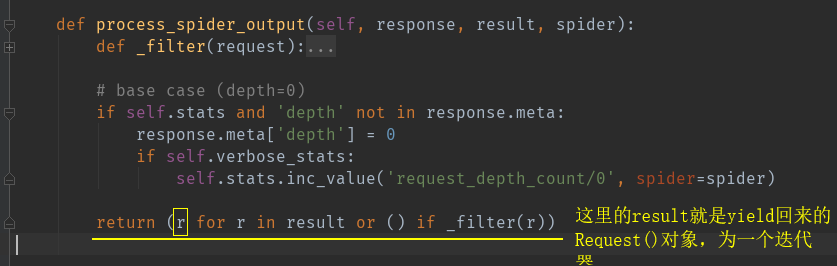

```python
import logging

from scrapy.http import Request

logger = logging.getLogger(__name__)


class DepthMiddleware(object):

    def __init__(self, maxdepth, stats=None, verbose_stats=False, prio=1):
        self.maxdepth = maxdepth  # maximum depth
        self.stats = stats  # stats collector
        self.verbose_stats = verbose_stats
        self.prio = prio  # priority adjustment per depth level

    @classmethod
    def from_crawler(cls, crawler):
        settings = crawler.settings
        maxdepth = settings.getint('DEPTH_LIMIT')
        verbose = settings.getbool('DEPTH_STATS_VERBOSE')
        prio = settings.getint('DEPTH_PRIORITY')
        return cls(maxdepth, crawler.stats, verbose, prio)

    def process_spider_output(self, response, result, spider):
        def _filter(request):
            """Closure over response and spider."""
            if isinstance(request, Request):
                depth = response.meta['depth'] + 1
                request.meta['depth'] = depth
                # priority handling
                if self.prio:
                    # With a positive DEPTH_PRIORITY the priority drops as depth grows,
                    # so shallower requests outrank deeper ones.
                    request.priority -= depth * self.prio
                if self.maxdepth and depth > self.maxdepth:
                    logger.debug(
                        "Ignoring link (depth > %(maxdepth)d): %(requrl)s ",
                        {'maxdepth': self.maxdepth, 'requrl': request.url},
                        extra={'spider': spider}
                    )
                    return False
                elif self.stats:
                    if self.verbose_stats:
                        self.stats.inc_value('request_depth_count/%s' % depth,
                                             spider=spider)
                    self.stats.max_value('request_depth_max', depth,
                                         spider=spider)
            return True

        # base case (depth=0)
        if self.stats and 'depth' not in response.meta:
            response.meta['depth'] = 0
            if self.verbose_stats:
                self.stats.inc_value('request_depth_count/0', spider=spider)
        # result is the iterable of objects yielded by the spider callback;
        # every r for which _filter(r) is True is passed on towards the scheduler
        return (r for r in result or () if _filter(r))
```
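The arithmetic inside `_filter` can be isolated into a small function to see how depth and priority evolve from one hop to the next. This is a sketch that mirrors the logic above without Scrapy; the function name `next_depth_and_priority` and its parameter names are hypothetical.

```python
def next_depth_and_priority(parent_depth, priority, depth_priority=1, depth_limit=0):
    """Return (depth, priority, keep) for a request yielded from a response at parent_depth.

    Mirrors _filter: depth grows by one, priority is lowered by depth * depth_priority,
    and the request is dropped when a non-zero depth_limit is exceeded.
    """
    depth = parent_depth + 1
    if depth_priority:
        priority -= depth * depth_priority
    keep = not (depth_limit and depth > depth_limit)
    return depth, priority, keep


# A request yielded from a start page (depth 0) with the default priority 0.
first_hop = next_depth_and_priority(0, 0)
# A request yielded from a depth-2 page when the limit is 2: it is dropped.
too_deep = next_depth_and_priority(2, 0, depth_priority=1, depth_limit=2)
```

`first_hop` comes out as `(1, -1, True)` and `too_deep` as `(3, -3, False)`, so with a positive depth priority each extra hop pushes a request further back in the queue, and the limit cuts the chain off.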

This is illustrated in the figure.

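3. Scheduler

The scheduler accepts requests from the engine and enqueues them, so that it can hand them back when the engine asks for them later.

Scheduler source code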

```python
import os
import json
import logging
from os.path import join, exists

from scrapy.utils.reqser import request_to_dict, request_from_dict
from scrapy.utils.misc import load_object
from scrapy.utils.job import job_dir

logger = logging.getLogger(__name__)


class Scheduler(object):

    def __init__(self, dupefilter, jobdir=None, dqclass=None, mqclass=None,
                 logunser=False, stats=None, pqclass=None):
        self.df = dupefilter
        self.dqdir = self._dqdir(jobdir)
        self.pqclass = pqclass
        self.dqclass = dqclass
        self.mqclass = mqclass
        self.logunser = logunser
        self.stats = stats

    @classmethod
    def from_crawler(cls, crawler):
        settings = crawler.settings
        dupefilter_cls = load_object(settings['DUPEFILTER_CLASS'])
        dupefilter = dupefilter_cls.from_settings(settings)
        pqclass = load_object(settings['SCHEDULER_PRIORITY_QUEUE'])
        dqclass = load_object(settings['SCHEDULER_DISK_QUEUE'])
        mqclass = load_object(settings['SCHEDULER_MEMORY_QUEUE'])
        logunser = settings.getbool('LOG_UNSERIALIZABLE_REQUESTS', settings.getbool('SCHEDULER_DEBUG'))
        return cls(dupefilter, jobdir=job_dir(settings), logunser=logunser,
                   stats=crawler.stats, pqclass=pqclass, dqclass=dqclass, mqclass=mqclass)

    def has_pending_requests(self):
        return len(self) > 0

    def open(self, spider):
        self.spider = spider
        self.mqs = self.pqclass(self._newmq)
        self.dqs = self._dq() if self.dqdir else None
        return self.df.open()

    def close(self, reason):
        if self.dqs:
            prios = self.dqs.close()
            with open(join(self.dqdir, 'active.json'), 'w') as f:
                json.dump(prios, f)
        return self.df.close(reason)

    def enqueue_request(self, request):
        if not request.dont_filter and self.df.request_seen(request):
            self.df.log(request, self.spider)
            return False
        dqok = self._dqpush(request)
        if dqok:
            self.stats.inc_value('scheduler/enqueued/disk', spider=self.spider)
        else:
            self._mqpush(request)
            self.stats.inc_value('scheduler/enqueued/memory', spider=self.spider)
        self.stats.inc_value('scheduler/enqueued', spider=self.spider)
        return True

    def next_request(self):
        request = self.mqs.pop()
        if request:
            self.stats.inc_value('scheduler/dequeued/memory', spider=self.spider)
        else:
            request = self._dqpop()
            if request:
                self.stats.inc_value('scheduler/dequeued/disk', spider=self.spider)
        if request:
            self.stats.inc_value('scheduler/dequeued', spider=self.spider)
        return request

    def __len__(self):
        return len(self.dqs) + len(self.mqs) if self.dqs else len(self.mqs)

    def _dqpush(self, request):
        if self.dqs is None:
            return
        try:
            reqd = request_to_dict(request, self.spider)
            self.dqs.push(reqd, -request.priority)
        except ValueError as e:  # non serializable request
            if self.logunser:
                msg = ("Unable to serialize request: %(request)s - reason:"
                       " %(reason)s - no more unserializable requests will be"
                       " logged (stats being collected)")
                logger.warning(msg, {'request': request, 'reason': e},
                               exc_info=True, extra={'spider': self.spider})
                self.logunser = False
            self.stats.inc_value('scheduler/unserializable',
                                 spider=self.spider)
            return
        else:
            return True

    def _mqpush(self, request):
        self.mqs.push(request, -request.priority)

    def _dqpop(self):
        if self.dqs:
            d = self.dqs.pop()
            if d:
                return request_from_dict(d, self.spider)

    def _newmq(self, priority):
        return self.mqclass()

    def _newdq(self, priority):
        return self.dqclass(join(self.dqdir, 'p%s' % priority))

    def _dq(self):
        activef = join(self.dqdir, 'active.json')
        if exists(activef):
            with open(activef) as f:
                prios = json.load(f)
        else:
            prios = ()
        q = self.pqclass(self._newdq, startprios=prios)
        if q:
            logger.info("Resuming crawl (%(queuesize)d requests scheduled)",
                        {'queuesize': len(q)}, extra={'spider': self.spider})
        return q

    def _dqdir(self, jobdir):
        if jobdir:
            dqdir = join(jobdir, 'requests.queue')
            if not exists(dqdir):
                os.makedirs(dqdir)
            return dqdir
```

First, from_crawler runs and loads the objects:

```python
@classmethod
def from_crawler(cls, crawler):
    settings = crawler.settings
    dupefilter_cls = load_object(settings['DUPEFILTER_CLASS'])
    # if DUPEFILTER_CLASS is not overridden, the default dedup filter is used
    dupefilter = dupefilter_cls.from_settings(settings)
    pqclass = load_object(settings['SCHEDULER_PRIORITY_QUEUE'])  # priority queue
    dqclass = load_object(settings['SCHEDULER_DISK_QUEUE'])  # disk queue
    mqclass = load_object(settings['SCHEDULER_MEMORY_QUEUE'])  # memory queue
    logunser = settings.getbool('LOG_UNSERIALIZABLE_REQUESTS', settings.getbool('SCHEDULER_DEBUG'))
    return cls(dupefilter, jobdir=job_dir(settings), logunser=logunser,
               stats=crawler.stats, pqclass=pqclass, dqclass=dqclass, mqclass=mqclass)
```

Then `__init__` runs to create the instance:

```python
def __init__(self, dupefilter, jobdir=None, dqclass=None, mqclass=None,
             logunser=False, stats=None, pqclass=None):
    self.df = dupefilter
    self.dqdir = self._dqdir(jobdir)
    self.pqclass = pqclass
    self.dqclass = dqclass
    self.mqclass = mqclass
    self.logunser = logunser
    self.stats = stats
```

Next, the open method runs:

```python
def open(self, spider):
    self.spider = spider
    self.mqs = self.pqclass(self._newmq)
    self.dqs = self._dq() if self.dqdir else None
    return self.df.open()
```

Then enqueue_request runs to put the request on the queue:

```python
def enqueue_request(self, request):
    """Enqueue a request."""
    # request.dont_filter defaults to False, and self.df.request_seen(request) returns
    # True when the URL has already been visited. For a new request the condition is
    # therefore False and execution continues below.
    if not request.dont_filter and self.df.request_seen(request):
        self.df.log(request, self.spider)
        return False
    dqok = self._dqpush(request)  # try the disk queue first; last in, first out
    if dqok:
        self.stats.inc_value('scheduler/enqueued/disk', spider=self.spider)
    else:
        self._mqpush(request)  # fall back to the memory queue, also last in, first out
        self.stats.inc_value('scheduler/enqueued/memory', spider=self.spider)
    self.stats.inc_value('scheduler/enqueued', spider=self.spider)
    return True
```

The _dqpush method:

```python
def _dqpush(self, request):
    if self.dqs is None:
        return
    try:
        reqd = request_to_dict(request, self.spider)  # serialize the request object into a dict
        self.dqs.push(reqd, -request.priority)  # push the dict onto the disk queue, keyed by -request.priority
    except ValueError as e:  # non serializable request
        if self.logunser:
            msg = ("Unable to serialize request: %(request)s - reason:"
                   " %(reason)s - no more unserializable requests will be"
                   " logged (stats being collected)")
            logger.warning(msg, {'request': request, 'reason': e},
                           exc_info=True, extra={'spider': self.spider})
            self.logunser = False
        self.stats.inc_value('scheduler/unserializable',
                             spider=self.spider)
        return
    else:
        return True
```

Then next_request runs to dequeue a request and hand it to the downloader:

```python
def next_request(self):
    """Dequeue a request."""
    request = self.mqs.pop()  # try the memory queue first
    if request:
        self.stats.inc_value('scheduler/dequeued/memory', spider=self.spider)
    else:
        request = self._dqpop()
        if request:
            self.stats.inc_value('scheduler/dequeued/disk', spider=self.spider)
    if request:
        self.stats.inc_value('scheduler/dequeued', spider=self.spider)
    return request

def __len__(self):
    return len(self.dqs) + len(self.mqs) if self.dqs else len(self.mqs)
```

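OK, the scheduler is essentially a queue. Scrapy uses the third-party queue library queuelib here, and requests of equal priority are handed to the downloader in last-in, first-out order.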
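To make the queue behavior concrete, here is a minimal sketch of a priority queue whose buckets are last-in, first-out stacks, keyed the same way the scheduler does it (`push(request, -request.priority)`, lowest key popped first). It is an illustration under assumptions, not queuelib's implementation; `MiniPriorityQueue` and `enqueue` are hypothetical names, and plain strings stand in for requests.

```python
class MiniPriorityQueue:
    """Priority queue of LIFO stacks: lowest key first, newest item first within a key."""

    def __init__(self):
        self.queues = {}  # key -> list used as a stack

    def push(self, item, key):
        self.queues.setdefault(key, []).append(item)

    def pop(self):
        if not self.queues:
            return None
        key = min(self.queues)  # lowest key == highest request priority
        stack = self.queues[key]
        item = stack.pop()  # last in, first out
        if not stack:
            del self.queues[key]
        return item

    def __len__(self):
        return sum(len(stack) for stack in self.queues.values())


def enqueue(queue, url, priority=0):
    """Push with the negated priority, as the scheduler does."""
    queue.push(url, -priority)


q = MiniPriorityQueue()
enqueue(q, "page-a")               # priority 0
enqueue(q, "page-b")               # priority 0, pushed later
enqueue(q, "urgent", priority=5)   # higher priority
order = [q.pop(), q.pop(), q.pop()]
```

The pop order is `urgent`, then `page-b`, then `page-a`: the higher priority wins first, and among equal priorities the most recently enqueued request comes out first, which is why the default crawl order tends toward depth-first.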

Scrapy: cookies

In Scrapy, cookies can be passed directly to Request().

```python
class Request(object_ref):

    def __init__(self, url, callback=None, method='GET', headers=None, body=None,
                 cookies=None, meta=None, encoding='utf-8', priority=0,
                 dont_filter=False, errback=None, flags=None):
        ...  # body omitted
```

The detailed usage is best shown with an example.

```python
import scrapy
from scrapy.http import Request
from scrapy.http.cookies import CookieJar


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['https://dig.chouti.com/']
    cookie_dict = {}

    def parse(self, response):
        cookie_jar = CookieJar()
        cookie_jar.extract_cookies(response, response.request)
        # _cookies is nested as domain -> path -> name -> cookie object
        for k, v in cookie_jar._cookies.items():
            for i, j in v.items():
                for m, n in j.items():
                    self.cookie_dict[m] = n.value

        print(cookie_jar)
        print(self.cookie_dict)
        # simulate login
        yield Request(url="https://dig.chouti.com/login", method="POST",
                      body="phone=xxxxxxxxxx&password=xxxxxxxx&oneMonth=1",
                      cookies=self.cookie_dict,
                      headers={
                          "User-Agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                                        "Chrome/71.0.3578.98 Safari/537.36",
                          "content-type": "application/x-www-form-urlencoded;charset=UTF-8",
                      },
                      callback=self.check_login,
                      )

        # Disabled in this version: the item parsing shown earlier, and this pagination loop:
        # print(response.request.url, response.request.meta.get("depth", 0))
        # page_list = response.xpath("//div[@id='dig_lcpage']//a/@href").extract()
        # for page in page_list:
        #     yield Request(url="https://dig.chouti.com" + page, callback=self.parse)

    def check_login(self, response):
        """Check whether login succeeded; the request to the front page must carry the cookies."""
        print(response.text)

        yield Request(url="https://dig.chouti.com/all/hot/recent/1",
                      cookies=self.cookie_dict,
                      callback=self.index
                      )

    def index(self, response):
        """Upvote every item on the page."""
        news_list = response.xpath("//div[@id='content-list']/div[@class='item']")
        for news in news_list:
            link_id = news.xpath(".//div[@class='part2']/@share-linkid").extract_first()
            print(link_id)
            yield Request(
                url="https://dig.chouti.com/link/vote?linksId=%s" % (link_id, ),
                method="POST",
                cookies=self.cookie_dict,
                callback=self.do_favs
            )

        # pagination
        page_list = response.xpath("//div[@id='dig_lcpage']//a/@href").extract()
        # page_list = response.xpath("//div[@id='dig_lcpage']//li[last()]/a/@href").extract()
        for page in page_list:
            page = "https://dig.chouti.com" + page
            yield Request(url=page, cookies=self.cookie_dict, callback=self.index)

    def do_favs(self, response):
        """Print the result of the upvote request."""
        print(response.text)
```
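The nested loop in `parse` boils down to turning the cookies a server sets into a flat name-to-value dict that can be passed as `cookies=`. The same idea can be sketched with the standard library alone; this is not what Scrapy's CookieJar does internally, the function name `cookies_to_dict` is hypothetical, and the header values below are made up for illustration.

```python
from http.cookies import SimpleCookie


def cookies_to_dict(set_cookie_headers):
    """Flatten Set-Cookie header values into a {name: value} dict."""
    result = {}
    for header in set_cookie_headers:
        parsed = SimpleCookie()
        parsed.load(header)  # attributes such as Path and Max-Age are parsed but ignored below
        for name, morsel in parsed.items():
            result[name] = morsel.value
    return result


# Made-up header values, standing in for what a login page might send.
headers = [
    "gpsd=abc123; Path=/; Max-Age=3600",
    "JSESSIONID=xyz789; Path=/",
]
cookie_dict = cookies_to_dict(headers)
```

`cookie_dict` ends up as `{"gpsd": "abc123", "JSESSIONID": "xyz789"}`, the same flat shape that the spider builds in `self.cookie_dict` before attaching it to each follow-up request.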