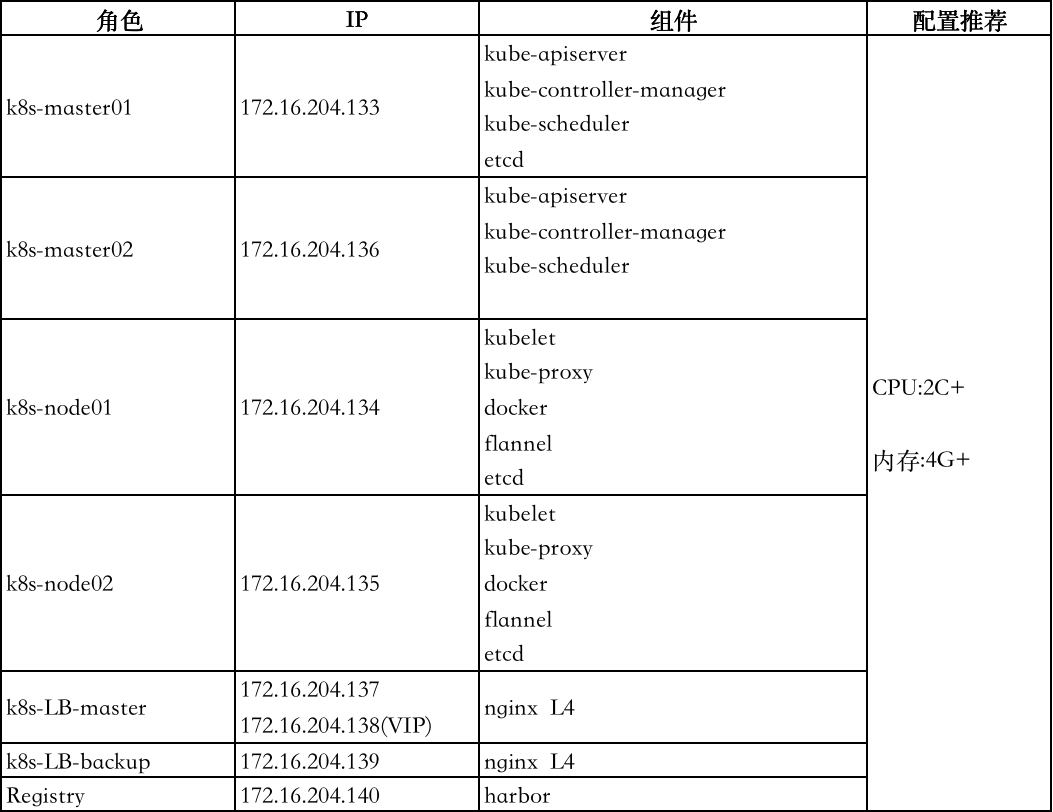

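Deployment plan

Software versions

Application plan

Cluster architecture plan

Installation notes:

This k8s cluster is deployed entirely by scripts, so pay close attention to the installation directory of each service on every node. My local installation root is /opt/k8s; to use a different directory, change every directory-related parameter in the scripts.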

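Deployment preparation (required on all three nodes):

1. Set the hostname

Use the role names listed above.

2. Synchronize the clock over NTP

3. Disable SELinux

4. Disable the firewalld firewall

5. Disable the swap partition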

swapoff -a && sysctl -w vm.swappiness=0
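Note that `swapoff -a` only lasts until the next reboot. Below is a minimal sketch of making the change persistent by commenting out the swap entries in an fstab-style file. The helper name `disable_swap_entries` is hypothetical and not part of the original setup, and it assumes the usual fstab layout with `swap` as the filesystem type in the third column. Try it on a copy of /etc/fstab first.

```shell
#!/bin/bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Assumption: the third whitespace-separated field is the filesystem type.
disable_swap_entries() {
    local fstab="$1"
    # Skip lines that are already comments; prefix lines whose third field is
    # "swap" with '#'. sed keeps the previous version as "$fstab.bak".
    sed -i.bak -E \
        '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$fstab"
}
```

On a real node this would be called as `disable_swap_entries /etc/fstab`; the untouched original stays next to it as /etc/fstab.bak.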

6. Configure IP forwarding for k8s (k8s introduced ipvs as an alternative to iptables after version 1.8; this installation uses version 1.7, so ipvs configuration is not involved)

    [root@k8s-master01 etcd-cert]# modprobe br_netfilter
    [root@k8s-master01 etcd-cert]# cat << EOF | tee /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    [root@k8s-master01 etcd-cert]# sysctl -p /etc/sysctl.d/k8s.conf

7. Enable ipvs support

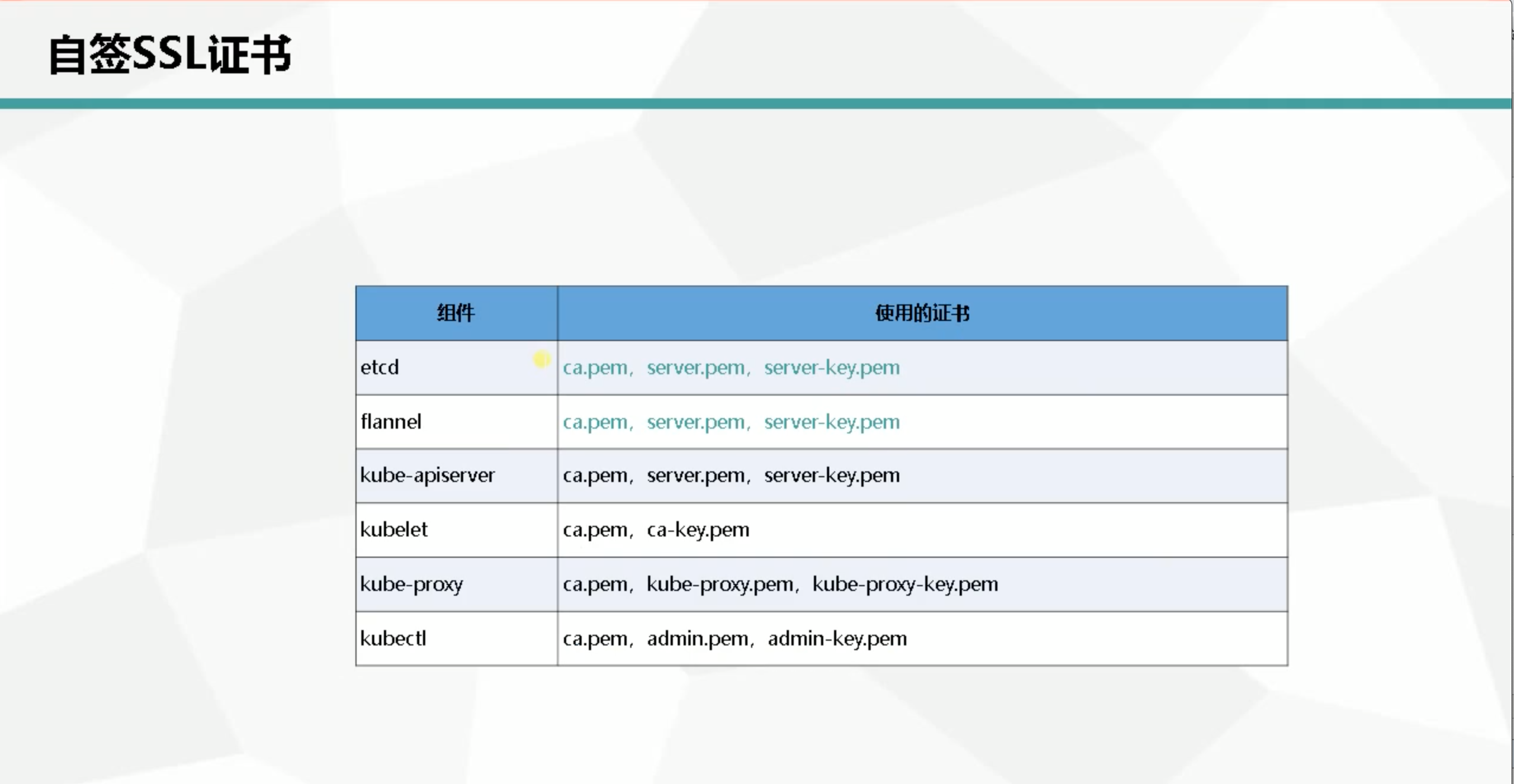

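I. Generate certificates

Because the apiserver and etcd services are both accessed over HTTPS, each service needs its own certificate. Self-signed certificates are sufficient.

The basic environment is now configured.

Now for the main part.

Configure the certificates (any one of the servers will do; here we run this on k8s-master):

1. Use cfssl to generate the self-signed certificates. First download the cfssl tools: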

    mkdir /opt/cfssl && cd /opt/cfssl
    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
    mv cfssl_linux-amd64 /usr/local/bin/cfssl
    mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
    mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

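2. Create the certificate directories

    mkdir -p /opt/k8s/k8s-cert
    mkdir -p /opt/k8s/etcd-cert

3. Generate the certificates with a script (the etcd node IP addresses in the script must be changed to your own)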

    mv etcd-cert.sh /opt/k8s/etcd-cert

Script contents:

    [root@k8s-master01 etcd-cert]# cat etcd-cert.sh
    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json <<EOF
    {
      "CN": "etcd CA",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Tianjing",
          "ST": "Tianjing"
        }
      ]
    }
    EOF

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

    #-----------------------

    cat > server-csr.json <<EOF
    {
      "CN": "etcd",
      "hosts": [
        "172.16.204.133",
        "172.16.204.134",
        "172.16.204.135"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "TianJing",
          "ST": "TianJing"
        }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Run the script:

sh etcd-cert.sh

Check that the certificates were generated:

[root@k8s-master01 etcd-cert]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd-cert.sh server.csr server-csr.json server-key.pem server.pem
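Before copying the certificates around, it can help to confirm that the four PEM files etcd needs are present and non-empty. This is only a small sketch: the function name `check_cert_files` is hypothetical, and the file list is taken from the `ls` output above.

```shell
#!/bin/bash
# Hypothetical helper: verify that the CA and server certificate/key files
# exist and are non-empty in the given directory.
check_cert_files() {
    local dir="$1" missing=0 f
    for f in ca.pem ca-key.pem server.pem server-key.pem; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    # Returns 0 when all files are present, 1 otherwise.
    return "$missing"
}
```

For example, `check_cert_files /opt/k8s/etcd-cert && echo "certificates look complete"`. To inspect the IP addresses baked into the server certificate, `openssl x509 -in server.pem -noout -text` prints them under the subject alternative names.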

Deploy the etcd cluster

Download the installation package

mkdir /opt/k8s/soft && cd /opt/k8s/soft

wget https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

tar -zxvf etcd-v3.3.10-linux-amd64.tar.gz

Create the etcd service directories:

mkdir -p /opt/k8s/etcd/{cfg,bin,ssl}

cd etcd-v3.3.10-linux-amd64

mv etcd etcdctl /opt/k8s/etcd/bin/

Here we deploy with a script:

cat etcd.sh

    #!/bin/bash
    # example: ./etcd.sh etcd01 192.168.1.10 etcd02=https://192.168.1.11:2380,etcd03=https://192.168.1.12:2380

    ETCD_NAME=$1
    ETCD_IP=$2
    ETCD_CLUSTER=$3

    WORK_DIR=/opt/k8s/etcd

    cat <<EOF >$WORK_DIR/cfg/etcd
    #[Member]
    ETCD_NAME="${ETCD_NAME}"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"
    ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"
    ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    EOF

    # In the unit file, \${...} is escaped so the variable name is written
    # literally and systemd resolves it from EnvironmentFile at start time.
    cat <<EOF >/usr/lib/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=${WORK_DIR}/cfg/etcd
    ExecStart=${WORK_DIR}/bin/etcd \
    --name=\${ETCD_NAME} \
    --data-dir=\${ETCD_DATA_DIR} \
    --listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \
    --listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
    --advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \
    --initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
    --initial-cluster=\${ETCD_INITIAL_CLUSTER} \
    --initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \
    --initial-cluster-state=new \
    --cert-file=${WORK_DIR}/ssl/server.pem \
    --key-file=${WORK_DIR}/ssl/server-key.pem \
    --peer-cert-file=${WORK_DIR}/ssl/server.pem \
    --peer-key-file=${WORK_DIR}/ssl/server-key.pem \
    --trusted-ca-file=${WORK_DIR}/ssl/ca.pem \
    --peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable etcd
    systemctl restart etcd

Run the deployment script

    chmod +x etcd.sh
    # Generate the config and unit file, and register the other etcd nodes as cluster members
    ./etcd.sh etcd01 172.16.204.133 etcd02=https://172.16.204.134:2380,etcd03=https://172.16.204.135:2380
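The third argument of etcd.sh is long and easy to mistype. As a sketch, it could be assembled from name=IP pairs. `build_peer_list` is a hypothetical helper, not part of the original scripts; the https scheme and port 2380 are taken from the example above.

```shell
#!/bin/bash
# Hypothetical helper: turn "name=ip" pairs into the comma-separated
# peer list that etcd.sh expects as its third argument.
build_peer_list() {
    local out="" pair
    for pair in "$@"; do
        # ${pair%%=*} is the member name, ${pair#*=} is the IP address.
        out="${out:+$out,}${pair%%=*}=https://${pair#*=}:2380"
    done
    printf '%s\n' "$out"
}

build_peer_list etcd02=172.16.204.134 etcd03=172.16.204.135
# prints etcd02=https://172.16.204.134:2380,etcd03=https://172.16.204.135:2380
```

The result can then be passed along, for example `./etcd.sh etcd01 172.16.204.133 "$(build_peer_list etcd02=172.16.204.134 etcd03=172.16.204.135)"`.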

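Copy the certificates etcd needs into the ssl directory (etcd reads them at startup, so they must be in place before the service can start)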

cp /opt/k8s/etcd-cert/{ca,server-key,server}.pem /opt/k8s/etcd/ssl/

Inspect the etcd systemd unit file (this is the configuration generated by the deployment script)

    cat /usr/lib/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/opt/k8s/etcd/cfg/etcd
    ExecStart=/opt/k8s/etcd/bin/etcd --name=${ETCD_NAME} --data-dir=${ETCD_DATA_DIR} --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} --initial-cluster=${ETCD_INITIAL_CLUSTER} --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} --initial-cluster-state=new --cert-file=/opt/k8s/etcd/ssl/server.pem --key-file=/opt/k8s/etcd/ssl/server-key.pem --peer-cert-file=/opt/k8s/etcd/ssl/server.pem --peer-key-file=/opt/k8s/etcd/ssl/server-key.pem --trusted-ca-file=/opt/k8s/etcd/ssl/ca.pem --peer-trusted-ca-file=/opt/k8s/etcd/ssl/ca.pem
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

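Start the etcd service on the k8s-master node

systemctl start etcd

The command hangs for a while: the other two nodes have not deployed and started etcd yet, so the attempts to reach the other two cluster members time out.

View the log: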

tail -f /var/log/messages

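Deploy etcd to the other two nodes (copy the etcd files to them and adjust the configuration file)

1. Create the k8s directory on k8s-node01 and k8s-node02

Run on k8s-node01 and k8s-node02:

mkdir -p /opt/k8s

2. Copy the etcd files and certificates from k8s-master to the other two nodes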

scp -r /opt/k8s/etcd 172.16.204.134:/opt/k8s

scp -r /opt/k8s/etcd 172.16.204.135:/opt/k8s

scp /usr/lib/systemd/system/etcd.service 172.16.204.134:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service 172.16.204.135:/usr/lib/systemd/system/

3. Edit the configuration file on k8s-node01 and start the etcd service

Leave the cluster membership setting (ETCD_INITIAL_CLUSTER) unchanged and change the other IP settings to the IP address of k8s-node01. The ports stay the same (2380 is the peer communication port, 2379 is the client port). The lines that need to change are ETCD_NAME and the four *_URLS settings.

    vim /opt/k8s/etcd/cfg/etcd

    #[Member]
    ETCD_NAME="etcd02"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://172.16.204.134:2380"
    ETCD_LISTEN_CLIENT_URLS="https://172.16.204.134:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.204.134:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://172.16.204.134:2379"
    ETCD_INITIAL_CLUSTER="etcd01=https://172.16.204.133:2380,etcd02=https://172.16.204.134:2380,etcd03=https://172.16.204.135:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

Start the etcd service:

systemctl daemon-reload

systemctl start etcd

4. Edit the configuration file on k8s-node02 and start the etcd service

As on k8s-node01, leave ETCD_INITIAL_CLUSTER unchanged and change the other IP settings to the IP address of k8s-node02. The ports stay the same (2380 is the peer communication port, 2379 is the client port). The member name must be unique, so it becomes etcd03 here.

    vim /opt/k8s/etcd/cfg/etcd

    #[Member]
    ETCD_NAME="etcd03"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://172.16.204.135:2380"
    ETCD_LISTEN_CLIENT_URLS="https://172.16.204.135:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.204.135:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://172.16.204.135:2379"
    ETCD_INITIAL_CLUSTER="etcd01=https://172.16.204.133:2380,etcd02=https://172.16.204.134:2380,etcd03=https://172.16.204.135:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

    # Start the etcd service
    systemctl daemon-reload
    systemctl start etcd
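The manual edits on k8s-node01 and k8s-node02 could also be scripted. Below is a minimal sketch with sed, assuming the config file has the layout generated by etcd.sh. `retarget_etcd_cfg` is a hypothetical helper name. It rewrites ETCD_NAME and the four *_URLS lines and leaves ETCD_INITIAL_CLUSTER untouched.

```shell
#!/bin/bash
# Hypothetical helper: point a copied etcd config at the local node.
# Arguments: <config file> <member name> <node IP>
retarget_etcd_cfg() {
    local cfg="$1" name="$2" ip="$3"
    # First expression: replace the member name.
    # Second expression: replace only the host part of the four URL settings;
    # ETCD_INITIAL_CLUSTER does not end in _URLS, so it is not matched.
    sed -i.bak -E \
        -e "s|^ETCD_NAME=.*|ETCD_NAME=\"${name}\"|" \
        -e "s#^(ETCD_(LISTEN_PEER|LISTEN_CLIENT|INITIAL_ADVERTISE_PEER|ADVERTISE_CLIENT)_URLS=\"https://)[^:]+#\1${ip}#" \
        "$cfg"
}
```

On k8s-node02, for instance, this would be `retarget_etcd_cfg /opt/k8s/etcd/cfg/etcd etcd03 172.16.204.135`; the previous file is kept as etcd.bak in the same directory.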

Verify that the etcd cluster is healthy

Run the following command on k8s-master, replacing the IP addresses with your own:

    /opt/k8s/etcd/bin/etcdctl \
    --ca-file=/opt/k8s/etcd/ssl/ca.pem \
    --cert-file=/opt/k8s/etcd/ssl/server.pem \
    --key-file=/opt/k8s/etcd/ssl/server-key.pem \
    --endpoints="https://172.16.204.133:2379,https://172.16.204.134:2379,https://172.16.204.135:2379" \
    cluster-health

Output

    member 6776bd806704ee4 is healthy: got healthy result from https://172.16.204.133:2379
    member 2663165d9244289c is healthy: got healthy result from https://172.16.204.134:2379
    member 4fdd244cdd2c8097 is healthy: got healthy result from https://172.16.204.135:2379
    cluster is healthy
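For scripting this check, the output can be reduced to a count of healthy members. The sketch below assumes the `cluster-health` output format shown above. `count_healthy_members` is a hypothetical helper that reads that output on stdin.

```shell
#!/bin/bash
# Hypothetical helper: count "member ... is healthy" lines on stdin.
# The trailing "cluster is healthy" summary line is deliberately not counted.
count_healthy_members() {
    # grep -c exits non-zero when the count is 0; "|| true" keeps the
    # function usable in scripts that run with "set -e".
    grep -c '^member .* is healthy' || true
}
```

Piping the etcdctl command from this section into `count_healthy_members` should print 3 for this three-node cluster.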

====== etcd cluster deployment complete ======

Install Docker on the node machines

1. Installing Docker on CentOS 7 requires a kernel version of 3.10 or later. Check the kernel version:

    [root@localhost ~]# uname -r

2. Update the yum packages

    [root@localhost ~]# yum -y update

3. Remove old Docker versions

    [root@localhost ~]# yum remove docker docker-common docker-selinux docker-engine

4. Install the required system tools

    # yum-utils provides yum configuration management
    # device-mapper-persistent-data and lvm2 are required by the devicemapper storage driver
    [root@localhost ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

5. Configure the Docker stable repository

    [root@localhost ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

6. Refresh the package index

    [root@localhost ~]# yum makecache fast

7. Install Docker CE

    [root@localhost ~]# yum -y install docker-ce

8. Start the Docker service

    [root@localhost ~]# service docker start

9. Check that Docker CE was installed successfully

    [root@localhost ~]# docker version

Author: 归去来ming. Link: https://www.jianshu.com/p/780ae3bd04fd. Source: Jianshu (简书). Copyright belongs to the author. For commercial reuse, contact the author for authorization; for non-commercial reuse, credit the source.

Configure a Docker registry mirror on both node machines

    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<-'EOF'
    {
      "registry-mirrors": ["https://4lymnb6o.mirror.aliyuncs.com"]
    }
    EOF

Start Docker

    sudo systemctl daemon-reload
    sudo systemctl restart docker

===== Docker installation complete =====

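k8s network model (CNI, short for Container Network Interface)

Basic design requirements of the k8s network model:

- One IP per pod

- Each pod has its own IP, and all containers in a pod share the network (the same IP)

- All containers can communicate with all other containers

- All nodes can communicate with all containers

Flannel: provides a virtual network for containers by assigning a subnet to each host. It is based on Linux TUN/TAP, encapsulates IP packets in UDP to build an overlay network, and uses etcd to keep track of the subnet allocations. (That description matches flannel's udp backend; the configuration written to etcd in this guide selects the vxlan backend.)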
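To make the per-host subnet idea concrete, here is a rough sketch of the arithmetic. It assumes the /16 cluster network used later in this guide and a /24 subnet per host, which is consistent with the 255.255.255.0 mask the container receives in the verification step. The function names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers illustrating how a cluster network is carved up.

# Number of per-host subnets that fit into the cluster network.
# Arguments: <cluster prefix length> <per-host prefix length>
subnet_count() {
    echo $(( 1 << ($2 - $1) ))
}

# Addresses in one host subnet, excluding the network and broadcast addresses.
# Argument: <per-host prefix length>
hosts_per_subnet() {
    echo $(( (1 << (32 - $1)) - 2 ))
}

subnet_count 16 24      # prints 256
hosts_per_subnet 24     # prints 254
```

So a /16 network leaves room for up to 256 hosts with a /24 each, far more than the two node machines used here.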

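Deploy the Flannel network (flannel itself runs mainly on the node machines; the flannel settings can be written to etcd from the master)

1. Write the allocated network range to etcd for flanneld to use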

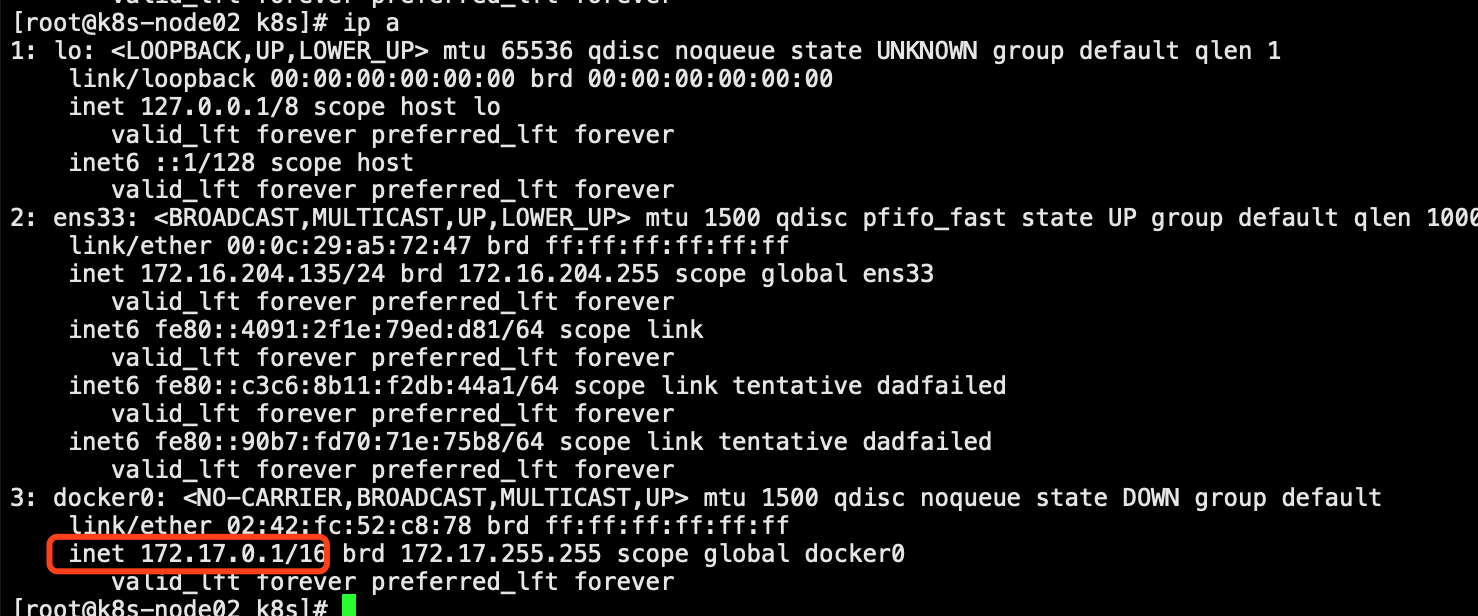

Pitfall: the network range configured in etcd must match the range of the docker0 interface on the node machines, i.e. "Network": "172.17.0.0/16"

Run the following command on the master; it writes the Flannel network settings to etcd:

    [root@k8s-master01 ~]# /opt/k8s/etcd/bin/etcdctl \
    --ca-file=/opt/k8s/etcd/ssl/ca.pem \
    --cert-file=/opt/k8s/etcd/ssl/server.pem \
    --key-file=/opt/k8s/etcd/ssl/server-key.pem \
    --endpoints="https://172.16.204.133:2379,https://172.16.204.134:2379,https://172.16.204.135:2379" \
    set /coreos.com/network/config '{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}'
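A stray quote or brace in that JSON value is easy to miss, and flanneld cannot use a value that does not parse. Here is a small sketch that builds the value with printf so the quoting lives in one place. `flannel_network_config` is a hypothetical helper, and it only covers the Network and Backend Type keys used in this guide.

```shell
#!/bin/bash
# Hypothetical helper: emit the flannel network config as one JSON line.
# Arguments: <network CIDR> [backend type, defaults to vxlan]
flannel_network_config() {
    local network="$1" backend="${2:-vxlan}"
    printf '{"Network":"%s","Backend":{"Type":"%s"}}\n' "$network" "$backend"
}

flannel_network_config 172.17.0.0/16
# prints {"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}
```

The output can be passed as the value of the `set /coreos.com/network/config` command, for example with `"$(flannel_network_config 172.17.0.0/16)"` as the last argument.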

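2. Download the binary package

wget https://github.com/coreos/flannel/releases/download/v0.12.0/flannel-v0.12.0-linux-amd64.tar.gz

3. Deploy flanneld: the following steps are performed on the node machines, and every node needs flannel

Create the working directories on all node machines

mkdir -p /opt/k8s/kubernetes/{bin,ssl,cfg}

Unpack the flannel archive

tar -zxvf flannel-v0.12.0-linux-amd64.tar.gz

Move the flanneld binary and its helper script into the bin directory

mv flanneld mk-docker-opts.sh /opt/k8s/kubernetes/bin/

Explanation:

mk-docker-opts.sh: a tool that converts the subnet flannel allocated to this host into Docker daemon options

flanneld: the flannel daemon binary

Copy the etcd certificate files into the ssl directory used by flannel

cp -rp /opt/k8s/etcd/ssl/* /opt/k8s/kubernetes/ssl/

Deploy flannel with a script

Script contents: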

    #!/bin/bash

    ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}
    #ETCD_ENDPOINTS=${1}

    # Inside the heredocs, \$ is escaped so that systemd, not this script,
    # expands the variable when the service starts.
    cat <<EOF >/opt/k8s/kubernetes/cfg/flanneld
    FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
    -etcd-cafile=/opt/k8s/etcd/ssl/ca.pem \
    -etcd-certfile=/opt/k8s/etcd/ssl/server.pem \
    -etcd-keyfile=/opt/k8s/etcd/ssl/server-key.pem"
    EOF

    cat <<EOF >/usr/lib/systemd/system/flanneld.service
    [Unit]
    Description=Flanneld overlay address etcd agent
    After=network-online.target network.target
    Before=docker.service

    [Service]
    Type=notify
    EnvironmentFile=/opt/k8s/kubernetes/cfg/flanneld
    ExecStart=/opt/k8s/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
    ExecStartPost=/opt/k8s/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    cat <<EOF >/usr/lib/systemd/system/docker.service
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/run/flannel/subnet.env
    ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS
    ExecReload=/bin/kill -s HUP \$MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable flanneld
    systemctl restart flanneld
    systemctl restart docker

Run the deployment script

Pass the etcd cluster endpoints as the argument

./flannel.sh https://172.16.204.133:2379,https://172.16.204.134:2379,https://172.16.204.135:2379
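Once flanneld is running, the unit file above has mk-docker-opts.sh write the Docker options to /run/flannel/subnet.env. Here is a sketch for pulling the bridge address out of that file so it can be compared with docker0. `flannel_bip` is a hypothetical helper, and it assumes the file contains a `--bip=<address>/<prefix>` option, which is what I would expect mk-docker-opts.sh to generate.

```shell
#!/bin/bash
# Hypothetical helper: print the --bip value found in a docker options file.
# Argument: <path to the env file written by mk-docker-opts.sh>
flannel_bip() {
    # Capture everything after "--bip=" up to the next space or quote.
    sed -n 's/.*--bip=\([^ "]*\).*/\1/p' "$1"
}
```

On a node, `flannel_bip /run/flannel/subnet.env` should print an address that matches the one shown by `ip addr show docker0`.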

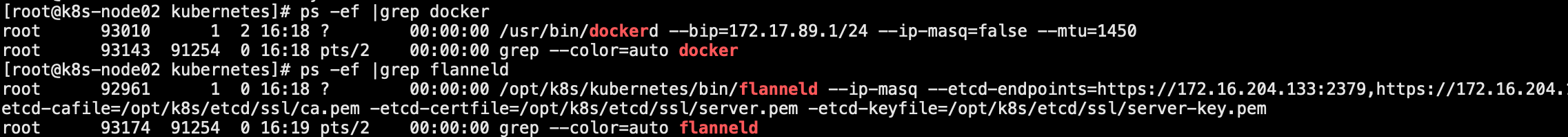

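Start the flannel and docker services

If docker was installed earlier, restart the docker service so that docker uses the subnet allocated by flannel:

systemctl start flanneld

systemctl restart docker

Verification:

1. Check that the flannel and docker services started correctly

2. Check that docker is using the subnet allocated by flannel

3. flannel assigns each node its own non-conflicting subnet, which guarantees that IP addresses are unique

4. Start a container on node2, then ping the container's IP address from node1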

    [root@k8s-node02 kubernetes]# docker run -it busybox
    / # ifconfig
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:59:02
              inet addr:172.17.89.2  Bcast:172.17.89.255  Mask:255.255.255.0
              UP BROADCAST RUNNING MULTICAST  MTU:1450  Metric:1
              RX packets:6 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:508 (508.0 B)  TX bytes:0 (0.0 B)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

    / #

    [root@k8s-node01 ~]# ping 172.17.89.2
    PING 172.17.89.2 (172.17.89.2) 56(84) bytes of data.
    64 bytes from 172.17.89.2: icmp_seq=1 ttl=63 time=0.832 ms
    64 bytes from 172.17.89.2: icmp_seq=2 ttl=63 time=0.661 ms
    64 bytes from 172.17.89.2: icmp_seq=3 ttl=63 time=0.628 ms
    64 bytes from 172.17.89.2: icmp_seq=4 ttl=63 time=0.432 ms
    64 bytes from 172.17.89.2: icmp_seq=5 ttl=63 time=0.508 ms
    64 bytes from 172.17.89.2: icmp_seq=6 ttl=63 time=0.417 ms
    64 bytes from 172.17.89.2: icmp_seq=7 ttl=63 time=0.337 ms
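The ping confirms connectivity. To also check that the container address really comes from the flannel network, one could test whether it falls inside 172.17.0.0/16. Below is a pure-bash sketch; `ip_to_int` and `ip_in_cidr` are hypothetical helpers and handle IPv4 only.

```shell
#!/bin/bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed when <ip> lies inside <network>/<prefix>.
# Arguments: <ip> <cidr>
ip_in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}"
    # Build the netmask from the prefix length, limited to 32 bits.
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

if ip_in_cidr 172.17.89.2 172.17.0.0/16; then
    echo "container address is inside the flannel network"
fi
```

With the container address from the output above, the check succeeds; an address such as 172.18.0.1 would fail it.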