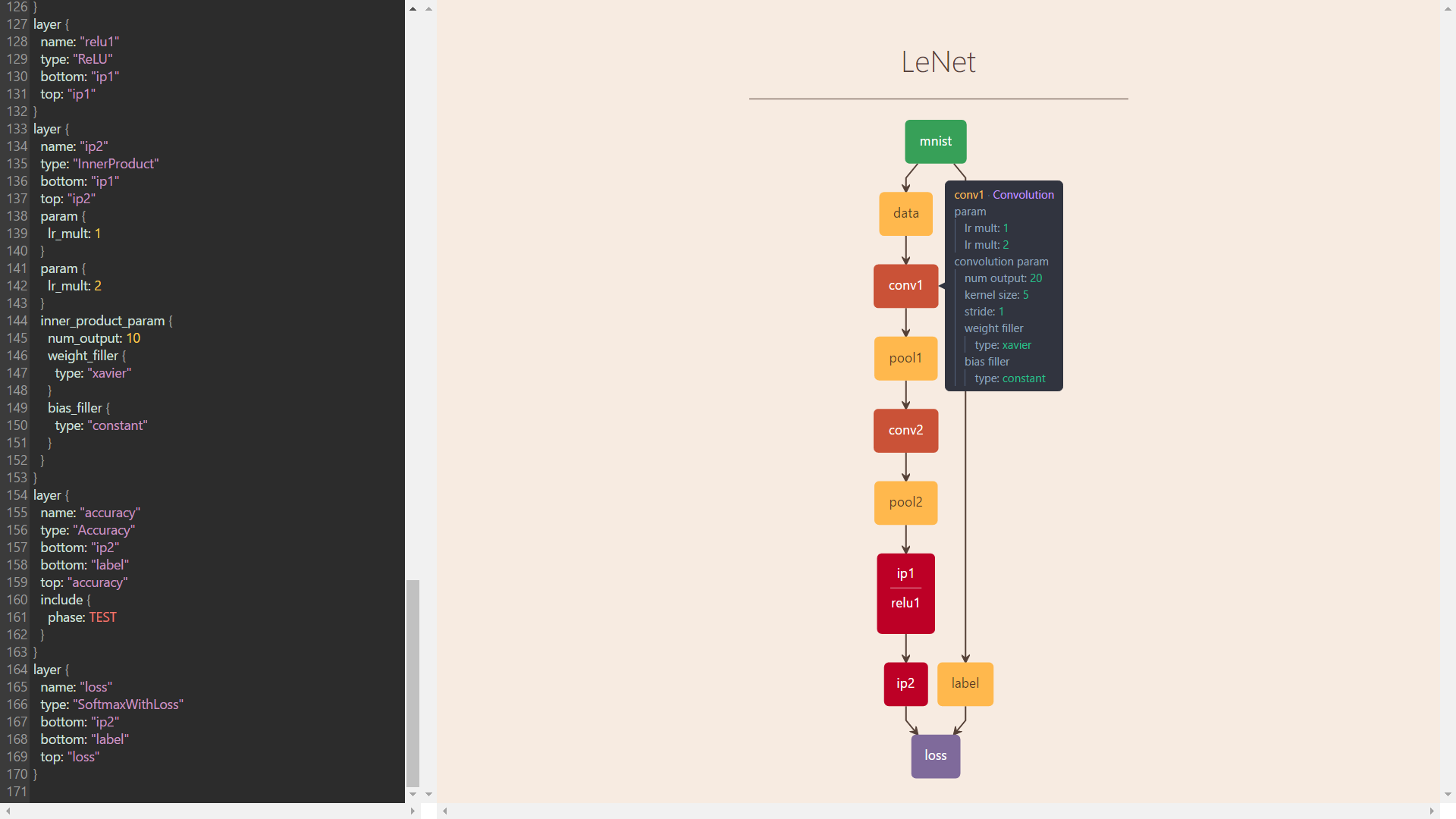

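The network definition file net.prototxt can be rendered as a diagram of the network structure. The quickest way is netscope: paste the file contents and press Shift+Enter to see the result.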

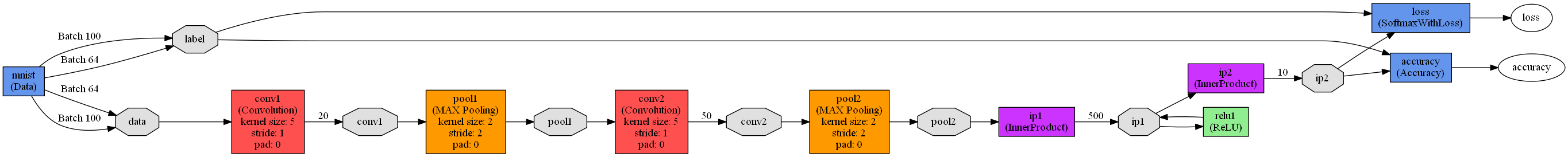

Caffe also ships with its own network drawing tool. The steps below use it with graphviz on Windows.

Install pydot.

pip install protobuf pydot

Download graphviz. Unzip it and add its bin directory to the PATH environment variable.
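Before running the drawing script, it can help to confirm that the prerequisites from the two steps above are visible to Python. The following is a minimal sketch using only the standard library; the helper names `has_module` and `check_draw_prerequisites` are hypothetical and not part of Caffe, and it assumes Graphviz is reached through its `dot` executable on PATH.

```python
import importlib.util
import shutil


def has_module(name):
    """Return True if the module can be located without fully importing it."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        # A missing parent package (e.g. "google") raises instead of returning None.
        return False


def check_draw_prerequisites():
    """Report which drawing prerequisites are found (hypothetical helper)."""
    return {
        # Graphviz: its bin directory must be on PATH so that "dot" resolves.
        "dot": shutil.which("dot") is not None,
        # Python packages installed with: pip install protobuf pydot
        "pydot": has_module("pydot"),
        "protobuf": has_module("google.protobuf"),
    }


if __name__ == "__main__":
    for name, found in check_draw_prerequisites().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

If `dot` is reported as missing right after editing the environment variables, opening a new command prompt usually picks up the updated PATH.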

cd %PythonPath%

python draw_net.py ..\..\..\..\examples\mnist\lenet_train_test.prototxt ..\..\..\..\examples\mnist\lenet_train_test.png

The result is shown below.

The Caffe run log, with some annotations, follows.

I1213 13:33:59.757851 2904 layer_factory.hpp:77] Creating layer mnist
I1213 13:33:59.757851 2904 common.cpp:36] System entropy source not available, using fallback algorithm to generate seed instead.
I1213 13:33:59.757851 2904 net.cpp:91] Creating Layer mnist
I1213 13:33:59.757851 2904 net.cpp:399] mnist -> data
I1213 13:33:59.757851 2904 net.cpp:399] mnist -> label
I1213 13:33:59.757851 5316 common.cpp:36] System entropy source not available, using fallback algorithm to generate seed instead.
I1213 13:33:59.757851 5316 db_lmdb.cpp:40] Opened lmdb examples/mnist/mnist_train_lmdb
I1213 13:33:59.882871 2904 data_layer.cpp:41] output data size: 64,1,28,28 # Batch size 64
I1213 13:33:59.898500 2904 net.cpp:141] Setting up mnist
I1213 13:33:59.898500 2904 net.cpp:148] Top shape: 64 1 28 28 (50176)
I1213 13:33:59.898500 2904 net.cpp:148] Top shape: 64 (64)
I1213 13:33:59.898500 348 common.cpp:36] System entropy source not available, using fallback algorithm to generate seed instead.
I1213 13:33:59.898500 2904 net.cpp:156] Memory required for data: 200960
I1213 13:33:59.898500 2904 layer_factory.hpp:77] Creating layer conv1
I1213 13:33:59.898500 2904 net.cpp:91] Creating Layer conv1
I1213 13:33:59.898500 2904 net.cpp:425] conv1 <- data
I1213 13:33:59.898500 2904 net.cpp:399] conv1 -> conv1
I1213 13:34:00.389096 2904 net.cpp:141] Setting up conv1
I1213 13:34:00.389096 2904 net.cpp:148] Top shape: 64 20 24 24 (737280) # num_output: 20 kernel_size: 5 28-5+1=24
I1213 13:34:00.389096 2904 net.cpp:156] Memory required for data: 3150080
I1213 13:34:00.389096 2904 layer_factory.hpp:77] Creating layer pool1
I1213 13:34:00.389096 2904 net.cpp:91] Creating Layer pool1
I1213 13:34:00.389096 2904 net.cpp:425] pool1 <- conv1
I1213 13:34:00.389096 2904 net.cpp:399] pool1 -> pool1
I1213 13:34:00.404721 2904 net.cpp:141] Setting up pool1
I1213 13:34:00.404721 2904 net.cpp:148] Top shape: 64 20 12 12 (184320) # kernel_size: 2 stride: 2 24/2=12
I1213 13:34:00.404721 2904 net.cpp:156] Memory required for data: 3887360
I1213 13:34:00.404721 2904 layer_factory.hpp:77] Creating layer conv2
I1213 13:34:00.404721 2904 net.cpp:91] Creating Layer conv2
I1213 13:34:00.404721 2904 net.cpp:425] conv2 <- pool1
I1213 13:34:00.404721 2904 net.cpp:399] conv2 -> conv2
I1213 13:34:00.404721 2904 net.cpp:141] Setting up conv2
I1213 13:34:00.404721 2904 net.cpp:148] Top shape: 64 50 8 8 (204800) # num_output: 50 kernel_size: 5 12-5+1=8
I1213 13:34:00.404721 2904 net.cpp:156] Memory required for data: 4706560
I1213 13:34:00.404721 2904 layer_factory.hpp:77] Creating layer pool2
I1213 13:34:00.404721 2904 net.cpp:91] Creating Layer pool2
I1213 13:34:00.404721 2904 net.cpp:425] pool2 <- conv2
I1213 13:34:00.420348 2904 net.cpp:399] pool2 -> pool2
I1213 13:34:00.420348 2904 net.cpp:141] Setting up pool2
I1213 13:34:00.420348 2904 net.cpp:148] Top shape: 64 50 4 4 (51200) # kernel_size: 2 stride: 2 8/2=4
I1213 13:34:00.420348 2904 net.cpp:156] Memory required for data: 4911360
I1213 13:34:00.420348 2904 layer_factory.hpp:77] Creating layer ip1
I1213 13:34:00.420348 2904 net.cpp:91] Creating Layer ip1
I1213 13:34:00.420348 2904 net.cpp:425] ip1 <- pool2
I1213 13:34:00.420348 2904 net.cpp:399] ip1 -> ip1
I1213 13:34:00.420348 2904 net.cpp:141] Setting up ip1
I1213 13:34:00.435976 2904 net.cpp:148] Top shape: 64 500 (32000) # num_output=500
I1213 13:34:00.435976 2904 net.cpp:156] Memory required for data: 5039360
I1213 13:34:00.435976 2904 layer_factory.hpp:77] Creating layer relu1
I1213 13:34:00.435976 2904 net.cpp:91] Creating Layer relu1
I1213 13:34:00.435976 2904 net.cpp:425] relu1 <- ip1
I1213 13:34:00.435976 2904 net.cpp:386] relu1 -> ip1 (in-place)
I1213 13:34:00.435976 2904 net.cpp:141] Setting up relu1
I1213 13:34:00.435976 2904 net.cpp:148] Top shape: 64 500 (32000)
I1213 13:34:00.435976 2904 net.cpp:156] Memory required for data: 5167360
I1213 13:34:00.435976 2904 layer_factory.hpp:77] Creating layer ip2
I1213 13:34:00.435976 2904 net.cpp:91] Creating Layer ip2
I1213 13:34:00.435976 2904 net.cpp:425] ip2 <- ip1
I1213 13:34:00.435976 2904 net.cpp:399] ip2 -> ip2
I1213 13:34:00.451606 2904 net.cpp:141] Setting up ip2
I1213 13:34:00.451606 2904 net.cpp:148] Top shape: 64 10 (640) # num_output=10
I1213 13:34:00.451606 2904 net.cpp:156] Memory required for data: 5169920
I1213 13:34:00.451606 2904 layer_factory.hpp:77] Creating layer loss
I1213 13:34:00.451606 2904 net.cpp:91] Creating Layer loss
I1213 13:34:00.451606 2904 net.cpp:425] loss <- ip2
I1213 13:34:00.451606 2904 net.cpp:425] loss <- label
I1213 13:34:00.451606 2904 net.cpp:399] loss -> loss
I1213 13:34:00.451606 2904 layer_factory.hpp:77] Creating layer loss
I1213 13:34:00.451606 2904 net.cpp:141] Setting up loss
I1213 13:34:00.451606 2904 net.cpp:148] Top shape: (1)
I1213 13:34:00.451606 2904 net.cpp:151] with loss weight 1
I1213 13:34:00.467228 2904 net.cpp:156] Memory required for data: 5169924
I1213 13:34:00.467228 2904 net.cpp:217] loss needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] ip2 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] relu1 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] ip1 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] pool2 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] conv2 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] pool1 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:217] conv1 needs backward computation.
I1213 13:34:00.467228 2904 net.cpp:219] mnist does not need backward computation.
I1213 13:34:00.467228 2904 net.cpp:261] This network produces output loss
I1213 13:34:00.467228 2904 net.cpp:274] Network initialization done.
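
The annotated "Top shape" and "Memory required for data" values in the training-net log above can be reproduced by hand. The sketch below is illustrative and not Caffe code: the function names are hypothetical, it assumes stride 1 and no padding for the convolutions, rounds pooling output sizes up, and assumes every top blob holds 4-byte floats (with the in-place relu1 top counted again, as the log's running total suggests).

```python
import math

BYTES_PER_FLOAT = 4  # assumption: blobs store 32-bit floats


def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution (rounded down)."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a pooling layer (rounded up)."""
    return math.ceil((size + 2 * pad - kernel) / stride) + 1


def lenet_top_shapes(batch=64, side=28):
    """Top blob shapes in the order they appear in the log."""
    c1 = conv_out(side, 5)   # 28-5+1 = 24
    p1 = pool_out(c1, 2, 2)  # 24/2   = 12
    c2 = conv_out(p1, 5)     # 12-5+1 = 8
    p2 = pool_out(c2, 2, 2)  # 8/2    = 4
    return [
        ("data", (batch, 1, side, side)),
        ("label", (batch,)),
        ("conv1", (batch, 20, c1, c1)),
        ("pool1", (batch, 20, p1, p1)),
        ("conv2", (batch, 50, c2, c2)),
        ("pool2", (batch, 50, p2, p2)),
        ("ip1", (batch, 500)),
        ("relu1", (batch, 500)),  # in-place, but still added to the total
        ("ip2", (batch, 10)),
        ("loss", ()),             # scalar: element count is 1
    ]


def running_memory(shapes):
    """Return (name, shape, element_count, cumulative_bytes) per top blob."""
    total = 0
    rows = []
    for name, shape in shapes:
        count = math.prod(shape)  # math.prod(()) == 1
        total += count * BYTES_PER_FLOAT
        rows.append((name, shape, count, total))
    return rows


if __name__ == "__main__":
    for name, shape, count, total in running_memory(lenet_top_shapes()):
        print(f"{name:6s} {str(shape):18s} count={count:7d} memory={total}")
```

Because the mnist layer has two tops, the log prints its memory figure once, after both data and label have been counted; the cumulative value on the label row is the one to compare against.
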
I1213 13:34:00.482890 2904 solver.cpp:181] Creating test net (#0) specified by net file: examples/mnist/lenet_train_test.prototxt
I1213 13:34:00.482890 2904 net.cpp:313] The NetState phase (1) differed from the phase (0) specified by a rule in layer mnist
I1213 13:34:00.482890 2904 net.cpp:49] Initializing net from parameters: