背景

2014年,VGG分别在定位和分类问题中获得了第一和第二名,在其他数据集上也实现了最好的结果。

结构

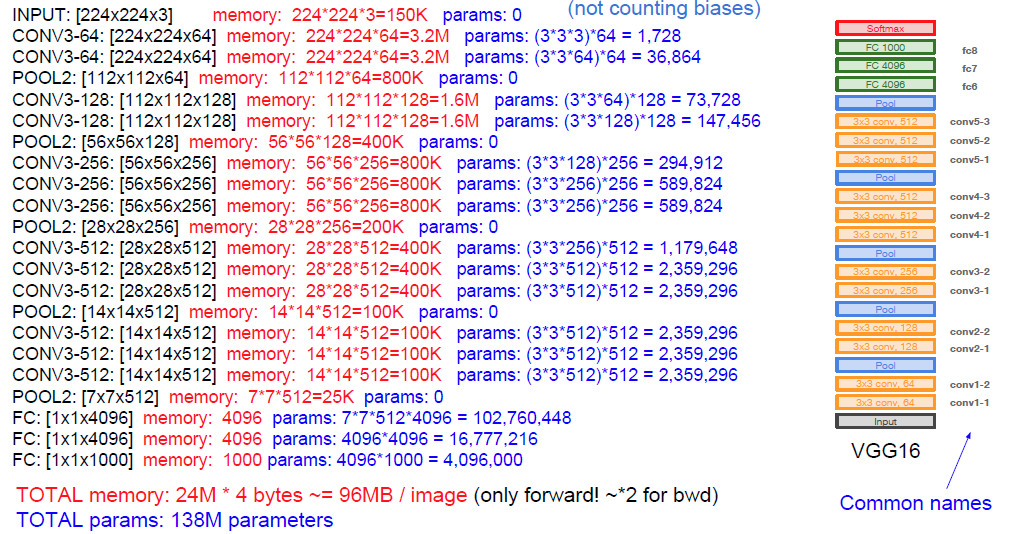

VGGNet探索了神经网络的深度与性能之间的关系,表明在结构相似的情况下,网络越深性能越好。

模型中大量使用3*3的卷积核的串联,构造出16到19层的网络。

2个3*3的卷积核的串联相当于5*5的卷积核。

3个3*3的卷积核的串联相当于7*7的卷积核。

其意义在于7*7所需要的参数为49,3个3*3的卷积核参数为27个,几乎减少了一半。

在C中还是用了1*1的卷积核,而且输出通道和输入通道数并没有发生改变,只是起到了线性变换的作用,其意义在VGG中其实意义不大。

结构图如下:

实现

data = mx.symbol.Variable(name="data") # group 1 conv1_1 = mx.symbol.Convolution(data=data, kernel=(3, 3), pad=(1, 1), num_filter=64, name="conv1_1") relu1_1 = mx.symbol.Activation(data=conv1_1, act_type="relu", name="relu1_1") pool1 = mx.symbol.Pooling( data=relu1_1, pool_type="max", kernel=(2, 2), stride=(2,2), name="pool1") # group 2 conv2_1 = mx.symbol.Convolution( data=pool1, kernel=(3, 3), pad=(1, 1), num_filter=128, name="conv2_1") relu2_1 = mx.symbol.Activation(data=conv2_1, act_type="relu", name="relu2_1") pool2 = mx.symbol.Pooling( data=relu2_1, pool_type="max", kernel=(2, 2), stride=(2,2), name="pool2") # group 3 conv3_1 = mx.symbol.Convolution( data=pool2, kernel=(3, 3), pad=(1, 1), num_filter=256, name="conv3_1") relu3_1 = mx.symbol.Activation(data=conv3_1, act_type="relu", name="relu3_1") conv3_2 = mx.symbol.Convolution( data=relu3_1, kernel=(3, 3), pad=(1, 1), num_filter=256, name="conv3_2") relu3_2 = mx.symbol.Activation(data=conv3_2, act_type="relu", name="relu3_2") pool3 = mx.symbol.Pooling( data=relu3_2, pool_type="max", kernel=(2, 2), stride=(2,2), name="pool3") # group 4 conv4_1 = mx.symbol.Convolution( data=pool3, kernel=(3, 3), pad=(1, 1), num_filter=512, name="conv4_1") relu4_1 = mx.symbol.Activation(data=conv4_1, act_type="relu", name="relu4_1") conv4_2 = mx.symbol.Convolution( data=relu4_1, kernel=(3, 3), pad=(1, 1), num_filter=512, name="conv4_2") relu4_2 = mx.symbol.Activation(data=conv4_2, act_type="relu", name="relu4_2") pool4 = mx.symbol.Pooling( data=relu4_2, pool_type="max", kernel=(2, 2), stride=(2,2), name="pool4") # group 5 conv5_1 = mx.symbol.Convolution( data=pool4, kernel=(3, 3), pad=(1, 1), num_filter=512, name="conv5_1") relu5_1 = mx.symbol.Activation(data=conv5_1, act_type="relu", name="relu5_1") conv5_2 = mx.symbol.Convolution( data=relu5_1, kernel=(3, 3), pad=(1, 1), num_filter=512, name="conv5_2") relu5_2 = mx.symbol.Activation(data=conv5_2, act_type="relu", name="conv1_2") pool5 = mx.symbol.Pooling( data=relu5_2, pool_type="max", kernel=(2, 2), stride=(2,2), name="pool5") # group 6 flatten = mx.symbol.Flatten(data=pool5, name="flatten") fc6 = mx.symbol.FullyConnected(data=flatten, num_hidden=4096, name="fc6") relu6 = mx.symbol.Activation(data=fc6, act_type="relu", name="relu6") drop6 = mx.symbol.Dropout(data=relu6, p=0.5, name="drop6") # group 7 fc7 = mx.symbol.FullyConnected(data=drop6, num_hidden=4096, name="fc7") relu7 = mx.symbol.Activation(data=fc7, act_type="relu", name="relu7") drop7 = mx.symbol.Dropout(data=relu7, p=0.5, name="drop7") # output fc8 = mx.symbol.FullyConnected(data=drop7, num_hidden=num_classes, name="fc8") softmax = mx.symbol.SoftmaxOutput(data=fc8, name='softmax') return softmax