Error 99 connecting to 192.168.3.212:6379. Cannot assign requested address

Redis - corelation between QPS, response time, number of connections, response size and network connection speed - Stack Overflow https://stackoverflow.com/questions/28241728/redis-corelation-between-qps-response-time-number-of-connections-response-s

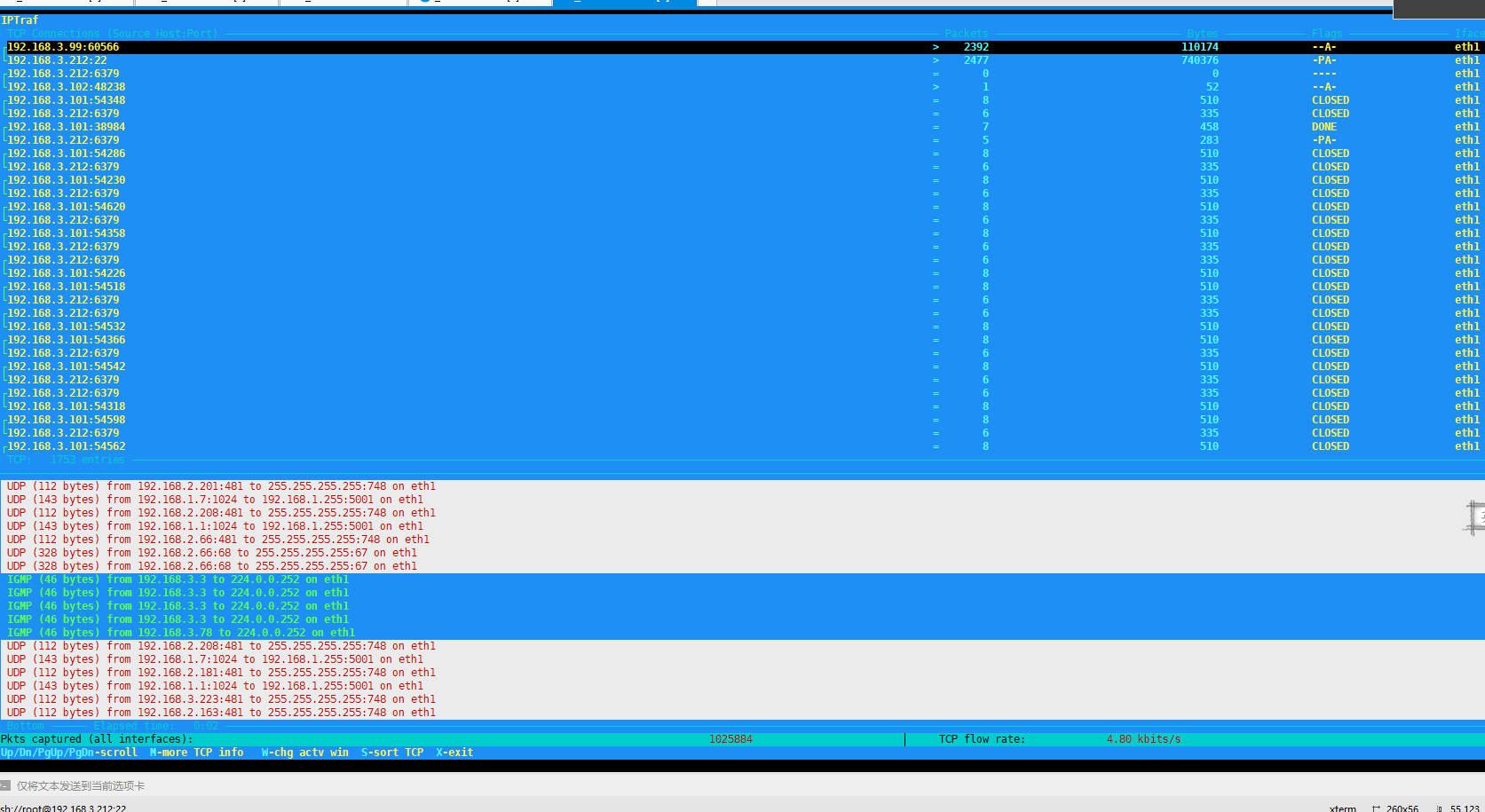

连接数与带宽

Here are my answers:

Want to understand more about this magic.

Redis is awesome, but there is no magic. It is just a smart and efficient implementation of very pragmatic concepts. And because it is a human-sized project, it is actually easy to understand why, by having a look at the source code.

Is this just network latency or Redis has to maintain some overhead till entire data is flushed out.

Of course, Redis has to maintain communication buffers, so that it can deal with slower network links. That said, this should have very little impact on the perceived latency. In your case, the 50 ms are probably mainly due to the network latency, which you could probably check by running a ping command or any other similar tool.

Can number of connections affect Redis throughput?

Of course, it can, like for any server software. Now, you need to distinguish the throughput per connection, and the global throughput of the server.

The throughput per connection is heavily impacted by the number of connections. Consider that the server can only provide a certain bandwidth, and this bandwidth is shared across connections. The more connections, the less bandwidth per connection.

On the other hand, the global throughput of the server is only lightly impacted by the number of connections. Redis can accept tens of thousands of connection with no issue. There is still an overhead though. As a rule of thumb, consider that at 30000 connections, Redis supports only half of the throughput it can support at 100 connections. See the nice graphs available on the Redis benchmark page.

Will the last request take 500muSec * 1000 = 500ms?

Yes, but your figures are probably wrong.

Yes, all activity is serialized (single-threaded design), so the processing time of each command has to be added. When many commands are received at the same time, the last one will be served after all the other ones. If each command takes 5 us to be processed, and 1000 are received at the same time, the last reply will be sent in 5 ms.

Now, in practice, the number of truly concurrent queries is not so high. Redis rarely receives 1000 simultaneous queries in the same event loop iteration.

Furthermore, you are confusing the response time (as measured on client-side), and theprocessing time (that would be measured on Redis side). The response time can be 500 us, but the processing time is much closer to 5 us, the difference being the time spent on the network and in the OS process scheduling. Keep in mind that only the processing time has to be cumulated, everything else is parallelized over the connections (network latency for instance).

To calculate the average processing time of your instance, just use redis-benchmark to saturate the instance. When using pipelining, it is not uncommon to see instances processing up to 400 Kop/s or more, which gives an average processing time of 2.5 us.

Can response size have an affect here?

Of course, it can, like for any server software. Past a certain size, the latency is always impacted by the volume of data, because both the bandwidth and the speed of the network are limited. With ethernet networks, this threshold is closely related to the size of the MTU.

TCP connection on the Redis has to wait till the last packet is delivered and if the network connection is slow, will it slowdown Redis?

Absolutely not. Redis systematically buffers the replies (whatever their size), and manages all sockets in a non-blocking way, thanks to an event loop. If one connection is slow (or one client is slow), Redis will fill the corresponding socket buffer as much it can, register the socket in the event loop, and move to another connection. The event loop will continue sending traffic on the slow connection when there is space again in the socket buffer. Nothing ever blocks.