Reposted from: https://www.orchome.com/1284

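This section uses GlusterFS as an example to explain StorageClass and dynamic provisioning in detail. It covers the whole path, from defining a StorageClass and creating the GlusterFS and Heketi services to a user requesting a PVC and a Pod consuming the storage, and in doing so takes a closer look at how storage works in k8s.

1. Preparation

First, install the GlusterFS client on the three nodes that will host GlusterFS: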

yum -y install glusterfs glusterfs-fuse

The GlusterFS management containers must run in privileged mode, so confirm that the kube-apiserver startup parameters include:

--allow-privileged=true

Label each node that will run the GlusterFS management service with "storagenode=glusterfs", so that the GlusterFS containers are scheduled onto the nodes where GlusterFS is installed:

[k8s@kube-server harbor]$ kubectl label node kube-node1 storagenode=glusterfs

node "kube-node1" labeled

[k8s@kube-server harbor]$ kubectl label node kube-node2 storagenode=glusterfs

node "kube-node2" labeled

[k8s@kube-server harbor]$ kubectl label node kube-node3 storagenode=glusterfs

node "kube-node3" labeled

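2. Creating the GlusterFS service container cluster

The GlusterFS service containers are deployed as a DaemonSet, which ensures that one GlusterFS management service runs on each storage node. For the glusterfs-daemonset.yaml definition, see https://github.com/gluster/gluster-kubernetes.

1) Add the following option to the kubelet startup parameters on every Node, because GlusterFS has to run in privileged container mode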

--allow-privileged

Apply the change:

systemctl daemon-reload

systemctl restart kubelet

systemctl status kubelet

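2) Add a data disk to every Node that runs GlusterFS

Note the device name that the data disk gets in the system once it is attached; it is needed in the next step.

3) Edit the topology.json topology file

Get a copy of the installation resources: git clone https://github.com/gluster/gluster-kubernetes.git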

[k8s@kube-server deploy]$ pwd

/home/k8s/gluster-kubernetes/deploy

[k8s@kube-server deploy]$ ls

gk-deploy heketi.json.template kube-templates ocp-templates topology.json

At least three nodes are required. Update the file in the following format:

[k8s@kube-server deploy]$ cat topology.json

{
  "clusters": [
    {
      "nodes": [
        {
          "node": {
            "hostnames": {
              "manage": [
                "kube-node1"
              ],
              "storage": [
                "172.16.10.101"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": [
                "kube-node2"
              ],
              "storage": [
                "172.16.10.102"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": [
                "kube-node3"
              ],
              "storage": [
                "172.16.10.103"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        }
      ]
    }
  ]
}
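Writing topology.json by hand becomes error-prone as the node list grows. As a minimal sketch, the same structure can be generated in Python from a list of host name and storage IP pairs. The helper name build_topology is hypothetical and not part of gluster-kubernetes, and the sketch assumes that every node exposes the same device list and sits in zone 1, as in the file above.

```python
import json


def build_topology(nodes, devices=("/dev/sdb",), zone=1):
    """Return a Heketi topology dict describing a single cluster.

    nodes: iterable of (manage_hostname, storage_ip) pairs.
    devices: raw block devices assumed to exist on every node.
    """
    return {
        "clusters": [
            {
                "nodes": [
                    {
                        "node": {
                            "hostnames": {"manage": [host], "storage": [ip]},
                            "zone": zone,
                        },
                        "devices": list(devices),
                    }
                    for host, ip in nodes
                ]
            }
        ]
    }


# Host names and addresses copied from the topology.json shown above.
NODES = [
    ("kube-node1", "172.16.10.101"),
    ("kube-node2", "172.16.10.102"),
    ("kube-node3", "172.16.10.103"),
]

topology = build_topology(NODES)
topology_json = json.dumps(topology, indent=2)
print(topology_json)
```

Redirecting the printed output to topology.json should give a file equivalent to the hand-written one.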

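4) Deploy GlusterFS + heketi on k8s

Check the environment first:

- At least three nodes are required

- Every node provides at least one raw block device

- The following ports are not in use: 2222, 24007, 24008, 49152~49251

- The following kernel modules are loaded: modprobe dm_snapshot && modprobe dm_mirror && modprobe dm_thin_pool

- The dependency package is installed: yum -y install glusterfs-fuse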
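The port requirement in the checklist above can be checked before running the deployment. The following is only a sketch: the function names are hypothetical, and "free" is approximated as "a TCP listener can be bound to the port right now", which may differ from what the GlusterFS containers actually need on a given host.

```python
import socket


def required_ports():
    """Ports named in the checklist: 2222, 24007, 24008 and 49152 to 49251."""
    return [2222, 24007, 24008] + list(range(49152, 49252))


def port_is_free(port, host="0.0.0.0"):
    """Return True if a TCP socket can be bound to the port at this moment."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


def busy_ports(ports):
    """Return the subset of ports that could not be bound."""
    return [port for port in ports if not port_is_free(port)]


if __name__ == "__main__":
    busy = busy_ports(required_ports())
    print("ports in use:", busy if busy else "none")
```

Run it on each storage node; any port it reports should be freed before starting gk-deploy.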

Run the deployment command:

Note: the -g option tells the script to create a glusterfs cluster service as well.

[k8s@kube-server deploy]$ ./gk-deploy -g

If everything goes well, the output ends like this:

....

service "heketi" created

deployment.extensions "heketi" created

Waiting for heketi pod to start ... OK

Flag --show-all has been deprecated, will be removed in an upcoming release

heketi is now running and accessible via https://172.30.86.3:8080 . To run

administrative commands you can install 'heketi-cli' and use it as follows:

# heketi-cli -s https://172.30.86.3:8080 --user admin --secret '<ADMIN_KEY>' cluster list

You can find it at https://github.com/heketi/heketi/releases . Alternatively,

use it from within the heketi pod:

# /opt/k8s/bin/kubectl -n default exec -i heketi-75dcfb7d44-vj9bk -- heketi-cli -s https://localhost:8080 --user admin --secret '<ADMIN_KEY>' cluster list

For dynamic provisioning, create a StorageClass similar to this:

---
apiVersion: storage.k8s.io/v1beta1
kind: StorageClass
metadata:
  name: glusterfs-storage
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "https://172.30.86.3:8080"

Deployment complete!

Check which service instances were created:

[k8s@kube-server deploy]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

glusterfs-88469 1/1 Running 0 2h 172.16.10.102 kube-node2

glusterfs-lwm4n 1/1 Running 0 2h 172.16.10.103 kube-node3

glusterfs-pfgwb 1/1 Running 0 2h 172.16.10.101 kube-node1

heketi-75dcfb7d44-vj9bk 1/1 Running 0 1h 172.30.86.3 kube-node2

my-nginx-86555897f9-2kn92 1/1 Running 2 8h 172.30.49.2 kube-node1

my-nginx-86555897f9-d95t9 1/1 Running 4 2d 172.30.48.2 kube-node3

[k8s@kube-server deploy]$ kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

heketi ClusterIP 10.254.42.129 <none> 8080/TCP 1h glusterfs=heketi-pod

heketi-storage-endpoints ClusterIP 10.254.4.122 <none> 1/TCP 1h <none>

kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 7d <none>

my-nginx ClusterIP 10.254.191.237 <none> 80/TCP 5d run=my-nginx

[k8s@kube-server deploy]$ kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

heketi 1 1 1 1 1h

my-nginx 2 2 2 2 5d

[k8s@kube-server deploy]$ kubectl get secret

NAME TYPE DATA AGE

default-token-p5wjd kubernetes.io/service-account-token 3 7d

heketi-config-secret Opaque 3 1h

heketi-service-account-token-mrtsx kubernetes.io/service-account-token 3 2h

kubelet-api-test-token-gdj7g kubernetes.io/service-account-token 3 6d

[k8s@kube-server deploy]$

5) Usage example

On a node that can manage the k8s cluster with kubectl, install the heketi client:

yum -y install heketi-client

Create a 1 GB PV volume:

[k8s@kube-server deploy]$ export HEKETI_CLI_SERVER=https://172.30.86.3:8080

[k8s@kube-server deploy]$ heketi-cli volume create --size=1 --persistent-volume --persistent-volume-endpoint=heketi-storage-endpoints | kubectl create -f -

persistentvolume "glusterfs-900fb349" created

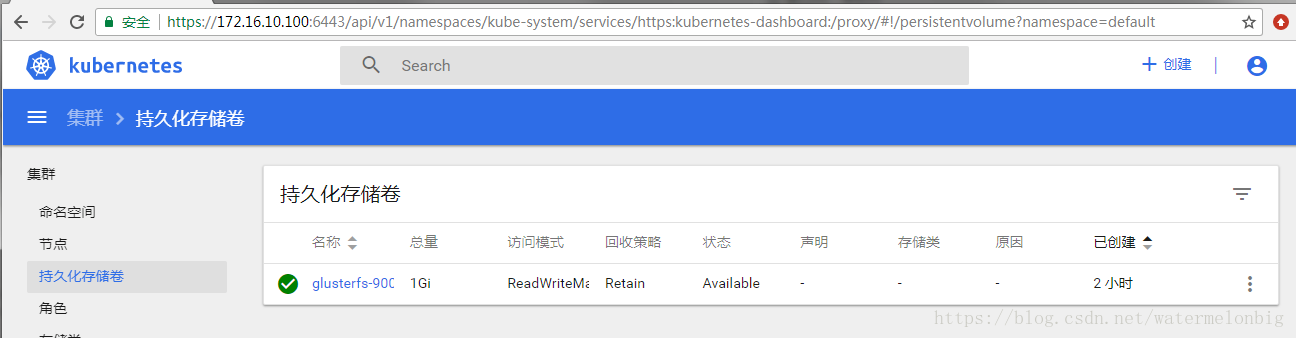

在Dashboard上看看这个刚创建的存储卷:

通过heketi服务查看和管理GlusterFS集群: 查看集群列表:

[root@kube-node1 ~]# curl 10.254.42.129:8080/clusters

{"clusters":["ada54ffbeac15a5c9a7521e0c7d2f636"]}

Show the cluster details:

[root@kube-node1 ~]# curl 10.254.42.129:8080/clusters/ada54ffbeac15a5c9a7521e0c7d2f636

{"id":"ada54ffbeac15a5c9a7521e0c7d2f636","nodes":["49ac6f56ef21408bcad7c7613cd40bd8","bdf51ae46025cd4fcf134f7be36c32de","fc21262379ec3636e3eadcae15efcc94"],"volumes":["42b01b9b08af23b751b2359fb161c004","900fb349e56af275f47d523d08fdfd6e"],"block":true,"file":true,"blockvolumes":[]}

Show the node details:

A state of online means the node is healthy.

[root@kube-node1 ~]# curl 10.254.42.129:8080/nodes/49ac6f56ef21408bcad7c7613cd40bd8

{"zone":1,"hostnames":{"manage":["kube-node3"],"storage":["172.16.10.103"]},"cluster":"ada54ffbeac15a5c9a7521e0c7d2f636","id":"49ac6f56ef21408bcad7c7613cd40bd8","state":"online","devices":[{"name":"/dev/sdb","storage":{"total":8253440,"free":5087232,"used":3166208},"id":"2f6b2f6c289a2f6bf48fbec59c0c2009","state":"online","bricks":[{"id":"2ea90ebd791a4230e927d233d1c8a7d1","path":"/var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_2ea90ebd791a4230e927d233d1c8a7d1/brick","device":"2f6b2f6c289a2f6bf48fbec59c0c2009","node":"49ac6f56ef21408bcad7c7613cd40bd8","volume":"42b01b9b08af23b751b2359fb161c004","size":2097152},{"id":"4c98684d878ffe7dbfc1008336460eed","path":"/var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_4c98684d878ffe7dbfc1008336460eed/brick","device":"2f6b2f6c289a2f6bf48fbec59c0c2009","node":"49ac6f56ef21408bcad7c7613cd40bd8","volume":"900fb349e56af275f47d523d08fdfd6e","size":1048576}]}]}
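The node response above is dense, so a small summary helps when checking several nodes. The sketch below assumes the storage figures are in KiB; this is inferred from the brick size 2097152 matching a 2 GiB volume and is not stated in the source. The helper name summarize_node is hypothetical, and the sample is a trimmed copy of the response with the brick details shortened.

```python
import json

KIB_PER_GIB = 1024 * 1024  # assumption: Heketi reports sizes in KiB


def summarize_node(node):
    """Return (node_state, per_device_summaries) for one Heketi node document."""
    devices = []
    for dev in node["devices"]:
        storage = dev["storage"]
        devices.append({
            "name": dev["name"],
            "state": dev["state"],
            "total_gib": round(storage["total"] / KIB_PER_GIB, 2),
            "used_gib": round(storage["used"] / KIB_PER_GIB, 2),
            "free_gib": round(storage["free"] / KIB_PER_GIB, 2),
            "bricks": len(dev["bricks"]),
        })
    return node["state"], devices


# Trimmed copy of the curl response shown above.
SAMPLE = json.loads("""
{"zone": 1, "state": "online",
 "devices": [{"name": "/dev/sdb", "state": "online",
              "storage": {"total": 8253440, "free": 5087232, "used": 3166208},
              "bricks": [{"size": 2097152}, {"size": 1048576}]}]}
""")

state, devices = summarize_node(SAMPLE)
print(state)
for dev in devices:
    print(dev)
```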

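6) Create an nginx application that uses GlusterFS dynamic provisioning

Note: user authentication is not enabled in this example. To enable it, create a secret and pass it to the Gluster dynamic provisioner through the StorageClass parameters.

Below is an example StorageClass. The PVC created against it later requests 2 GB of on-demand storage for our HelloWorld application.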

# cat gluster-storage-class.yaml

apiVersion: storage.k8s.io/v1beta1
kind: StorageClass
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "https://10.254.42.129:8080"
  restuser: "joe"
  restuserkey: "My Secret Life"

- name: the name of the StorageClass

- provisioner: the storage provisioner

- resturl: the Heketi REST URL

- restuser: has no effect here because authentication is not enabled

- restuserkey: same as above
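Since restuser and restuserkey have no effect while authentication is disabled, they can be left out. As a sketch, the manifest can be generated in Python with the credentials made optional. The function name is hypothetical, the resturl is copied from the example above, and the output is JSON, which kubectl create -f accepts as well as YAML.

```python
import json


def glusterfs_storage_class(name, resturl, restuser=None, restuserkey=None):
    """Build a StorageClass manifest; credentials are added only when given."""
    parameters = {"resturl": resturl}
    if restuser is not None:
        parameters["restuser"] = restuser
    if restuserkey is not None:
        parameters["restuserkey"] = restuserkey
    return {
        "apiVersion": "storage.k8s.io/v1beta1",  # API version used in this article
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "kubernetes.io/glusterfs",
        "parameters": parameters,
    }


storage_class = glusterfs_storage_class(
    "gluster-heketi", "https://10.254.42.129:8080"
)
manifest = json.dumps(storage_class, indent=2)
print(manifest)
```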

Create the StorageClass:

[k8s@kube-server ~]$ kubectl create -f gluster-storage-class.yaml

storageclass.storage.k8s.io "gluster-heketi" created

[k8s@kube-server ~]$ kubectl get storageclass

NAME PROVISIONER AGE

gluster-heketi kubernetes.io/glusterfs 43s

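Create a PersistentVolumeClaim (PVC) to request storage for our HelloWorld application:

We create a PVC that asks for 2 GB of storage. At that point the Kubernetes dynamic provisioning framework and Heketi automatically provision a new GlusterFS volume and generate a Kubernetes PersistentVolume (PV) object.

annotations: the Kubernetes storage class annotation, whose value is the name of the StorageClass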

gluster-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gluster1
  annotations:
    volume.beta.kubernetes.io/storage-class: gluster-heketi
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

[k8s@kube-server ~]$ kubectl create -f gluster-pvc.yaml

persistentvolumeclaim "gluster1" created

The PVC is now bound to a dynamically provisioned volume:

[k8s@kube-server ~]$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

gluster1 Bound pvc-53e824cf-7eb7-11e8-bf5c-080027395360 2Gi RWO gluster-heketi 53s

[k8s@kube-server ~]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

glusterfs-900fb349 1Gi RWX Retain Available 2h

pvc-53e824cf-7eb7-11e8-bf5c-080027395360 2Gi RWO Delete Bound default/gluster1 gluster-heketi 1m
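The claim above selects its StorageClass through the beta annotation. As a sketch of an alternative, and an assumption that goes beyond the original article, the same claim can be expressed with the spec.storageClassName field, which Kubernetes has offered since v1.6. The helper name pvc_manifest is hypothetical.

```python
import json


def pvc_manifest(name, storage_class, size, access_mode="ReadWriteOnce"):
    """Build a PVC manifest that selects its class via spec.storageClassName."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
        },
    }


# Same name, class and size as gluster-pvc.yaml above.
claim = pvc_manifest("gluster1", "gluster-heketi", "2Gi")
claim_json = json.dumps(claim, indent=2)
print(claim_json)
```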

Create an nginx instance that uses the PVC:

nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod1
  labels:
    name: nginx-pod1
spec:
  containers:
  - name: nginx-pod1
    image: nginx:1.7.9
    ports:
    - name: web
      containerPort: 80
    volumeMounts:
    - name: gluster-vol1
      mountPath: /usr/share/nginx/html
  volumes:
  - name: gluster-vol1
    persistentVolumeClaim:
      claimName: gluster1 # claimName: the name of the PVC to use

[k8s@kube-server ~]$ kubectl create -f nginx-pod.yaml

pod "nginx-pod1" created

[k8s@kube-server ~]$ kubectl get pods -o wide|grep nginx-pod

nginx-pod1 1/1 Running 0 33s 172.30.86.3 kube-node2

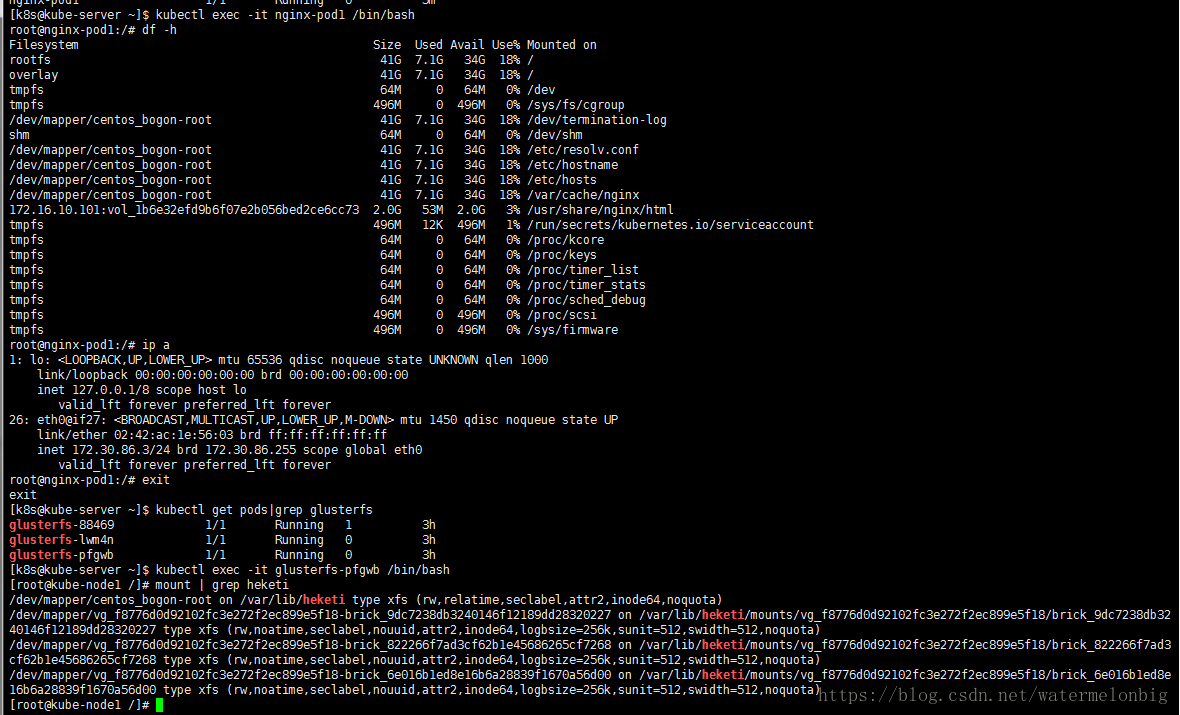

Log in to the Pod and create a web page:

[k8s@kube-server ~]$ kubectl exec -it nginx-pod1 /bin/bash

root@nginx-pod1:/# df -h

Filesystem Size Used Avail Use% Mounted on

rootfs 41G 7.1G 34G 18% /

overlay 41G 7.1G 34G 18% /

tmpfs 64M 0 64M 0% /dev

tmpfs 496M 0 496M 0% /sys/fs/cgroup

/dev/mapper/centos_bogon-root 41G 7.1G 34G 18% /dev/termination-log

shm 64M 0 64M 0% /dev/shm

/dev/mapper/centos_bogon-root 41G 7.1G 34G 18% /etc/resolv.conf

/dev/mapper/centos_bogon-root 41G 7.1G 34G 18% /etc/hostname

/dev/mapper/centos_bogon-root 41G 7.1G 34G 18% /etc/hosts

/dev/mapper/centos_bogon-root 41G 7.1G 34G 18% /var/cache/nginx

172.16.10.101:vol_1b6e32efd9b6f07e2b056bed2ce6cc73 2.0G 53M 2.0G 3% /usr/share/nginx/html

tmpfs 496M 12K 496M 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 64M 0 64M 0% /proc/kcore

tmpfs 64M 0 64M 0% /proc/keys

tmpfs 64M 0 64M 0% /proc/timer_list

tmpfs 64M 0 64M 0% /proc/timer_stats

tmpfs 64M 0 64M 0% /proc/sched_debug

tmpfs 496M 0 496M 0% /proc/scsi

tmpfs 496M 0 496M 0% /sys/firmware

root@nginx-pod1:/# cd /usr/share/nginx/html

root@nginx-pod1:/usr/share/nginx/html# echo 'Hello World from GlusterFS!!!' > index.html

root@nginx-pod1:/usr/share/nginx/html# ls

index.html

root@nginx-pod1:/usr/share/nginx/html# cat index.html

Hello World from GlusterFS!!!

root@nginx-pod1:/usr/share/nginx/html# exit

exit

Access our web page:

[k8s@kube-server ~]$ curl http://172.30.86.3

Hello World from GlusterFS!!!

Now check the gluster pods for the index.html file we just wrote. Log in to any gluster pod:

[root@kube-node1 brick]# pwd

/var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_6e016b1ed8e16b6a28839f1670a56d00/brick

[root@kube-node1 brick]# ls

index.html

[root@kube-node1 brick]# cat index.html

Hello World from GlusterFS!!!

7) Delete the glusterfs cluster configuration

curl -X DELETE 10.254.42.129:8080/devices/46b2685901f56d6fe0cc85bf3d37bf75 # delete a device by its device id

curl -X DELETE 10.254.42.129:8080/nodes/8bd8497a8dcda0708508228f4ae8c2ae # delete a node by its node id

A node can be deleted only after all of its devices have been deleted.

The cluster can be deleted only after every node listed under it has been deleted:

curl -X DELETE 10.254.42.129:8080/clusters/ada54ffbeac15a5c9a7521e0c7d2f636
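The ordering rule above (devices first, then their node, then the cluster) can be sketched as a function that returns the DELETE requests in a valid order. The function name is hypothetical, and the ids and service address are the ones that appear in this article's outputs. The sketch only builds the request list and sends nothing.

```python
def teardown_requests(cluster_id, nodes):
    """Return (method, path) pairs in an order that respects the rule above.

    nodes: mapping of node id -> list of device ids on that node.
    """
    requests = []
    for node_id, device_ids in nodes.items():
        # Every device of a node goes before the node itself.
        for device_id in device_ids:
            requests.append(("DELETE", f"/devices/{device_id}"))
        requests.append(("DELETE", f"/nodes/{node_id}"))
    # The cluster goes last, once no node is left under it.
    requests.append(("DELETE", f"/clusters/{cluster_id}"))
    return requests


CLUSTER_ID = "ada54ffbeac15a5c9a7521e0c7d2f636"
NODE_DEVICES = {
    "49ac6f56ef21408bcad7c7613cd40bd8": ["2f6b2f6c289a2f6bf48fbec59c0c2009"],
    "bdf51ae46025cd4fcf134f7be36c32de": ["e100120226d5d9567ed0f92b9810236c"],
    "fc21262379ec3636e3eadcae15efcc94": ["f8776d0d92102fc3e272f2ec899e5f18"],
}

plan = teardown_requests(CLUSTER_ID, NODE_DEVICES)
for method, path in plan:
    print(f"curl -X {method} 10.254.42.129:8080{path}")
```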

Heketi服务

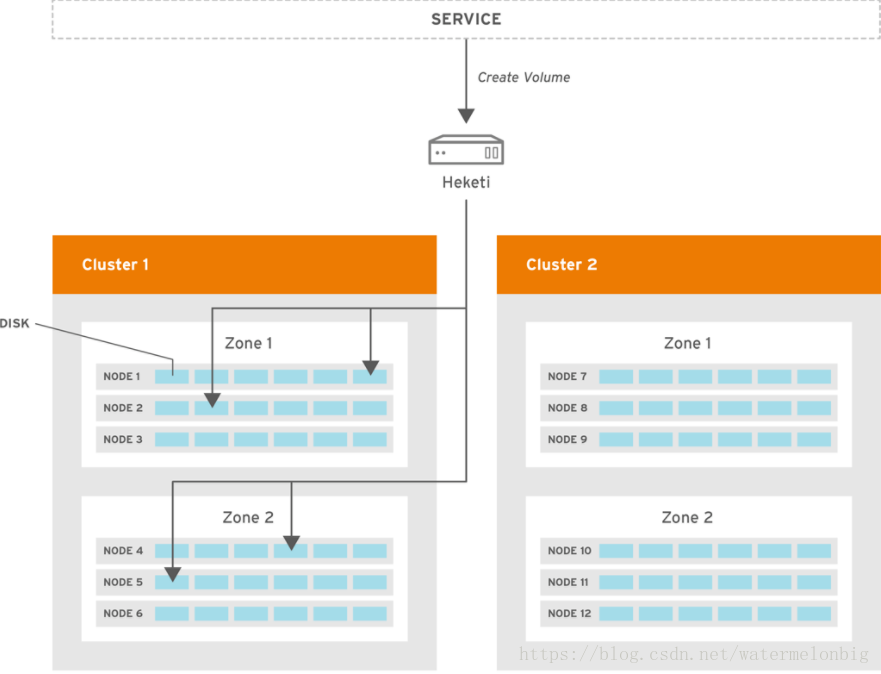

GlusterFS 是个开源的分布式文件系统,而 Heketi 在其上提供了 REST 形式的 API,二者协同为 Kubernetes 提供了存储卷的自动供给能力。Heketi还支持GlusterFS多集群管理。当一个集群中同时有多种规格、性能和容量特点的存储资源时,Heketi可以通过接入多个存储集群,在每个集群中又按资源特性划分出多个Zone来进行管理。

1)在k8s中部署Heketi服务前需要为其创建一个ServiceAccount账号

我们继续看一下前面例子中使用到的一些配置资源:

[k8s@kube-server deploy]$ pwd

/home/k8s/gluster-kubernetes/deploy

[k8s@kube-server deploy]$ cat ./kube-templates/heketi-service-account.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heketi-service-account
  labels:
    glusterfs: heketi-sa
    heketi: sa

[k8s@kube-server deploy]$ kubectl get sa | grep heketi

heketi-service-account 1 4h

2) Deploy the Heketi service with a Deployment

[k8s@kube-server deploy]$ kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

heketi 1 1 1 1 3h

[k8s@kube-server kube-templates]$ pwd

/home/k8s/gluster-kubernetes/deploy/kube-templates

[k8s@kube-server kube-templates]$ cat heketi-deployment.yaml

---
kind: Service
apiVersion: v1
metadata:
  name: heketi
  labels:
    glusterfs: heketi-service
    heketi: service
  annotations:
    description: Exposes Heketi Service
spec:
  selector:
    glusterfs: heketi-pod
  ports:
  - name: heketi
    port: 8080
    targetPort: 8080
---
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: heketi
  labels:
    glusterfs: heketi-deployment
    heketi: deployment
  annotations:
    description: Defines how to deploy Heketi
spec:
  replicas: 1
  template:
    metadata:
      name: heketi
      labels:
        glusterfs: heketi-pod
        heketi: pod
    spec:
      serviceAccountName: heketi-service-account
      containers:
      - image: heketi/heketi:dev
        imagePullPolicy: IfNotPresent
        name: heketi
        env:
        - name: HEKETI_USER_KEY
          value: ${HEKETI_USER_KEY}
        - name: HEKETI_ADMIN_KEY
          value: ${HEKETI_ADMIN_KEY}
        - name: HEKETI_EXECUTOR
          value: ${HEKETI_EXECUTOR}
        - name: HEKETI_FSTAB
          value: ${HEKETI_FSTAB}
        - name: HEKETI_SNAPSHOT_LIMIT
          value: '14'
        - name: HEKETI_KUBE_GLUSTER_DAEMONSET
          value: "y"
        - name: HEKETI_IGNORE_STALE_OPERATIONS
          value: "true"
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: db
          mountPath: "/var/lib/heketi"
        - name: config
          mountPath: /etc/heketi
        readinessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 3
          httpGet:
            path: "/hello"
            port: 8080
        livenessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 30
          httpGet:
            path: "/hello"
            port: 8080
      volumes:
      - name: db
        glusterfs:
          endpoints: heketi-storage-endpoints
          path: heketidbstorage
      - name: config
        secret:
          secretName: heketi-config-secret

Two volumes are defined: "db" is a volume backed by glusterfs, while "config" is backed by a secret.

Look at the contents of the secret that is used as a volume:

[k8s@kube-server kube-templates]$ kubectl describe secret heketi-config-secret

Name: heketi-config-secret

Namespace: default

Labels: glusterfs=heketi-config-secret

heketi=config-secret

Annotations: <none>

Type: Opaque

Data

====

heketi.json: 909 bytes

private_key: 0 bytes

topology.json: 1029 bytes

The secret contains three configuration files.

The secret is created by the deployment script:

[k8s@kube-server deploy]$ pwd

/home/k8s/gluster-kubernetes/deploy

[k8s@kube-server deploy]$ ls

gk-deploy heketi.json.template kube-templates ocp-templates topology.json

[k8s@kube-server deploy]$ grep heketi-config-secret gk-deploy

eval_output "${CLI} create secret generic heketi-config-secret --from-file=private_key=${SSH_KEYFILE} --from-file=./heketi.json --from-file=topology.json=${TOPOLOGY}"

eval_output "${CLI} label --overwrite secret heketi-config-secret glusterfs=heketi-config-secret heketi=config-secret"

[k8s@kube-server deploy]$

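3) Set up the GlusterFS cluster for Heketi

A topology.json configuration file defines the GlusterFS nodes and devices.

Heketi requires at least three nodes in a GlusterFS cluster. Under the hostnames field of topology.json, put the host name in manage and the IP address in storage; devices must be raw devices with no file system on them (multiple disks are supported). Heketi can then create the LVM PVs, VGs and LVs automatically.

The contents of topology.json were shown earlier and are not repeated here.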
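The requirements just listed can be checked before the file is handed to Heketi. The following is a sketch only: validate_topology is a hypothetical helper, and it verifies the node count, the presence of manage and storage entries, that storage entries parse as IP addresses, and that devices are listed. It cannot tell whether a device is really raw, which still has to be checked on the node itself.

```python
import ipaddress


def validate_topology(topology, min_nodes=3):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    clusters = topology.get("clusters", [])
    if not clusters:
        problems.append("no clusters defined")
    for c_idx, cluster in enumerate(clusters):
        nodes = cluster.get("nodes", [])
        if len(nodes) < min_nodes:
            problems.append(
                f"cluster {c_idx}: {len(nodes)} node(s), need at least {min_nodes}"
            )
        for n_idx, entry in enumerate(nodes):
            where = f"cluster {c_idx} node {n_idx}"
            hostnames = entry.get("node", {}).get("hostnames", {})
            if not hostnames.get("manage"):
                problems.append(f"{where}: manage host name missing")
            storage = hostnames.get("storage", [])
            if not storage:
                problems.append(f"{where}: storage address missing")
            for addr in storage:
                try:
                    ipaddress.ip_address(addr)
                except ValueError:
                    problems.append(f"{where}: storage entry {addr!r} is not an IP address")
            if not entry.get("devices"):
                problems.append(f"{where}: no devices listed")
    return problems


def make_node(host, ip, devices=("/dev/sdb",)):
    """Build one node entry in the topology.json layout."""
    return {
        "node": {"hostnames": {"manage": [host], "storage": [ip]}, "zone": 1},
        "devices": list(devices),
    }


GOOD = {"clusters": [{"nodes": [
    make_node("kube-node1", "172.16.10.101"),
    make_node("kube-node2", "172.16.10.102"),
    make_node("kube-node3", "172.16.10.103"),
]}]}

print(validate_topology(GOOD) or "topology looks consistent")
```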

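When glusterfs and heketi are created with the scripts and templates from the gluster-kubernetes project, no further manual steps are needed. If you create the services by hand, load the topology into Heketi as follows to build the GlusterFS cluster.

Log in to the Heketi container and run: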

# export HEKETI_CLI_SERVER=https://localhost:8080

# heketi-cli topology load --json=topology.json

Heketi has now created the GlusterFS cluster and has created an LVM PV and VG on the /dev/sdb disk of each GlusterFS node.

The Heketi topology information now shows detailed Node and Device data:

[k8s@kube-server deploy]$ heketi-cli topology info

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Volumes:

Name: vol_1b6e32efd9b6f07e2b056bed2ce6cc73

Size: 2

Id: 1b6e32efd9b6f07e2b056bed2ce6cc73

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Mount: 172.16.10.103:vol_1b6e32efd9b6f07e2b056bed2ce6cc73

Mount Options: backup-volfile-servers=172.16.10.102,172.16.10.101

Durability Type: replicate

Replica: 3

Snapshot: Disabled

Bricks:

Id: 6e016b1ed8e16b6a28839f1670a56d00

Path: /var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_6e016b1ed8e16b6a28839f1670a56d00/brick

Size (GiB): 2

Node: fc21262379ec3636e3eadcae15efcc94

Device: f8776d0d92102fc3e272f2ec899e5f18

Id: c7acfcecaf85aada98b0c0208798440f

Path: /var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_c7acfcecaf85aada98b0c0208798440f/brick

Size (GiB): 2

Node: 49ac6f56ef21408bcad7c7613cd40bd8

Device: 2f6b2f6c289a2f6bf48fbec59c0c2009

Id: e2acc8268f17f05e940ebe679dacfa3a

Path: /var/lib/heketi/mounts/vg_e100120226d5d9567ed0f92b9810236c/brick_e2acc8268f17f05e940ebe679dacfa3a/brick

Size (GiB): 2

Node: bdf51ae46025cd4fcf134f7be36c32de

Device: e100120226d5d9567ed0f92b9810236c

Name: heketidbstorage

Size: 2

Id: 42b01b9b08af23b751b2359fb161c004

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Mount: 172.16.10.103:heketidbstorage

Mount Options: backup-volfile-servers=172.16.10.102,172.16.10.101

Durability Type: replicate

Replica: 3

Snapshot: Disabled

Bricks:

Id: 2ea90ebd791a4230e927d233d1c8a7d1

Path: /var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_2ea90ebd791a4230e927d233d1c8a7d1/brick

Size (GiB): 2

Node: 49ac6f56ef21408bcad7c7613cd40bd8

Device: 2f6b2f6c289a2f6bf48fbec59c0c2009

Id: 9dc7238db3240146f12189dd28320227

Path: /var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_9dc7238db3240146f12189dd28320227/brick

Size (GiB): 2

Node: fc21262379ec3636e3eadcae15efcc94

Device: f8776d0d92102fc3e272f2ec899e5f18

Id: cb68cbf4e992abffc86ab3b5db58ef56

Path: /var/lib/heketi/mounts/vg_e100120226d5d9567ed0f92b9810236c/brick_cb68cbf4e992abffc86ab3b5db58ef56/brick

Size (GiB): 2

Node: bdf51ae46025cd4fcf134f7be36c32de

Device: e100120226d5d9567ed0f92b9810236c

Name: vol_900fb349e56af275f47d523d08fdfd6e

Size: 1

Id: 900fb349e56af275f47d523d08fdfd6e

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Mount: 172.16.10.103:vol_900fb349e56af275f47d523d08fdfd6e

Mount Options: backup-volfile-servers=172.16.10.102,172.16.10.101

Durability Type: replicate

Replica: 3

Snapshot: Disabled

Bricks:

Id: 4c98684d878ffe7dbfc1008336460eed

Path: /var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_4c98684d878ffe7dbfc1008336460eed/brick

Size (GiB): 1

Node: 49ac6f56ef21408bcad7c7613cd40bd8

Device: 2f6b2f6c289a2f6bf48fbec59c0c2009

Id: 7c82d03c88d73bb18d407a791a1053c2

Path: /var/lib/heketi/mounts/vg_e100120226d5d9567ed0f92b9810236c/brick_7c82d03c88d73bb18d407a791a1053c2/brick

Size (GiB): 1

Node: bdf51ae46025cd4fcf134f7be36c32de

Device: e100120226d5d9567ed0f92b9810236c

Id: 822266f7ad3cf62b1e45686265cf7268

Path: /var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_822266f7ad3cf62b1e45686265cf7268/brick

Size (GiB): 1

Node: fc21262379ec3636e3eadcae15efcc94

Device: f8776d0d92102fc3e272f2ec899e5f18

Nodes:

Node Id: 49ac6f56ef21408bcad7c7613cd40bd8

State: online

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Zone: 1

Management Hostname: kube-node3

Storage Hostname: 172.16.10.103

Devices:

Id:2f6b2f6c289a2f6bf48fbec59c0c2009 Name:/dev/sdb State:online Size (GiB):7 Used (GiB):5 Free (GiB):2

Bricks:

Id:2ea90ebd791a4230e927d233d1c8a7d1 Size (GiB):2 Path: /var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_2ea90ebd791a4230e927d233d1c8a7d1/brick

Id:4c98684d878ffe7dbfc1008336460eed Size (GiB):1 Path: /var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_4c98684d878ffe7dbfc1008336460eed/brick

Id:c7acfcecaf85aada98b0c0208798440f Size (GiB):2 Path: /var/lib/heketi/mounts/vg_2f6b2f6c289a2f6bf48fbec59c0c2009/brick_c7acfcecaf85aada98b0c0208798440f/brick

Node Id: bdf51ae46025cd4fcf134f7be36c32de

State: online

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Zone: 1

Management Hostname: kube-node2

Storage Hostname: 172.16.10.102

Devices:

Id:e100120226d5d9567ed0f92b9810236c Name:/dev/sdb State:online Size (GiB):7 Used (GiB):5 Free (GiB):2

Bricks:

Id:7c82d03c88d73bb18d407a791a1053c2 Size (GiB):1 Path: /var/lib/heketi/mounts/vg_e100120226d5d9567ed0f92b9810236c/brick_7c82d03c88d73bb18d407a791a1053c2/brick

Id:cb68cbf4e992abffc86ab3b5db58ef56 Size (GiB):2 Path: /var/lib/heketi/mounts/vg_e100120226d5d9567ed0f92b9810236c/brick_cb68cbf4e992abffc86ab3b5db58ef56/brick

Id:e2acc8268f17f05e940ebe679dacfa3a Size (GiB):2 Path: /var/lib/heketi/mounts/vg_e100120226d5d9567ed0f92b9810236c/brick_e2acc8268f17f05e940ebe679dacfa3a/brick

Node Id: fc21262379ec3636e3eadcae15efcc94

State: online

Cluster Id: ada54ffbeac15a5c9a7521e0c7d2f636

Zone: 1

Management Hostname: kube-node1

Storage Hostname: 172.16.10.101

Devices:

Id:f8776d0d92102fc3e272f2ec899e5f18 Name:/dev/sdb State:online Size (GiB):7 Used (GiB):5 Free (GiB):2

Bricks:

Id:6e016b1ed8e16b6a28839f1670a56d00 Size (GiB):2 Path: /var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_6e016b1ed8e16b6a28839f1670a56d00/brick

Id:822266f7ad3cf62b1e45686265cf7268 Size (GiB):1 Path: /var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_822266f7ad3cf62b1e45686265cf7268/brick

Id:9dc7238db3240146f12189dd28320227 Size (GiB):2 Path: /var/lib/heketi/mounts/vg_f8776d0d92102fc3e272f2ec899e5f18/brick_9dc7238db3240146f12189dd28320227/brick
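The Used and Free figures in the output above follow from the replica count: with Replica 3 on three nodes that each have one device, every volume places one full-size brick on every device. A small worked sketch makes the arithmetic explicit. It is an illustration only, with sizes in whole GiB as printed by heketi-cli, and it ignores the small metadata overhead visible in the raw REST figures.

```python
def replica_usage(volume_sizes_gib, device_size_gib, replica, device_count):
    """Return (used, free) GiB per device when each volume has one brick per device."""
    if replica != device_count:
        raise ValueError(
            "this sketch only covers one brick per device (replica == device count)"
        )
    used = sum(volume_sizes_gib.values())
    return used, device_size_gib - used


# Volume names and sizes taken from the topology info output above.
VOLUMES_GIB = {
    "vol_1b6e32efd9b6f07e2b056bed2ce6cc73": 2,
    "heketidbstorage": 2,
    "vol_900fb349e56af275f47d523d08fdfd6e": 1,
}

used, free = replica_usage(VOLUMES_GIB, device_size_gib=7, replica=3, device_count=3)
print(f"used per device: {used} GiB, free per device: {free} GiB")
```

The result agrees with the Used (GiB):5 and Free (GiB):2 values reported for each /dev/sdb.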

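Summary: using the Kubernetes dynamic provisioning mode, with a StorageClass and Heketi in front of GlusterFS-based shared storage, has at least two advantages over the static mode.

1. Administrators no longer have to pre-create a large number of PVs as storage resources.

2. In static mode, the capacity a user requests in a PVC cannot be guaranteed to match the capacity of a pre-provisioned PV, whereas a dynamically provisioned volume is created at the requested size. For this reason, from k8s v1.6 onward, users are advised to prefer the StorageClass-based dynamic provisioning mode for storage management.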