I. Background

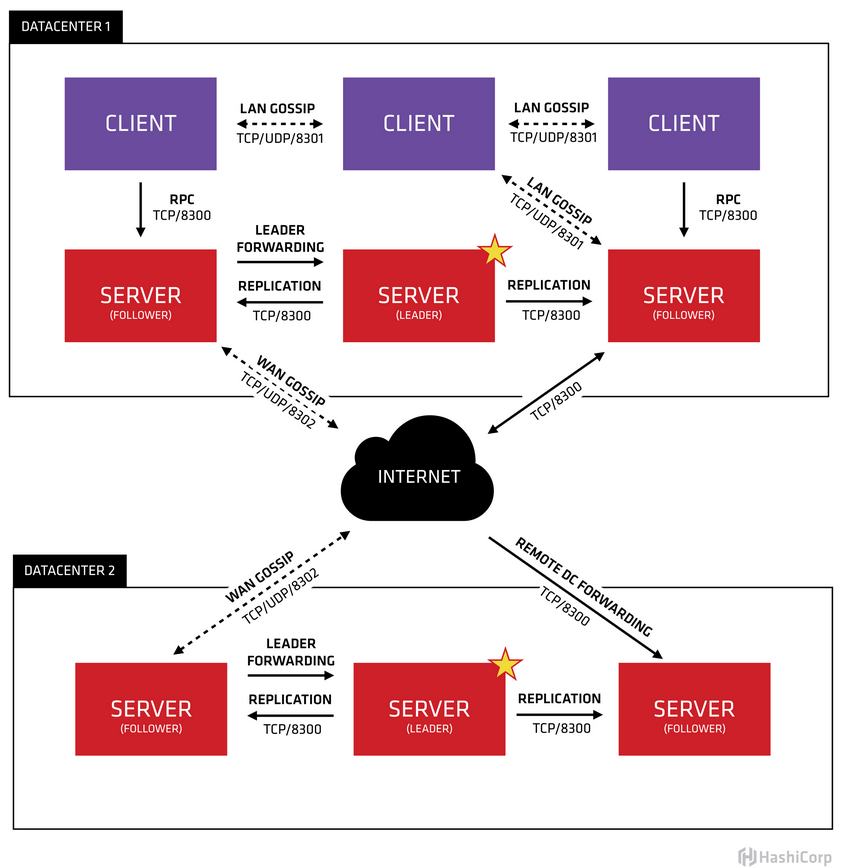

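A production environment runs many backend services. When Consul is used for service governance, every service registers with Consul, so if Consul goes down the business of the whole platform is affected. To keep the business stable, Consul must stay up and keep serving, which means it has to be highly available. According to the official documentation, this is achieved by deploying Consul as a cluster (multiple Consul instances).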

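II. Approach

The article "Consul Study Notes (2): Production Deployment" already followed the official cluster layout, at this scale: 2 consul servers and 2 clients, with the web services registered to the clients, which forward them to the servers. This layout has a drawback: if either consul server instance goes down, the whole cluster becomes unavailable, so high availability is not achieved.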

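According to the official documentation, the fault tolerance of a consul server cluster (the number of instances that may fail) is determined by its quorum size: a cluster of N servers needs a quorum of ⌊N/2⌋ + 1 servers to keep working, so it tolerates N − (⌊N/2⌋ + 1) failed servers. With 2 servers the quorum is 2, which is why the 2-server layout above cannot survive losing either server.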
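Consul servers reach consensus with Raft, where the quorum is a strict majority of the servers, ⌊N/2⌋ + 1. The fault-tolerance table in the official docs follows from that arithmetic alone; the small Python sketch below reproduces it (the function names are my own, not part of any Consul API).

```python
# Raft quorum arithmetic behind Consul's server fault-tolerance table.


def quorum_size(servers: int) -> int:
    """Minimum number of servers that must be up: a strict majority."""
    return servers // 2 + 1


def fault_tolerance(servers: int) -> int:
    """How many servers may fail while a quorum is still reachable."""
    return servers - quorum_size(servers)


if __name__ == "__main__":
    print("Servers  Quorum  Fault tolerance")
    for n in range(1, 8):
        print(f"{n:>7}  {quorum_size(n):>6}  {fault_tolerance(n):>15}")
```

For N = 1 to 7 the quorum is 1, 2, 2, 3, 3, 4, 4 and the fault tolerance 0, 0, 1, 1, 2, 2, 3. So a 2-server cluster tolerates no failure, 3 servers tolerate 1, and a 4th server adds no fault tolerance over 3, which is why odd server counts are preferred.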

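As covered in the previous article, when building a consul server cluster one instance must be started with `-bootstrap-expect` (or `-bootstrap`) so that it runs in bootstrap mode. This is required because: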

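- An instance started in bootstrap mode can elect itself leader automatically. If no instance is in bootstrap mode, no initial leader can be chosen and the cluster cannot form;

- While a cluster is being built, there must be exactly one consul server instance in bootstrap mode.

- The two points above hold only when `bootstrap_expect` is not set or is set to 1. If the number of consul servers is known in advance, every consul server can instead set `bootstrap_expect` to that number.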

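The officially recommended deployment is:

Scale: 3 to 5 consul servers per datacenter, and any number of consul clients. The server count is kept in this range to balance fault tolerance against performance: every write must be replicated to a quorum of servers, so consensus gets slower as more servers are added.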

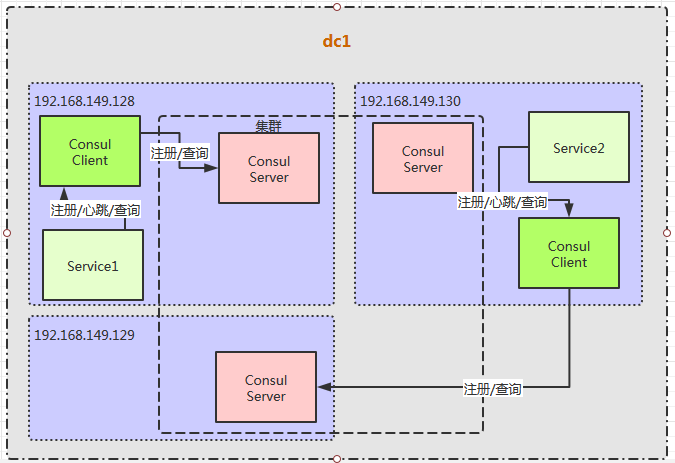

Based on the above, the initial cluster in this article consists of 3 consul servers and 2 clients, with the web services registered to the clients:

- consul servers: 192.168.149.128, 192.168.149.130, 192.168.149.129
- consul clients: 192.168.149.128, 192.168.149.130 (both clients register a web service)
- 192.168.149.129 is the leader by default.

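III. Deployment

The deployment mainly follows "Consul Study Notes (2): Production Deployment"; this section only gives the configuration, screenshots of the result, and the problems encountered during deployment.

1. Configuration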

1) consul server

192.168.149.128

```json
{
  "datacenter": "test-datacenter1",
  "data_dir": "/opt/consul/consul/data/",
  "log_file": "/opt/consul/consul/log/",
  "log_level": "INFO",
  "bind_addr": "192.168.149.128",
  "client_addr": "0.0.0.0",
  "node_name": "consul server1",
  "ui": true,
  "server": true,
  "retry_join": ["192.168.149.129:8301"]
}
```

`retry_join` makes this server join the consul server on 129 by default to form the cluster.

192.168.149.129

```json
{
  "datacenter": "test-datacenter1",
  "data_dir": "/opt/consul/consul/data/",
  "log_file": "/opt/consul/consul/log/",
  "log_level": "INFO",
  "bind_addr": "192.168.149.129",
  "client_addr": "0.0.0.0",
  "node_name": "consul server2",
  "ui": true,
  "server": true,
  "bootstrap_expect": 1
}
```

`"bootstrap_expect": 1` makes 129 the leader by default.

192.168.149.130

```json
{
  "datacenter": "test-datacenter1",
  "data_dir": "/opt/consul/consul/data/",
  "log_file": "/opt/consul/consul/log/",
  "log_level": "INFO",
  "bind_addr": "192.168.149.130",
  "client_addr": "0.0.0.0",
  "node_name": "consul server3",
  "ui": true,
  "server": true,
  "retry_join": ["192.168.149.129:8301"]
}
```

As on 128, `retry_join` makes this server join the consul server on 129 by default to form the cluster.

2) consul client

192.168.149.128

```json
{
  "datacenter": "test-datacenter1",
  "data_dir": "/opt/consul/client/data/",
  "log_file": "/opt/consul/client/log/",
  "log_level": "INFO",
  "bind_addr": "192.168.149.128",
  "client_addr": "0.0.0.0",
  "node_name": "consul client1on128",
  "retry_join": ["192.168.149.128:8301"],
  "ports": {
    "dns": 8703,
    "http": 8700,
    "serf_wan": 8702,
    "serf_lan": 8701,
    "server": 8704
  }
}
```

192.168.149.130

```json
{
  "datacenter": "test-datacenter1",
  "data_dir": "/opt/consul/client/data/",
  "log_file": "/opt/consul/client/log/",
  "log_level": "INFO",
  "bind_addr": "192.168.149.130",
  "client_addr": "0.0.0.0",
  "node_name": "consul client1on130",
  "retry_join": ["192.168.149.129:8301"],
  "ports": {
    "dns": 8703,
    "http": 8700,
    "serf_wan": 8702,
    "serf_lan": 8701
  }
}
```
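On 128 and 130 a consul server and a consul client run on the same host, which is why the client configs override `ports` and use a separate `data_dir`. Below is a minimal Python sketch for checking a server/client pair for collisions. It assumes Consul's default ports (DNS 8600, HTTP 8500, Serf LAN 8301, Serf WAN 8302, server RPC 8300) and, per my reading of the Consul port documentation, that client agents do not listen on the server RPC and Serf WAN ports; `find_conflicts` is a hypothetical helper, not part of Consul.

```python
# Check that a consul server and a consul client sharing one host
# do not collide on listening ports or data_dir.

# Consul's default ports, overridden by the "ports" block of a config.
DEFAULT_PORTS = {"dns": 8600, "http": 8500, "serf_lan": 8301,
                 "serf_wan": 8302, "server": 8300}
# Assumption: only server agents listen on these two ports.
SERVER_ONLY = {"server", "serf_wan"}


def listening_ports(cfg: dict) -> dict:
    """Return {port_name: port} for the ports this agent would listen on."""
    ports = {**DEFAULT_PORTS, **cfg.get("ports", {})}
    if not cfg.get("server", False):
        ports = {k: v for k, v in ports.items() if k not in SERVER_ONLY}
    return ports


def find_conflicts(a: dict, b: dict) -> list:
    """List human-readable conflicts between two agent configs on one host."""
    problems = []
    shared = set(listening_ports(a).values()) & set(listening_ports(b).values())
    for port in sorted(shared):
        problems.append(f"port {port} used by both agents")
    if a.get("data_dir") == b.get("data_dir"):
        problems.append(f"data_dir {a.get('data_dir')} shared")
    return problems
```

With the configs above, both hosts report no conflicts. Dropping the client's `ports` block would make it collide with the server on 8301, 8500 and 8600.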

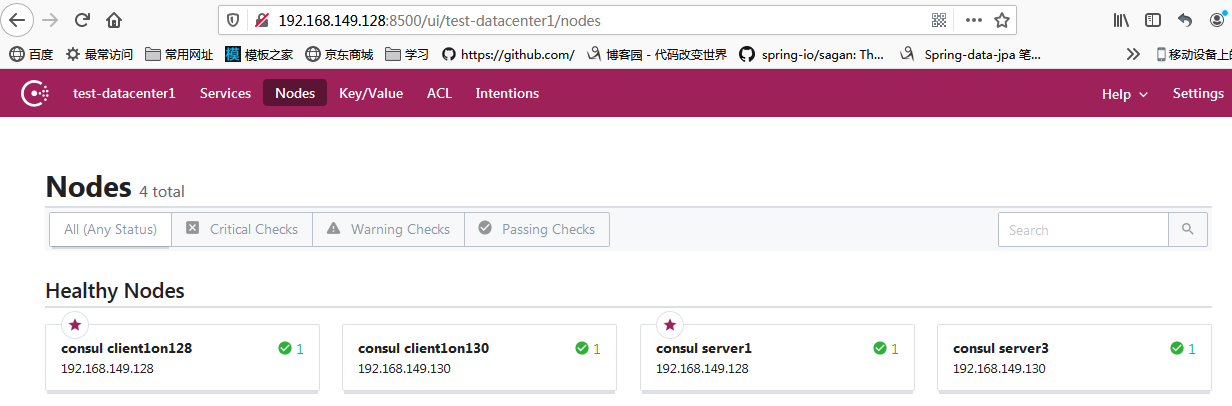

2. Result

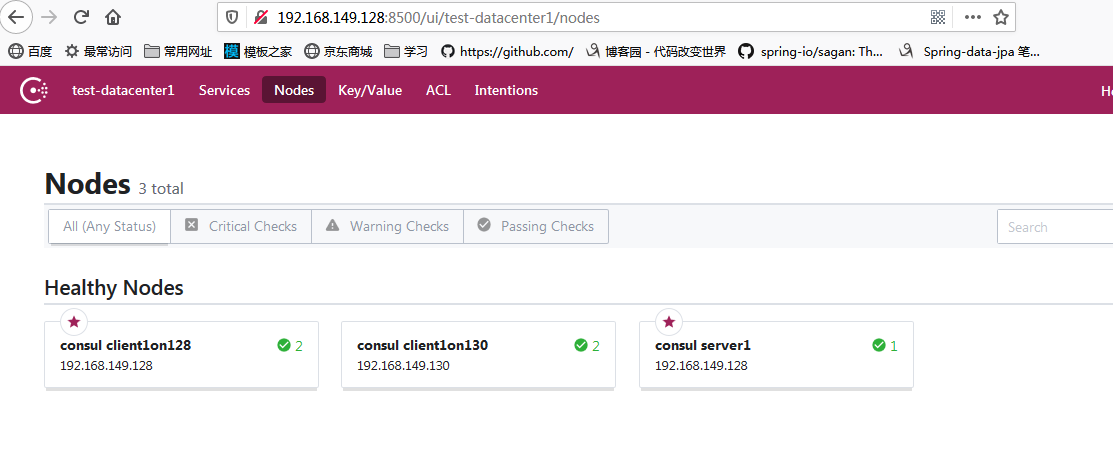

Start all consul servers and consul clients with `./consul agent -config-dir=conf`.

Open the UI on 128, 129 and 130 to check the node status.

As shown, 129 has been elected leader.

1) Scenario: 129 goes down

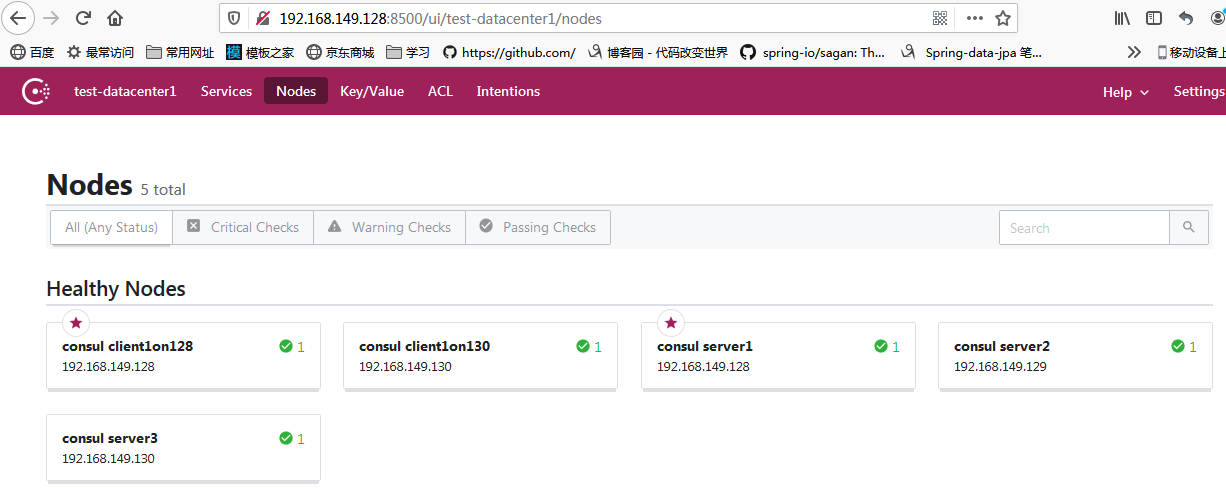

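Press Ctrl+C in the terminal where 129 was started to stop it, then check the node status in the UI on 128.

128 is now elected leader. The consul client on 130 originally joined the consul servers directly through 129, yet even with 129 down, the consul server on 128 still shows the 130 client node as alive. So when a consul node joins the cluster through any one consul server instance, every consul server instance learns about that client, because membership spreads through the LAN gossip pool.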
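To confirm from any server that the client which joined through 129 is still known, one option is the agent HTTP API endpoint `/v1/agent/members`, which lists the LAN members as seen by that agent. The sketch below assumes the servers' default HTTP port 8500 (not overridden in their configs), that `Tags.role` is `consul` for servers and `node` for clients, and that `Status` uses Serf's codes (1 alive, 2 leaving, 3 left, 4 failed); `fetch_members` and `summarize` are hypothetical helper names.

```python
# Summarize GET /v1/agent/members from an agent's HTTP API.
import json
import urllib.request

# Serf member status codes as reported in the "Status" field (assumed).
STATUS = {0: "none", 1: "alive", 2: "leaving", 3: "left", 4: "failed"}


def fetch_members(http_addr: str) -> list:
    """Fetch the LAN members known to the agent at http_addr (host:port)."""
    url = f"http://{http_addr}/v1/agent/members"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)


def summarize(members: list) -> dict:
    """Map node name -> (role, status); role tag "consul" marks servers."""
    out = {}
    for m in members:
        tags = m.get("Tags") or {}
        role = "server" if tags.get("role") == "consul" else "client"
        out[m["Name"]] = (role, STATUS.get(m.get("Status"), "unknown"))
    return out
```

For example, `summarize(fetch_members("192.168.149.128:8500"))` run after 129 is stopped should still list `consul client1on130` as alive, matching what the UI shows.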

2) Restoring the 129 consul server

129 rejoins the cluster automatically, and the leader does not change.

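3) In practice, consul servers are often installed in batch, and all instances are expected to form a cluster automatically. As shown above, exactly one of the consul server instances forming the cluster must start in bootstrap mode. For this scenario, the proposed deployment is:

a) Start every batch-installed consul server in bootstrap mode;

b) After each instance starts, run a script that asks another service whether other consul instances exist. If they do, stop the current instance, restart it in non-bootstrap mode, and join it to all the other instances through `retry_join`, i.e. add the following to its configuration:

`"retry_join": ["x.x.x.x:xx", "x.x.x.x:xx"]`

where `x.x.x.x` is the IP of another instance and `xx` is that instance's `serf_lan` port.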
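Steps a) and b) amount to a small decision: if no other instance is found, use bootstrap mode; otherwise join the others. Below is a minimal sketch of the config-generation part. It assumes the peer IPs have already been obtained from the lookup service mentioned in step b), and that every server uses the default `serf_lan` port 8301 as in this article's configs. The lookup service and the helper names `server_config` and `to_json` are hypothetical.

```python
import json

SERF_LAN_PORT = 8301  # Consul's default serf_lan port, as used in this article


def server_config(bind_addr: str, node_name: str, peers: list) -> dict:
    """Build a consul server config for one batch-installed instance (hypothetical helper).

    peers: IPs of consul server instances reported by the lookup service
    from step b); the list may include this instance's own IP.
    """
    cfg = {
        "datacenter": "test-datacenter1",
        "data_dir": "/opt/consul/consul/data/",
        "log_level": "INFO",
        "bind_addr": bind_addr,
        "client_addr": "0.0.0.0",
        "node_name": node_name,
        "ui": True,
        "server": True,
    }
    others = sorted(ip for ip in set(peers) if ip != bind_addr)
    if not others:
        # Step a): no other instance yet, so start in bootstrap mode.
        cfg["bootstrap_expect"] = 1
    else:
        # Step b): other instances exist, so drop bootstrap mode and join them.
        cfg["retry_join"] = [f"{ip}:{SERF_LAN_PORT}" for ip in others]
    return cfg


def to_json(cfg: dict) -> str:
    """Serialize as a Consul JSON config file (JSON allows no comments)."""
    return json.dumps(cfg, indent=2)
```

The output of `to_json` can be written into the `conf` directory before the agent is restarted with `./consul agent -config-dir=conf`.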

Testing confirmed that services registered on a client are eventually synchronized to every consul server, and that all server and client nodes are visible in the consul server UI.

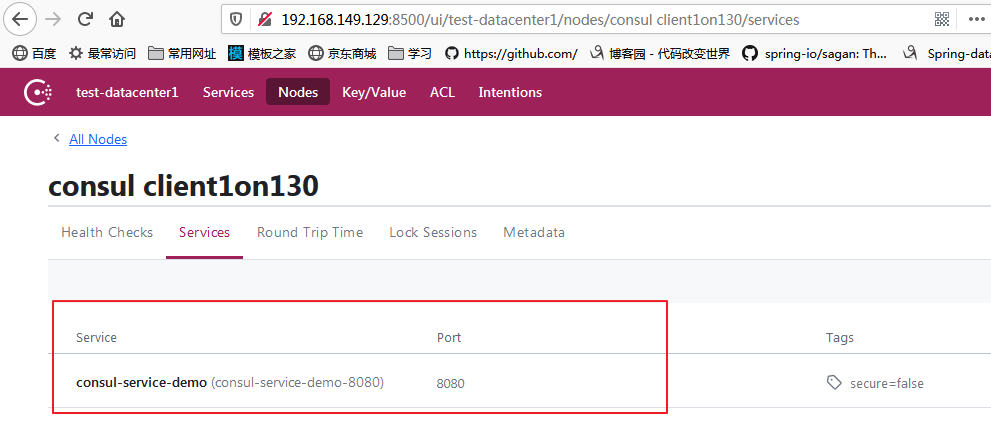

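3. Service Registration

In the deployment above, a microservice runs on each of 128 and 130, and each one registers with the consul client on its own host. Opening any consul web UI shows the registered services as follows: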
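A service can also be registered with the local client through the agent HTTP API (`PUT /v1/agent/service/register`). Below is a minimal sketch, assuming the microservice runs on the same host as the client, whose HTTP port is 8700 per its `ports` block. The service name `web`, port 8080 and the `/health` endpoint are illustrative placeholders, and the helper names are hypothetical.

```python
# Register a service with the local consul client via the agent HTTP API.
import json
import urllib.request


def build_register_request(agent: str, name: str, address: str,
                           port: int) -> urllib.request.Request:
    """Build the PUT /v1/agent/service/register request without sending it."""
    payload = {
        "ID": f"{name}-{address}",
        "Name": name,
        "Address": address,
        "Port": port,
        # Placeholder health endpoint; Consul polls it every 10 seconds.
        "Check": {"HTTP": f"http://{address}:{port}/health", "Interval": "10s"},
    }
    return urllib.request.Request(
        f"http://{agent}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def register(agent: str, name: str, address: str, port: int) -> int:
    """Send the registration and return the HTTP status code."""
    req = build_register_request(agent, name, address, port)
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status
```

On 128, `register("127.0.0.1:8700", "web", "192.168.149.128", 8080)` would send the request. The request builder is split out so the payload can be inspected without a running agent.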

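IV. Issues

1. In the high-availability failure test, stopping two of the three servers one after another with Ctrl+C gives different results for the first and the second stop.

(1) Stopping 129

The consul server on 128 logs the following: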

```text
2020-07-20T08:51:05.860-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Failed ping: consul server2 (timeout reached)
2020-07-20T08:51:06.360-0400 [INFO] agent.server.memberlist.lan: memberlist: Suspect consul server2 has failed, no acks received
2020-07-20T08:51:06.528-0400 [DEBUG] agent.server.memberlist.wan: memberlist: Initiating push/pull sync with: consul server3.test-datacenter1 192.168.149.130:8302
2020-07-20T08:51:08.491-0400 [DEBUG] agent.server.raft: accepted connection: local-address=192.168.149.128:8300 remote-address=192.168.149.130:34859
2020-07-20T08:51:08.492-0400 [WARN] agent.server.raft: rejecting vote request since we have a leader: from=192.168.149.130:8300 leader=192.168.149.129:8300
2020-07-20T08:51:08.575-0400 [INFO] agent.server.serf.lan: serf: EventMemberFailed: consul server2 192.168.149.129
2020-07-20T08:51:08.601-0400 [INFO] agent.server: Removing LAN server: server="consul server2 (Addr: tcp/192.168.149.129:8300) (DC: test-datacenter1)"
2020-07-20T08:51:08.613-0400 [INFO] agent.server.memberlist.lan: memberlist: Marking consul server2 as failed, suspect timeout reached (2 peer confirmations)
2020-07-20T08:51:08.860-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Failed ping: consul server2 (timeout reached)
2020-07-20T08:51:09.491-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Initiating push/pull sync with: consul client1on130 192.168.149.130:8701
2020-07-20T08:51:10.359-0400 [INFO] agent.server.memberlist.lan: memberlist: Suspect consul server2 has failed, no acks received
2020-07-20T08:51:13.441-0400 [WARN] agent.server.raft: heartbeat timeout reached, starting election: last-leader=192.168.149.129:8300
2020-07-20T08:51:13.441-0400 [INFO] agent.server.raft: entering candidate state: node="Node at 192.168.149.128:8300 [Candidate]" term=3
2020-07-20T08:51:13.474-0400 [WARN] agent.server.raft: unable to get address for sever, using fallback address: id=c92b2e81-c03e-f6d7-9eaf-7d5000141052 fallback=192.168.149.129:8300 error="Could not find address for server id c92b2e81-c03e-f6d7-9eaf-7d5000141052"
2020-07-20T08:51:13.475-0400 [DEBUG] agent.server.raft: votes: needed=2
2020-07-20T08:51:13.475-0400 [DEBUG] agent.server.raft: vote granted: from=a5c8f427-d4f7-571f-1057-1ec5d1981713 term=3 tally=1
2020-07-20T08:51:13.475-0400 [ERROR] agent.server.raft: failed to make requestVote RPC: target="{Voter c92b2e81-c03e-f6d7-9eaf-7d5000141052 192.168.149.129:8300}" error="dial tcp 192.168.149.128:0->192.168.149.129:8300: connect: connection refused"
2020-07-20T08:51:16.014-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Stream connection from=192.168.149.128:56926
2020-07-20T08:51:16.975-0400 [DEBUG] agent.server.raft: lost leadership because received a requestVote with a newer term
2020-07-20T08:51:16.981-0400 [INFO] agent.server.raft: entering follower state: follower="Node at 192.168.149.128:8300 [Follower]" leader=
2020-07-20T08:51:16.996-0400 [DEBUG] agent.server.raft: accepted connection: local-address=192.168.149.128:8300 remote-address=192.168.149.130:33381
2020-07-20T08:51:17.132-0400 [DEBUG] agent.server.serf.lan: serf: messageUserEventType: consul:new-leader
2020-07-20T08:51:17.132-0400 [INFO] agent.server: New leader elected: payload="consul server3"
```

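The log shows that 129 was removed and a new election was held, in which 130 was elected the new leader.

This is because, under Consul's consensus rule, a cluster of 3 consul servers tolerates one failed server: the other two still form a quorum and keep working.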

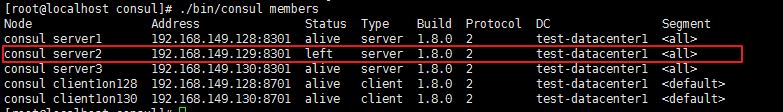

Running `./consul members` on 128 at this point shows the member status:

192.168.149.129 is clearly in the `left` state (it has left the cluster).

(2) Then stopping 130

The log of the consul server on 128 shows the following:

```text
2020-07-20T08:56:36.860-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Failed ping: consul server3 (timeout reached)
2020-07-20T08:56:37.361-0400 [INFO] agent.server.memberlist.lan: memberlist: Suspect consul server3 has failed, no acks received
2020-07-20T08:56:39.561-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Initiating push/pull sync with: consul client1on130 192.168.149.130:8701
2020-07-20T08:56:39.864-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Failed ping: consul server3 (timeout reached)
2020-07-20T08:56:40.363-0400 [INFO] agent.server.memberlist.lan: memberlist: Suspect consul server3 has failed, no acks received
2020-07-20T08:56:41.362-0400 [INFO] agent.server.memberlist.lan: memberlist: Marking consul server3 as failed, suspect timeout reached (2 peer confirmations)
2020-07-20T08:56:41.362-0400 [INFO] agent.server.serf.lan: serf: EventMemberFailed: consul server3 192.168.149.130
2020-07-20T08:56:41.363-0400 [INFO] agent.server: Removing LAN server: server="consul server3 (Addr: tcp/192.168.149.130:8300) (DC: test-datacenter1)"
2020-07-20T08:56:41.862-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Failed ping: consul server3 (timeout reached)
2020-07-20T08:56:43.362-0400 [INFO] agent.server.memberlist.lan: memberlist: Suspect consul server3 has failed, no acks received
2020-07-20T08:56:45.165-0400 [WARN] agent.server.raft: heartbeat timeout reached, starting election: last-leader=192.168.149.130:8300
2020-07-20T08:56:45.165-0400 [INFO] agent.server.raft: entering candidate state: node="Node at 192.168.149.128:8300 [Candidate]" term=5
2020-07-20T08:56:45.172-0400 [DEBUG] agent.server.raft: votes: needed=2
2020-07-20T08:56:45.173-0400 [DEBUG] agent.server.raft: vote granted: from=a5c8f427-d4f7-571f-1057-1ec5d1981713 term=5 tally=1
2020-07-20T08:56:45.173-0400 [WARN] agent.server.raft: unable to get address for sever, using fallback address: id=105bc18f-aba5-a62c-ae2d-e5b2df9c007d fallback=192.168.149.130:8300 error="Could not find address for server id 105bc18f-aba5-a62c-ae2d-e5b2df9c007d"
2020-07-20T08:56:45.173-0400 [ERROR] agent.server.raft: failed to make requestVote RPC: target="{Voter 105bc18f-aba5-a62c-ae2d-e5b2df9c007d 192.168.149.130:8300}" error=EOF
2020-07-20T08:56:45.362-0400 [DEBUG] agent.server.memberlist.wan: memberlist: Failed ping: consul server3.test-datacenter1 (timeout reached)
2020-07-20T08:56:46.090-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Stream connection from=192.168.149.128:57068
2020-07-20T08:56:47.361-0400 [INFO] agent.server.memberlist.wan: memberlist: Suspect consul server3.test-datacenter1 has failed, no acks received
2020-07-20T08:56:47.485-0400 [INFO] agent.server.serf.lan: serf: attempting reconnect to consul server3 192.168.149.130:8301
2020-07-20T08:56:47.490-0400 [DEBUG] agent.server.memberlist.lan: memberlist: Failed to join 192.168.149.130: dial tcp 192.168.149.130:8301: connect: connection refused
2020-07-20T08:56:50.258-0400 [WARN] agent.server.raft: Election timeout reached, restarting election
2020-07-20T08:56:50.258-0400 [INFO] agent.server.raft: entering candidate state: node="Node at 192.168.149.128:8300 [Candidate]" term=6
2020-07-20T08:56:50.261-0400 [DEBUG] agent.server.raft: votes: needed=2
2020-07-20T08:56:50.261-0400 [DEBUG] agent.server.raft: vote granted: from=a5c8f427-d4f7-571f-1057-1ec5d1981713 term=6 tally=1
2020-07-20T08:56:50.261-0400 [WARN] agent.server.raft: unable to get address for sever, using fallback address: id=105bc18f-aba5-a62c-ae2d-e5b2df9c007d fallback=192.168.149.130:8300 error="Could not find address for server id 105bc18f-aba5-a62c-ae2d-e5b2df9c007d"
2020-07-20T08:56:50.261-0400 [ERROR] agent.server.raft: failed to make requestVote RPC: target="{Voter 105bc18f-aba5-a62c-ae2d-e5b2df9c007d 192.168.149.130:8300}" error="dial tcp 192.168.149.128:0->192.168.149.130:8300: connect: connection refused"
```

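Although 130 has been removed from the LAN gossip pool, 128, once in the candidate state, still needs 2 votes (`votes: needed=2`): its own and one from 130. In other words, 130 is still counted as a voter that must cast a valid vote, so 128 cannot become leader without it.

Checking the member status with `./consul members` at this point:

This shows that 130 is only marked `failed`. If the 130 consul server is started again now, the election succeeds.

Usually, though, we want the last remaining consul server to still be able to elect a leader. For that, 130 has to be stopped with the `leave` command (`./consul leave`) rather than Ctrl+C.

The log of the consul server on 128 then shows: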

```text
2020-07-20T09:27:12.153-0400 [DEBUG] agent.server.serf.lan: serf: messageLeaveType: consul server3
2020-07-20T09:27:12.161-0400 [DEBUG] agent.server.serf.lan: serf: messageLeaveType: consul server3
2020-07-20T09:27:12.163-0400 [DEBUG] agent.server.serf.lan: serf: messageLeaveType: consul server3
2020-07-20T09:27:12.556-0400 [INFO] agent.server.serf.lan: serf: EventMemberLeave: consul server3 192.168.149.130
2020-07-20T09:27:12.556-0400 [INFO] agent.server: Removing LAN server: server="consul server3 (Addr: tcp/192.168.149.130:8300) (DC: test-datacenter1)"
2020-07-20T09:27:12.557-0400 [INFO] agent.server: removing server by ID: id=105bc18f-aba5-a62c-ae2d-e5b2df9c007d
2020-07-20T09:27:12.557-0400 [INFO] agent.server.raft: updating configuration: command=RemoveServer server-id=105bc18f-aba5-a62c-ae2d-e5b2df9c007d server-addr= servers="[{Suffrage:Voter ID:a5c8f427-d4f7-571f-1057-1ec5d1981713 Address:192.168.149.128:8300}]"
2020-07-20T09:27:12.564-0400 [WARN] agent.server.raft: unable to get address for sever, using fallback address: id=105bc18f-aba5-a62c-ae2d-e5b2df9c007d fallback=192.168.149.130:8300 error="Could not find address for server id 105bc18f-aba5-a62c-ae2d-e5b2df9c007d"
2020-07-20T09:27:12.564-0400 [INFO] agent.server.raft: removed peer, stopping replication: peer=105bc18f-aba5-a62c-ae2d-e5b2df9c007d last-index=359
2020-07-20T09:27:12.564-0400 [INFO] agent.server: deregistering member: member="consul server3" reason=left
2020-07-20T09:27:12.564-0400 [INFO] agent.server.raft: aborting pipeline replication: peer="{Voter 105bc18f-aba5-a62c-ae2d-e5b2df9c007d 192.168.149.130:8300}"
```

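The UI shows:

So once the number of consul server instances still running has dropped to the Quorum Size in the official Deployment Table, any further server must exit with `leave`. It then leaves the cluster gracefully and is removed from the peer set, the quorum size shrinks accordingly, and the cluster remains usable.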
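The difference between a `failed` and a `left` server can be captured in a toy model: a failed server stays in the Raft peer set and still counts toward the quorum, while `leave` removes it. This Python sketch only illustrates that rule; it is not Consul's implementation, and `PeerSet` is a hypothetical class. It starts from a peer set of 128 and 130, matching the logs above, where 128 requests a vote only from 130 with `votes: needed=2` and the leave log shows 128 as the only remaining voter.

```python
# Toy model of the Raft peer set: "failed" servers keep their vote slot,
# servers that ran `consul leave` are removed, which shrinks the quorum.


class PeerSet:
    def __init__(self, voters):
        self.voters = set(voters)  # Raft configuration (peer set)
        self.alive = set(voters)   # servers currently running

    def fail(self, node):
        """Ctrl+C or crash: the node stops but stays a voter."""
        self.alive.discard(node)

    def leave(self, node):
        """`consul leave`: the node stops and is removed from the peer set."""
        self.alive.discard(node)
        self.voters.discard(node)

    def votes_needed(self) -> int:
        """Strict majority of the current voters."""
        return len(self.voters) // 2 + 1

    def can_elect(self) -> bool:
        """A leader can be elected only if enough voters are running."""
        return len(self.alive & self.voters) >= self.votes_needed()
```

Applied to this section: with 130 failed, 128 alone cannot reach the 2 votes it needs; after `leave`, the peer set is just 128, the quorum drops to 1, and 128 can elect itself.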

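2. Forming a cluster automatically when the consul servers start

There are two ways:

1) Exactly one of the starting consul servers is started with `bootstrap=true` or `bootstrap_expect=1`;

2) All consul servers use the same `bootstrap_expect` value, equal to the number of consul servers.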
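The two ways can be checked before the servers are started. Below is a minimal sketch, assuming the server configs are loaded as Python dicts, following this article's rules: either exactly one server has `bootstrap` or `bootstrap_expect: 1` and the others set neither, or all servers set the same `bootstrap_expect` equal to the server count. `check_bootstrap` is a hypothetical helper.

```python
# Validate a set of consul server configs against the two ways of
# forming a single cluster automatically at startup.


def uses_bootstrap(cfg: dict) -> bool:
    """True if this server would start in bootstrap mode."""
    return bool(cfg.get("bootstrap")) or cfg.get("bootstrap_expect") == 1


def check_bootstrap(configs: list) -> str:
    """Return "method 1" or "method 2", or raise ValueError."""
    n = len(configs)
    expects = [c.get("bootstrap_expect") for c in configs]
    # Way 2): same bootstrap_expect everywhere, equal to the server count.
    if n > 1 and all(e == n for e in expects) and not any(c.get("bootstrap") for c in configs):
        return "method 2"
    # Way 1): exactly one server in bootstrap mode, the rest set neither option.
    boot = [c for c in configs if uses_bootstrap(c)]
    rest = [c for c in configs if not uses_bootstrap(c)]
    if len(boot) == 1 and all("bootstrap_expect" not in c and not c.get("bootstrap") for c in rest):
        return "method 1"
    raise ValueError(
        f"servers will not form a single cluster: bootstrap_expect={expects}, "
        f"{len(boot)} in bootstrap mode"
    )
```

With the three server configs from section III, the check returns `method 1`. Setting `bootstrap_expect: 1` on all three servers is rejected, because each would bootstrap its own single-node cluster.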