Such is life: another round of research begins. This time the higher-ups want to see how MySQL Fabric sharding performs. Fine, let's get to it.

1 What is MySQL Fabric

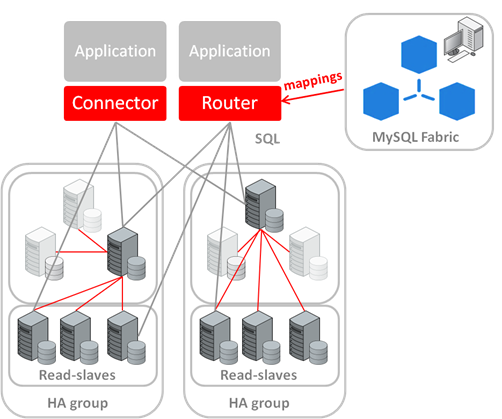

其实就是一个Python进程和应用端的Connector的组合。来一张官方图:

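See? Fabric starts a Python daemon process that acts as an XML-RPC server. The application-side Connector automatically connects to this server and fetches the information it needs to decide which MySQL server to talk to. The Fabric server also monitors each HA group and automatically switches master and slave when something goes wrong. With a design like that, it would be a miracle if the performance were any good!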

2 Prerequisites

- MySQL 5.6 or later.

- A separate MySQL instance to serve as the Fabric server's backing store.

- Every managed MySQL server must have gtid_mode (GTID), log_bin (binary logging), and log_slave_updates enabled, and the server_id values must not collide.

- Python 2.6 or later.

- For Java programs, Connector/J 5.1.27 or later.
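
The server-side requirements in the list above are easy to get wrong on one instance out of four, so here is a minimal sketch of a sanity check. It assumes you have already collected each server's system variables (for example from SHOW GLOBAL VARIABLES) into a map; the class and method names are made up for illustration and are not part of Fabric or Connector/J.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical helper: checks the settings Fabric expects on each managed server. */
class FabricPrereqCheck {

    /**
     * @param vars system variables of one server (lowercase names, values as MySQL reports them)
     * @return human-readable problems; empty if the server looks fine
     */
    static List<String> problems(Map<String, String> vars) {
        List<String> out = new ArrayList<>();
        requireOn(vars, "gtid_mode", out);
        requireOn(vars, "log_bin", out);
        requireOn(vars, "log_slave_updates", out);
        return out;
    }

    /** Records a problem unless the variable is reported as ON (or 1). */
    private static void requireOn(Map<String, String> vars, String name, List<String> out) {
        String value = vars.get(name);
        if (value == null || !(value.equalsIgnoreCase("ON") || value.equals("1"))) {
            out.add(name + " must be ON, found: " + value);
        }
    }

    /** Returns every server_id that appears on more than one server. */
    static Set<String> duplicateServerIds(List<Map<String, String>> servers) {
        Set<String> seen = new HashSet<>();
        Set<String> dupes = new HashSet<>();
        for (Map<String, String> vars : servers) {
            String id = vars.get("server_id");
            if (id != null && !seen.add(id)) {
                dupes.add(id);
            }
        }
        return dupes;
    }
}
```

Running problems() against each instance and duplicateServerIds() across all of them before adding servers to a group should catch the most common setup mistakes.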

3 Install Fabric

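The official docs say that starting with 1.6, Fabric is split out of MySQL Utilities. Annoyingly, after digging through the forums for quite a while, I found that there is no 1.6 package at all. The latest release is 1.5.6, so just be a good kid and download and install MySQL Utilities 1.5.6.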

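Here is my fabric.cnf to start with. It configures the database Fabric keeps its state in, the three kinds of accounts used on the managed servers, and how clients connect to the Fabric server over XML-RPC or the MySQL protocol. I turned disable_authentication on to skip the authentication normally required when connecting to Fabric, because I never got the authenticated setup to work: it always failed with Permission denied.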

#fabric.cnf

[DEFAULT]

prefix =

sysconfdir = /home/will

logdir = /var/log

[storage]

address = 10.202.8.33:23308

user = root

password = root123

database = mysql_fabric

auth_plugin = mysql_native_password

connection_timeout = 6

connection_attempts = 6

connection_delay = 1

[servers]

user = root

password = root123

backup_user = root

backup_password = root123

restore_user = root

restore_password = root123

unreachable_timeout = 5

[protocol.xmlrpc]

address = 10.202.8.33:32274

threads = 5

user = admin

password = root123

disable_authentication = yes

realm =

ssl_ca =

ssl_cert =

ssl_key =

[protocol.mysql]

address = 10.202.8.33:32275

user = admin

password = root123

disable_authentication = yes

ssl_ca =

ssl_cert =

ssl_key =

[executor]

executors = 5

[logging]

level = INFO

url = file:///var/log/fabric.log

[sharding]

mysqldump_program = /usr/bin/mysqldump

mysqlclient_program = /usr/bin/mysql

[statistics]

prune_time = 3600

[failure_tracking]

notifications = 300

notification_clients = 50

notification_interval = 60

failover_interval = 0

detections = 3

detection_interval = 6

detection_timeout = 1

prune_time = 3600

[connector]

ttl = 1

4 Set up the databases

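Sharding needs at least 2 shard servers, 1 global server that holds the unsharded data (its data is replicated to the 2 shard servers), and 1 server for the backing store: 4 MySQL instances in total. Remember to enable gtid_mode (GTID), log_bin (binary logging), and log_slave_updates. I plan to start all the instances with mysqld_multi on a single box; ha, it has 48 cores and 192 GB of RAM.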

#my.cnf

[mysqld1]

port = 23306

socket =/home/will/mysql/mysql.sock

datadir =/home/will/mysql/data

pid-file =/home/will/mysql/mysql.pid

user =mysql

log-bin =master-bin

log-bin-index =master-bin.index

binlog_format = ROW

binlog-row-image= minimal

binlog-do-db =rcc_will

server-id =1

symbolic-links =0

character_set_server=utf8

skip-external-locking

innodb_flush_log_at_trx_commit = 2

default-storage-engine =innodb

slave-skip-errors=all

max_binlog_size=200M

enforce-gtid-consistency = ON

gtid-mode = ON

log_slave_updates

master_info_repository = TABLE

relay_log_info_repository = TABLE

[mysqld2]

port = 23307

socket = /home/will/mysql2/mysql.sock

datadir =/home/will/mysql2/data

pid-file =/home/will/mysql2/mysql.pid

user =mysql

server-id =2

log-bin =master-bin

log-bin-index =master-bin.index

binlog_format = ROW

binlog-row-image= minimal

binlog-do-db =rcc_will

symbolic-links =0

character_set_server=utf8

skip-external-locking

innodb_flush_log_at_trx_commit = 2

default-storage-engine =innodb

slave-skip-errors=all

max_binlog_size=200M

enforce-gtid-consistency = ON

gtid-mode = ON

log_slave_updates

master_info_repository = TABLE

relay_log_info_repository = TABLE

[mysqld_back]

port = 23308

socket = /home/will/mysql3/mysql.sock

datadir =/home/will/mysql3/data

pid-file =/home/will/mysql3/mysql.pid

user =mysql

server-id =3

symbolic-links =0

character_set_server=utf8

skip-external-locking

innodb_flush_log_at_trx_commit = 2

default-storage-engine =innodb

slave-skip-errors=all

max_binlog_size=200M

enforce-gtid-consistency = ON

gtid-mode = ON

log_slave_updates

master_info_repository = TABLE

relay_log_info_repository = TABLE

[mysqld_global]

port = 23309

socket = /home/will/mysql4/mysql.sock

datadir =/home/will/mysql4/data

pid-file =/home/will/mysql4/mysql.pid

user =mysql

server-id =4

log-bin =master-bin

log-bin-index =master-bin.index

binlog_format = ROW

binlog-row-image= minimal

binlog-do-db =rcc_will

symbolic-links =0

character_set_server=utf8

skip-external-locking

innodb_flush_log_at_trx_commit = 2

default-storage-engine =innodb

slave-skip-errors=all

max_binlog_size=200M

enforce-gtid-consistency = ON

gtid-mode = ON

log_slave_updates

master_info_repository = TABLE

relay_log_info_repository = TABLE

5 Initialize Fabric

First initialize the backing store, i.e. the MySQL instance behind the Fabric server:

mysqlfabric --config=./fabric.cnf manage setup

Then start the Fabric server:

mysqlfabric --config=./fabric.cnf manage start

6 Groups and sharding

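Fabric does HA per group: the servers in a group form a master/slave set and can fail over automatically. I'll put just one server in each group here, since the point is to test sharding.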

First create 3 groups:

mysqlfabric --config=./fabric.cnf group create my_group1

mysqlfabric --config=./fabric.cnf group create my_group2

mysqlfabric --config=./fabric.cnf group create my_group_global

Then add the servers to them:

mysqlfabric --config=./fabric.cnf group add my_group1 10.202.8.33:23306

mysqlfabric --config=./fabric.cnf group add my_group2 10.202.8.33:23307

mysqlfabric --config=./fabric.cnf group add my_group_global 10.202.8.33:23309

Bring up HA by promoting a master in each group:

mysqlfabric --config=./fabric.cnf group promote my_group1

mysqlfabric --config=./fabric.cnf group promote my_group2

mysqlfabric --config=./fabric.cnf group promote my_group_global

Activate automatic failover (this step is optional):

mysqlfabric --config=./fabric.cnf group activate my_group1

mysqlfabric --config=./fabric.cnf group activate my_group2

mysqlfabric --config=./fabric.cnf group activate my_group_global

Define the shard mapping (global group, HASH sharding strategy):

mysqlfabric --config=./fabric.cnf sharding create_definition HASH my_group_global

Add the table and column to shard on to the mapping just defined (id 1):

mysqlfabric --config=./fabric.cnf sharding add_table 1 db_will.db_users name

Add the shard groups:

mysqlfabric --config=./fabric.cnf sharding add_shard 1 "my_group1, my_group2" --state=ENABLED
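
To make the HASH strategy a bit more concrete, here is a minimal sketch of how hash-based shard selection can work in general. This is not Fabric's actual code: hashing with MD5, deriving each range's lower bound from the group name, and the HashShardRouter class itself are all assumptions made for illustration.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative only: maps a shard key to a group by hashing. Not Fabric's real code. */
class HashShardRouter {
    // Lower bound of each hash range -> group that owns the range.
    private final TreeMap<BigInteger, String> ranges = new TreeMap<>();

    /** Assumption: each group's range starts at the hash of the group's own name. */
    HashShardRouter(String... groups) {
        if (groups.length == 0) {
            throw new IllegalArgumentException("at least one group is required");
        }
        for (String group : groups) {
            ranges.put(md5(group), group);
        }
    }

    /** Picks the group whose lower bound is the largest one <= hash(key), wrapping around. */
    String groupFor(String shardKey) {
        Map.Entry<BigInteger, String> entry = ranges.floorEntry(md5(shardKey));
        if (entry == null) {
            entry = ranges.lastEntry(); // key hashes below every bound: wrap to the last range
        }
        return entry.getValue();
    }

    /** MD5 digest of the string, read as an unsigned 128-bit number. */
    static BigInteger md5(String s) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(s.getBytes(StandardCharsets.UTF_8));
            return new BigInteger(1, digest);
        } catch (NoSuchAlgorithmException ex) {
            throw new IllegalStateException("MD5 not available", ex);
        }
    }

    public static void main(String[] args) {
        HashShardRouter router = new HashShardRouter("my_group1", "my_group2");
        for (String key : new String[] {"0", "1", "2", "42"}) {
            System.out.println(key + " -> " + router.groupFor(key));
        }
    }
}
```

The point to take away is that the same key always lands in the same group, so the client only needs the key to know where a row lives.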

7 Create the database

You have to manually run create database db_will on the mysqld_global instance; it is then replicated to the other servers automatically.

8 Java client

// Data source (the port is the Fabric XML-RPC port from fabric.cnf)

FabricMySQLDataSource ds = new FabricMySQLDataSource();

ds.setServerName("10.202.8.33");

ds.setPort(32274);

ds.setDatabaseName("db_will");

ds.setFabricShardTable("db_users");

ds.setUser("root");

ds.setPassword("root123");

// Create the table

JDBC4FabricMySQLConnection conn = (JDBC4FabricMySQLConnection) ds.getConnection();

Statement stat = conn.createStatement();

stat.execute("CREATE TABLE `db_users` ( … ");

// Insert data (reusing the connection opened above)

PreparedStatement insertStmt = conn.prepareStatement("insert into db_users (`name`) values (?)");

for (long i = 0; i < 20 * 1024 * 1024; i++) {

    String key = String.valueOf(i);

    conn.setShardKey(key); // route this row to the shard that owns the key

    insertStmt.setString(1, key);

    insertStmt.executeUpdate();

}

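// Query data (name is the value being looked up; it is also the shard key)

conn.setShardKey(name);

PreparedStatement queryStmt = conn.prepareStatement("SELECT * FROM `db_users` WHERE name = ?");

queryStmt.setString(1, name);

ResultSet rs = queryStmt.executeQuery();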

As you can see, picking the shard group is done mainly through conn.setShardKey.

9 Performance

Inserting 20M rows (20 × 1024 × 1024) took a painful 5 hours, which works out to only a bit over 1,000 rows per second.
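
As a quick back-of-the-envelope check of that rate, assuming "20M" means the 20 × 1024 × 1024 rows from the insert loop and that the run took exactly 5 hours (the class name is just for illustration):

```java
/** Back-of-the-envelope throughput for the bulk insert described above. */
class InsertThroughput {

    /** Average rows per second for a run of the given length. */
    static double rowsPerSecond(long rows, double hours) {
        return rows / (hours * 3600.0);
    }

    public static void main(String[] args) {
        long rows = 20L * 1024 * 1024; // 20,971,520 rows, as in the insert loop
        System.out.printf("%d rows in 5 h = %.0f rows/s%n", rows, rowsPerSecond(rows, 5));
    }
}
```

That comes out at roughly 1,165 rows per second, or a little under a millisecond per single-row insert.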

Inserts, deletes, updates, and queries are all basically in the 20 ms range.

Finally, concurrent queries topped out at about 130 connections; any more and it fell over. The Python server just can't take it.
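
For anyone who wants to reproduce the connection ceiling, here is a minimal sketch of a probe. It is not the harness I used: the ConcurrencyProbe class is hypothetical, and the query callable is a stand-in for "get a connection from the data source, set the shard key, run one SELECT, return true on success".

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical probe: fires N copies of a query at once and counts how many succeed. */
class ConcurrencyProbe {

    static int successes(int clients, Callable<Boolean> query) {
        ExecutorService pool = Executors.newFixedThreadPool(clients);
        try {
            List<Callable<Boolean>> tasks = new ArrayList<>();
            for (int i = 0; i < clients; i++) {
                tasks.add(query);
            }
            int ok = 0;
            // invokeAll blocks until every task has finished or failed.
            for (Future<Boolean> result : pool.invokeAll(tasks)) {
                try {
                    if (Boolean.TRUE.equals(result.get())) {
                        ok++;
                    }
                } catch (ExecutionException e) {
                    // This client failed (e.g. connection refused); count it as a miss.
                }
            }
            return ok;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("probe interrupted", e);
        } finally {
            pool.shutdown();
        }
    }
}
```

Raising clients step by step until successes() drops below the requested number gives a rough idea of where the setup stops coping.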