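Are the old PCs at home or in the office too weak? So slow that you want to smash them? Do friends or colleagues have computers they are about to throw away? Here is one way to reuse the junk: I collected four old PCs, built a Hadoop cluster in Fully Distributed Mode, set up HBase on top of Hadoop, ran Nutch, and kept the data that feeds Solr in HBase.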

PC Specs

| Name | CPU | RAM |
|---|---|---|
| pigpigpig-client2 | T2400 1.82GHz | 2GB |
| pigpigpig-client4 | E7500 2.93GHz | 4GB |
| pigpigpig-client5 | E2160 1.80GHz | 4GB |
| pigpigpig-client6 | T7300 2.00GHz | 2GB |

Roles

| Name | Roles |
|---|---|
| pigpigpig-client2 | HQuorumPeer, SecondaryNameNode, ResourceManager, Solr |
| pigpigpig-client4 | NodeManager, HRegionServer, DataNode |
| pigpigpig-client5 | NodeManager, HRegionServer, DataNode |
| pigpigpig-client6 | NameNode, HMaster, Nutch |

Version

- Hadoop 2.7.0

- HBase 0.98.8-hadoop2

- Gora 0.6.1-SNAPSHOT (P.S. not yet officially released at the time of writing; see Build Apache Gora With Solr 5.1.0)

- Nutch 2.4-SNAPSHOT (P.S. not yet officially released at the time of writing; see Build Apache Nutch with Solr 5.1.0)

Configuration

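When I first ran Nutch, I did not change the default configuration files. Every time, after roughly 10 hours, a RegionServer would crash at a random point, usually with an Out Of Memory error. Our constraint is limited resources: the old PCs cannot be upgraded, unlike EC2 where you can simply scale up when resources run short, so performance tuning is an important topic for us.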

in hadoop-env.sh

Memory is precious. With only two DataNodes, the default of 512MB is more than we need, so every heap size is cut in half.

export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS -Xmx256m"

export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS -Xmx256m"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS -Xmx256m"

export HADOOP_PORTMAP_OPTS="-Xmx256m $HADOOP_PORTMAP_OPTS"

export HADOOP_CLIENT_OPTS="-Xmx256m $HADOOP_CLIENT_OPTS"

in hdfs-site.xml

To avoid HDFS timeout errors, the timeouts are lengthened.

in mapred-env.sh

export HADOOP_JOB_HISTORYSERVER_HEAPSIZE=256

in mapred-site.xml

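The CPUs are slow and there are few nodes, so mapred.task.timeout is raised to keep MapReduce tasks from being killed before they finish. This matters most after a few rounds of Nutch inject, generate, fetch, parse, and updatedb, when each pass handles several million records and a low timeout means the tasks never complete.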
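The timeout is expressed in milliseconds, which is easy to get wrong by a factor of 1000. A minimal sketch of a helper that turns minutes into a `-D` override follows. It assumes the Hadoop 2 property name `mapreduce.task.timeout` (the current name for the deprecated `mapred.task.timeout`); the helper name `task_timeout_flag` and the 30-minute value are hypothetical, not the settings used on this cluster.

```shell
#!/usr/bin/env bash
# Build a -D override for the MapReduce task timeout.
# The property value is in milliseconds, so convert from minutes.
task_timeout_flag() {   # usage: task_timeout_flag MINUTES
  echo "-D mapreduce.task.timeout=$(( $1 * 60 * 1000 ))"
}

# Illustrative value only: 30 minutes.
task_timeout_flag 30    # prints: -D mapreduce.task.timeout=1800000
```

Assuming the job accepts Hadoop generic options, the flag can be passed the same way `-D solr.server.url=...` is passed to the indexing job, instead of editing mapred-site.xml.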

in yarn-env.sh

JAVA_HEAP_MAX=-Xmx256m

YARN_HEAPSIZE=256

in yarn-site.xml

The 4GB of RAM has to be shared among the OS, NodeManager, HRegionServer, and DataNode, so resources are very tight. Half of the memory goes to YARN, so yarn.nodemanager.resource.memory-mb is set to 2048. Each CPU has 2 cores, so mapreduce.map.memory.mb, mapreduce.reduce.memory.mb, and yarn.scheduler.maximum-allocation-mb are set to 1024. yarn.nodemanager.vmem-pmem-ratio is raised to avoid errors such as "running beyond virtual memory limits. Killing container".
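As a sanity check on that split, here is a small sketch that adds up the heap and container limits quoted in this post for one 4GB worker. It only counts configured maximums; JVM overhead beyond the heap (thread stacks, class metadata) is not included, so the real headroom for the OS is smaller than the printed figure. The variable names are my own.

```shell
#!/usr/bin/env bash
# Memory budget for one 4GB worker (pigpigpig-client4 / pigpigpig-client5), in MB.
# Inputs are the limits quoted in this post; off-heap JVM overhead is ignored.
total_mb=4096
datanode_mb=256          # HADOOP_DATANODE_OPTS -Xmx256m
nodemanager_mb=256       # YARN_HEAPSIZE=256
regionserver_mb=1024     # HBASE_REGIONSERVER_OPTS -Xmx1024m
yarn_containers_mb=2048  # yarn.nodemanager.resource.memory-mb
container_mb=1024        # mapreduce.map.memory.mb / mapreduce.reduce.memory.mb

used_mb=$((datanode_mb + nodemanager_mb + regionserver_mb + yarn_containers_mb))
left_for_os_mb=$((total_mb - used_mb))
containers_per_node=$((yarn_containers_mb / container_mb))

echo "committed: ${used_mb} MB, left for OS: ${left_for_os_mb} MB"
echo "concurrent ${container_mb} MB containers per node: ${containers_per_node}"
```

With these numbers the daemons and containers commit 3584 MB and each node can run two 1024 MB containers at once, one per core.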

in hbase-env.sh

# export HBASE_HEAPSIZE=1000

export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS $HBASE_JMX_BASE -Xmx192m -Xms192m -Xmn72m"

export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS $HBASE_JMX_BASE -Xmx1024m -Xms1024m -verbose:gc -Xloggc:/mnt/hadoop-2.4.1/hbase/logs/hbaseRgc.log -XX:+PrintAdaptiveSizePolicy -XX:+PrintGC -XX:+PrintGCDetails -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/mnt/hadoop-2.4.1/hbase/logs/java_pid{$$}.hprof"

export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS $HBASE_JMX_BASE -Xmx192m -Xms72m"

in hbase-site.xml

The RegionServer out-of-memory crashes are related to hbase.hregion.max.filesize, hbase.hregion.memstore.flush.size, and hbase.hregion.memstore.block.multiplier.

Drawbacks of setting hbase.hregion.max.filesize too small

- Each RegionServer ends up with too many regions (P.S. every ColumnFamily of every region takes 2MB of MSLAB)

- Splits and compactions happen frequently

- Too many StoreFiles are open (P.S. Potential Number of Open Files = (StoreFiles per ColumnFamily) x (regions per RegionServer))

Drawbacks of setting hbase.hregion.max.filesize too large

- Too few regions, so the benefit of Distributed Mode is lost

- Pauses during splits and compactions also last too long
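To make the trade-off concrete, the two formulas in the notes above can be sketched as shell functions. The region-count estimate (data size divided by hbase.hregion.max.filesize, rounded up) is my own simplifying assumption, and the 100 GB data size, the single column family, and the 3 StoreFiles per family are hypothetical inputs, not measurements from this cluster.

```shell
#!/usr/bin/env bash
# Rough per-RegionServer costs for a given region count.
# All inputs below are illustrative.

# MSLAB memory in MB: 2 MB per column family per region.
mslab_mb() {      # usage: mslab_mb REGIONS COLUMN_FAMILIES
  echo $(( $1 * $2 * 2 ))
}

# Potential open files: StoreFiles per column family x regions.
open_files() {    # usage: open_files STOREFILES_PER_CF REGIONS
  echo $(( $1 * $2 ))
}

# Assumption: regions ~= data size / hbase.hregion.max.filesize, rounded up.
regions_for() {   # usage: regions_for DATA_MB MAX_FILESIZE_MB
  echo $(( ($1 + $2 - 1) / $2 ))
}

data_mb=$((100 * 1024))   # hypothetical 100 GB served by one RegionServer
for max_filesize_mb in 256 1024 10240; do
  r=$(regions_for "$data_mb" "$max_filesize_mb")
  echo "max.filesize=${max_filesize_mb}MB regions=${r}" \
       "mslab=$(mslab_mb "$r" 1)MB open_files=$(open_files 3 "$r")"
done
```

Under these assumptions a 256 MB limit yields 400 regions and 800 MB of MSLAB, which alone would nearly fill a 1024 MB RegionServer heap, while a 10 GB limit leaves only 10 regions to spread across the cluster.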

The server-side memory used by write buffers is (hbase.client.write.buffer) * (hbase.regionserver.handler.count), so setting hbase.client.write.buffer and hbase.regionserver.handler.count too high eats too much memory, while setting them too low increases the number of RPCs.
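A quick sketch of that product, to show how fast it grows on a 1024 MB heap. The function name `write_buffer_mb` and the two value pairs are illustrative only; they are not the settings used on this cluster.

```shell
#!/usr/bin/env bash
# Server-side write-buffer memory = write.buffer (bytes) x handler.count,
# reported in whole MB.
write_buffer_mb() {   # usage: write_buffer_mb WRITE_BUFFER_BYTES HANDLER_COUNT
  echo $(( $1 * $2 / 1024 / 1024 ))
}

# Illustrative values only.
echo "2 MB buffer x 30 handlers = $(write_buffer_mb 2097152 30) MB"
echo "1 MB buffer x 10 handlers = $(write_buffer_mb 1048576 10) MB"
```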

If hbase.zookeeper.property.tickTime and zookeeper.session.timeout are too short, ZooKeeper sessions expire (SessionExpired). Setting hbase.ipc.warn.response.time higher suppresses the responseTooSlow warnings.
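The two ZooKeeper settings have to be tuned together, because the server bounds the session timeout by the tick time. The sketch below assumes the ZooKeeper defaults, where minSessionTimeout is 2 x tickTime and maxSessionTimeout is 20 x tickTime, and that neither bound has been overridden. The function name and the sample values are hypothetical.

```shell
#!/usr/bin/env bash
# Session timeout a ZooKeeper server will actually grant, assuming the
# default bounds: min = 2 x tickTime, max = 20 x tickTime (milliseconds).
effective_session_timeout() {   # usage: effective_session_timeout REQUESTED_MS TICK_MS
  local requested=$1 tick=$2
  local min=$(( 2 * tick ))
  local max=$(( 20 * tick ))
  if   [ "$requested" -lt "$min" ]; then echo "$min"
  elif [ "$requested" -gt "$max" ]; then echo "$max"
  else echo "$requested"
  fi
}

effective_session_timeout 90000 2000   # prints 40000: capped at 20 x tickTime
effective_session_timeout 90000 6000   # prints 90000: within bounds
```

So raising zookeeper.session.timeout alone has no effect beyond 20 x tickTime; the tick time has to go up with it.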

hbase.hregion.memstore.flush.size and hbase.hregion.memstore.block.multiplier also affect how often splits and compactions happen.

Start Servers

- run hdfs namenode -format on pigpigpig-client6

- run start-dfs.sh on pigpigpig-client6

- run start-yarn.sh on pigpigpig-client2

- run start-yarn.sh on pigpigpig-client4

- run start-hbase.sh on pigpigpig-client6

- run java -Xmx1024m -Xms1024m -XX:+UseConcMarkSweepGC -jar start.jar in solr folder on pigpigpig-client2

- run hadoop fs -mkdir /user;hadoop fs -mkdir /user/pigpigpig;hadoop fs -put urls /user/pigpigpig in nutch folder on pigpigpig-client6

- run hadoop jar apache-nutch-2.4-SNAPSHOT.job org.apache.nutch.crawl.InjectorJob urls -crawlId webcrawl in nutch folder on pigpigpig-client6

- run hadoop jar apache-nutch-2.4-SNAPSHOT.job org.apache.nutch.crawl.GeneratorJob -crawlId webcrawl in nutch folder on pigpigpig-client6

- run hadoop jar apache-nutch-2.4-SNAPSHOT.job org.apache.nutch.fetcher.FetcherJob -all -crawlId webcrawl in nutch folder on pigpigpig-client6

- run hadoop jar apache-nutch-2.4-SNAPSHOT.job org.apache.nutch.parse.ParserJob -all -crawlId webcrawl in nutch folder on pigpigpig-client6

- run hadoop jar apache-nutch-2.4-SNAPSHOT.job org.apache.nutch.crawl.DbUpdaterJob -all -crawlId webcrawl in nutch folder on pigpigpig-client6

- run hadoop jar apache-nutch-2.4-SNAPSHOT.job org.apache.nutch.indexer.IndexingJob -D solr.server.url=http://pigpigpig-client2/solr/nutch/ -all -crawlId webcrawl in nutch folder on pigpigpig-client6
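Since generate, fetch, parse, and updatedb are repeated for every crawl round, the four commands above can be wrapped in a small script. This is a minimal sketch under my own assumptions: the helper names `run_job` and `crawl_round` and the `DRY_RUN` switch are hypothetical, the script is meant to be run from the nutch folder on pigpigpig-client6, and `DRY_RUN` defaults to 1 so that nothing is submitted until you turn it off.

```shell
#!/usr/bin/env bash
# One Nutch crawl round built from the commands listed above.
# DRY_RUN defaults to 1, so the commands are only printed, not executed.
JOB=apache-nutch-2.4-SNAPSHOT.job
CRAWL_ID=webcrawl
DRY_RUN=${DRY_RUN:-1}

run_job() {   # usage: run_job CLASS [ARGS...]
  if [ "$DRY_RUN" = "1" ]; then
    echo "hadoop jar $JOB $*"
  else
    hadoop jar "$JOB" "$@"
  fi
}

# Stop the round at the first failing step.
crawl_round() {
  run_job org.apache.nutch.crawl.GeneratorJob -crawlId "$CRAWL_ID" &&
  run_job org.apache.nutch.fetcher.FetcherJob -all -crawlId "$CRAWL_ID" &&
  run_job org.apache.nutch.parse.ParserJob -all -crawlId "$CRAWL_ID" &&
  run_job org.apache.nutch.crawl.DbUpdaterJob -all -crawlId "$CRAWL_ID"
}
```

After sourcing the script, `for i in 1 2 3; do crawl_round || break; done` prints three rounds; setting `DRY_RUN=0` first submits them for real. The inject step before the first round and the indexing step after the last are still run by hand as listed above.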

Stop Servers

- run stop-hbase.sh on pigpigpig-client6

- run stop-yarn.sh on pigpigpig-client2

- run stop-yarn.sh on pigpigpig-client4

- run stop-dfs.sh on pigpigpig-client6

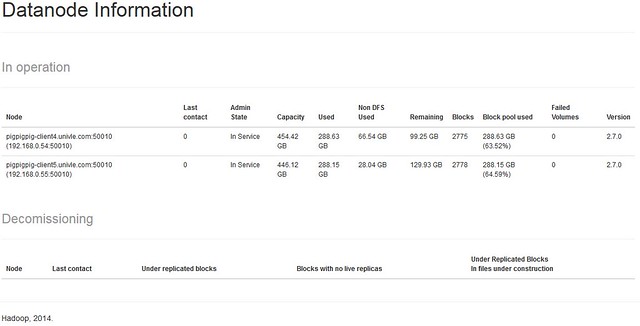

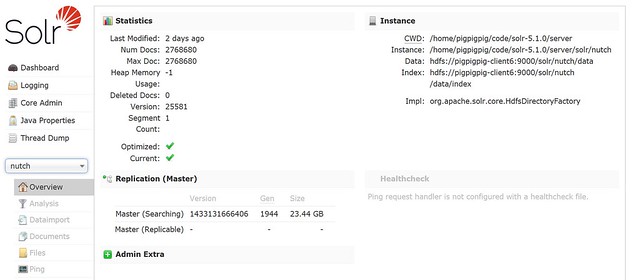

Screenshots