爬取网站:https://www.shujukuji.cn/guominjingjihangyefenlei/xiaolei-liebiao

一.数据:(由于文档中含有敏感词,上传失败)

网盘:

链接:https://pan.baidu.com/s/1vCUuCOEEQb786afZVhS8SQ

提取码:nsi4

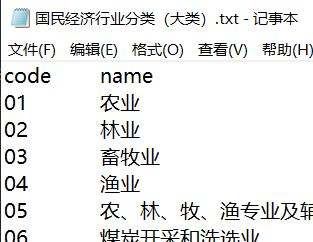

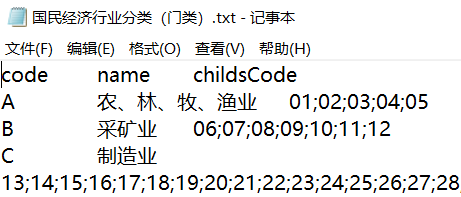

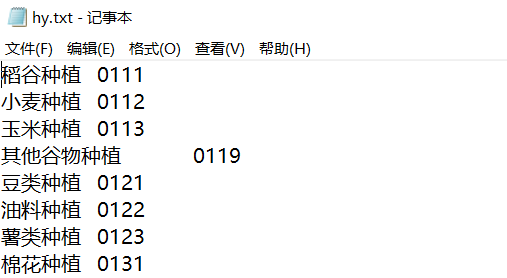

数据截图:

二.代码分析:(爬取小类)

如下图,可以发现代码与连接的关系https://www.shujukuji.cn/guominjingjihangyefenlei/xiaolei/...

①先爬取<a>标签中的代码

②根据代码创造链接url,爬取行业名称

三.注意:python对txt文档的读写规则以及文档指针问题

在使用Python进行txt文件的读写时,当打开文件后,首先用read()对文件的内容读取,然后再用write()写入,这时发现虽然是用“r+”模式打开,按道理是应该覆盖的,但是却出现了追加的情况。

这是因为在使用read后,文档的指针已经指向了文本最后,而write写入的时候是以指针为起始,因此就产生了追加的效果。

如果想要覆盖,需要先seek(0),然后使用truncate()清除后,即可实现重新覆盖写入

四.源代码:(爬取小类)

#!/usr/bin/env python # -*- coding: utf-8 -*- # @File : 国民经济行业分类及其代码.py # @Author: 田智凯 # @Date : 2020/3/19 # @Desc :爬取中国国民经济行业分类 from multiprocessing.pool import Pool import requests from lxml import etree import time headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36' } txtpath=r'D:hy.txt' #爬取表格得到小类代码 def get_codes(url): global codes res = requests.get(url, headers=headers) selector = etree.HTML(res.text) tb=selector.xpath('//*[@id="block-system-main"]/div/div/div[2]/table/tbody/tr') #不清空原来内容 with open(txtpath, "a",encoding='utf-8') as txt: for tr in tb: tds=tr.xpath('td') for td in tds: code=td.xpath('div/span/a/text()')[0] #print(code) hy=get_hangye(code) txt.write(hy) txt.write(' ') txt.write(code) txt.write(' ') txt.close() #根据代码得到名称 def get_hangye(code): url='https://www.shujukuji.cn/guominjingjihangyefenlei/xiaolei/{}'.format(code) #print(url) res = requests.get(url, headers=headers) selector = etree.HTML(res.text) hy = selector.xpath('//*[@id="block-system-main"]/div/div/div[2]/div/div[2]/span[2]/text()')[0] #print(hy) return hy if __name__ == '__main__': urls = ['https://www.shujukuji.cn/guominjingjihangyefenlei/xiaolei-liebiao?page={}'.format(str(p)) for p in range(0,15)] start=time.time() print('程序运行中...') pool=Pool(processes=4)#开启四个进程 pool.map(get_codes,urls) end=time.time() print('程序用时',end-start)

附上:

import requests from lxml import etree headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36' } txtpath=r'D:hy2.txt' def get_data(url): global codes res = requests.get(url, headers=headers) selector = etree.HTML(res.text) hy=selector.xpath('//*[@id="block-system-main"]/div/div/div[2]/div/div[1]/span[2]/a/text()')[0] code=selector.xpath('//*[@id="block-system-main"]/div/div/div[2]/div/div[2]/span[2]/text()')[0] #不清空原来内容 with open(txtpath, "a",encoding='utf-8') as txt: txt.write(hy) txt.write(' ') txt.write(code) txt.write(' ') txt.close() if __name__ == '__main__': for i in range(1,98): if i<10: url='https://www.shujukuji.cn/guominjingjihangyefenlei/dalei/{}'.format('0'+str(i)) #print(url) get_data(url) else: url = 'https://www.shujukuji.cn/guominjingjihangyefenlei/dalei/{}'.format(str(i)) get_data(url)

import requests from lxml import etree headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36' } txtpath=r'D:hy3.txt' def get_data(url): res = requests.get(url, headers=headers) selector = etree.HTML(res.text) infos=selector.xpath('//*[@id="block-system-main"]/div/div/div[3]/div[1]/div/table/tbody/tr') for info in infos: code=info.xpath('td[1]/a/text()')[0].strip() hy=info.xpath('td[2]/text()')[0].strip() #不清空原来内容 with open(txtpath, "a",encoding='utf-8') as txt: txt.write(hy) txt.write(' ') txt.write(code) txt.write(' ') txt.close() if __name__ == '__main__': for i in range(1,98): if i<10: url='https://www.shujukuji.cn/guominjingjihangyefenlei/dalei/{}'.format('0'+str(i)) #print(url) get_data(url) else: url = 'https://www.shujukuji.cn/guominjingjihangyefenlei/dalei/{}'.format(str(i)) get_data(url)

import requests from lxml import etree import re headers = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36' } txtpath=r'D:hy.txt' def get_data(url): res = requests.get(url, headers=headers) selector = etree.HTML(res.text) infos = selector.xpath('//*[@id="node-3"]/div/div/div/div/fieldset/div/div/table/tbody/tr') for info in infos: try: code = info.xpath('td[1]/a/text()')[0].strip() print(code) if (code): hy = info.xpath('td[3]/text()')[0].strip() content = info.xpath('td[4]/text()')[0].strip() list = re.findall(r'd+', content) # 挑选字符串中的数字(例:本门类包括01~05大类) min = int(list[0]) max = int(list[1]) # print(min,max) childs = '' for i in range(min, max + 1): if (i < 10): childs = childs + ';0' + str(i) else: childs = childs + ';' + str(i) print(childs.lstrip(';0')) # 不清空原来内容 with open(txtpath, "a", encoding='utf-8') as txt: txt.write(code) txt.write(' ') txt.write(hy) txt.write(' ') txt.write(childs.lstrip(';0')) txt.write(' ') txt.close() else: pass except: pass if __name__ == '__main__': get_data('https://www.shujukuji.cn/guominjingjihangyefenlei')