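I. Introduction

This example uses scrapy-splash to crawl the Toutiao (今日头条) website and collect news articles that match a given set of keywords.

Given keywords: 打通 (interconnect); 融合 (convergence); 电视 (television)

The following fields are scraped:

1. Article title

2. Article link

3. Article time

4. Article source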

II. Website information

III. Data extraction

The extraction below is based on the website information above.

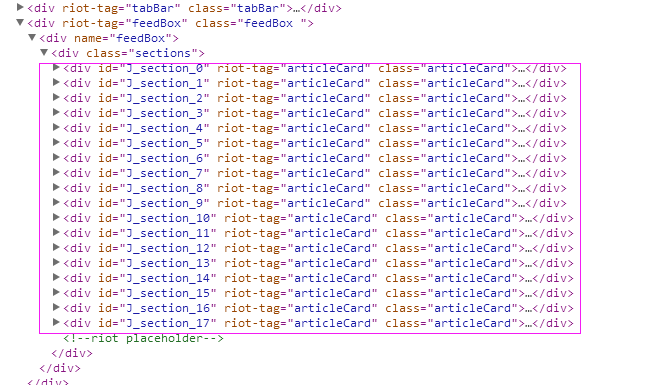

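1. Extract the list of articles

Code: sels = site.xpath('//div[@class="articleCard"]')

2. Extract the title

The title is awkward to extract from the list page, so as a workaround it is read from each article's detail page instead.

Code: titles = sel.xpath('.//div[@class="doc-title"]/text()')

(The complete spider in section IV reads the title on the detail page with '//h1[@class="article-title"]/text()'.)

3. Extract the link

Code: url = 'http://www.toutiao.com' + str(sel.xpath('.//a[@class="link title"]/@href')[0].extract())

4. Extract the date

Code: dates = sel.xpath('.//span[@class="lbtn"]/text()')

5. Extract the source

Code: source=str(sel.xpath('.//a[@class="lbtn source J_source"]/text()')[0].extract())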
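The five steps above can be tried out without a running Splash instance. The following is a minimal sketch, not the article's spider: it swaps Scrapy's Selector for the standard library's xml.etree.ElementTree (which supports the simple attribute predicates used here), and the SAMPLE markup is a hypothetical, simplified stand-in for the real Toutiao list page.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup: only the class names come from the article's XPath
# expressions; the real page structure is not reproduced here.
SAMPLE = """
<html><body>
  <div class="articleCard">
    <a class="link title" href="/group/123/">Example headline</a>
    <a class="lbtn source J_source">Example Source</a>
    <span class="lbtn">2小时前</span>
  </div>
  <div class="articleCard">
    <a class="link title" href="/group/456/">Another headline</a>
    <a class="lbtn source J_source">Other Source</a>
    <span class="lbtn">刚刚</span>
  </div>
</body></html>
"""


def extract_cards(markup):
    """Return one dict (url, source, date) per article card in the markup."""
    root = ET.fromstring(markup.strip())
    rows = []
    # Step 1: one element per article card.
    for card in root.findall('.//div[@class="articleCard"]'):
        link = card.find('.//a[@class="link title"]')               # step 3
        date = card.find('.//span[@class="lbtn"]')                  # step 4
        source = card.find('.//a[@class="lbtn source J_source"]')   # step 5
        if link is None or date is None or source is None:
            continue  # skip cards that lack any of the expected fields
        rows.append({
            'url': 'http://www.toutiao.com' + link.get('href'),
            'source': source.text.strip(),
            'date': date.text.strip(),
        })
    return rows


rows = extract_cards(SAMPLE)
for row in rows:
    print(row)
```

Skipping incomplete cards is a choice made for this sketch; the spider itself indexes [0] directly and would raise an IndexError if the link or source were missing.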

IV. Complete code

1. toutiaoSpider

    # -*- coding: utf-8 -*-
    # Written for Python 2 (it relies on reload(sys) / sys.setdefaultencoding).
    import os
    import re
    import sys
    import time

    from scrapy.selector import Selector
    from scrapy.spiders import Spider
    from scrapy_splash import SplashRequest

    from splash_test.items import SplashTestItem
    import IniFile

    reload(sys)
    sys.setdefaultencoding('utf-8')
    # sys.stdout = open('output.txt', 'w')


    class toutiaoSpider(Spider):
        name = 'toutiao'

        # setting.conf sits next to the spiders (splash_test/spiders/setting.conf)
        configfile = os.path.join(os.getcwd(), 'splash_test', 'spiders', 'setting.conf')
        cf = IniFile.ConfigFile(configfile)
        information_keywords = cf.GetValue("section", "information_keywords")
        information_wordlist = information_keywords.split(';')
        websearchurl = cf.GetValue("toutiao", "websearchurl")

        # One search URL per keyword
        start_urls = []
        for word in information_wordlist:
            start_urls.append(websearchurl + word)

        # Requests have to be wrapped in SplashRequest
        def start_requests(self):
            for url in self.start_urls:
                index = url.rfind('=')
                yield SplashRequest(
                    url,
                    self.parse,
                    args={'wait': '2'},
                    meta={'keyword': url[index + 1:]}
                )

        def date_isValid(self, strDateText):
            '''
            Decide whether a date/time string is acceptable, i.e. whether it
            falls within the allowed time range (here: today).
            :param strDateText: one of four formats: '2小时前'; '2天前'; '昨天'; '2017.2.12'
            :return: (True, current date) if acceptable; (False, '') otherwise
            '''
            currentDate = time.strftime('%Y-%m-%d')
            if strDateText.find('分钟前') > 0 or strDateText.find('刚刚') > -1:
                return True, currentDate
            elif strDateText.find('小时前') > 0:
                datePattern = re.compile(r'\d{1,2}')
                ch = int(time.strftime('%H'))  # current hour
                strDate = re.findall(datePattern, strDateText)
                if len(strDate) == 1:
                    # Only "N hours ago" with N <= current hour still counts as today
                    if int(strDate[0]) <= ch:
                        return True, currentDate
            # Every other format ('2天前', '昨天', absolute dates) is rejected
            return False, ''

        def parse(self, response):
            site = Selector(response)
            keyword = response.meta['keyword']
            sels = site.xpath('//div[@class="articleCard"]')
            for sel in sels:
                dates = sel.xpath('.//span[@class="lbtn"]/text()')
                if len(dates) > 0:
                    flag, date = self.date_isValid(dates[0].extract())
                    if flag:
                        url = 'http://www.toutiao.com' + str(
                            sel.xpath('.//a[@class="link title"]/@href')[0].extract())
                        source = str(
                            sel.xpath('.//a[@class="lbtn source J_source"]/text()')[0].extract())
                        # Follow the link; the title is read on the detail page
                        yield SplashRequest(
                            url,
                            self.parse_item,
                            args={'wait': '1'},
                            meta={'date': date, 'url': url,
                                  'keyword': keyword, 'source': source}
                        )

        def parse_item(self, response):
            site = Selector(response)
            it = SplashTestItem()
            titles = site.xpath('//h1[@class="article-title"]/text()')
            if len(titles) > 0:
                keyword = response.meta['keyword']
                strtitle = str(titles[0].extract())
                # Keep the article only if its title contains the keyword
                if strtitle.find(keyword) > -1:
                    it['title'] = strtitle
                    it['url'] = response.meta['url']
                    it['date'] = response.meta['date']
                    it['keyword'] = keyword
                    it['source'] = response.meta['source']
                    return it
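The date filter is the part of the spider that is easiest to get subtly wrong, so it helps to exercise it on its own. Below is a rough Python 3 re-expression of date_isValid, offered as a sketch rather than a drop-in replacement: the function name date_is_valid and the injectable now parameter are assumptions added here so the behaviour can be checked against a fixed clock, and the substring tests use `in` instead of the original find() comparisons.

```python
import re
from datetime import datetime


def date_is_valid(text, now=None):
    """Return (True, 'YYYY-MM-DD') if the relative timestamp is from today."""
    now = now or datetime.now()  # 'now' is injectable only to make testing easy
    today = now.strftime('%Y-%m-%d')

    # "N minutes ago" and "just now" are always today.
    if '分钟前' in text or '刚刚' in text:
        return True, today

    # "N hours ago" is today only if N does not reach back past midnight.
    if '小时前' in text:
        match = re.search(r'\d{1,2}', text)
        if match and int(match.group()) <= now.hour:
            return True, today

    # Days ago, "yesterday" and absolute dates are all rejected.
    return False, ''


fixed_now = datetime(2017, 2, 12, 10, 30)
for sample in ['5分钟前', '刚刚', '2小时前', '11小时前', '昨天']:
    print(sample, date_is_valid(sample, fixed_now))
```

With the clock fixed at 10:30, "2 hours ago" is accepted and "11 hours ago" is rejected, because the latter points to the previous evening.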

2. SplashTestItem

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class SplashTestItem(scrapy.Item):
        # Title
        title = scrapy.Field()
        # Date
        date = scrapy.Field()
        # Link
        url = scrapy.Field()
        # Keyword
        keyword = scrapy.Field()
        # Source website
        source = scrapy.Field()

3. SplashTestPipeline

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import codecs
    import json


    class SplashTestPipeline(object):
        def __init__(self):
            # self.file = open('data.json', 'wb')
            self.file = codecs.open(
                'spider.txt', 'w', encoding='utf-8')
            # self.file = codecs.open(
            #     'spider.json', 'w', encoding='utf-8')

        def process_item(self, item, spider):
            # One JSON object per line; ensure_ascii=False keeps Chinese text readable
            line = json.dumps(dict(item), ensure_ascii=False) + "\n"
            self.file.write(line)
            return item

        def close_spider(self, spider):
            # Scrapy calls close_spider on pipelines when the spider finishes
            self.file.close()
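The output format the pipeline produces (one JSON object per line, with non-ASCII characters written as-is) can be illustrated without Scrapy. This is a minimal sketch under the assumption that items behave like plain dicts; write_items is a hypothetical helper, and an in-memory buffer stands in for spider.txt.

```python
import io
import json


def write_items(items, stream):
    """Write each item as one JSON line, keeping non-ASCII text unescaped."""
    for item in items:
        stream.write(json.dumps(dict(item), ensure_ascii=False) + "\n")


# Hypothetical sample item using the field names from SplashTestItem.
sample_items = [
    {'title': '智能电视融合', 'keyword': '融合', 'source': 'Example Source'},
]

buffer = io.StringIO()
write_items(sample_items, buffer)
lines = buffer.getvalue().splitlines()
print(lines[0])
```

Writing one object per line means the file can be read back line by line with json.loads, without loading everything at once.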

4. setting.conf

    # Section name read by the spider via GetValue("section", ...)
    [section]
    iedriverserver = C:\Program Files\Internet Explorer\IEDriverServer.exe
    meeting_keywords = 互联网电视;智能电视;数字;影音;家庭娱乐;节目;视听;版权;数据
    information_keywords = 电视;数字电视;OTT;节目内容;盒子;智能推荐;个性化;视频;影音;跨屏;融合;打通;多平台
    #information_keywords = 电视;OTT
    invalid_day = 4
    nexturllabel = 下一页
    timesleep = 3

    [toutiao]
    websearchurl = http://www.toutiao.com/search/?keyword=
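To see how the configuration turns into search URLs, here is a small sketch that uses the standard library's configparser in place of the article's IniFile module (whose source is not shown). It assumes the keyword list sits under a [section] header, as the spider's GetValue("section", ...) call suggests; build_start_urls and keyword_from_url are hypothetical helper names, and CONF_TEXT is a shortened stand-in for setting.conf.

```python
import configparser

# Shortened, hypothetical stand-in for setting.conf.
CONF_TEXT = """
[section]
information_keywords = 电视;OTT;融合

[toutiao]
websearchurl = http://www.toutiao.com/search/?keyword=
"""


def build_start_urls(conf_text):
    """Build one search URL per semicolon-separated keyword."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    keywords = parser.get('section', 'information_keywords').split(';')
    base = parser.get('toutiao', 'websearchurl')
    return [base + word for word in keywords]


def keyword_from_url(url):
    """Recover the keyword the same way start_requests does: text after the last '='."""
    return url[url.rfind('=') + 1:]


urls = build_start_urls(CONF_TEXT)
for url in urls:
    print(url, '->', keyword_from_url(url))
```

Recovering the keyword from the URL only works while the keyword is the last query parameter and contains no '=' itself, which holds for the search URL used here.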