Introduction to Logstash plugins-filters-grok

Official documentation: https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html

grok is commonly used to split log lines, such as Apache access logs, into structured fields.

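grok matches log lines against regular expressions and splits them into named fields. It ships with a set of commonly used predefined patterns, each with a name, that can be referenced directly in a match.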
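To make the idea concrete, here is a minimal Python sketch of what happens to a pattern such as %{IP:client}: each %{NAME:field} reference is replaced by the regular expression registered under NAME (recursively, because predefined patterns refer to each other), and the field name becomes a named capture group. PATTERNS and expand are hypothetical names, and the definitions are simplified; this is an illustration of the technique, not Logstash's implementation or its real pattern definitions.

```python
import re

# Hypothetical, simplified subset of grok's predefined patterns
# (the real definitions in logstash-patterns-core are stricter).
PATTERNS = {
    "INT": r"[+-]?\d+",
    "WORD": r"\b\w+\b",
    "IPV4": r"(?:\d{1,3}\.){3}\d{1,3}",
    "IP": r"%{IPV4}",  # patterns may reference other patterns
}

# Matches %{NAME} or %{NAME:field}
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(pattern):
    """Recursively replace each %{NAME[:field]} with the regex for NAME."""
    def repl(m):
        name, field = m.group(1), m.group(2)
        body = expand(PATTERNS[name])
        # With a field name: named capture group; without: non-capturing group.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return GROK_REF.sub(repl, pattern)


regex = expand("%{IP:client} %{WORD:method}")
m = re.search(regex, "55.3.244.1 GET")
print(m.groupdict())  # {'client': '55.3.244.1', 'method': 'GET'}
```

Using a function as the replacement in re.sub keeps the expanded regex text literal, so backslashes in the pattern definitions are not reinterpreted.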

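The predefined pattern files are located in /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns (the version numbers in this path depend on the installed Logstash).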

The grok-patterns file contains the predefined Apache patterns, such as COMBINEDAPACHELOG.
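The files in that directory are plain text with one definition per line: a pattern name, whitespace, then the regular expression, which may itself reference other patterns with %{NAME}; lines starting with # are comments. A minimal Python sketch of reading that format, assuming simple well-formed lines (load_patterns and the sample lines are illustrative, not Logstash's own loader or a verbatim copy of grok-patterns):

```python
# Illustrative lines in the pattern-file format (not a verbatim copy of grok-patterns).
SAMPLE = r"""
# Lines starting with '#' are comments
USERNAME [a-zA-Z0-9._-]+
USER %{USERNAME}
WORD \b\w+\b
"""


def load_patterns(text):
    """Parse 'NAME REGEX' definitions, one per line, into a dict."""
    patterns = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Split on the first run of whitespace; the rest of the line is the regex.
        name, regex = line.split(None, 1)
        patterns[name] = regex
    return patterns


patterns = load_patterns(SAMPLE)
print(patterns["USER"])  # %{USERNAME}
```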

All of Logstash's predefined patterns can be browsed at:

https://github.com/logstash-plugins/logstash-patterns-core/tree/master/patterns

Patterns you write yourself can be debugged and tested online at:

http://grokdebug.herokuapp.com/

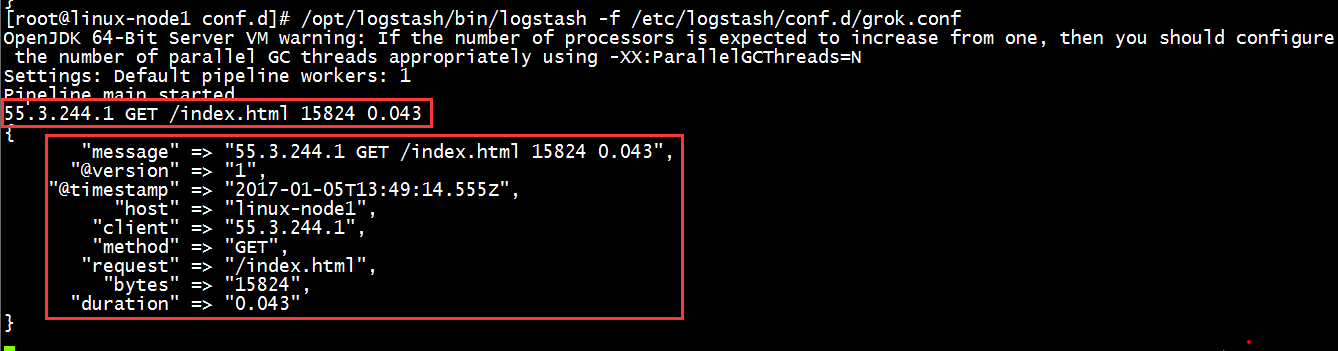

Using the grok plugin

1) Standard input and standard output

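    input {
        # read events typed on the console
        stdin {}
    }

    filter {
        grok {
            # split each line into five named fields
            match => { "message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }
        }
    }

    output {
        stdout {
            # pretty-print every event with all of its fields
            codec => rubydebug
        }
    }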

Save the configuration as /etc/logstash/conf.d/grok.conf and start Logstash:

    /opt/logstash/bin/logstash -f /etc/logstash/conf.d/grok.conf

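Then type this test line: 55.3.244.1 GET /index.html 15824 0.043

rubydebug prints the resulting event, which now also contains the fields client, method, request, bytes and duration.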
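For this test line, the grok pattern behaves roughly like the hand-expanded regular expression below. The Python sketch uses simplified stand-ins for IP, WORD, URIPATHPARAM and NUMBER (an assumption; the real definitions in logstash-patterns-core are stricter), and, like grok without a type suffix such as %{NUMBER:bytes:int}, it keeps every captured value as a string.

```python
import re

# Simplified stand-ins for the grok patterns in the match setting above
# (assumption: the real IP, URIPATHPARAM and NUMBER definitions are stricter).
LINE_RE = re.compile(
    r"(?P<client>\d{1,3}(?:\.\d{1,3}){3}) "  # %{IP:client} (IPv4 only here)
    r"(?P<method>\b\w+\b) "                  # %{WORD:method}
    r"(?P<request>/\S*) "                    # %{URIPATHPARAM:request}
    r"(?P<bytes>[+-]?\d+(?:\.\d+)?) "        # %{NUMBER:bytes}
    r"(?P<duration>[+-]?\d+(?:\.\d+)?)"      # %{NUMBER:duration}
)

event = {"message": "55.3.244.1 GET /index.html 15824 0.043"}
m = LINE_RE.search(event["message"])
if m:
    event.update(m.groupdict())  # captured values stay strings
print(event)
```

As with grok, the original message field is kept and the captured fields are added next to it.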

Collecting Apache logs

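This example collects Apache logs in the default format. If you have customized the Apache log format, you need to write your own regular expression (pattern) and match it with grok.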

1. Output to standard output

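    input {
        file {
            path => "/etc/httpd/logs/access_log"
            # on first run read the whole file, not only new lines (default is "end")
            start_position => "beginning"
        }
    }

    filter {
        grok {
            # predefined pattern for Apache's combined log format
            match => { "message" => "%{COMBINEDAPACHELOG}" }
        }
    }

    output {
        stdout {
            codec => rubydebug
        }
    }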

Save the configuration as /etc/logstash/conf.d/grok_apache.conf and start Logstash:

    /opt/logstash/bin/logstash -f /etc/logstash/conf.d/grok_apache.conf
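COMBINEDAPACHELOG extracts fields such as clientip, ident, auth, timestamp, verb, request, httpversion, response, bytes, referrer and agent. The Python sketch below approximates that pattern with a simplified regular expression (an assumption; the real definition uses stricter sub-patterns and also has a rawrequest fallback) and applies it to an illustrative line in Apache's combined format. As with grok's QS pattern, referrer and agent keep their surrounding quotes, and bytes is left unset when the log shows "-".

```python
import re

# Simplified approximation of COMBINEDAPACHELOG (assumption: the real pattern
# uses stricter sub-patterns and also has a rawrequest fallback).
COMBINED_RE = re.compile(
    r"(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) "
    r"\[(?P<timestamp>[^\]]+)\] "
    r'"(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r"(?P<response>\d{3}) (?:(?P<bytes>\d+)|-) "
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'  # QS-style: quotes are kept
)

# Illustrative access-log line in the combined format.
line = ('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] '
        '"GET /apache_pb.gif HTTP/1.0" 200 2326 '
        '"http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"')

fields = COMBINED_RE.search(line).groupdict()
print(fields["verb"], fields["request"], fields["response"])  # GET /apache_pb.gif 200
```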

2. Output with the elasticsearch plugin

    input {
        file {
            path => "/etc/httpd/logs/access_log"
            start_position => "beginning"
        }
    }

    filter {
        grok {
            match => { "message" => "%{COMBINEDAPACHELOG}" }
        }
    }

    output {
        elasticsearch {
            hosts => ["192.168.137.11:9200"]
            # one index per day, named from the event's @timestamp
            index => "apache-accesslog-%{+YYYY.MM.dd}"
        }
    }

Save this configuration (again as /etc/logstash/conf.d/grok_apache.conf) and start Logstash:

    /opt/logstash/bin/logstash -f /etc/logstash/conf.d/grok_apache.conf
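In the index setting, %{+YYYY.MM.dd} is filled in from the event's @timestamp, which Logstash keeps in UTC, so a new index starts at midnight UTC rather than at local midnight. (Without a date filter, @timestamp is the time Logstash read the line.) A minimal Python sketch of that naming rule; daily_index is a hypothetical helper, not a Logstash API:

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix, timestamp):
    """Approximate the index name 'prefix-%{+YYYY.MM.dd}' for one event.

    Hypothetical helper: the timestamp is converted to UTC first,
    mirroring how Logstash stores @timestamp.
    """
    utc = timestamp.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


# 07:30 on 2 March in UTC+8 is still 1 March in UTC.
ts = datetime(2016, 3, 2, 7, 30, tzinfo=timezone(timedelta(hours=8)))
print(daily_index("apache-accesslog", ts))  # apache-accesslog-2016.03.01
```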