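HDFS uses a master/slave architecture. The master node is called the NameNode because it runs the NameNode process. HDFS supports running more than one NameNode.

The slave nodes are called DataNodes because they run the DataNode process.

HDFS also includes a SecondaryNameNode process.

The HDFS architecture is shown in the figure below.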

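NameNode overview

NameNode: maintains the directory tree of the whole file system, the information about each file and directory, and the list of data blocks that belongs to each file. It also receives users' operation requests.

- File/directory information: basic attributes of a file or directory, such as owner, group, modification time, and file size.

- Block list for each file: a file that is too large is split when it is stored in the cluster. It is cut into blocks that are stored on different machines, so HDFS has to record how many blocks a file was split into and which machines hold them.

- Receiving user requests: when you run the hdfs command, the client must first talk to the NameNode before it can start working with the data.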
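The three kinds of metadata listed above can be pictured as one in-memory structure. The following is a minimal sketch, not the NameNode's real implementation: the class names `BlockInfo`, `FileMeta`, and `ToyNameNode` are hypothetical, and the host `bigdata04` is an assumed second replica holder chosen only for illustration. The other sample values come from the command output shown in these notes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class BlockInfo:
    block_id: int         # numeric block ID, e.g. 1073741828
    num_bytes: int        # bytes actually stored in this block
    datanodes: List[str]  # hosts that hold a replica of this block


@dataclass
class FileMeta:
    owner: str
    group: str
    mtime_ms: int
    blocks: List[BlockInfo] = field(default_factory=list)

    @property
    def size(self) -> int:
        # File size is the sum of the sizes of its blocks.
        return sum(block.num_bytes for block in self.blocks)


class ToyNameNode:
    """Hypothetical stand-in for the NameNode's metadata store."""

    def __init__(self) -> None:
        self.namespace: Dict[str, FileMeta] = {}  # path -> metadata

    def add_file(self, path: str, meta: FileMeta) -> None:
        self.namespace[path] = meta

    def locate(self, path: str) -> List[Tuple[int, List[str]]]:
        # A client asks this first, then reads the blocks from the DataNodes.
        return [(b.block_id, b.datanodes) for b in self.namespace[path].blocks]


namenode = ToyNameNode()
namenode.add_file(
    "/README.txt",
    FileMeta(
        owner="root",
        group="supergroup",
        mtime_ms=1617416075930,
        # "bigdata04" is an assumed host name, not taken from the notes.
        blocks=[BlockInfo(1073741828, 1361, ["bigdata03", "bigdata04"])],
    ),
)
print(namenode.namespace["/README.txt"].size)
print(namenode.locate("/README.txt"))
```

The point of the sketch is the lookup order: the client resolves a path to block IDs and hosts through the metadata store, and only then touches the machines that hold the data.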

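Files kept by the NameNode

The Hadoop distribution ships an hdfs-default.xml file (inside hadoop-3.2.0/share/hadoop/hdfs/hadoop-hdfs-3.2.0.jar). hdfs-site.xml extends these defaults: if a property is set in hdfs-site.xml, the hdfs-site.xml value takes precedence.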

<property>

<name>dfs.namenode.name.dir</name>

<value>file://${hadoop.tmp.dir}/dfs/name</value>

<description>Determines where on the local filesystem the DFS name node

should store the name table(fsimage). If this is a comma-delimited list

of directories then the name table is replicated in all of the

directories, for redundancy. </description>

</property>

<!-- dataNode -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file://${hadoop.tmp.dir}/dfs/data</value>

<description>Determines where on the local filesystem an DFS data node

should store its blocks. If this is a comma-delimited

list of directories, then data will be stored in all named

directories, typically on different devices. The directories should be tagged

with corresponding storage types ([SSD]/[DISK]/[ARCHIVE]/[RAM_DISK]) for HDFS

storage policies. The default storage type will be DISK if the directory does

not have a storage type tagged explicitly. Directories that do not exist will

be created if local filesystem permission allows.

</description>

</property>
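The precedence rule between hdfs-default.xml and hdfs-site.xml amounts to "load the defaults, then let the site file overwrite them". The sketch below illustrates only that rule, using two small inline XML strings in place of the real files. The function names `load_properties` and `effective_config` are hypothetical, and Hadoop's own configuration loading does more than this (for example, it expands variables such as `${hadoop.tmp.dir}`, which this sketch leaves untouched).

```python
import xml.etree.ElementTree as ET

# Trimmed stand-in for hdfs-default.xml (only two properties).
DEFAULT_XML = """<configuration>
  <property><name>dfs.replication</name><value>3</value></property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file://${hadoop.tmp.dir}/dfs/name</value>
  </property>
</configuration>"""

# Stand-in for hdfs-site.xml: overrides a single property.
SITE_XML = """<configuration>
  <property><name>dfs.replication</name><value>2</value></property>
</configuration>"""


def load_properties(xml_text):
    """Return {name: value} for every <property> element."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def effective_config(default_xml, site_xml):
    """Defaults first, then site values overwrite matching names."""
    merged = load_properties(default_xml)
    merged.update(load_properties(site_xml))
    return merged


config = effective_config(DEFAULT_XML, SITE_XML)
print(config["dfs.replication"])        # 2, taken from the site file
print(config["dfs.namenode.name.dir"])  # unchanged default
```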

NameNode-related information is stored under the file://${hadoop.tmp.dir}/dfs/name directory.

[root@bigdata02 name]# cd current/


[root@bigdata02 current]# ls

edits_0000000000000000001-0000000000000000002

edits_0000000000000000003-0000000000000000004

edits_0000000000000000005-0000000000000000005

edits_0000000000000000006-0000000000000000007

edits_0000000000000000008-0000000000000000009

edits_0000000000000000010-0000000000000000011

edits_0000000000000000012-0000000000000000013

edits_0000000000000000014-0000000000000000015

edits_0000000000000000016-0000000000000000017

edits_0000000000000000018-0000000000000000019

edits_0000000000000000020-0000000000000000033

edits_0000000000000000034-0000000000000000035

edits_0000000000000000036-0000000000000000037

edits_0000000000000000038-0000000000000000039

edits_0000000000000000040-0000000000000000041

edits_0000000000000000042-0000000000000000043

edits_0000000000000000044-0000000000000000045

edits_0000000000000000046-0000000000000000047

edits_0000000000000000048-0000000000000000049

edits_0000000000000000050-0000000000000000051

edits_0000000000000000052-0000000000000000053

edits_0000000000000000054-0000000000000000055

edits_0000000000000000056-0000000000000000057

edits_inprogress_0000000000000000058

fsimage_0000000000000000000

fsimage_0000000000000000000.md5

seen_txid

VERSION

[root@bigdata02 current]# pwd

/data/hadoop_repo/dfs/name/current
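The names in this listing follow a pattern: each finalized edits file carries the first and last transaction ID it contains, and `edits_inprogress_` carries the ID at which the currently open segment starts. As a rough illustration, the sketch below parses a few of the names above and checks that consecutive segments leave no gap. The helpers `parse_segments` and `find_gaps` are hypothetical names, not Hadoop tools.

```python
import re

SEGMENT = re.compile(r"^edits_(\d+)-(\d+)$")
IN_PROGRESS = re.compile(r"^edits_inprogress_(\d+)$")

# A few names copied from the listing above.
names = [
    "edits_0000000000000000052-0000000000000000053",
    "edits_0000000000000000054-0000000000000000055",
    "edits_0000000000000000056-0000000000000000057",
    "edits_inprogress_0000000000000000058",
    "fsimage_0000000000000000000",
    "seen_txid",
    "VERSION",
]


def parse_segments(file_names):
    """Return (sorted finalized (first, last) ranges, in-progress start or None)."""
    finalized = []
    in_progress_start = None
    for name in file_names:
        match = SEGMENT.match(name)
        if match:
            finalized.append((int(match.group(1)), int(match.group(2))))
            continue
        match = IN_PROGRESS.match(name)
        if match:
            in_progress_start = int(match.group(1))
    return sorted(finalized), in_progress_start


def find_gaps(finalized):
    """Return the transaction ID ranges missing between consecutive segments."""
    gaps = []
    for (_, prev_last), (next_first, _) in zip(finalized, finalized[1:]):
        if next_first != prev_last + 1:
            gaps.append((prev_last + 1, next_first - 1))
    return gaps


finalized, in_progress_start = parse_segments(names)
print(finalized)
print(in_progress_start)
print(find_gaps(finalized))
```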

For example, to view fsimage_0000000000000000000 (-i specifies the input file, -o the output file):

[root@bigdata02 current]# hdfs oiv -p XML -i fsimage_0000000000000000000 -o fsimage_0.xml

2021-04-03 10:10:18,910 INFO offlineImageViewer.FSImageHandler: Loading 2 strings

2021-04-03 10:10:18,959 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

2021-04-03 10:10:18,959 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

2021-04-03 10:10:18,959 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

2021-04-03 10:10:18,959 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

``` xml

<?xml version="1.0"?>

<fsimage><version><layoutVersion>-65</layoutVersion><onDiskVersion>1</onDiskVersion><oivRevision>e97acb3bd8f3befd27418996fa5d4b50bf2e17bf</oivRevision></version>

<NameSection><namespaceId>425898831</namespaceId><genstampV1>1000</genstampV1><genstampV2>1000</genstampV2><genstampV1Limit>0</genstampV1Limit><lastAllocatedBlockId>1073741824</lastAllocatedBlockId><txid>0</txid></NameSection>

<ErasureCodingSection>

<erasureCodingPolicy>

<policyId>5</policyId><policyName>RS-10-4-1024k</policyName><cellSize>1048576</cellSize><policyState>DISABLED</policyState><ecSchema>

<codecName>rs</codecName><dataUnits>10</dataUnits><parityUnits>4</parityUnits></ecSchema>

</erasureCodingPolicy>

<erasureCodingPolicy>

<policyId>2</policyId><policyName>RS-3-2-1024k</policyName><cellSize>1048576</cellSize><policyState>DISABLED</policyState><ecSchema>

<codecName>rs</codecName><dataUnits>3</dataUnits><parityUnits>2</parityUnits></ecSchema>

</erasureCodingPolicy>

<erasureCodingPolicy>

<policyId>1</policyId><policyName>RS-6-3-1024k</policyName><cellSize>1048576</cellSize><policyState>ENABLED</policyState><ecSchema>

<codecName>rs</codecName><dataUnits>6</dataUnits><parityUnits>3</parityUnits></ecSchema>

</erasureCodingPolicy>

<erasureCodingPolicy>

<policyId>3</policyId><policyName>RS-LEGACY-6-3-1024k</policyName><cellSize>1048576</cellSize><policyState>DISABLED</policyState><ecSchema>

<codecName>rs-legacy</codecName><dataUnits>6</dataUnits><parityUnits>3</parityUnits></ecSchema>

</erasureCodingPolicy>

<erasureCodingPolicy>

<policyId>4</policyId><policyName>XOR-2-1-1024k</policyName><cellSize>1048576</cellSize><policyState>DISABLED</policyState><ecSchema>

<codecName>xor</codecName><dataUnits>2</dataUnits><parityUnits>1</parityUnits></ecSchema>

</erasureCodingPolicy>

</ErasureCodingSection>

<INodeSection><lastInodeId>16385</lastInodeId><numInodes>1</numInodes><inode><id>16385</id><type>DIRECTORY</type><name></name><mtime>0</mtime><permission>root:supergroup:0755</permission><nsquota>9223372036854775807</nsquota><dsquota>-1</dsquota></inode>

</INodeSection>

<INodeReferenceSection></INodeReferenceSection><SnapshotSection><snapshotCounter>0</snapshotCounter><numSnapshots>0</numSnapshots></SnapshotSection>

<INodeDirectorySection></INodeDirectorySection>

<FileUnderConstructionSection></FileUnderConstructionSection>

<SecretManagerSection><currentId>0</currentId><tokenSequenceNumber>0</tokenSequenceNumber><numDelegationKeys>0</numDelegationKeys><numTokens>0</numTokens></SecretManagerSection><CacheManagerSection><nextDirectiveId>1</nextDirectiveId><numDirectives>0</numDirectives><numPools>0</numPools></CacheManagerSection>

</fsimage>

```

View an edits file

[root@bigdata02 current]# hdfs oev -i edits_0000000000000000074-0000000000000000081 -o edits.xml

[root@bigdata02 current]# cat edits.xml

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<EDITS>

<EDITS_VERSION>-65</EDITS_VERSION>

<RECORD>

<OPCODE>OP_START_LOG_SEGMENT</OPCODE>

<DATA>

<TXID>74</TXID>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_ADD</OPCODE>

<DATA>

<TXID>75</TXID>

<LENGTH>0</LENGTH>

<INODEID>16387</INODEID>

<PATH>/README.txt._COPYING_</PATH>

<REPLICATION>2</REPLICATION>

<MTIME>1617416075722</MTIME>

<ATIME>1617416075722</ATIME>

<BLOCKSIZE>134217728</BLOCKSIZE>

<CLIENT_NAME>DFSClient_NONMAPREDUCE_-138837667_1</CLIENT_NAME>

<CLIENT_MACHINE>192.168.21.102</CLIENT_MACHINE>

<OVERWRITE>true</OVERWRITE>

<PERMISSION_STATUS>

<USERNAME>root</USERNAME>

<GROUPNAME>supergroup</GROUPNAME>

<MODE>420</MODE>

</PERMISSION_STATUS>

<ERASURE_CODING_POLICY_ID>0</ERASURE_CODING_POLICY_ID>

<RPC_CLIENTID>c00ba5aa-d2b7-431c-914d-2a4c23209297</RPC_CLIENTID>

<RPC_CALLID>3</RPC_CALLID>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_ALLOCATE_BLOCK_ID</OPCODE>

<DATA>

<TXID>76</TXID>

<BLOCK_ID>1073741828</BLOCK_ID>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_SET_GENSTAMP_V2</OPCODE>

<DATA>

<TXID>77</TXID>

<GENSTAMPV2>1004</GENSTAMPV2>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_ADD_BLOCK</OPCODE>

<DATA>

<TXID>78</TXID>

<PATH>/README.txt._COPYING_</PATH>

<BLOCK>

<BLOCK_ID>1073741828</BLOCK_ID>

<NUM_BYTES>0</NUM_BYTES>

<GENSTAMP>1004</GENSTAMP>

</BLOCK>

<RPC_CLIENTID/>

<RPC_CALLID>-2</RPC_CALLID>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_CLOSE</OPCODE>

<DATA>

<TXID>79</TXID>

<LENGTH>0</LENGTH>

<INODEID>0</INODEID>

<PATH>/README.txt._COPYING_</PATH>

<REPLICATION>2</REPLICATION>

<MTIME>1617416075930</MTIME>

<ATIME>1617416075722</ATIME>

<BLOCKSIZE>134217728</BLOCKSIZE>

<CLIENT_NAME/>

<CLIENT_MACHINE/>

<OVERWRITE>false</OVERWRITE>

<BLOCK>

<BLOCK_ID>1073741828</BLOCK_ID>

<NUM_BYTES>1361</NUM_BYTES>

<GENSTAMP>1004</GENSTAMP>

</BLOCK>

<PERMISSION_STATUS>

<USERNAME>root</USERNAME>

<GROUPNAME>supergroup</GROUPNAME>

<MODE>420</MODE>

</PERMISSION_STATUS>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_RENAME_OLD</OPCODE>

<DATA>

<TXID>80</TXID>

<LENGTH>0</LENGTH>

<SRC>/README.txt._COPYING_</SRC>

<DST>/README.txt</DST>

<TIMESTAMP>1617416075939</TIMESTAMP>

<RPC_CLIENTID>c00ba5aa-d2b7-431c-914d-2a4c23209297</RPC_CLIENTID>

<RPC_CALLID>8</RPC_CALLID>

</DATA>

</RECORD>

<RECORD>

<OPCODE>OP_END_LOG_SEGMENT</OPCODE>

<DATA>

<TXID>81</TXID>

</DATA>

</RECORD>

</EDITS>
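The XML produced by `hdfs oev` is easier to read as one line per transaction. The sketch below is an illustration with a shortened copy of the records above embedded as a string. The function `summarize` is a hypothetical helper. It also prints `MODE` in octal, on the assumption that the `420` in the dump is the decimal form of the permission bits (420 decimal is 644 octal).

```python
import xml.etree.ElementTree as ET

# Shortened copy of the edits.xml shown above (most fields omitted).
EDITS_XML = """<EDITS>
  <EDITS_VERSION>-65</EDITS_VERSION>
  <RECORD><OPCODE>OP_START_LOG_SEGMENT</OPCODE><DATA><TXID>74</TXID></DATA></RECORD>
  <RECORD><OPCODE>OP_ADD</OPCODE><DATA><TXID>75</TXID>
    <PATH>/README.txt._COPYING_</PATH>
    <PERMISSION_STATUS><MODE>420</MODE></PERMISSION_STATUS></DATA></RECORD>
  <RECORD><OPCODE>OP_RENAME_OLD</OPCODE><DATA><TXID>80</TXID>
    <SRC>/README.txt._COPYING_</SRC><DST>/README.txt</DST></DATA></RECORD>
  <RECORD><OPCODE>OP_END_LOG_SEGMENT</OPCODE><DATA><TXID>81</TXID></DATA></RECORD>
</EDITS>"""


def summarize(xml_text):
    """Return one (txid, opcode, target path, octal mode) tuple per RECORD."""
    rows = []
    for record in ET.fromstring(xml_text).iter("RECORD"):
        data = record.find("DATA")
        txid = int(data.findtext("TXID"))
        opcode = record.findtext("OPCODE")
        # Use PATH when present, otherwise the rename destination.
        target = data.findtext("PATH") or data.findtext("DST") or ""
        mode = data.findtext("PERMISSION_STATUS/MODE")
        mode_octal = format(int(mode), "o") if mode else ""
        rows.append((txid, opcode, target, mode_octal))
    return rows


rows = summarize(EDITS_XML)
for row in rows:
    print(row)
```

Read in order, the full dump shows the steps behind uploading README.txt: the file is created under a temporary `._COPYING_` name, a block is allocated and added, the file is closed at 1361 bytes, and it is finally renamed to `/README.txt`.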

View seen_txid

[root@bigdata02 current]# cat seen_txid

100

View VERSION (its contents change after the NameNode is formatted)

[root@bigdata02 current]# cat VERSION

#Sat Mar 27 14:16:52 CST 2021

namespaceID=425898831

clusterID=CID-eb08d57b-dd80-4f37-8941-690b25f34c13

cTime=1616825812250

storageType=NAME_NODE

blockpoolID=BP-694070002-192.168.21.102-1616825812250

layoutVersion=-65

fsimage

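fsimage: the metadata image file. It stores the metadata held in NameNode memory at a given point in time, much like a periodic snapshot. [Metadata here means the file directory tree, the file/directory information, and the block list for each file.]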

edits

The operation log (transaction log). It records, in real time, every user operation that changes the file system.

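seen_txid

seen_txid: a file that stores a transaction ID. It is 0 right after a format, and it corresponds to the trailing number of the NameNode's edits files. When the NameNode restarts, it replays the edits files in order, from edits_0000001~ up to the number recorded in seen_txid. If the files that correspond to seen_txid cannot be loaded, the NameNode process does not finish starting, which protects data consistency.

VERSION

VERSION: stores the cluster's version information.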
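VERSION is a plain `key=value` file, so its fields are easy to inspect programmatically. The sketch below parses the contents shown earlier and cross-checks them against values visible elsewhere in these notes (the `namespaceId` and `layoutVersion` in the fsimage XML). `parse_version` is a hypothetical helper, and the observation that `blockpoolID` ends with the `cTime` value is read off this one sample, not a documented guarantee.

```python
VERSION_TEXT = """#Sat Mar 27 14:16:52 CST 2021
namespaceID=425898831
clusterID=CID-eb08d57b-dd80-4f37-8941-690b25f34c13
cTime=1616825812250
storageType=NAME_NODE
blockpoolID=BP-694070002-192.168.21.102-1616825812250
layoutVersion=-65
"""


def parse_version(text):
    """Parse key=value lines, skipping blanks and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key] = value
    return props


props = parse_version(VERSION_TEXT)

# Values copied from the fsimage XML dump shown earlier.
fsimage_namespace_id = "425898831"
fsimage_layout_version = "-65"

print(props["namespaceID"] == fsimage_namespace_id)
print(props["layoutVersion"] == fsimage_layout_version)
print(props["blockpoolID"].endswith(props["cTime"]))
```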

SecondaryNameNode

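The SecondaryNameNode is mainly responsible for periodically merging the contents of the edits files into the fsimage. This merge is called a checkpoint. During the merge, the contents of the edits files are converted and the result is saved to a new fsimage file.

Note: in a NameNode HA architecture there is no SecondaryNameNode process. The merge is performed by the standby NameNode instead.

The SecondaryNameNode is therefore not mandatory in a Hadoop cluster.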
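The checkpoint idea (old fsimage plus edits gives new fsimage) can be shown with a toy model. This is a simplified sketch under stated assumptions, not Hadoop's actual merge logic: the fsimage is modeled as a dictionary, the edits as a list of small operation records, and the operation names `add`, `close`, `rename`, and `delete` are hypothetical simplifications of the real opcodes.

```python
def apply_edit(namespace, op):
    """Apply one logged operation to the in-memory namespace."""
    kind = op["op"]
    if kind == "add":
        namespace[op["path"]] = {"length": 0}
    elif kind == "close":
        namespace[op["path"]]["length"] = op["length"]
    elif kind == "rename":
        namespace[op["dst"]] = namespace.pop(op["src"])
    elif kind == "delete":
        namespace.pop(op["path"], None)
    else:
        raise ValueError(f"unknown operation: {kind}")


def checkpoint(fsimage, edits):
    """Replay edits newer than the image and return a new image."""
    namespace = {path: dict(meta) for path, meta in fsimage["namespace"].items()}
    last_txid = fsimage["txid"]
    for op in sorted(edits, key=lambda o: o["txid"]):
        if op["txid"] <= last_txid:
            continue  # already reflected in the old image
        apply_edit(namespace, op)
        last_txid = op["txid"]
    return {"txid": last_txid, "namespace": namespace}


old_image = {"txid": 0, "namespace": {}}
edits = [
    {"txid": 75, "op": "add", "path": "/README.txt._COPYING_"},
    {"txid": 79, "op": "close", "path": "/README.txt._COPYING_", "length": 1361},
    {"txid": 80, "op": "rename", "src": "/README.txt._COPYING_", "dst": "/README.txt"},
]

new_image = checkpoint(old_image, edits)
print(new_image)
# {'txid': 80, 'namespace': {'/README.txt': {'length': 1361}}}
```

After a checkpoint, only the edits written later than the new image's transaction ID still need to be replayed at startup, which is why regular merging keeps restarts short.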

DataNode overview

The DataNode provides the storage service for the actual file data.

View the DataNode data directory

<property>

<name>dfs.datanode.data.dir</name>

<value>file://${hadoop.tmp.dir}/dfs/data</value>

<description>Determines where on the local filesystem an DFS data node

should store its blocks. If this is a comma-delimited

list of directories, then data will be stored in all named

directories, typically on different devices. The directories should be tagged

with corresponding storage types ([SSD]/[DISK]/[ARCHIVE]/[RAM_DISK]) for HDFS

storage policies. The default storage type will be DISK if the directory does

not have a storage type tagged explicitly. Directories that do not exist will

be created if local filesystem permission allows.

</description>

</property>

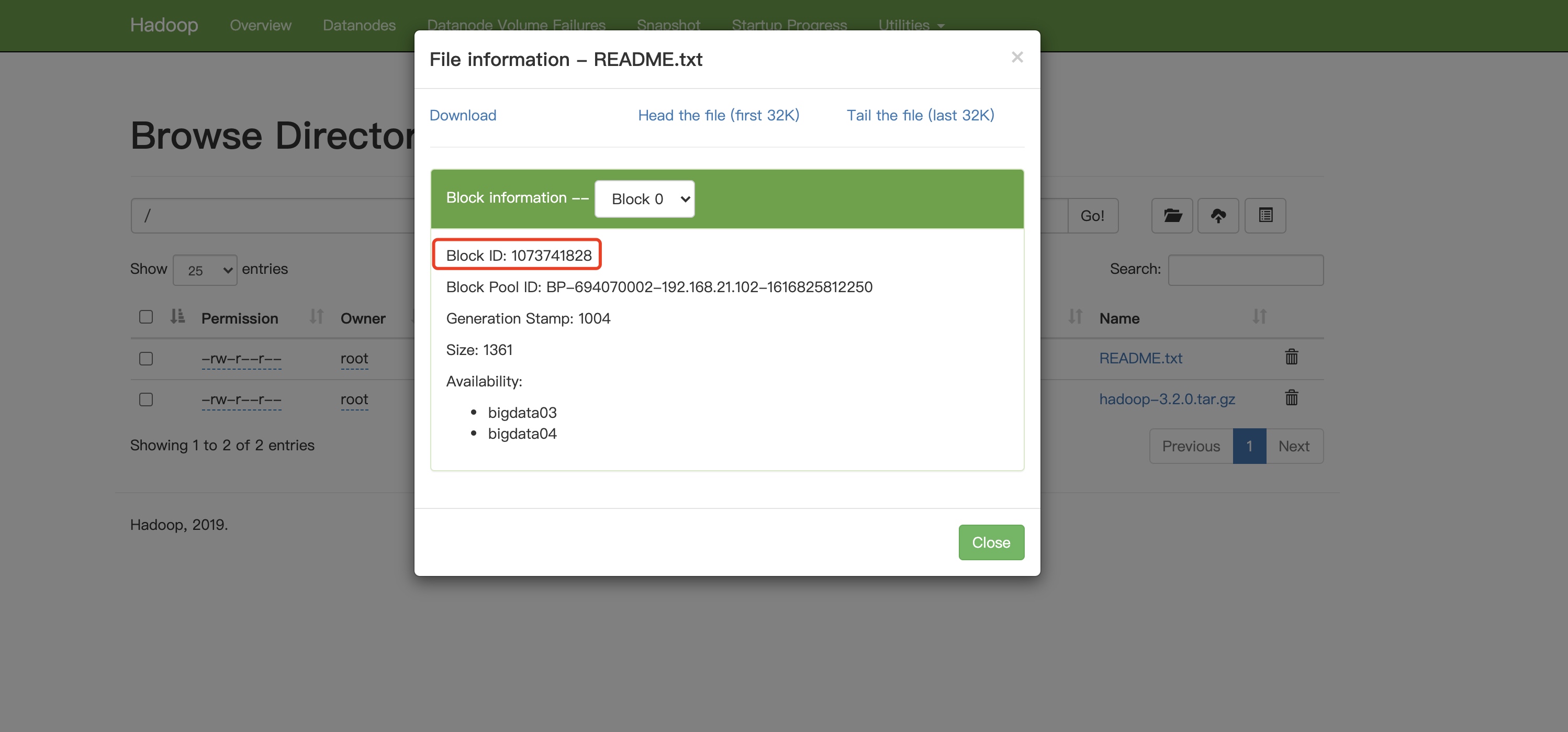

View a block file

[root@bigdata03 subdir0]# cat blk_1073741828

For the latest information about Hadoop, please visit our website at:

http://hadoop.apache.org/

and our wiki, at:

http://wiki.apache.org/hadoop/

This distribution includes cryptographic software. The country in

which you currently reside may have restrictions on the import,

possession, use, and/or re-export to another country, of

encryption software. BEFORE using any encryption software, please

check your country's laws, regulations and policies concerning the

import, possession, or use, and re-export of encryption software, to

see if this is permitted. See <http://www.wassenaar.org/> for more

information.

The U.S. Government Department of Commerce, Bureau of Industry and

Security (BIS), has classified this software as Export Commodity

Control Number (ECCN) 5D002.C.1, which includes information security

software using or performing cryptographic functions with asymmetric

algorithms. The form and manner of this Apache Software Foundation

distribution makes it eligible for export under the License Exception

ENC Technology Software Unrestricted (TSU) exception (see the BIS

Export Administration Regulations, Section 740.13) for both object

code and source code.

The following provides more details on the included cryptographic

software:

Hadoop Core uses the SSL libraries from the Jetty project written

by mortbay.org.

Block

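HDFS splits a file sequentially into fixed-size pieces and numbers them. Each piece is called a block. The default HDFS block size is 128 MB.

The block is the basic unit in which HDFS reads and writes data.

If a file is smaller than one block, it does not occupy the full block's storage space.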

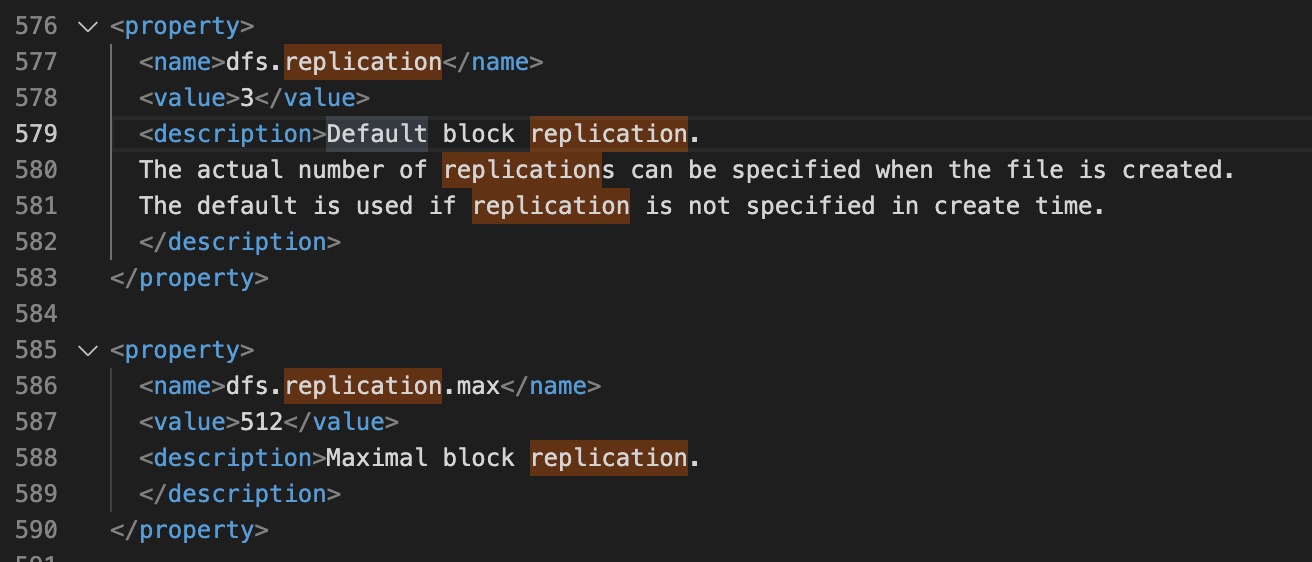
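The splitting rule is plain arithmetic, sketched below. The function `split_into_blocks` is a hypothetical helper that only computes offsets and lengths; it does not talk to HDFS. The 128 MB default matches the `BLOCKSIZE` value 134217728 in the edits dump above.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 134217728 bytes


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return a list of (block index, offset, length) tuples for a file."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    blocks = []
    offset = 0
    while offset < file_size:
        # Every block is full except possibly the last one.
        length = min(block_size, file_size - offset)
        blocks.append((len(blocks), offset, length))
        offset += length
    return blocks


# The 1361-byte README.txt fits in one block and uses only 1361 bytes of it.
print(split_into_blocks(1361))
# [(0, 0, 1361)]

# A 300 MB file needs two full blocks plus a 44 MB remainder.
print([length for _, _, length in split_into_blocks(300 * 1024 * 1024)])
# [134217728, 134217728, 46137344]
```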

Replication factor

The default replication factor is 3. Replicas keep the data safe.

It is configured in hdfs-site.xml through the dfs.replication property:

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>bigdata02:50090</value>

</property>

</configuration>

In hdfs-default.xml, by contrast, you can see that the default replication factor is 3.
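To get a feel for what the replication factor costs and buys, the following back-of-the-envelope sketch computes the number of block replicas and the raw bytes a file consumes across the cluster. `replica_footprint` is a hypothetical helper and the figures are simple arithmetic, not measurements from a cluster.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # default block size in bytes


def replica_footprint(file_size, replication, block_size=BLOCK_SIZE):
    """Estimate how a file of file_size bytes is spread across the cluster."""
    blocks = math.ceil(file_size / block_size) if file_size else 0
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        "raw_bytes": file_size * replication,
        # Copies that can be lost while at least one replica survives.
        "replica_losses_tolerated": replication - 1,
    }


# README.txt (1361 bytes) with dfs.replication=2, as configured above.
print(replica_footprint(1361, 2))

# A 300 MB file with the default replication factor of 3.
print(replica_footprint(300 * 1024 * 1024, 3))
```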