概述

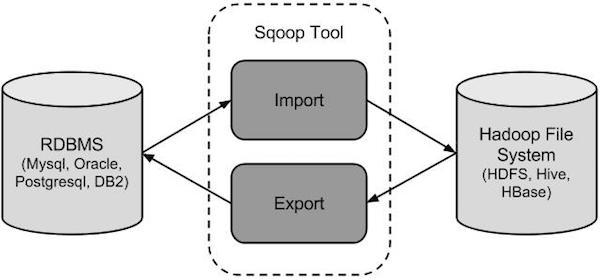

sqoop是apache旗下hadoop和关系型数据库之间传送数据的工具.

核心功能有两个:

导入、迁入

导出、迁出

导入数据:MySQL、Oracle、Sql Server(sqoop2系列支持)导入数据到HDFS、HIVE、HBASE等数据存储系统

导出数据:从Hadoop的文件系统中导出数据到关系型数据库MySQL等。

Sqoop和Hive的区别

sqoop:本质就是迁移数据,迁移方式:把sqoop的迁移命令转换为MR程序.

hive:本质就是执行计算,依赖于HDFS存储数据,把Hive SQL转换为MR程序

工作机制

将导出/导入命令翻译为MapReduce程序来实现,在翻译出的MapReduce中主要是对InputFormat和OutputFormat进行定制。

安装

安装概述

sqoop就是一个工具,只需要在一个节点上进行安装即可。

解压

tar -zxvf sqoop-1.99.7.tar.gz -C /usr/local/

修改配置文件

(1) sqoop-env-template.sh修改为sqoop-env.sh

mv sqoop-env-template.sh sqoop-env.sh

(2) 修改sqoop-env.sh

export HADOOP_COMMON_HOME=/data/soft/hadoop-3.2.0

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/data/soft/hadoop-3.2.0

#set the path to where bin/hbase is available

export HBASE_HOME=/usr/local/hbase-2.3.4

#Set the path to where bin/hive is available

export HIVE_HOME=/data/soft/hive-3.1.2

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/usr/local/zookeeper-3.4.6/conf

(3) 加入myql驱动包到sqoop/lib下

cp /data/soft/hive-3.12/lib/mysql-connector-java-5.1.41.jar /data/soft/sqoop-1.4.7/lib/

(4) 配置环境变量

export SQOOP_HOME=/data/soft/sqoop-1.4.7

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$SQOOP_HOME/bin:$PATH

验证

sqoop-version 或 sqoop version

[root@bigdata02 bin]# sqoop version

2021-05-28 11:40:58,609 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

Q & A

(1). Sqoop - 无法找到或加载主类org.apache.sqoop.Sqoop(Sqoop - Could not find or load main class org.apache.sqoop.Sqoop)

A: 在bin中查看sqoop脚本会发现sqoop启动加载主类的地址

exec ${HADOOP_COMMON_HOME}/bin/hadoop

在网上下载sqoop.1.4.7.jar安装包,然后将上述修改,注意路径相匹配。

exec ${HADOOP_COMMON_HOME}/bin/hadoop jar ${SQOOP_HOME}/lib/sqoop-1.4.7.jar org.apache.sqoop.Sqoop "$@"

(2). 启动sqoop version时会提示一堆warning信息,please set $HCAT_HOME to the root of your HCatalog installation A: 在$SQOOP_HOME/bin目录下面修改configure-sqoop文件,注释掉以下内容:

#if [ -z "${HCAT_HOME}" ]; then

# if [ -d "/usr/lib/hive-hcatalog" ]; then

# HCAT_HOME=/usr/lib/hive-hcatalog

# elif [ -d "/usr/lib/hcatalog" ]; then

# HCAT_HOME=/usr/lib/hcatalog

# else

# HCAT_HOME=${SQOOP_HOME}/../hive-hcatalog

# if [ ! -d ${HCAT_HOME} ]; then

# HCAT_HOME=${SQOOP_HOME}/../hcatalog

# fi

# fi

#fi

#if [ -z "${ACCUMULO_HOME}" ]; then

# if [ -d "/usr/lib/accumulo" ]; then

# ACCUMULO_HOME=/usr/lib/accumulo

# else

# ACCUMULO_HOME=${SQOOP_HOME}/../accumulo

# fi

#fi

## Moved to be a runtime check in sqoop.

#if [ ! -d "${HCAT_HOME}" ]; then

# echo "Warning: $HCAT_HOME does not exist! HCatalog jobs will fail."

# echo 'Please set $HCAT_HOME to the root of your HCatalog installation.'

#fi

#if [ ! -d "${ACCUMULO_HOME}" ]; then

# echo "Warning: $ACCUMULO_HOME does not exist! Accumulo imports will fail."

# echo 'Please set $ACCUMULO_HOME to the root of your Accumulo installation.'

#fi

随后启动sqoop,warning信息消失。

实例

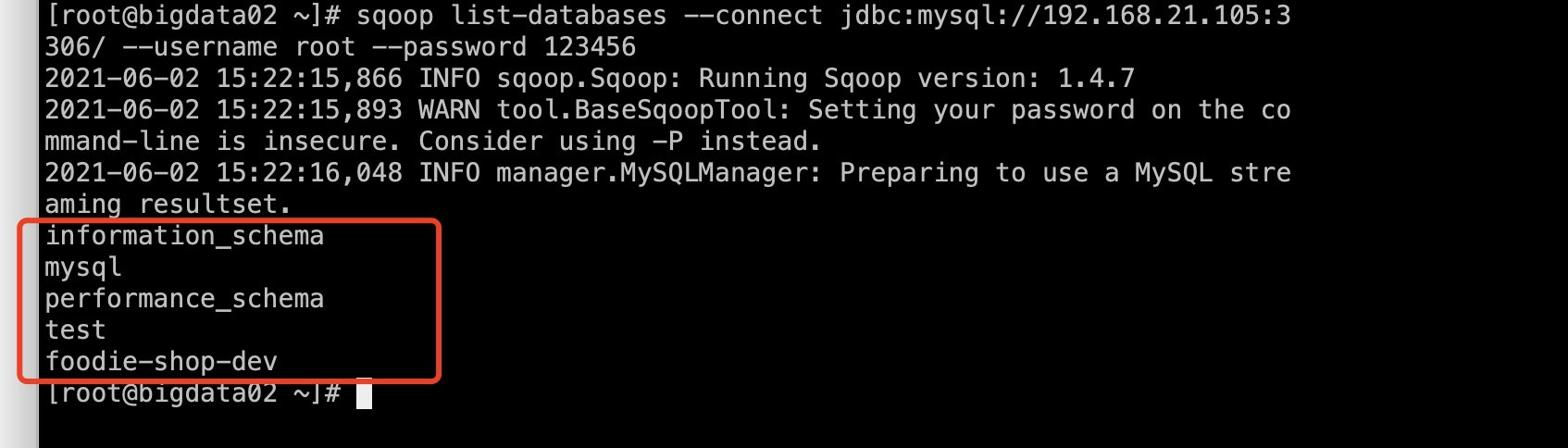

列出连接的MySQL有哪些数据库

sqoop list-databases --connect jdbc:mysql://192.168.21.105:3306/ --username root --password 123456

2021-06-02 15:22:15,866 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

2021-06-02 15:22:15,893 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

2021-06-02 15:22:16,048 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

mysql

performance_schema

test

foodie-shop-dev

列出MySQL的某个数据库有哪些表

sqoop list-tables --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456

2021-06-02 15:23:24,143 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

2021-06-02 15:23:24,167 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

2021-06-02 15:23:24,322 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

carousel

category

items

items_comments

items_img

items_param

items_spec

order_items

order_status

orders

stu

user_address

users

创建一张跟测试表一样的hive table

sqoop create-hive-table --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users --hive-table users_ht

Sqoop的数据导入

导入工具,导入单个表从RDBMS到HDFS,表中的每一行被视为HDFS的记录,所有记录都存储为文本文件的文本数据(或者Avro、Sequence文件等二进制数据)。

1.从RDBMS导入到HDFS中

sqoop import [GENERIC-ARGS] [TOOL-ARGS]

举例,

- 将batch_insert库中的web_user_info表同步到HDFS中

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users -m 1

默认存储路径为/user/用户名/表名

- 查看同步到HDFS中的文件

hdfs dfs -cat /user/root/users/part-m-00000

[root@bigdata02 ~]# hdfs dfs -cat /user/root/users/part-m-00000

1908017YR51G1XWH,imooc,Qpf0SxOVUjUkWySXOZ16kw==,imooc,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-14 23:44:30.0,2019-08-14 23:44:30.0

190815GTKCBSS7MW,test,Qpf0SxOVUjUkWySXOZ16kw==,test,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-15 22:11:58.0,2019-08-15 22:11:58.0

190816HH9RDPD6Y8,abc,Qpf0SxOVUjUkWySXOZ16kw==,abc,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-16 23:14:12.0,2019-08-16 23:14:12.0

1908189H7TNWDTXP,imooc123,Qpf0SxOVUjUkWySXOZ16kw==,imooc123,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-18 13:25:30.0,2019-08-18 13:25:30.0

190818A4HC2BPDP0,test123,Qpf0SxOVUjUkWySXOZ16kw==,test123,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-18 14:14:28.0,2019-08-18 14:14:28.0

190818AWZ22872FW,1imooc,Qpf0SxOVUjUkWySXOZ16kw==,1imooc,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-18 15:15:39.0,2019-08-18 15:15:39.0

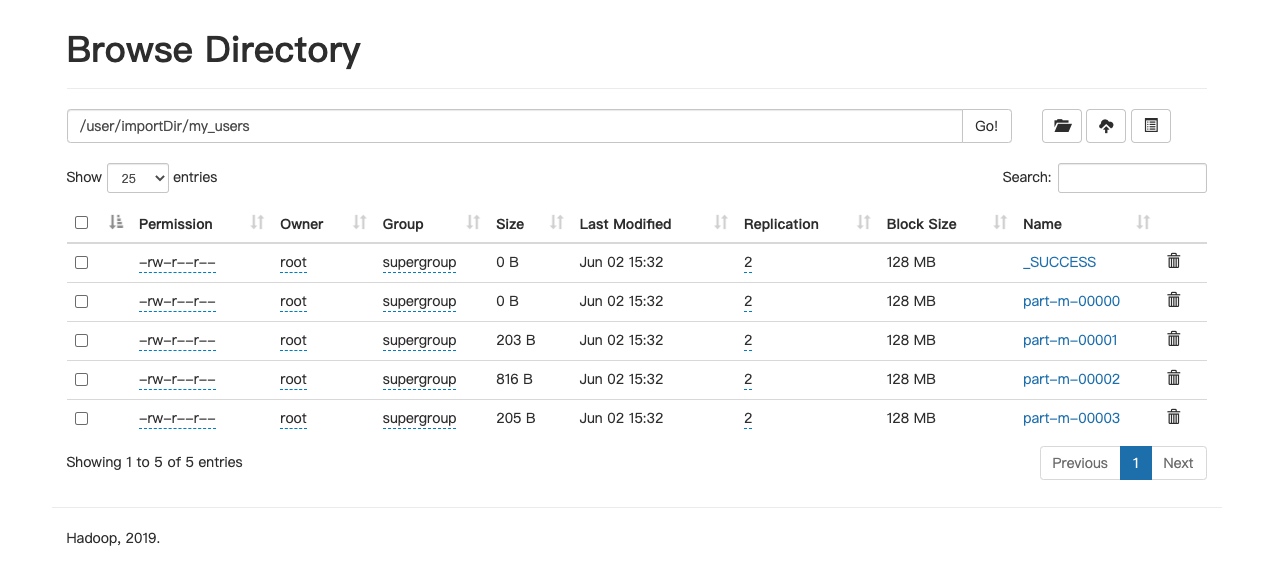

- 指定上传到HDFS上的目录和分隔符

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users --target-dir /user/importDir/my_users --fields-terminated-by ' ' -m 2

Caused by: Generating splits for a textual index column allowed only in case of "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" property passed as a parameter

at org.apache.sqoop.mapreduce.db.TextSplitter.split(TextSplitter.java:67)

at org.apache.sqoop.mapreduce.db.DataDrivenDBInputFormat.getSplits(DataDrivenDBInputFormat.java:201)

... 29 more

主要问题是“--split-by id”这个参数指定的id是一个文本格式,所以需要在命令中加入选项"-Dorg.apache.sqoop.splitter.allow_text_splitter=true",补齐命令:

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users --target-dir /user/importDir/my_users --fields-terminated-by ' ' -m 2

- 查看上传到HDFS数据

- 查询筛选条件下的数据

- 准备查询数据

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --where "username = 'imooc' " --table users -m 1

验证上传的数据仅where筛选条件后的数据。

[root@bigdata02 hadoop]# hdfs dfs -cat /user/root/users/part-m-00000

1908017YR51G1XWH,imooc,Qpf0SxOVUjUkWySXOZ16kw==,imooc,null,http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png,null,null,2,1900-01-01,2019-08-14 23:44:30.0,2019-08-14 23:44:30.0

- 查看指定列

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --target-dir /user/importDir/my_users_limit_cloumn -m 1 --query "select id,username,nickname from users where username = 'imooc' "

执行得到报错信息如下:

2021-06-02 16:07:20,424 INFO tool.CodeGenTool: Beginning code generation

2021-06-02 16:07:20,438 ERROR tool.ImportTool: Import failed: java.io.IOException: Query [select id,username,nickname from users where username = 'imooc' ] must contain '$CONDITIONS' in WHERE clause.

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:332)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1872)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1671)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:501)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

显示where条件后需要加入$CONDITIONS.

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --target-dir /sqoop/conditiontest --delete-target-dir --query 'select id,username,nickname from users where username = "imooc" and $CONDITIONS' --m 1

2.从RDBMS导入到Hive中

sqoop 从RDBMS到hive的过程,是先导入到hdfs,后导入到hive

普通导入:数据存储在默认的default hive数据库中,表名即为mysql对应的表名

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users --hive-import -m 1

提示Output directory hdfs://bigdata02:9000/user/root/users already exists

2021-06-02 16:32:11,082 ERROR tool.ImportTool: Import failed: org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory hdfs://bigdata02:9000/user/root/users already exists

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:164)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:277)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:143)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1570)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1567)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1567)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1588)

at org.apache.sqoop.mapreduce.ImportJobBase.doSubmitJob(ImportJobBase.java:200)

导入过程:

- 导入users的数据到hdfs默认路径

- 自动仿照users表结构去创建一张hive表,创建在默认的default库

- 把临时目录中的数据导入到hive表中

hive> show tables;

OK

users

web_user_info_ht

Time taken: 0.277 seconds, Fetched: 2 row(s)

hive> select * from users;

OK

1908017YR51G1XWH imooc Qpf0SxOVUjUkWySXOZ16kw== imooc null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-14 23:44:30.0 2019-08-14 23:44:30.0

190815GTKCBSS7MW test Qpf0SxOVUjUkWySXOZ16kw== test null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-15 22:11:58.0 2019-08-15 22:11:58.0

190816HH9RDPD6Y8 abc Qpf0SxOVUjUkWySXOZ16kw== abc null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-16 23:14:12.0 2019-08-16 23:14:12.0

1908189H7TNWDTXP imooc123 Qpf0SxOVUjUkWySXOZ16kw== imooc12null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-18 13:25:30.0 2019-08-18 13:25:30.0

190818A4HC2BPDP0 test123 Qpf0SxOVUjUkWySXOZ16kw== test123 null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-18 14:14:28.0 2019-08-18 14:14:28.0

190818AWZ22872FW 1imooc Qpf0SxOVUjUkWySXOZ16kw== 1imooc null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-18 15:15:39.0 2019-08-18 15:15:39.0

Time taken: 3.13 seconds, Fetched: 6 row(s)

hive> create table users;

FAILED: SemanticException [Error 10043]: Either list of columns or a custom serializer should be specified

hive> show create table users;

OK

CREATE TABLE `users`(

`id` string,

`username` string,

`password` string,

`nickname` string,

`realname` string,

`face` string,

`mobile` string,

`email` string,

`sex` int,

`birthday` string,

`created_time` string,

`updated_time` string)

COMMENT 'Imported by sqoop on 2021/06/02 16:36:04'

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'field.delim'='',

'line.delim'='

',

'serialization.format'='')

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://bigdata02:9000/user/hive/warehouse/users'

TBLPROPERTIES (

'bucketing_version'='2',

'transient_lastDdlTime'='1622622991')

Time taken: 0.218 seconds, Fetched: 29 row(s)

查看数据

[root@bigdata02 ~]# hdfs dfs -cat /user/hive/warehouse/users/part-m-00000

- 指定行分隔符和列分隔符,指定数据库,指定覆盖导入,指定自动创建hive表,指定表名,指定删除中间结果数据目录

sqoop import -Dorg.apache.sqoop.splitter.allow_text_splitter=true --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users --fields-terminated-by " " --lines-terminated-by "

" --hive-import --hive-overwrite --create-hive-table --delete-target-dir --hive-database mydb_test --hive-table new_help_keyword

提示报错:

2021-06-02 16:55:19,345 ERROR tool.ImportTool: Import failed: java.io.IOException: Hive exited with status 88

at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:384)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

报错原因是hive-import 当使用这个导入命令,sqoop会自动创建hive表,但是不会自动创建不存在的库。

hive> create database mydb_test;

OK

Time taken: 0.965 seconds

创建对应的database之后,重新执行该语句。

hive> use mydb_test;

OK

Time taken: 0.1 seconds

hive> show tables;

OK

new_users

Time taken: 0.196 seconds, Fetched: 1 row(s)

hive> select * from new_users;

OK

1908017YR51G1XWH imooc Qpf0SxOVUjUkWySXOZ16kw== imooc null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-14 23:44:30.0 2019-08-14 23:44:30.0

190815GTKCBSS7MW test Qpf0SxOVUjUkWySXOZ16kw== test null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-15 22:11:58.0 2019-08-15 22:11:58.0

190816HH9RDPD6Y8 abc Qpf0SxOVUjUkWySXOZ16kw== abc null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-16 23:14:12.0 2019-08-16 23:14:12.0

1908189H7TNWDTXP imooc123 Qpf0SxOVUjUkWySXOZ16kw== imooc12null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-18 13:25:30.0 2019-08-18 13:25:30.0

190818A4HC2BPDP0 test123 Qpf0SxOVUjUkWySXOZ16kw== test123 null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-18 14:14:28.0 2019-08-18 14:14:28.0

190818AWZ22872FW 1imooc Qpf0SxOVUjUkWySXOZ16kw== 1imooc null http://122.152.205.72:88/group1/M00/00/05/CpoxxFw_8_qAIlFXAAAcIhVPdSg994.png null null 2 1900-01-01 2019-08-18 15:15:39.0 2019-08-18 15:15:39.0

Time taken: 0.756 seconds, Fetched: 6 row(s)

增量导入到hive库

sqoop import --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table users --target-dir /users/sqoop_import/append2Hive --incremental append --check-column id --last-value 3

提示报错:

2021-06-02 17:07:51,145 INFO tool.ImportTool: Maximal id query for free form incremental import: SELECT MAX(

id) FROMusers2021-06-02 17:07:51,148 ERROR tool.ImportTool: Import failed: Character column (id) can not be used to determine which rows to incrementally import.

提示非数值型的值不允许当做增量

3. 从sqoop将MySQL中数据导入到HBase中

sqoop从HDFS中导出数据到MySQL

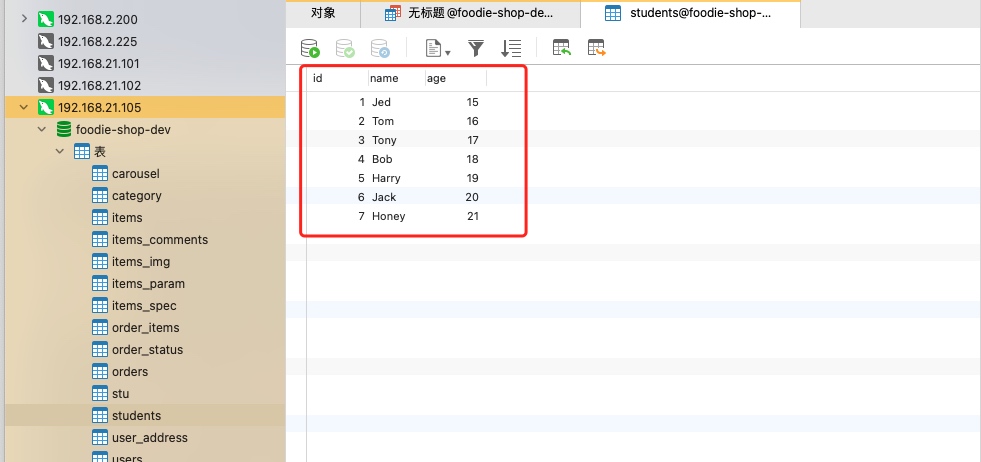

- 上传student.txt到HDFS

bash [root@bigdata02 ~]# hdfs dfs -cat /student.txt 1,Jed,15 2,Tom,16 3,Tony,17 4,Bob,18 5,Harry,19 6,Jack,20 7,Honey,21 - 执行sqoop命令

bash sqoop export --connect jdbc:mysql://192.168.21.105:3306/foodie-shop-dev --username root --password 123456 --table students --export-dir /student.txt --num-mappers 1 执行出现报错:Student表未创建

2021-06-02 17:21:51,895 ERROR manager.SqlManager: Error executing statement: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Table 'foodie-shop-dev.students' doesn't exist com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Table 'foodie-shop-dev.students' doesn't exist创建students表后,重新执行该命令,数据正常导入mysql。