(Mainly quoted from the official website of each tool.)

Summary:

1. TensorFlow™ is an open source software library.

2. Virtualenv is a tool to create isolated Python environments.

3. Docker:

An image is an executable package.

A container is a runtime instance of an image.

4. CUDA® is a parallel computing platform and programming model.

The CUDA Toolkit is used to develop GPU-accelerated applications.

5. cuDNN is a GPU-accelerated library of primitives for deep neural networks.

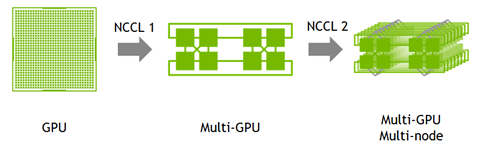

6. NCCL implements multi-GPU and multi-node collective communication primitives.

7. Bazel is an open-source build and test tool.

------------------------------------------------------------------------------------

TensorFlow™

TensorFlow™ is an open source software library for high performance numerical computation.

Its flexible architecture allows easy deployment of computation across a variety of platforms (CPUs, GPUs, TPUs), and from desktops to clusters of servers to mobile and edge devices.
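As a small illustration of the "variety of platforms" point, the sketch below asks TensorFlow which devices it can see on the current machine. It assumes TensorFlow 2.1 or later is installed; the helper name list_devices is made up for this note, and the function simply returns an empty list when TensorFlow is missing.

```python
def list_devices():
    """Return names of the devices TensorFlow can see, or [] if it is not installed."""
    try:
        import tensorflow as tf  # assumption: TensorFlow 2.1 or later
    except ImportError:
        return []
    # Each entry is a PhysicalDevice with a .name and a .device_type such as "CPU" or "GPU".
    return [device.name for device in tf.config.list_physical_devices()]


if __name__ == "__main__":
    names = list_devices()
    print(names if names else "TensorFlow is not installed or reported no devices")
```

On a machine without a GPU build, only CPU entries would be expected in the list.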

------------------------------

Virtualenv

Virtualenv is a tool to create isolated Python environments.

For example, some applications need Python 2.x while others need Python 3.x, so they cannot all share the system default Python.

One solution is to use virtualenv to create a separate, isolated Python environment for each application.

Usage:

- Create an environment (e.g. $ virtualenv venv)

- Use source to enter the environment (e.g. $ source venv/bin/activate)

- Inside the venv, packages installed with pip go into the venv and are kept separate from the system default Python. (e.g. (venv) $ pip install ***)

- Use deactivate to exit the current environment. (e.g. (venv) $ deactivate)
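The steps above can also be sketched from Python itself. Note the assumption: this uses the standard-library venv module, a close relative of virtualenv, rather than the virtualenv tool. The helper names create_env and in_virtual_env are invented for this note.

```python
import os
import sys
import tempfile
import venv


def create_env(parent_dir, name="venv"):
    """Create an isolated environment under parent_dir and return its path."""
    env_dir = os.path.join(parent_dir, name)
    # with_pip=False keeps the sketch fast; pass True to get pip inside the env.
    venv.create(env_dir, with_pip=False)
    return env_dir


def in_virtual_env():
    """True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        env_dir = create_env(tmp)
        print("created:", env_dir)
        print("has pyvenv.cfg:", os.path.isfile(os.path.join(env_dir, "pyvenv.cfg")))
    print("running inside a virtual environment:", in_virtual_env())
```

The pyvenv.cfg file marks the directory as an environment. Comparing sys.prefix with sys.base_prefix is a common way to check whether the current interpreter was started from an activated environment.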

------------------------------

Docker

An image is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and config files.

A container is a runtime instance of an image—what the image becomes in memory when actually executed. It runs completely isolated from the host environment by default, only accessing host files and ports if configured to do so.

Containers vs. virtual machines

Containers run apps natively on the host machine’s kernel. They have better performance characteristics than virtual machines that only get virtual access to host resources through a hypervisor. Containers can get native access, each one running in a discrete process, taking no more memory than any other executable.

Virtual machines run guest operating systems (note the OS layer in each box). This is resource intensive, and the resulting disk image and application state is an entanglement of OS settings, system-installed dependencies, OS security patches, and other easy-to-lose, hard-to-replicate ephemera.

Containers can share a single kernel, and the only information that needs to be in a container image is the executable and its package dependencies, which never need to be installed on the host system. These processes run like native processes, and you can manage them individually by running commands like docker ps, just like you would run ps on Linux to see active processes. Finally, because they contain all their dependencies, there is no configuration entanglement; a containerized app “runs anywhere.”

With containerization, developers don’t need to write application code into different VMs operating different app components to retrieve compute, storage and networking resources. A complete application component can be executed in its entirety within its isolated environment without affecting other app components or software. Conflicts within libraries or app components do not occur during execution and the application container can move between the cloud or data center instances efficiently.

(https://www.bmc.com/blogs/containers-vs-virtual-machines/)

------------------

Virtualenv vs. Container

Virtualenv isolates Python environments from one another: the Python version and the packages installed with pip.

A container isolates a whole application component, which runs in its entirety within its own isolated resources. Each container contains everything needed to run a piece of software, not only its Python packages.

-----------------------------------

CUDA (https://developer.nvidia.com/cuda-zone)

CUDA® is a parallel computing platform and programming model developed by NVIDIA for general computing on graphical processing units (GPUs). With CUDA, developers are able to dramatically speed up computing applications by harnessing the power of GPUs.

In GPU-accelerated applications, the sequential part of the workload runs on the CPU – which is optimized for single-threaded performance – while the compute intensive portion of the application runs on thousands of GPU cores in parallel. When using CUDA, developers program in popular languages such as C, C++, Fortran, Python and MATLAB and express parallelism through extensions in the form of a few basic keywords.

The CUDA Toolkit from NVIDIA provides everything you need to develop GPU-accelerated applications. The CUDA Toolkit includes GPU-accelerated libraries, a compiler, development tools and the CUDA runtime.

-------------------------------

cuDNN (https://developer.nvidia.com/cudnn)

The NVIDIA CUDA® Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks. cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers. cuDNN is part of the NVIDIA Deep Learning SDK.
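To make the routine names above concrete, here is a plain-Python reference of what forward convolution, an activation (ReLU), and max pooling compute on a tiny 2D input. This is not the cuDNN API and runs on the CPU. The function names are made up for this note, and the convolution is assumed to be the cross-correlation form with stride 1 and no padding.

```python
def conv2d(image, kernel):
    """Slide kernel over image (stride 1, no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation: replace negative values with zero."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Keep the largest value of each non-overlapping size x size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in cols
        ]
        for i in rows
    ]


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, 1]]
    features = relu(conv2d(image, kernel))
    print(features)              # [[6, 8], [12, 14]]
    print(max_pool2d(features))  # [[14]]
```

A library such as cuDNN provides GPU-tuned versions of these same kinds of operations, so frameworks do not have to write such loops themselves.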

Deep learning researchers and framework developers worldwide rely on cuDNN for high-performance GPU acceleration. It allows them to focus on training neural networks and developing software applications rather than spending time on low-level GPU performance tuning. cuDNN accelerates widely used deep learning frameworks, including Caffe, Caffe2, Chainer, Keras, MATLAB, MXNet, TensorFlow, and PyTorch.

---------------------

NCCL

The NVIDIA Collective Communications Library (NCCL) implements multi-GPU and multi-node collective communication primitives that are performance-optimized for NVIDIA GPUs. NCCL provides routines such as all-gather, all-reduce, broadcast, reduce, and reduce-scatter that are optimized to achieve high bandwidth over PCIe and the NVLink high-speed interconnect.

Developers of deep learning frameworks can rely on NCCL’s highly optimized, MPI-compatible, and topology-aware routines to take full advantage of all available GPUs within and across multiple nodes. Leading deep learning frameworks such as Caffe, Caffe2, Chainer, MXNet, TensorFlow, and PyTorch have integrated NCCL to accelerate deep learning training on multi-GPU systems.
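The collective routines named above are easier to remember with a toy model. The sketch below simulates in plain Python what each collective leaves in every rank's buffer. It is not the NCCL API and does no real communication: each "rank" is just a list, the function names are invented for this note, and sum is assumed as the reduction operation.

```python
def _elementwise_sum(buffers):
    """Sum the buffers of all ranks position by position."""
    return [sum(values) for values in zip(*buffers)]


def all_reduce(buffers):
    """Every rank ends up with the element-wise sum of all buffers."""
    total = _elementwise_sum(buffers)
    return [list(total) for _ in buffers]


def reduce(buffers, root=0):
    """Only the root rank receives the sum; other ranks keep their data."""
    total = _elementwise_sum(buffers)
    return [total if rank == root else list(buf)
            for rank, buf in enumerate(buffers)]


def broadcast(buffers, root=0):
    """Every rank ends up with a copy of the root rank's buffer."""
    return [list(buffers[root]) for _ in buffers]


def all_gather(buffers):
    """Every rank ends up with all buffers concatenated in rank order."""
    gathered = [value for buf in buffers for value in buf]
    return [list(gathered) for _ in buffers]


def reduce_scatter(buffers):
    """Sum across ranks, then rank i keeps only the i-th equal chunk."""
    total = _elementwise_sum(buffers)
    chunk = len(total) // len(buffers)
    return [total[i * chunk:(i + 1) * chunk] for i in range(len(buffers))]


if __name__ == "__main__":
    ranks = [[1, 2], [3, 4]]        # two ranks, two values each
    print(all_reduce(ranks))        # [[4, 6], [4, 6]]
    print(reduce(ranks, root=0))    # [[4, 6], [3, 4]]
    print(broadcast(ranks, root=1)) # [[3, 4], [3, 4]]
    print(all_gather(ranks))        # [[1, 2, 3, 4], [1, 2, 3, 4]]
    print(reduce_scatter(ranks))    # [[4], [6]]
```

In data-parallel training, all-reduce is the typical case: each GPU holds its own gradients, and after the call every GPU holds the summed gradients.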

---------------------

Bazel

Bazel is an open-source build and test tool similar to Make, Maven, and Gradle. It uses a human-readable, high-level build language. Bazel supports projects in multiple languages and builds outputs for multiple platforms. Bazel supports large codebases across multiple repositories, and large numbers of users.

(Bazel can be used to build TensorFlow from source.)