Inference code: multi-person-openpose_rknn-cam_coco.py

```python
import sys
import time

import cv2
from rknn.api import RKNN

from processing_openpose import extract_parts, draw

output = 'result_rknn.png'

rknn = RKNN()
rknn.load_rknn('./coco_quantization_368_654.rknn')

# RK1808 AI compute stick (AICS) attached to the host
ret = rknn.init_runtime(target='rk1808', target_sub_class='AICS')
if ret != 0:
    print('Init runtime environment failed')
    sys.exit(ret)
print('done')

cap = cv2.VideoCapture(0)

while cv2.waitKey(1) < 0:
    hasFrame, frame = cap.read()
    if not hasFrame:
        break

    tic = time.time()

    # The model was converted for a 368x654 input and extract_parts scales a
    # 640x360 image by 368/360, so camera frames are resized to 640x360.
    # To test on a still image instead, use a raw string for the Windows path:
    # img_image = cv2.imread(r'E:\usb_test\example\yolov3\openpose_keras_18key\640_360.jpg')
    img_image = cv2.resize(frame, (640, 360))

    body_parts, all_peaks, subset, candidate = extract_parts(img_image, rknn)

    t4 = time.time()
    canvas = draw(img_image, all_peaks, subset, candidate)
    print("t4", time.time() - t4)

    toc = time.time()
    print('processing time is %.5f' % (toc - tic))

    # a window is needed for cv2.waitKey to receive key presses
    cv2.imshow('openpose', canvas)
    cv2.imwrite(output, canvas)

cap.release()
cv2.destroyAllWindows()
rknn.release()
```
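The hard-coded `scale = 1.0222222222222221` in `extract_parts` and the reshape to `1x57x46x82` only agree with the model name `coco_quantization_368_654` for 640x360 input images. The sketch below, a hypothetical helper `openpose_shapes`, reproduces that arithmetic so the numbers can be recomputed for another frame size. It assumes the network's output grid is the input size divided by its stride of 8, rounded up, which is what the 46x82 grid implies.

```python
import math


def openpose_shapes(frame_w, frame_h, net_h=368, stride=8, n_channels=57):
    """Hypothetical helper: model input and output shapes for one frame.

    Assumes the output grid is ceil(input / stride), as the 46x82 grid suggests.
    n_channels = 19 heatmaps + 38 PAF channels.
    """
    scale = net_h / frame_h                  # 368 / 360 = 1.0222...
    in_w = int(round(frame_w * scale))       # 640 * 1.0222 = 654.2 -> 654
    in_h = int(round(frame_h * scale))       # 360 * 1.0222 = 368
    out_h = math.ceil(in_h / stride)         # 368 / 8 = 46
    out_w = math.ceil(in_w / stride)         # 654 / 8 = 81.75 -> 82
    return scale, (in_h, in_w), (1, n_channels, out_h, out_w)


scale, in_shape, out_shape = openpose_shapes(640, 360)
print(round(scale, 4), in_shape, out_shape)  # 1.0222 (368, 654) (1, 57, 46, 82)
```

For a 640x480 frame the same arithmetic gives a 368x491 input, which would not match a model converted for 368x654.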

processing_openpose.py

```python
import math
import time

import cv2
import numpy as np
from scipy.ndimage import gaussian_filter  # scipy.ndimage.filters is deprecated

import util

COCO_BODY_PARTS = ['nose', 'neck',
                   'right_shoulder', 'right_elbow', 'right_wrist',
                   'left_shoulder', 'left_elbow', 'left_wrist',
                   'right_hip', 'right_knee', 'right_ankle',
                   'left_hip', 'left_knee', 'left_ankle',
                   'right_eye', 'left_eye', 'right_ear', 'left_ear', 'background']


def extract_parts(input_image, rknn):
    start_time = time.time()

    # scale = 1.5333333333333334  # 552x984 model
    scale = 1.0222222222222221  # 368x654 model (368 / 360)

    image_to_test = cv2.resize(input_image, (0, 0), fx=scale, fy=scale, interpolation=cv2.INTER_CUBIC)
    frame_input = np.transpose(image_to_test, [2, 0, 1])  # HWC -> CHW
    image_to_test_padded, pad = util.pad_right_down_corner(image_to_test, 8, 128)

    [output] = rknn.inference(inputs=[frame_input], data_format="nchw")
    print(output.shape)

    # Reshape the flat RKNN output into a 1x57x46x82 tensor:
    # 19 heatmaps + 38 PAF channels on a 46x82 grid (network stride 8)
    output_blobs = output.reshape(1, 57, 46, 82)
    # scipy.io.savemat("stat1.mat", {'A': output_blobs})  # optional debug dump

    output_blobs = output_blobs.transpose([0, 2, 3, 1])  # NCHW -> NHWC
    heatmap = output_blobs[0, :, :, 0:19]  # body part heatmaps, one per part (19)
    paf = output_blobs[0, :, :, 19:]       # part affinity fields (38)
    print("inference time is ", time.time() - start_time)

    # Upsample by the stride, crop the padding, resize back to the input size
    heatmap = cv2.resize(heatmap, (0, 0), fx=8, fy=8, interpolation=cv2.INTER_CUBIC)
    heatmap = heatmap[:image_to_test_padded.shape[0] - pad[2], :image_to_test_padded.shape[1] - pad[3], :]
    heatmap = cv2.resize(heatmap, (input_image.shape[1], input_image.shape[0]), interpolation=cv2.INTER_CUBIC)

    paf = cv2.resize(paf, (0, 0), fx=8, fy=8, interpolation=cv2.INTER_CUBIC)
    paf = paf[:image_to_test_padded.shape[0] - pad[2], :image_to_test_padded.shape[1] - pad[3], :]
    paf = cv2.resize(paf, (input_image.shape[1], input_image.shape[0]), interpolation=cv2.INTER_CUBIC)

    heatmap_avg = heatmap
    paf_avg = paf

    all_peaks = []
    peak_counter = 0

    t0 = time.time()
    for part in range(18):
        hmap_ori = heatmap_avg[:, :, part]
        hmap = gaussian_filter(hmap_ori, sigma=3)

        # Find the pixels whose value is >= all four neighbours
        hmap_left = np.zeros(hmap.shape)
        hmap_left[1:, :] = hmap[:-1, :]
        hmap_right = np.zeros(hmap.shape)
        hmap_right[:-1, :] = hmap[1:, :]
        hmap_up = np.zeros(hmap.shape)
        hmap_up[:, 1:] = hmap[:, :-1]
        hmap_down = np.zeros(hmap.shape)
        hmap_down[:, :-1] = hmap[:, 1:]

        # reduce needed because there are > 2 arguments
        peaks_binary = np.logical_and.reduce(
            (hmap >= hmap_left, hmap >= hmap_right, hmap >= hmap_up, hmap >= hmap_down, hmap > 0.1))
        peaks = list(zip(np.nonzero(peaks_binary)[1], np.nonzero(peaks_binary)[0]))  # note reverse: (x, y)
        peaks_with_score = [x + (hmap_ori[x[1], x[0]],) for x in peaks]  # add a third element to tuple with score
        idx = range(peak_counter, peak_counter + len(peaks))
        peaks_with_score_and_id = [peaks_with_score[i] + (idx[i],) for i in range(len(idx))]

        all_peaks.append(peaks_with_score_and_id)
        peak_counter += len(peaks)
    print("t0", time.time() - t0)

    connection_all = []
    special_k = []
    mid_num = 10

    t1 = time.time()
    for k in range(len(util.hmapIdx)):
        # the two PAF channels (x, y) of limb k
        score_mid = paf_avg[:, :, [x - 19 for x in util.hmapIdx[k]]]
        cand_a = all_peaks[util.limbSeq[k][0] - 1]
        cand_b = all_peaks[util.limbSeq[k][1] - 1]
        n_a = len(cand_a)
        n_b = len(cand_b)

        if n_a != 0 and n_b != 0:
            connection_candidate = []
            for i in range(n_a):
                for j in range(n_b):
                    vec = np.subtract(cand_b[j][:2], cand_a[i][:2])
                    norm = math.sqrt(vec[0] * vec[0] + vec[1] * vec[1])
                    # failure case when 2 body parts overlap
                    if norm == 0:
                        continue
                    vec = np.divide(vec, norm)

                    # sample mid_num points along the candidate limb
                    startend = list(zip(np.linspace(cand_a[i][0], cand_b[j][0], num=mid_num),
                                        np.linspace(cand_a[i][1], cand_b[j][1], num=mid_num)))
                    vec_x = np.array([score_mid[int(round(startend[I][1])), int(round(startend[I][0])), 0]
                                      for I in range(len(startend))])
                    vec_y = np.array([score_mid[int(round(startend[I][1])), int(round(startend[I][0])), 1]
                                      for I in range(len(startend))])

                    # PAF projected onto the limb direction at each sample
                    score_midpts = np.multiply(vec_x, vec[0]) + np.multiply(vec_y, vec[1])
                    score_with_dist_prior = sum(score_midpts) / len(score_midpts) + min(
                        0.5 * input_image.shape[0] / norm - 1, 0)
                    criterion1 = len(np.nonzero(score_midpts > 0.05)[0]) > 0.8 * len(score_midpts)
                    criterion2 = score_with_dist_prior > 0
                    if criterion1 and criterion2:
                        connection_candidate.append([i, j, score_with_dist_prior,
                                                     score_with_dist_prior + cand_a[i][2] + cand_b[j][2]])

            # greedily keep the best-scoring connections, each part used once
            connection_candidate = sorted(connection_candidate, key=lambda x: x[2], reverse=True)
            connection = np.zeros((0, 5))
            for c in range(len(connection_candidate)):
                i, j, s = connection_candidate[c][0:3]
                if i not in connection[:, 3] and j not in connection[:, 4]:
                    connection = np.vstack([connection, [cand_a[i][3], cand_b[j][3], s, i, j]])
                    if len(connection) >= min(n_a, n_b):
                        break
            connection_all.append(connection)
        else:
            special_k.append(k)
            connection_all.append([])
    print("t1", time.time() - t1)

    # last number in each row is the total parts number of that person
    # the second last number in each row is the score of the overall configuration
    subset = np.empty((0, 20))
    candidate = np.array([item for sublist in all_peaks for item in sublist])

    t2 = time.time()
    for k in range(len(util.hmapIdx)):
        if k not in special_k:
            part_as = connection_all[k][:, 0]
            part_bs = connection_all[k][:, 1]
            index_a, index_b = np.array(util.limbSeq[k]) - 1

            for i in range(len(connection_all[k])):
                found = 0
                subset_idx = [-1, -1]
                for j in range(len(subset)):
                    if subset[j][index_a] == part_as[i] or subset[j][index_b] == part_bs[i]:
                        subset_idx[found] = j
                        found += 1

                if found == 1:
                    j = subset_idx[0]
                    if subset[j][index_b] != part_bs[i]:
                        subset[j][index_b] = part_bs[i]
                        subset[j][-1] += 1
                        subset[j][-2] += candidate[part_bs[i].astype(int), 2] + connection_all[k][i][2]
                elif found == 2:  # if found 2 and disjoint, merge them
                    j1, j2 = subset_idx
                    membership = ((subset[j1] >= 0).astype(int) + (subset[j2] >= 0).astype(int))[:-2]
                    if len(np.nonzero(membership == 2)[0]) == 0:  # merge
                        subset[j1][:-2] += (subset[j2][:-2] + 1)
                        subset[j1][-2:] += subset[j2][-2:]
                        subset[j1][-2] += connection_all[k][i][2]
                        subset = np.delete(subset, j2, 0)
                    else:  # same as found == 1
                        subset[j1][index_b] = part_bs[i]
                        subset[j1][-1] += 1
                        subset[j1][-2] += candidate[part_bs[i].astype(int), 2] + connection_all[k][i][2]
                # if no partA is found in the subset, create a new subset
                elif not found and k < 17:
                    row = -1 * np.ones(20)
                    row[index_a] = part_as[i]
                    row[index_b] = part_bs[i]
                    row[-1] = 2
                    row[-2] = sum(candidate[connection_all[k][i, :2].astype(int), 2]) + connection_all[k][i][2]
                    subset = np.vstack([subset, row])
    print("t2", time.time() - t2)

    t3 = time.time()
    # delete rows of subset that have too few parts or a low average score
    delete_idx = []
    for i in range(len(subset)):
        if subset[i][-1] < 4 or subset[i][-2] / subset[i][-1] < 0.4:
            delete_idx.append(i)
    subset = np.delete(subset, delete_idx, axis=0)

    points = []
    for peak in all_peaks:
        try:
            points.append((peak[0][:2]))
        except IndexError:
            points.append((None, None))
    body_parts = dict(zip(COCO_BODY_PARTS, points))

    print("t3", time.time() - t3)
    return body_parts, all_peaks, subset, candidate


def draw(input_image, all_peaks, subset, candidate, resize_fac=1):
    canvas = input_image.copy()

    # keypoints (cv2.circle needs plain Python ints)
    for i in range(18):
        for j in range(len(all_peaks[i])):
            a = int(all_peaks[i][j][0] * resize_fac)
            b = int(all_peaks[i][j][1] * resize_fac)
            cv2.circle(canvas, (a, b), 2, util.colors[i], thickness=-1)

    # limbs; the last two entries of limbSeq (shoulder-ear) are not drawn
    stickwidth = 1
    for i in range(17):
        for s in subset:
            index = s[np.array(util.limbSeq[i]) - 1]
            if -1 in index:
                continue
            cur_canvas = canvas.copy()
            y = candidate[index.astype(int), 0]
            x = candidate[index.astype(int), 1]
            m_x = np.mean(x)
            m_y = np.mean(y)
            length = ((x[0] - x[1]) ** 2 + (y[0] - y[1]) ** 2) ** 0.5
            angle = math.degrees(math.atan2(x[0] - x[1], y[0] - y[1]))
            polygon = cv2.ellipse2Poly((int(m_y * resize_fac), int(m_x * resize_fac)),
                                       (int(length * resize_fac / 2), stickwidth), int(angle), 0, 360, 1)
            cv2.fillConvexPoly(cur_canvas, polygon, util.colors[i])
            canvas = cv2.addWeighted(canvas, 0.4, cur_canvas, 0.6, 0)
    return canvas
```
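The per-part loop in `extract_parts` finds keypoints by comparing each smoothed pixel with four shifted copies of the heatmap. The same test can be written with `scipy.ndimage.maximum_filter` and a cross-shaped footprint: a pixel is a peak exactly when it equals the maximum of itself and its four neighbours. This is a sketch, not the author's code. `find_peaks` is a hypothetical name, and `mode='constant', cval=0.0` is chosen to mirror the zero-filled borders of the shifted arrays.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, maximum_filter

# Centre pixel plus its 4-connected neighbours, matching the
# left/right/up/down comparisons in extract_parts.
CROSS = np.array([[0, 1, 0],
                  [1, 1, 1],
                  [0, 1, 0]], dtype=bool)


def find_peaks(hmap_ori, sigma=3, thresh=0.1):
    """Return (x, y, score) for every local maximum of one heatmap channel."""
    hmap = gaussian_filter(hmap_ori, sigma=sigma)
    # maximum over the cross; zeros outside the image, like the shifted copies
    local_max = maximum_filter(hmap, footprint=CROSS, mode='constant', cval=0.0)
    peaks = (hmap == local_max) & (hmap > thresh)
    ys, xs = np.nonzero(peaks)  # row-major order, same as the original loop
    # the score is read from the unsmoothed heatmap, as in extract_parts
    return [(int(x), int(y), float(hmap_ori[y, x])) for x, y in zip(xs, ys)]


# Usage: two synthetic blobs centred at (x=30, y=20) and (x=60, y=40)
heat = np.zeros((60, 80))
heat[20, 30] = heat[40, 60] = 1.0
heat = gaussian_filter(heat, sigma=2) * 50
print([(x, y) for x, y, _ in find_peaks(heat)])  # [(30, 20), (60, 40)]
```

The comparison runs inside SciPy's compiled filter instead of building four temporary arrays per part; its effect on the timings above has not been measured.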

util.py

```python
from io import BytesIO

import numpy as np
import PIL.Image

# find connection in the specified sequence (1-based part indices),
# center 29 is in the position 15
limbSeq = [[2, 3], [2, 6], [3, 4], [4, 5], [6, 7], [7, 8], [2, 9], [9, 10],
           [10, 11], [2, 12], [12, 13], [13, 14], [2, 1], [1, 15], [15, 17],
           [1, 16], [16, 18], [3, 17], [6, 18]]

# the middle joints heatmap correspondence
# (channel indices in the 57-channel output; PAF channels start at 19)
hmapIdx = [[31, 32], [39, 40], [33, 34], [35, 36], [41, 42], [43, 44], [19, 20], [21, 22],
           [23, 24], [25, 26], [27, 28], [29, 30], [47, 48], [49, 50], [53, 54], [51, 52],
           [55, 56], [37, 38], [45, 46]]

# limbSeq = [[1,2], [1,5], [2,3], [3,4], [5,6], [6,7],
#            [1,8], [8,9], [9,10], [1,11], [11,12], [12,13],
#            [1,0], [0,14], [14,16], [0,15], [15,17],
#            [2,17], [5,16] ]

# visualize
colors = [[255, 0, 0], [255, 85, 0], [255, 170, 0], [255, 255, 0], [170, 255, 0], [85, 255, 0],
          [0, 255, 0],
          [0, 255, 85], [0, 255, 170], [0, 255, 255], [0, 170, 255], [0, 85, 255], [0, 0, 255],
          [85, 0, 255],
          [170, 0, 255], [255, 0, 255], [255, 0, 170], [255, 0, 85]]


def show_bgr_image(a, fmt='jpeg'):
    # IPython is only needed by these notebook display helpers
    from IPython.display import Image, display
    a = np.uint8(np.clip(a, 0, 255))
    a[:, :, [0, 2]] = a[:, :, [2, 0]]  # for B,G,R order
    f = BytesIO()  # PIL writes binary image data
    PIL.Image.fromarray(a).save(f, fmt)
    display(Image(data=f.getvalue()))


def showmap(a, fmt='png'):
    from IPython.display import Image, display
    a = np.uint8(np.clip(a, 0, 255))
    f = BytesIO()
    PIL.Image.fromarray(a).save(f, fmt)
    display(Image(data=f.getvalue()))


# def checkparam(param):
#     octave = param['octave']
#     starting_range = param['starting_range']
#     ending_range = param['ending_range']
#     assert starting_range <= ending_range, 'starting ratio should <= ending ratio'
#     assert octave >= 1, 'octave should >= 1'
#     return starting_range, ending_range, octave


def get_jet_color(v, vmin, vmax):
    c = np.zeros(3)
    if v < vmin:
        v = vmin
    if v > vmax:
        v = vmax
    dv = vmax - vmin
    # the formulas below assume vmin = 0 and vmax = 1 (as used by colorize)
    if v < (vmin + 0.125 * dv):
        c[0] = 256 * (0.5 + (v * 4))  # B: 0.5 ~ 1
    elif v < (vmin + 0.375 * dv):
        c[0] = 255
        c[1] = 256 * (v - 0.125) * 4  # G: 0 ~ 1
    elif v < (vmin + 0.625 * dv):
        c[0] = 256 * (-4 * v + 2.5)  # B: 1 ~ 0
        c[1] = 255
        c[2] = 256 * (4 * (v - 0.375))  # R: 0 ~ 1
    elif v < (vmin + 0.875 * dv):
        c[1] = 256 * (-4 * v + 3.5)  # G: 1 ~ 0
        c[2] = 255
    else:
        c[2] = 256 * (-4 * v + 4.5)  # R: 1 ~ 0.5
    return c


def colorize(gray_img):
    out = np.zeros(gray_img.shape + (3,))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            out[y, x, :] = get_jet_color(gray_img[y, x], 0, 1)
    return out


def pad_right_down_corner(img, stride, pad_value):
    h = img.shape[0]
    w = img.shape[1]

    pad = 4 * [None]
    pad[0] = 0  # up
    pad[1] = 0  # left
    pad[2] = 0 if (h % stride == 0) else stride - (h % stride)  # down
    pad[3] = 0 if (w % stride == 0) else stride - (w % stride)  # right

    img_padded = img
    pad_up = np.tile(img_padded[0:1, :, :] * 0 + pad_value, (pad[0], 1, 1))
    img_padded = np.concatenate((pad_up, img_padded), axis=0)
    pad_left = np.tile(img_padded[:, 0:1, :] * 0 + pad_value, (1, pad[1], 1))
    img_padded = np.concatenate((pad_left, img_padded), axis=1)
    pad_down = np.tile(img_padded[-2:-1, :, :] * 0 + pad_value, (pad[2], 1, 1))
    img_padded = np.concatenate((img_padded, pad_down), axis=0)
    pad_right = np.tile(img_padded[:, -2:-1, :] * 0 + pad_value, (1, pad[3], 1))
    img_padded = np.concatenate((img_padded, pad_right), axis=1)

    return img_padded, pad
```
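`pad_right_down_corner` builds its padding by tiling and concatenating slices of the image. Assuming a 3-channel (H, W, C) image like the one it receives from `extract_parts`, `np.pad` expresses the same bottom and right padding in one call. This is a sketch with a hypothetical name, `pad_right_down_corner_np`, not a replacement that has been tested in the full pipeline.

```python
import numpy as np


def pad_right_down_corner_np(img, stride, pad_value):
    """Hypothetical np.pad variant of util.pad_right_down_corner.

    Pads only the bottom and right edges so height and width become
    multiples of `stride`; returns the image and [up, left, down, right].
    """
    h, w = img.shape[:2]
    # (-n) % stride is 0 when n is already a multiple, else stride - n % stride
    pad = [0, 0, (-h) % stride, (-w) % stride]
    img_padded = np.pad(img, ((0, pad[2]), (0, pad[3]), (0, 0)),
                        mode='constant', constant_values=pad_value)
    return img_padded, pad


# Usage: the 368x654 image produced inside extract_parts
img = np.zeros((368, 654, 3), dtype=np.uint8)
img_padded, pad = pad_right_down_corner_np(img, 8, 128)
print(img_padded.shape, pad)  # (368, 656, 3) [0, 0, 0, 2]
```

The two padded columns are what `extract_parts` later crops off with `pad[3]` after upsampling the heatmaps and PAFs.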

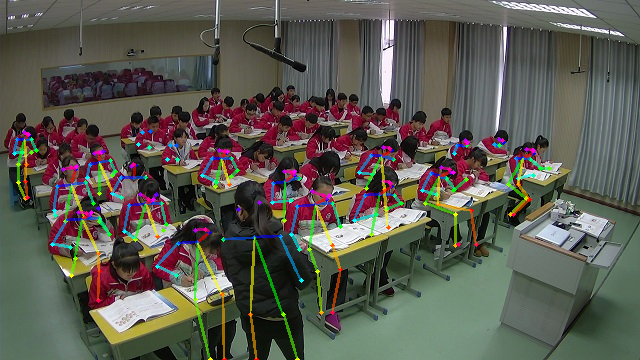

Test results:

Detection speed optimization:

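1. RKNN model inference takes about 370 ms, but post-processing the inference output takes 1100 ms. The likely cause is inefficient Python code.

2. Solution: I post-processed the inference output following the open-source C++ code at https://github.com/dlunion/EasyOpenPose. This cut the post-processing time to about 60 ms, nearly 20x faster. C++ really is efficient.

3. I am determined to learn C++ properly.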
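Item 1 attributes most of the 1100 ms to Python post-processing. The hot spot is the nested `for i` / `for j` loop in `extract_parts` that samples the PAF along every candidate limb. Besides porting to C++ as in item 2, that loop can be vectorised with NumPy so all candidate pairs of one limb are scored at once. The sketch below is an illustration under stated assumptions, not what the author ran: `score_limb` is a hypothetical name, `paf_x`/`paf_y` correspond to `score_mid[:, :, 0]` and `score_mid[:, :, 1]`, and its speed on the RK1808 host has not been measured.

```python
import numpy as np


def score_limb(cand_a, cand_b, paf_x, paf_y, img_h, mid_num=10):
    """Hypothetical vectorised version of the i/j loop in extract_parts.

    cand_a, cand_b: (n, >=2) arrays whose first two columns are (x, y).
    paf_x, paf_y:   the two PAF channels of this limb, shape (H, W).
    Returns [(i, j, score), ...] chosen greedily, best score first.
    """
    a = np.asarray(cand_a, dtype=float)[:, None, :2]   # (n_a, 1, 2)
    b = np.asarray(cand_b, dtype=float)[None, :, :2]   # (1, n_b, 2)
    vec = b - a                                         # (n_a, n_b, 2)
    norm = np.linalg.norm(vec, axis=-1)                 # (n_a, n_b)
    valid = norm > 0                                    # overlapping parts are skipped
    safe = np.where(valid, norm, 1.0)
    unit = vec / safe[..., None]

    # mid_num evenly spaced samples on every segment: (n_a, n_b, mid_num, 2)
    t = np.linspace(0.0, 1.0, mid_num)[None, None, :, None]
    pts = a[:, :, None, :] + t * vec[:, :, None, :]
    xs = np.rint(pts[..., 0]).astype(int)
    ys = np.rint(pts[..., 1]).astype(int)

    # PAF projected onto the limb direction at each sample
    proj = paf_x[ys, xs] * unit[..., 0, None] + paf_y[ys, xs] * unit[..., 1, None]

    # same two criteria as the loop: mean score plus a length prior > 0,
    # and more than 80% of the samples above 0.05
    score = proj.mean(axis=-1) + np.minimum(0.5 * img_h / safe - 1, 0)
    ok = valid & ((proj > 0.05).sum(axis=-1) > 0.8 * mid_num) & (score > 0)

    # greedy matching: each candidate of A and of B is used at most once
    ii, jj = np.nonzero(ok)
    order = np.argsort(-score[ii, jj], kind='stable')
    used_a, used_b, chosen = set(), set(), []
    for k in order:
        i, j = int(ii[k]), int(jj[k])
        if i in used_a or j in used_b:
            continue
        used_a.add(i)
        used_b.add(j)
        chosen.append((i, j, float(score[i, j])))
        if len(chosen) >= min(a.shape[0], b.shape[1]):
            break
    return chosen


# Usage: a horizontal PAF along row 20; A=(10, 20) should link to B0=(40, 20)
paf_x = np.zeros((60, 60))
paf_x[20, :] = 1.0
paf_y = np.zeros((60, 60))
cand_a = np.array([[10, 20, 0.9]])
cand_b = np.array([[40, 20, 0.8], [40, 50, 0.7]])
print(score_limb(cand_a, cand_b, paf_x, paf_y, img_h=60))  # [(0, 0, 1.0)]
```

The greedy matching still loops in Python, but only over pairs that already passed both criteria rather than over all n_a x n_b pairs.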

Categories: RKNN, notes on fun open-source projects worth playing with