Speech 服务是认知服务的一种,提供了语音转文本,文本转语音, 语音翻译等,今天我们实战的是语音转文本(Speech To Text)。

STT支持两种访问方式,1.是SDK,2.是REST API。

其中:

SDK方式支持 识别麦克风的语音流 和 语音文件;

REST API方式仅支持语音文件;

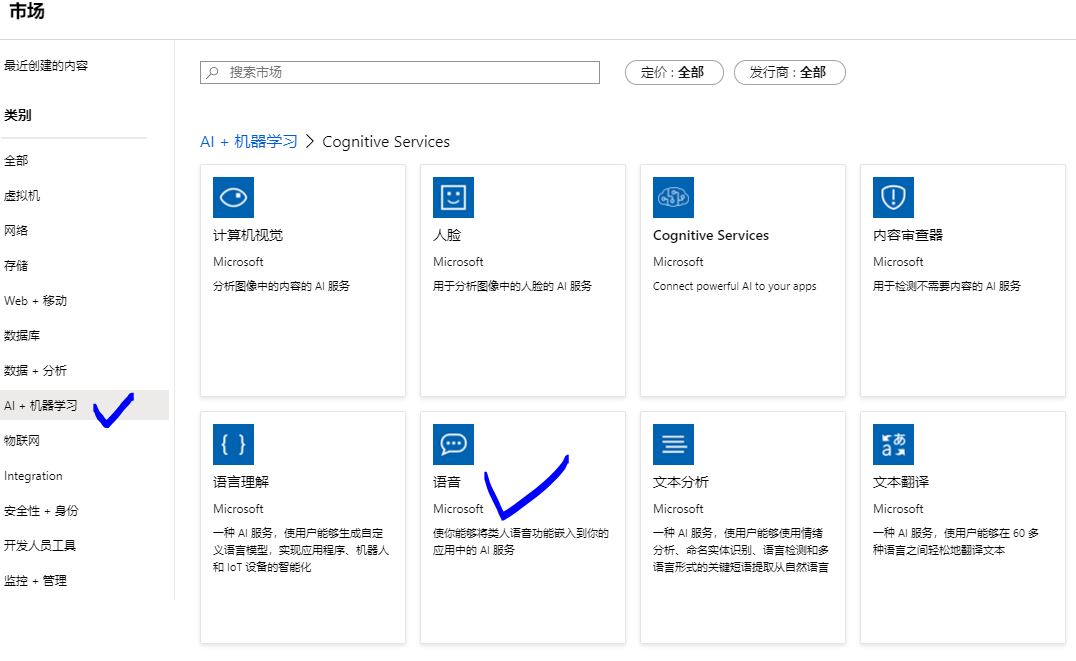

准备工作:创建 认知服务之Speech服务:

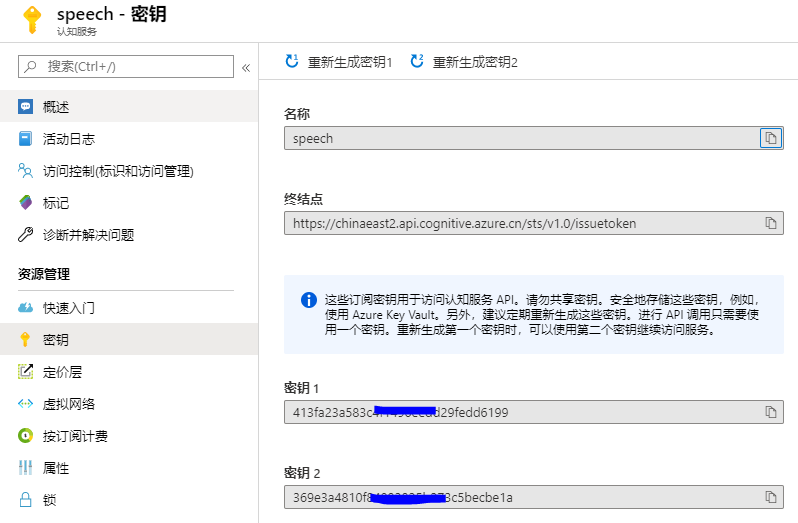

创建完成后,两个重要的参数可以在页面查看:

一. REST API方式将语音文件转换成文本:

Azure global的 Speech API 终结点请参考:

https://docs.microsoft.com/zh-cn/azure/cognitive-services/speech-service/rest-speech-to-text#regions-and-endpoints

Azure 中国区 的 Speech API 终结点:

截至到2020.2月,仅中国东部2区域已开通Speech服务,服务终结点为:

https://chinaeast2.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1

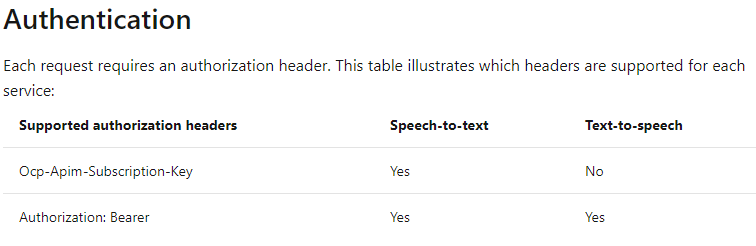

对于Speech To Text来说,有两种身份验证方式:

其中Authorization Token有效期为10分钟。

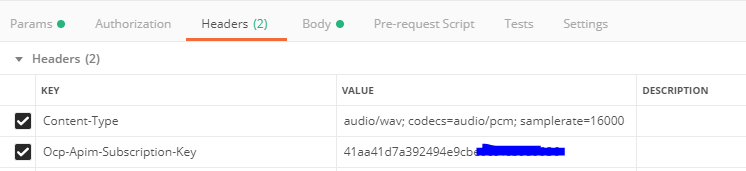

为了简便,本文使用了Ocp-Apim-Subscription-Key的方式。

注意:如果要实现文本转语音,按照上表,则必须使用 Authorization Token形式进行身份验证。

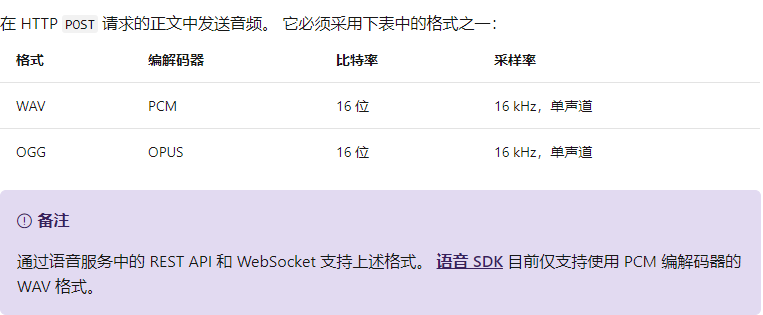

构建请求的其他注意事项:

- 文件格式:

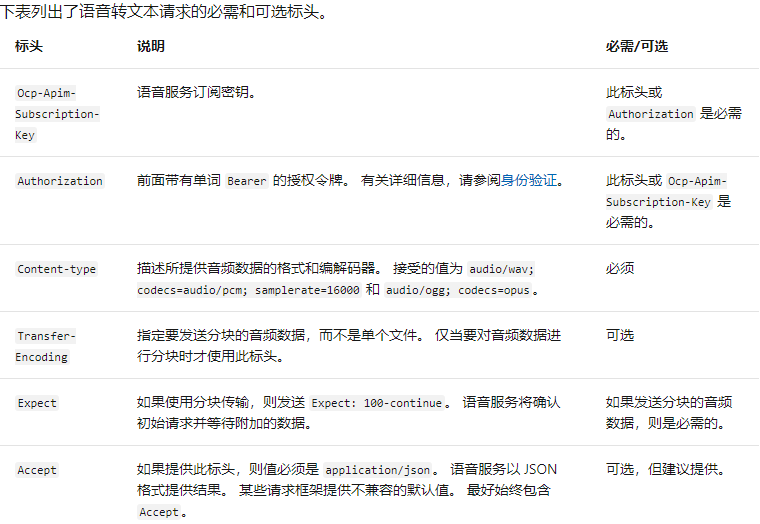

- 请求头:

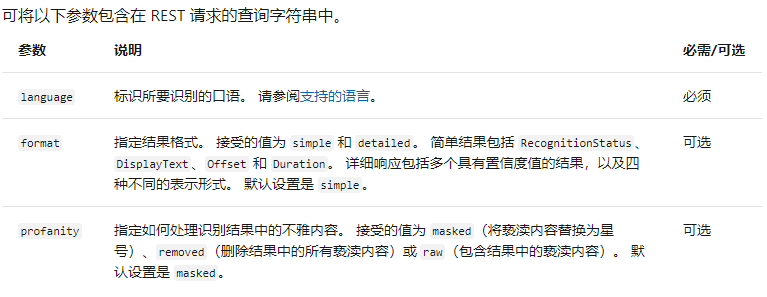

需要注意的是,Key或者Authorization是二选一的关系。 - 请求参数:

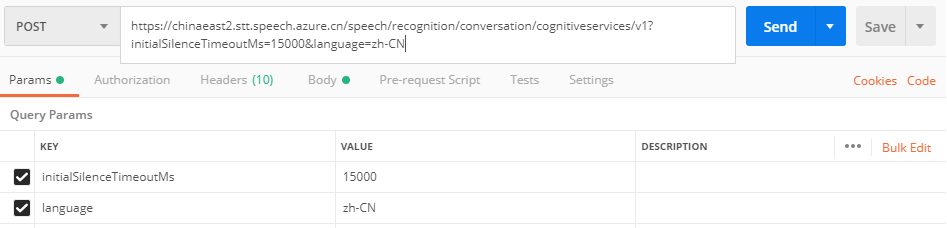

在Postman中的示例如下:

如果要在REST API中使用 Authorization Token,则需要先获得Token:

Global 获取Token的终结点:

https://docs.microsoft.com/zh-cn/azure/cognitive-services/speech-service/rest-speech-to-text#authentication

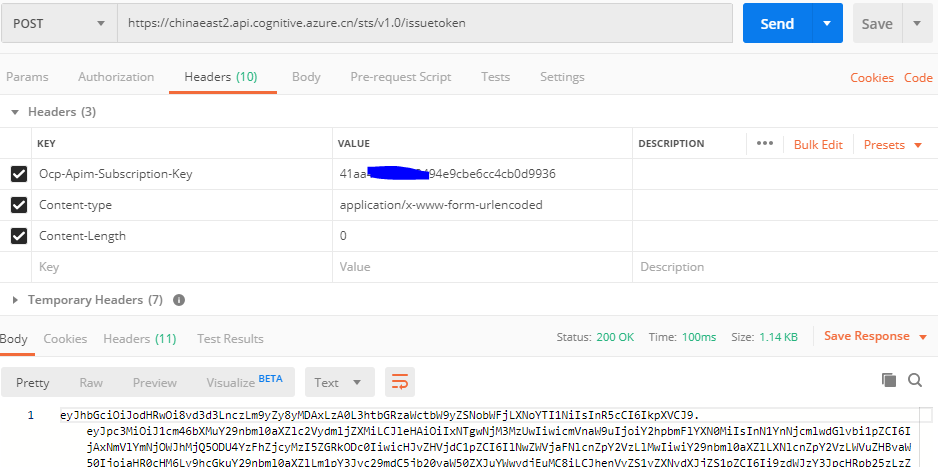

中国区获取Token的终结点:

截至2020.02,只有中国东部2有Speech服务,其Token终结点为:

https://chinaeast2.api.cognitive.azure.cn/sts/v1.0/issuetoken

Postman获取Token 参考如下:

二. SDK方式将语音文件转换成文本(Python示例):

在官网可以看到类似的代码,但需要注意的是,该代码仅在Azure Global的Speech服务中正常工作,针对中国区,需要做特定的修改(见下文)。

import azure.cognitiveservices.speech as speechsdk

# Creates an instance of a speech config with specified subscription key and service region.

# Replace with your own subscription key and service region (e.g., "chinaeast2").

speech_key, service_region = "YourSubscriptionKey", "YourServiceRegion"

speech_config = speechsdk.SpeechConfig(subscription=speech_key, region=service_region)

# Creates an audio configuration that points to an audio file.

# Replace with your own audio filename.

audio_filename = "whatstheweatherlike.wav"

audio_input = speechsdk.AudioConfig(filename=audio_filename)

# Creates a recognizer with the given settings

speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_input)

print("Recognizing first result...")

# Starts speech recognition, and returns after a single utterance is recognized. The end of a

# single utterance is determined by listening for silence at the end or until a maximum of 15

# seconds of audio is processed. The task returns the recognition text as result.

# Note: Since recognize_once() returns only a single utterance, it is suitable only for single

# shot recognition like command or query.

# For long-running multi-utterance recognition, use start_continuous_recognition() instead.

result = speech_recognizer.recognize_once()

# Checks result.

if result.reason == speechsdk.ResultReason.RecognizedSpeech:

print("Recognized: {}".format(result.text))

elif result.reason == speechsdk.ResultReason.NoMatch:

print("No speech could be recognized: {}".format(result.no_match_details))

elif result.reason == speechsdk.ResultReason.Canceled:

cancellation_details = result.cancellation_details

print("Speech Recognition canceled: {}".format(cancellation_details.reason))

if cancellation_details.reason == speechsdk.CancellationReason.Error:

print("Error details: {}".format(cancellation_details.error_details))

代码提供页面:

https://docs.azure.cn/zh-cn/cognitive-services/speech-service/quickstarts/speech-to-text-from-file?tabs=linux&pivots=programming-language-python#create-a-python-application-that-uses-the-speech-sdk

针对中国区,需要使用自定义终结点的方式,才能正常使用SDK:

speech_key, service_region = "Your Key", "chinaeast2" template = "wss://{}.stt.speech.azure.cn/speech/recognition" "/conversation/cognitiveservices/v1?initialSilenceTimeoutMs={:d}&language=zh-CN" speech_config = speechsdk.SpeechConfig(subscription=speech_key, endpoint=template.format(service_region, int(initial_silence_timeout_ms)))

中国区完整代码如下:

#!/usr/bin/env python # coding: utf-8 # Copyright (c) Microsoft. All rights reserved. # Licensed under the MIT license. See LICENSE.md file in the project root for full license information. """ Speech recognition samples for the Microsoft Cognitive Services Speech SDK """ import time import wave try: import azure.cognitiveservices.speech as speechsdk except ImportError: print(""" Importing the Speech SDK for Python failed. Refer to https://docs.microsoft.com/azure/cognitive-services/speech-service/quickstart-python for installation instructions. """) import sys sys.exit(1) # Set up the subscription info for the Speech Service: # Replace with your own subscription key and service region (e.g., "westus"). speech_key, service_region = "your key", "chinaeast2" # Specify the path to an audio file containing speech (mono WAV / PCM with a sampling rate of 16 # kHz). filename = "D:FFOutputspeechtotext.wav" def speech_recognize_once_from_file_with_custom_endpoint_parameters(): """performs one-shot speech recognition with input from an audio file, specifying an endpoint with custom parameters""" initial_silence_timeout_ms = 15 * 1e3 template = "wss://{}.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?initialSilenceTimeoutMs={:d}&language=zh-CN" speech_config = speechsdk.SpeechConfig(subscription=speech_key, endpoint=template.format(service_region, int(initial_silence_timeout_ms))) print("Using endpoint", speech_config.get_property(speechsdk.PropertyId.SpeechServiceConnection_Endpoint)) audio_config = speechsdk.audio.AudioConfig(filename=filename) # Creates a speech recognizer using a file as audio input. # The default language is "en-us". speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config) result = speech_recognizer.recognize_once() # Check the result if result.reason == speechsdk.ResultReason.RecognizedSpeech: print("Recognized: {}".format(result.text)) elif result.reason == speechsdk.ResultReason.NoMatch: print("No speech could be recognized: {}".format(result.no_match_details)) elif result.reason == speechsdk.ResultReason.Canceled: cancellation_details = result.cancellation_details print("Speech Recognition canceled: {}".format(cancellation_details.reason)) if cancellation_details.reason == speechsdk.CancellationReason.Error: print("Error details: {}".format(cancellation_details.error_details)) speech_recognize_once_from_file_with_custom_endpoint_parameters()

需要注意的是,如果我们使用SDK识别麦克风中的语音,则将

speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config),修改为:

speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config)