Development environment:

Windows 10 + IntelliJ IDEA + JDK 1.8 + Scala 2.12.4

Steps:

- Write the Scala test class

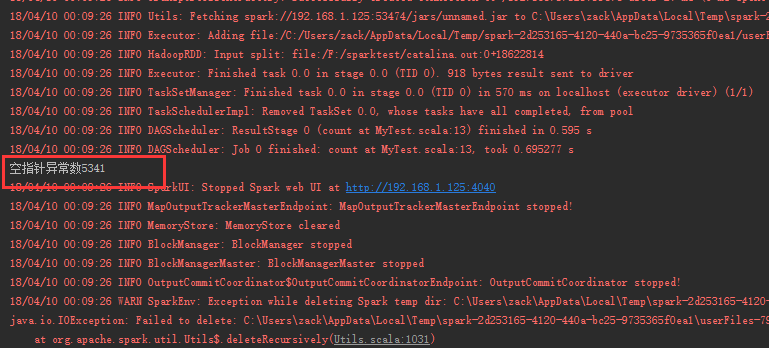

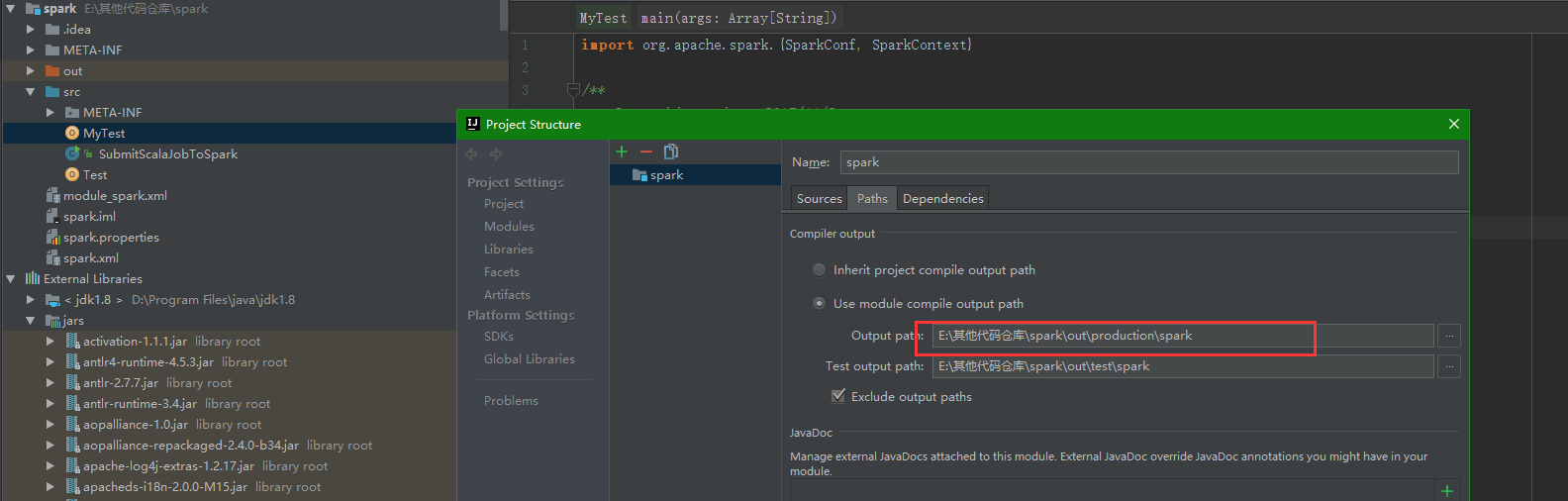

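```scala
import org.apache.spark.{SparkConf, SparkContext}

object MyTest {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
    conf.setAppName("MyTest")
    // Use local mode only when no master was supplied. A master set with
    // setMaster() in code would override spark-submit's --master flag.
    conf.setIfMissing("spark.master", "local")
    val sc = new SparkContext(conf)
    // Read the Tomcat log; with a cluster master, this path must exist on every worker
    val input = sc.textFile("file:///F:/sparktest/catalina.out")
    // Count the lines that mention a NullPointerException
    val count = input.filter(_.contains("java.lang.NullPointerException")).count()
    println("Number of NullPointerExceptions: " + count)
    sc.stop()
  }
}
```

- Set the project output path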

- Configure the jar packaging
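Once the artifact is built, it can be worth confirming that the jar really contains the class that will be passed to `--class`. The following is a minimal sketch using only `java.util.jar`; the class name `JarCheck` and its default jar path are hypothetical, and it assumes `MyTest` sits in the default package, as in the Scala test class.

```java
import java.io.IOException;
import java.util.jar.JarFile;

// Hypothetical helper (not part of the original setup): checks that a built jar
// contains the class file for a given fully qualified class name.
public class JarCheck {

    // Returns true if the jar has an entry such as "MyTest.class" or "com/x/MyTest.class".
    public static boolean containsClass(String jarPath, String className) throws IOException {
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getJarEntry(entryName) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed default: IDEA's artifact output, relative to the project root
        String jarPath = args.length > 0 ? args[0] : "out/artifacts/unnamed/unnamed.jar";
        // A Scala `object MyTest` compiles to MyTest.class (static forwarder holding main)
        // and MyTest$.class (the singleton itself); both should be in the jar.
        System.out.println("MyTest.class  present: " + containsClass(jarPath, "MyTest"));
        System.out.println("MyTest$.class present: " + containsClass(jarPath, "MyTest$"));
    }
}
```

If `MyTest.class` is missing, the submission will not be able to load the main class named by `--class`.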

- Write the Java caller class (it depends on the Spark jars; you can simply add all the related jars to the lib dependencies)

```java
import org.apache.spark.deploy.SparkSubmit;

public class SubmitScalaJobToSpark {
    public static void main(String[] args) {
        String[] arg0 = new String[]{
                "--master", "spark://node101:7077",
                "--deploy-mode", "client",
                "--name", "test java submit job to spark",
                "--class", "MyTest",          // class containing the Spark job's main method
                "--executor-memory", "1G",    // memory per executor
                // jar path; backslashes must be escaped inside a Java string literal
                "E:\\其他代码仓库\\spark\\out\\artifacts\\unnamed\\unnamed.jar",
        };
        SparkSubmit.main(arg0);
    }
}
```

- Run the test
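Running the caller should print the NullPointerException count computed by `MyTest`. To sanity-check that number, the same filter-and-count can be run over the log file without Spark. This is a minimal sketch, not part of the original setup: the class `NpeLineCount` is hypothetical, and it reads the file as ISO-8859-1 so that arbitrary log bytes never cause a decoding error while the ASCII marker still matches.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.stream.Stream;

// Hypothetical helper: mirrors input.filter(_.contains(marker)).count in MyTest.
public class NpeLineCount {

    public static long countLinesContaining(Path file, String marker) throws IOException {
        // ISO-8859-1 maps every byte to a char, so decoding cannot fail;
        // an ASCII marker is matched exactly as it appears in the file.
        try (Stream<String> lines = Files.lines(file, StandardCharsets.ISO_8859_1)) {
            return lines.filter(line -> line.contains(marker)).count();
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed default: the same log file the Scala job reads
        Path log = Paths.get(args.length > 0 ? args[0] : "F:/sparktest/catalina.out");
        long count = countLinesContaining(log, "java.lang.NullPointerException");
        System.out.println("Number of NullPointerExceptions: " + count);
    }
}
```

Both this sketch and `sc.textFile` split on line terminators and do a plain substring match, so on the same file the two counts should agree.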