k8s cluster deployment

Environment:

CentOS 7.x, k8s 1.12, docker 18.xx-ce, etcd 3.x, flannel 0.10 (flannel gives all containers network access across machines and uses etcd to store its network routes)

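Option 1, minikube: suited to day-to-day development

Option 2, kubeadm

Drawbacks:

(1) The certificates it issues are valid for only one year

(2) It is still in beta

Option 3, binary installation: recommended

Latest stable release at the time of writing: v1.12.3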

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.12.md#v1123

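Choosing a cluster topology:

Single-master cluster:

The master is a single point of failure: if it goes down, the whole cluster goes down.

Multi-master cluster: put a load balancer (LB) in front. All nodes connect to the LB, the LB forwards requests to the apiservers, and the apiservers then carry out the operations.

Here we first deploy a single-master cluster:

Start three virtual machines.

We start with a single master and then expand to multiple masters.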

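1. One master and two nodes.

2. Install etcd on all three machines to form a cluster (three members tolerate one failure; the official recommendation is five members, which tolerate two).

3. Install cfssl.

4. Create self-signed certificates with openssl or cfssl; cfssl is used here because it is simpler.

cfssl: generates certificates

cfssljson: takes the JSON output of cfssl and writes the certificate and key files

cfssl-certinfo: shows information about a generated certificate

The hosts field in the script lists the IPs of the machines running etcd.

5. Issue SSL certificates for etcd.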
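The sizing rule in step 2 follows from etcd's Raft majority quorum: a cluster of n members stays writable only while floor(n/2)+1 of them are alive. A minimal sketch of that arithmetic (the function names are illustrative, not etcd tools):

```shell
#!/bin/sh
# Raft majority quorum for an etcd cluster of n members:
# quorum = floor(n/2) + 1, tolerated failures = n - quorum.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerates=$(etcd_fault_tolerance "$n")"
done
```

The loop shows why odd sizes are used: 3 members need 2 and tolerate 1 failure, 4 members need 3 and still tolerate only 1, and 5 members need 3 and tolerate 2.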

The three hosts:

192.168.20.11 (master): kube-apiserver, kube-controller-manager, kube-scheduler, etcd

192.168.20.12 (node): kubelet, kube-proxy, docker, flannel, etcd

192.168.20.13 (node): kubelet, kube-proxy, docker, flannel, etcd

Before installing etcd, we first create a self-signed CA.

install_cfssl.sh

#######

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

etcd_cert.sh
#########
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.20.11",
    "192.168.20.12",
    "192.168.20.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

sh install_cfssl.sh

Running sh etcd_cert.sh produces the following files:

[root@hu-001 etcd-cert]# ll
total 44
-rw-r--r-- 1 root root  287 Dec 4 04:01 ca-config.json
-rw-r--r-- 1 root root  956 Dec 4 04:01 ca.csr
-rw-r--r-- 1 root root  209 Dec 4 04:01 ca-csr.json
-rw------- 1 root root 1679 Dec 4 04:01 ca-key.pem
-rw-r--r-- 1 root root 1265 Dec 4 04:01 ca.pem
-rw-r--r-- 1 root root 1088 Aug 27 09:51 etcd-cert.sh
-rw-r--r-- 1 root root 1013 Dec 4 04:01 server.csr
-rw-r--r-- 1 root root  293 Dec 4 04:01 server-csr.json
-rw------- 1 root root 1679 Dec 4 04:01 server-key.pem
-rw-r--r-- 1 root root 1338 Dec 4 04:01 server.pem
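Before copying these files around, it can help to confirm that the key pairs exist and that the private keys kept the owner-only mode shown in the listing above. A minimal sketch; `check_etcd_certs` is a hypothetical helper, not part of the course scripts:

```shell
#!/bin/sh
# Hypothetical helper: check that etcd_cert.sh left non-empty CA and server
# key pairs in a directory, and that both private keys are owner-only (600).
check_etcd_certs() {
  dir=${1:-.}
  rc=0
  for f in ca.pem ca-key.pem server.pem server-key.pem; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      rc=1
    fi
  done
  for key in ca-key.pem server-key.pem; do
    [ -e "$dir/$key" ] || continue
    # Characters 5-10 of `ls -l` output are the group and other permission bits.
    perms=$(ls -l "$dir/$key" | cut -c5-10)
    if [ "$perms" != "------" ]; then
      echo "too permissive: $dir/$key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage, from the certificate directory: `check_etcd_certs . && echo "etcd certificates look complete"`.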

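Then upload the downloaded etcd package to the servers. (Video timestamp: one hour; deploying etcd, ran into a problem.)

Next, look at what etcd's startup parameters mean.

What are ports 2379 and 2380 for?

2379: client port (clients such as etcdctl, flannel and kube-apiserver read and write data here)

2380: peer port (communication between cluster members)

After the etcd cluster is deployed, use etcdctl to check whether each member is healthy.

Install Docker on the nodes

Deploy Flannel, the container cluster network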

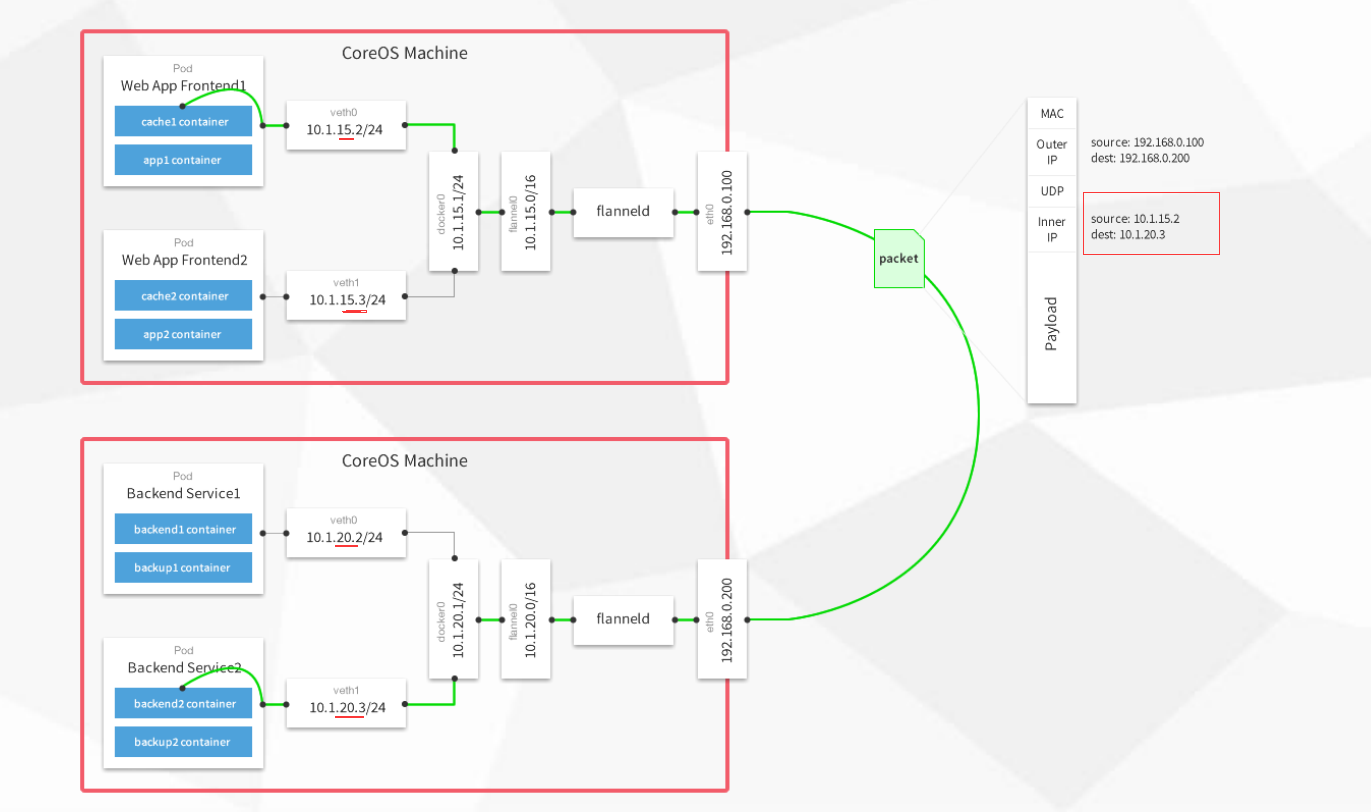

Overlay Network

VXLAN

Flannel

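Calico (a network solution used by large companies: https://www.cnblogs.com/netonline/p/9720279.html)

How the Flannel network works

First, write the subnet range to be allocated into etcd, for flannel to use.

The configured range must not overlap the hosts' network; write both the range and the network (backend) type into etcd.

After starting flannel, restart Docker so that containers and flannel are on the same subnet.

Then start a Docker container on each of the two hosts and ping one container from the other: although they are on different subnets, the ping succeeds (this is what Flannel does).

Next, deploy the k8s components.

master:

kube-apiserver must be deployed first; the other two components can follow in any order.

First, create a self-signed certificate for kube-apiserver.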

k8s_cert.sh

###############################################

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

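#-----------------------
# hosts: keep 10.0.0.1 (first IP of --service-cluster-ip-range, used by the "kubernetes" Service)
# and 127.0.0.1 unchanged, then add the master IP, LB IP and VIP.
# Keep "O": "k8s" and "OU": "System" unchanged.
# JSON does not allow comments, so these notes stay outside the heredoc.
cat > server-csr.json <<EOF
{
"CN": "kubernetes",
"hosts": [
"10.0.0.1",
"127.0.0.1",
"192.168.20.11",
"kubernetes",
"kubernetes.default",
"kubernetes.default.svc",
"kubernetes.default.svc.cluster",
"kubernetes.default.svc.cluster.local"
],
"key": {
"algo": "rsa",
"size": 2048
},
"names": [
{
"C": "CN",
"L": "BeiJing",
"ST": "BeiJing",
"O": "k8s",
"OU": "System"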

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Deploy the kube-apiserver component

apiserver.sh

#################################################

#!/bin/bash

MASTER_ADDRESS=$1

ETCD_SERVERS=$2

# Flag notes (a comment cannot follow a line-continuation "\", so they are listed here):
#   --v=4: log level; --etcd-servers: etcd addresses; --bind-address: IP to bind to;
#   --secure-port=6443: HTTPS listening port (the default); --advertise-address: address advertised to the cluster;
#   --enable-admission-plugins: admission control plugins to enable;
#   --enable-bootstrap-token-auth: enable bootstrap token authentication; --token-auth-file: token file.
cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=${ETCD_SERVERS} \
--bind-address=${MASTER_ADDRESS} \
--secure-port=6443 \
--advertise-address=${MASTER_ADDRESS} \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

The detailed steps follow.

Deploying a single-master k8s cluster

Notes link:

https://www.jianshu.com/p/33b5f47ababc

1. Install the cfssl tools (with the script below):

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

[root@hu-001 tools]# ls -lh /usr/local/bin/cfssl*

-rwxr-xr-x 1 root root 9.9M Dec 4 03:57 /usr/local/bin/cfssl

-rwxr-xr-x 1 root root 6.3M Dec 4 03:58 /usr/local/bin/cfssl-certinfo

-rwxr-xr-x 1 root root 2.2M Dec 4 03:58 /usr/local/bin/cfssljson

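2. Use cfssl to create the CA certificate and etcd's TLS certificates

2.1 Create the CA certificate

mkdir -p /data/k8s/etcd-cert/   # a directory used only for generating the certificates

cd /data/k8s/etcd-cert

Create the CA config file: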

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF
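cfssl reads the "expiry" value as a duration in hours, so 87600h is easier to judge once converted to days. A minimal sketch (the function name is illustrative):

```shell
#!/bin/sh
# Convert a cfssl "expiry" given in whole hours into days.
hours_to_days() {
  echo $(( $1 / 24 ))
}

days=$(hours_to_days 87600)
echo "87600h = ${days} days, about $(( days / 365 )) years"
```

87600h works out to 3650 days, roughly ten years, compared with the one-year (8760h) certificates mentioned for kubeadm.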

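Field notes:

"ca-config.json": can define several profiles, each with its own expiry, usages and other parameters; a specific profile is chosen later when signing a certificate;

"signing": allows certificates signed with this profile to be used for signing (digital signatures); the CA certificate ca.pem itself, created with -initca, has CA=TRUE;

"server auth": certificates signed with this profile can be presented by a server, and clients verify them against this CA;

"client auth": certificates signed with this profile can be presented by a client, and servers verify them against this CA;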

Create the CA certificate signing request (CSR):

cat > ca-csr.json <<EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

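Field notes:

"CN": Common Name. kube-apiserver extracts this field from a client certificate as the request's user name; browsers use it to verify whether a website is legitimate;

"O": Organization. kube-apiserver extracts this field as the group the requesting user belongs to;

These two fields matter later when Kubernetes runs in RBAC mode, because the roles and permissions of kubelet, admin and so on are tied to them, so they must be set correctly in the certificates; this is explained when we deploy Kubernetes.

For etcd these two fields are not very significant; just follow the configuration.

Next, generate the CA certificate and private key:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

This command produces three files: ca.csr, ca-key.pem and ca.pem.

3. Create etcd's TLS certificate:

Create the etcd certificate signing request: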

cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"192.168.20.11",

"192.168.20.12",

"192.168.20.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

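Field notes:

hosts: the IPs of the etcd cluster members (think of it as a trust list), i.e. the addresses for which this certificate is valid; a client connecting to a member through an address not in this list will reject the member's certificate.

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

This command produces three files: server.csr, server-key.pem and server.pem.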
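Because the hosts list must match the real members exactly, it can be generated from the member IPs instead of typed by hand. A minimal sketch; `make_etcd_server_csr` is a hypothetical helper that prints the same fields as server-csr.json above:

```shell
#!/bin/sh
# Hypothetical helper: print an etcd server CSR (same fields as server-csr.json)
# whose "hosts" array contains every IP passed as an argument.
make_etcd_server_csr() {
  printf '{\n  "CN": "etcd",\n  "hosts": [\n'
  n=$#
  i=0
  for ip in "$@"; do
    i=$((i + 1))
    # JSON arrays must not have a trailing comma after the last element.
    if [ "$i" -lt "$n" ]; then sep=','; else sep=''; fi
    printf '    "%s"%s\n' "$ip" "$sep"
  done
  printf '  ],\n  "key": { "algo": "rsa", "size": 2048 },\n'
  printf '  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]\n}\n'
}
```

Usage: `make_etcd_server_csr 192.168.20.11 192.168.20.12 192.168.20.13 > server-csr.json`, then run the same cfssl gencert command.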

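You can copy the TLS files to your usual certificate directory or keep them in the current directory:

mkdir -p /data/etcd/ssl && cp *pem /data/etcd/ssl/

The steps above can be put into a single script.

Recommendation: since this is a cluster deployment on personal VMs, it is best to disable the firewall and synchronize the time on all machines.

4. Install the etcd service

Upload the downloaded package to the server (you can download it from https://github.com/etcd-io/etcd/releases):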

etcd-v3.3.10-linux-amd64.tar.gz

mkdir -p /data/etcd/{cfg,bin,ssl}

cp /data/k8s/etcd-cert/{ca.pem,server-key.pem,server.pem} /data/etcd/ssl/

tar -xf etcd-v3.3.10-linux-amd64.tar.gz

cp etcd-v3.3.10-linux-amd64/etcd /data/etcd/bin

cp etcd-v3.3.10-linux-amd64/etcdctl /data/etcd/bin

[root@hu-001 etcd-cert]# cat etcd.sh

#!/bin/bash

# example: ./etcd.sh etcd01 192.168.20.11 etcd02=https://192.168.20.12:2380,etcd03=https://192.168.20.13:2380

ETCD_NAME=$1

ETCD_IP=$2

ETCD_CLUSTER=$3

WORK_DIR=/data/etcd

cat <<EOF >$WORK_DIR/cfg/etcd

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

# In the unit below, \${...} is escaped so that systemd expands these values from
# EnvironmentFile at start-up, while ${WORK_DIR} is expanded by this script now.
# The trailing "\" joins all ExecStart options into one line.
cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=${WORK_DIR}/cfg/etcd
ExecStart=${WORK_DIR}/bin/etcd \
--name=\${ETCD_NAME} \
--data-dir=\${ETCD_DATA_DIR} \
--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=\${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=${WORK_DIR}/ssl/server.pem \
--key-file=${WORK_DIR}/ssl/server-key.pem \
--peer-cert-file=${WORK_DIR}/ssl/server.pem \
--peer-key-file=${WORK_DIR}/ssl/server-key.pem \
--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \
--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

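Check the node with systemctl status etcd.service; the member should be up. (Because the unit uses Type=notify, systemctl restart etcd on the first member may block until a second member starts and the cluster has a quorum.)

Parameter notes:

1. Data directory: this setup keeps etcd data in ETCD_DATA_DIR=/var/lib/etcd/default.etcd. The etcd.service above does not set a working directory; if you add one, for example

WorkingDirectory=/var/lib/etcd/

create that directory before starting the service.

2. To secure communication, specify etcd's own certificate and key (cert-file, key-file), the peer certificate, key and CA (peer-cert-file, peer-key-file, peer-trusted-ca-file), and the CA used to verify clients (trusted-ca-file).

The corresponding certificate options are: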

--cert-file=/data/etcd/ssl/server.pem

--key-file=/data/etcd/ssl/server-key.pem

--peer-cert-file=/data/etcd/ssl/server.pem

--peer-key-file=/data/etcd/ssl/server-key.pem

--trusted-ca-file=/data/etcd/ssl/ca.pem

--peer-trusted-ca-file=/data/etcd/ssl/ca.pem

3. Initial cluster membership (the peer endpoints of all members), taken from ETCD_INITIAL_CLUSTER in the config file:

--initial-cluster=${ETCD_INITIAL_CLUSTER}

4. Listen and advertise URLs for peer and client traffic:

--initial-advertise-peer-urls ${ETCD_INITIAL_ADVERTISE_PEER_URLS}

--listen-peer-urls ${ETCD_LISTEN_PEER_URLS}

--listen-client-urls ${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379

--advertise-client-urls ${ETCD_ADVERTISE_CLIENT_URLS}

5. Because this creates a new cluster, set the cluster state to new:

--initial-cluster-state=new

6. The member name, read from the config file:

--name ${ETCD_NAME}

The config file is loaded with EnvironmentFile=/data/etcd/cfg/etcd.

Now copy the corresponding files to the other two nodes:

ssh-keygen

ssh-copy-id root@192.168.20.12

ssh-copy-id root@192.168.20.13

scp -r /data/etcd root@192.168.20.12:/data/

scp -r /data/etcd root@192.168.20.13:/data/

scp /usr/lib/systemd/system/etcd.service root@192.168.20.12:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.20.13:/usr/lib/systemd/system/

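Then edit the config file on each of the other two nodes (the example below is etcd03 on 192.168.20.13; for etcd02 use its name and 192.168.20.12):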

vim /data/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.20.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.20.13:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.20.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.20.13:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.20.11:2380,etcd02=https://192.168.20.12:2380,etcd03=https://192.168.20.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd
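Hand-editing the copied file on every node is easy to get wrong. A minimal sketch that renders the same config for one member from its name, its IP, and the full member list; `render_etcd_cfg` is a hypothetical helper, and its output has the same layout as the file edited above:

```shell
#!/bin/sh
# Hypothetical helper: print /data/etcd/cfg/etcd for one member.
# Args: member name, member IP, then every member as name=ip (including this one).
render_etcd_cfg() {
  name=$1
  ip=$2
  shift 2
  cluster=''
  for pair in "$@"; do
    # ${pair%%=*} is the member name, ${pair#*=} its IP.
    cluster="${cluster:+$cluster,}${pair%%=*}=https://${pair#*=}:2380"
  done
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

Usage on 192.168.20.12: `render_etcd_cfg etcd02 192.168.20.12 etcd01=192.168.20.11 etcd02=192.168.20.12 etcd03=192.168.20.13 > /data/etcd/cfg/etcd`, then reload and restart etcd as above.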

The three-member etcd cluster is now deployed.

Check the health of the etcd cluster:

[root@hu-001 etcd-v3.3.10-linux-amd64]# /data/etcd/bin/etcdctl \
> --ca-file=/data/etcd/ssl/ca.pem \
> --cert-file=/data/etcd/ssl/server.pem \
> --key-file=/data/etcd/ssl/server-key.pem cluster-health

member 98aa99c4dcd6c4 is healthy: got healthy result from https://192.168.20.11:2379

member 12446003b2a53d43 is healthy: got healthy result from https://192.168.20.12:2379

member 667c9c7ba890c3f7 is healthy: got healthy result from https://192.168.20.13:2379

cluster is healthy
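In a deployment script it is handier to turn this output into a pass/fail result. A minimal sketch that reads the cluster-health output shown above on stdin; `require_healthy_members` is a hypothetical helper that only matches the healthy-line wording seen in that output:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the expected number of members report
# "is healthy: got healthy result" and the summary line is "cluster is healthy".
require_healthy_members() {
  expected=$1
  out=$(cat)
  healthy=$(printf '%s\n' "$out" | grep -c 'is healthy: got healthy result')
  if [ "$healthy" -eq "$expected" ] && printf '%s\n' "$out" | grep -qx 'cluster is healthy'; then
    echo "ok: $healthy/$expected members healthy"
  else
    echo "unhealthy: $healthy/$expected members healthy" >&2
    return 1
  fi
}
```

Usage: pipe the etcdctl command above into `require_healthy_members 3`.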

Install Docker on the nodes

Install the packages Docker depends on:

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Docker package repository:

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Installing from this repository may fail; in that case use the Aliyun mirror instead:

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker CE:

yum -y install docker-ce

Start Docker and enable it at boot:

systemctl start docker

systemctl enable docker

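Flannel cluster network deployment

Next, deploy the Flannel container network.

https://www.cnblogs.com/kevingrace/p/6859114.html

First, write the subnet range allocated to flannel (it must not be on the hosts' network) into etcd for flannel to use: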

/data/etcd/bin/etcdctl --ca-file=/data/etcd/ssl/ca.pem --cert-file=/data/etcd/ssl/server.pem --key-file=/data/etcd/ssl/server-key.pem --endpoints="https://192.168.20.11:2379,https://192.168.20.12:2379,https://192.168.20.13:2379" set /coreos.com/network/config '{"Network":"192.168.10.0/16","Backend":{"Type":"vxlan"}}'

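Here the hosts are on 192.168.20.0 and flannel is given 192.168.10.0. Be aware, though, that with a /16 prefix, 192.168.10.0/16 covers 192.168.0.0 to 192.168.255.255, which includes the host network 192.168.20.0/24; to really keep the two apart, pick a range that does not contain the host addresses (for example 172.17.0.0/16).

The backend type is declared as vxlan.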
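Whether a flannel range overlaps the host network can be checked before writing it to etcd. A minimal sketch using only shell arithmetic; the function names are illustrative:

```shell
#!/bin/sh
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) covered by a CIDR.
# Host bits are masked off, so 192.168.10.0/16 becomes 192.168.0.0/16.
cidr_bounds() {
  ip=$(ip_to_int "${1%/*}")
  len=${1#*/}
  size=$(( 1 << (32 - len) ))
  start=$(( ip / size * size ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed if the two CIDR blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For example, `cidrs_overlap 192.168.10.0/16 192.168.20.0/24 && echo "overlap: pick another range"` prints the warning, while 172.17.0.0/16 does not overlap 192.168.20.0/24.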

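Upload the flannel package to each node:

flannel-v0.10.0-linux-amd64.tar.gz

mkdir -p /data/kubernetes/{bin,cfg,ssl}

tar -xf flannel-v0.10.0-linux-amd64.tar.gz -C /data/kubernetes/bin/

Then run the script below.

When I ran it, I left out the Docker part at first. That part only adds two lines to the existing Docker unit file, so you can make the change by hand or fold it into the script later.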

#!/bin/bash
# ${1:-"http://127.0.0.1:2379"}: if no first argument is passed, http://127.0.0.1:2379 is used.
ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/data/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/data/etcd/ssl/ca.pem \
-etcd-certfile=/data/etcd/ssl/server.pem \
-etcd-keyfile=/data/etcd/ssl/server-key.pem"
EOF

# \$ is escaped so that systemd, not this script, expands the variable at start-up.
cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/data/kubernetes/cfg/flanneld
ExecStart=/data/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/data/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# The subnet information is written to /run/flannel/subnet.env; Docker reads it from there at start-up.
# Docker's unit gets two new lines (below); after flannel starts successfully, restart Docker.
#EnvironmentFile=/run/flannel/subnet.env
#ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

cat <<EOF >/usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld
systemctl restart docker
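To see what Docker actually receives, the sketch below approximates how the lease flanneld writes (FLANNEL_SUBNET, FLANNEL_MTU, FLANNEL_IPMASQ) becomes dockerd flags. It is a rough illustration under that assumption, NOT the real mk-docker-opts.sh, and the sample values in the usage note are hypothetical:

```shell
#!/bin/sh
# Rough approximation, not the real mk-docker-opts.sh.
# Args: the key name (what the unit passes with -k) and the flannel subnet file.
# docker0 gets this node's flannel subnet (--bip) and the overlay MTU; when
# flanneld already masquerades (--ip-masq), Docker's own masquerading is turned off.
docker_opts_from_subnet_env() {
  key=$1
  # The file is plain KEY=VALUE shell syntax, so it can be sourced.
  . "$2"
  ipmasq=true
  if [ "$FLANNEL_IPMASQ" = "true" ]; then
    ipmasq=false
  fi
  printf '%s=" --bip=%s --ip-masq=%s --mtu=%s"\n' \
    "$key" "$FLANNEL_SUBNET" "$ipmasq" "$FLANNEL_MTU"
}
```

With a hypothetical lease of FLANNEL_SUBNET=172.17.83.1/24, FLANNEL_MTU=1450 and FLANNEL_IPMASQ=true, this prints a line setting `--bip=172.17.83.1/24 --ip-masq=false --mtu=1450` under the given key, which is the kind of value dockerd expands in its ExecStart.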

#!/bin/bash
# ${1:-"http://127.0.0.1:2379"}: if no first argument is passed, http://127.0.0.1:2379 is used.
ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/data/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/data/etcd/ssl/ca.pem \
-etcd-certfile=/data/etcd/ssl/server.pem \
-etcd-keyfile=/data/etcd/ssl/server-key.pem"
EOF

# \$ is escaped so that systemd, not this script, expands the variable at start-up.
cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/data/kubernetes/cfg/flanneld
ExecStart=/data/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/data/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# The subnet information is written to /run/flannel/subnet.env; Docker reads it from there at start-up.
# Change Docker's unit by adding the two lines below; after flannel starts successfully, restart Docker.
#EnvironmentFile=/run/flannel/subnet.env
#ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld
systemctl restart docker
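The `ETCD_ENDPOINTS=${1:-...}` line uses default-value expansion: it takes the first argument, or the default when the argument is missing or empty. The fallback works on these nodes because etcd.service also listens on http://127.0.0.1:2379. A minimal demonstration (`show_endpoints` is illustrative):

```shell
#!/bin/sh
# ${1:-word} expands to the first argument, or to "word" if $1 is unset or empty.
show_endpoints() {
  ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}
  echo "$ETCD_ENDPOINTS"
}

show_endpoints        # no argument: prints http://127.0.0.1:2379
show_endpoints "https://192.168.20.11:2379,https://192.168.20.12:2379,https://192.168.20.13:2379"
```

To point flannel at all three members explicitly, pass the https list as the script's first argument (for example `sh flannel.sh https://192.168.20.11:2379,...`, where flannel.sh is a hypothetical name for the script above).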

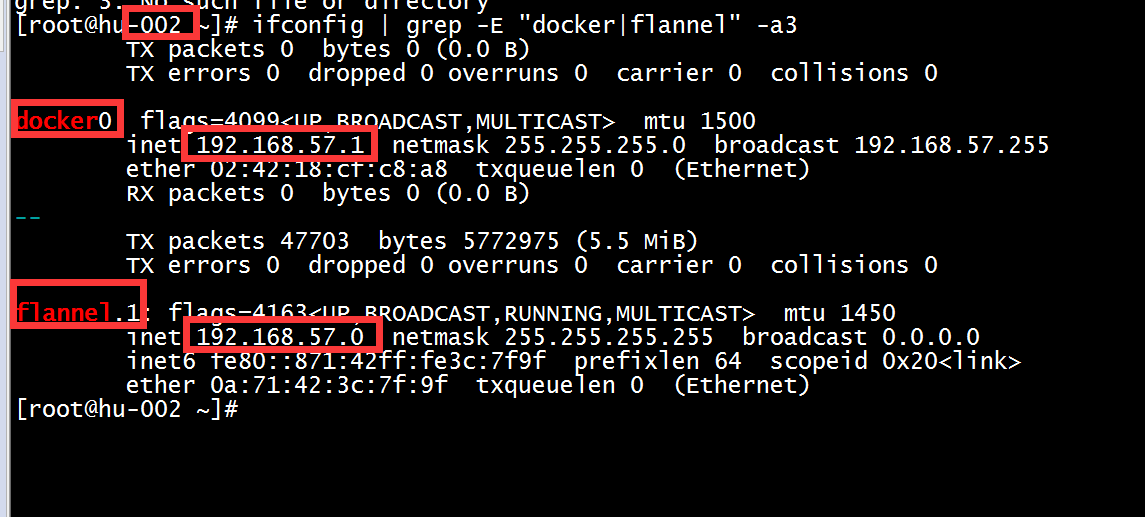

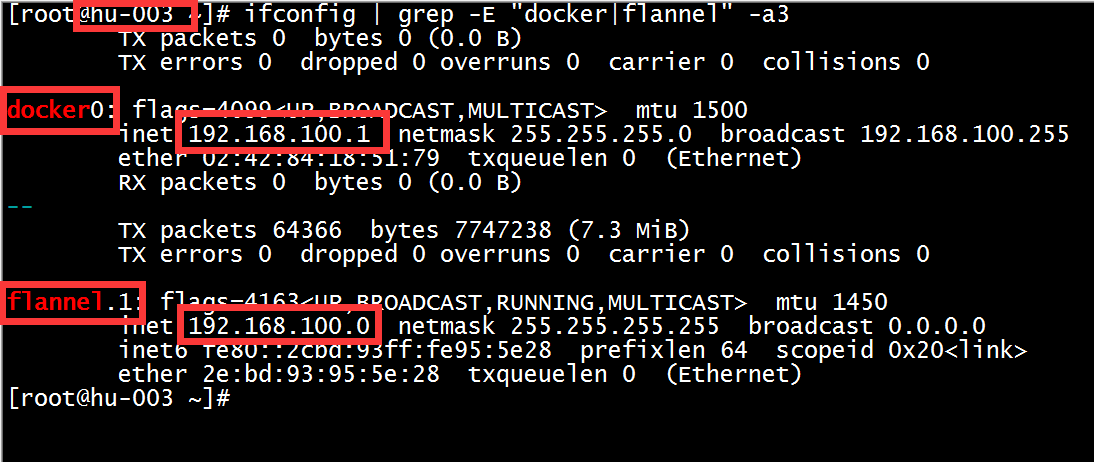

这样我们看到两台Node节点的网络情况是这样的

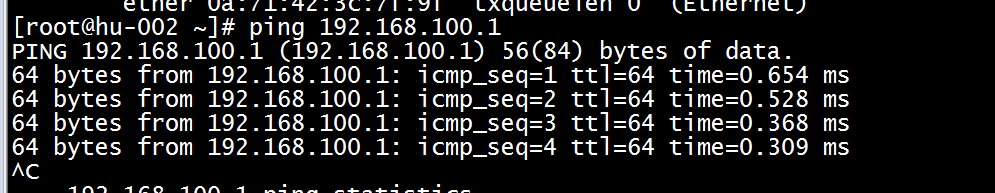

我们看到两台处于不同的网段,但是确实可以互ping相通

这个时候我们可以在两台Node节点上分别启动一个容器,然后看两个容器的网络是否相通

部署Master组件

必须第一部署apiserver,其他两个组件可以不按顺序

首先利用脚本自签一个apiserver用到的证书:

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.20.11",

"192.168.20.12",

"192.168.20.13",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

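# Keep 10.0.0.1 and 127.0.0.1 in hosts (k8s uses them itself: 10.0.0.1 is the first IP of --service-cluster-ip-range, assigned to the kubernetes Service); then add the master IP, LB IP and VIP.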

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
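The 10.0.0.1 entry in hosts is not arbitrary: the kubernetes Service in the default namespace is assigned the first address of --service-cluster-ip-range (10.0.0.0/24 in apiserver.sh), and pods reach the apiserver through that ClusterIP, so the certificate must be valid for it. A sketch that derives the address from a CIDR, useful if you change the range; `first_service_ip` is a hypothetical helper:

```shell
#!/bin/sh
# Print the first usable IP of an IPv4 CIDR (network address + 1).
first_service_ip() {
  cidr=$1
  old_ifs=$IFS
  IFS=.
  set -- ${cidr%/*}
  IFS=$old_ifs
  len=${cidr#*/}
  n=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
  size=$(( 1 << (32 - len) ))
  # Mask off host bits, then step to the first address.
  n=$(( n / size * size + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

`first_service_ip 10.0.0.0/24` gives 10.0.0.1, the address kept in server-csr.json.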

mkdir /data/kubernetes/{bin,ssl,cfg} -p

mv ca.pem server.pem ca-key.pem server-key.pem /data/kubernetes/ssl/

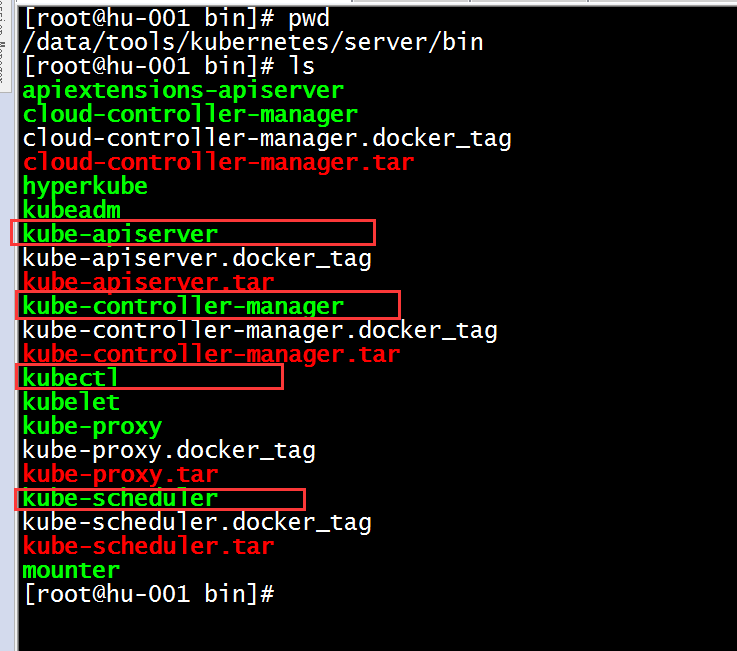

[root@hu-001 tools]# tar -xf kubernetes-server-linux-amd64.tar.gz

After extracting, copy the required binaries:

[root@hu-001 bin]# cp kubectl kube-apiserver kube-controller-manager kube-scheduler /data/kubernetes/bin/

Create the bootstrap token with the command below; the resulting token.csv looks like this:

# Create the TLS Bootstrapping token

#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

[root@hu-001 master_sh]# cat /data/kubernetes/cfg/token.csv
f23bd9cb6289ab11ddb622ec9de9ed6f,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
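The commented BOOTSTRAP_TOKEN pipeline can be turned into the token.csv line directly. Each line of the static token file read by --token-auth-file has the form token,user,uid,"group1,group2,..."; the user, uid and group below are the ones shown in the file above. A minimal sketch:

```shell
#!/bin/sh
# 16 random bytes printed as hex by od, spaces removed: 32 hex characters.
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
# token,user,uid,"group"
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$BOOTSTRAP_TOKEN"
```

Redirect the output to /data/kubernetes/cfg/token.csv before running apiserver.sh, and reuse the same token later when kubelets bootstrap.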

The apiserver.sh script:

#!/bin/bash

MASTER_ADDRESS=$1

ETCD_SERVERS=$2

mkdir -p /data/kubernetes/{cfg,bin,ssl}

cat <<EOF >/data/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=${ETCD_SERVERS} \
--bind-address=${MASTER_ADDRESS} \
--secure-port=6443 \
--advertise-address=${MASTER_ADDRESS} \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/data/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/data/kubernetes/ssl/server.pem \
--tls-private-key-file=/data/kubernetes/ssl/server-key.pem \
--client-ca-file=/data/kubernetes/ssl/ca.pem \
--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/data/etcd/ssl/ca.pem \
--etcd-certfile=/data/etcd/ssl/server.pem \
--etcd-keyfile=/data/etcd/ssl/server-key.pem"
EOF

# \$ is escaped so that systemd expands KUBE_APISERVER_OPTS from EnvironmentFile at start-up.
cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/data/kubernetes/cfg/kube-apiserver
ExecStart=/data/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

[root@hu-001 master_sh]# sh apiserver.sh 192.168.20.11 https://192.168.20.11:2379,https://192.168.20.12:2379,https://192.168.20.13:2379

At this point kube-apiserver has started successfully (video timestamp: 38 minutes).
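The second argument to apiserver.sh is the comma-separated list of etcd client endpoints; the same list is also what etcdctl --endpoints expects. A minimal sketch that builds it from the member IPs so it cannot drift from the cluster; `etcd_endpoints` is a hypothetical helper:

```shell
#!/bin/sh
# Build "https://IP:2379,https://IP:2379,..." from a list of etcd member IPs.
etcd_endpoints() {
  list=''
  for ip in "$@"; do
    list="${list:+$list,}https://${ip}:2379"
  done
  echo "$list"
}
```

Usage: `sh apiserver.sh 192.168.20.11 "$(etcd_endpoints 192.168.20.11 192.168.20.12 192.168.20.13)"`, which expands to the same command as the one run above.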