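Guiding questions:

1. How do you get the complete Hadoop code from the official src package?

2. What steps let you view the implementation of a particular Hadoop function or class?

3. What role does Maven play?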

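If you want to do development work, studying the source code helps enormously. Without understanding how things work underneath, Hadoop is a black box, and when a problem comes up you have no idea where to start. So this post shows you:

I. How to get the source code

II. How to attach the source code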

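I. How to get the source code

1. Download the Hadoop source package (a Maven project)

(1) From the official website

First, download the source package hadoop-2.4.0-src.tar.gz from the official Hadoop website.

Official download link

If you're not sure how to download from the official site, see: Beginner's guide: an introduction to the Hadoop website, how to download each Hadoop (2.4) version, and how to browse the Hadoop API

(2) From a cloud drive

You can also download it from Baidu Pan: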

http://pan.baidu.com/s/1kToPuGB

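2. Get the source code with Maven

There are two ways to get the source: from the command line or through Eclipse. This post covers the command-line approach.

Getting the source from the command line: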

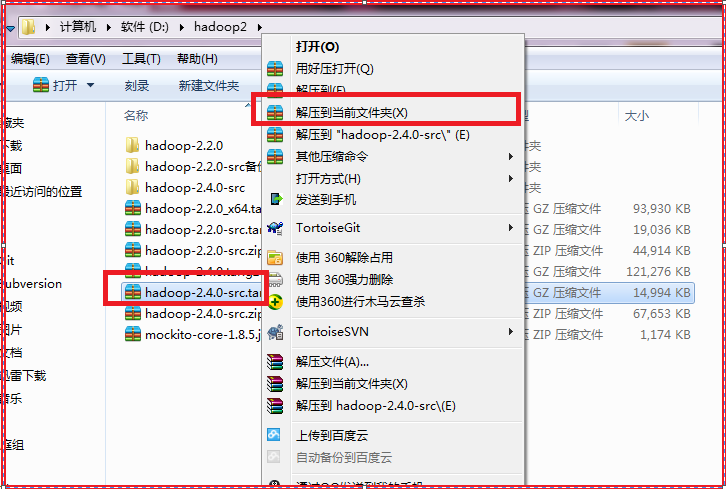

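1. Unpack the archive

I ran into the errors below while unpacking. You can ignore them and carry on: every file that failed is a compiled .class file under a target directory whose full path exceeds the Windows 260-character limit, so none of the .java sources are affected.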

1 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-applicationhistoryservice\target\classes\org\apache\hadoop\yarn\server\applicationhistoryservice\ApplicationHistoryClientService$ApplicationHSClientProtocolHandler.class:

The total length of the path and file name cannot exceed 260 characters

The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

2 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-applicationhistoryservice\target\classes\org\apache\hadoop\yarn\server\applicationhistoryservice\timeline\LeveldbTimelineStore$LockMap$CountingReentrantLock.class: The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

3 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-applicationhistoryservice\target\test-classes\org\apache\hadoop\yarn\server\applicationhistoryservice\webapp\TestAHSWebApp$MockApplicationHistoryManagerImpl.class: The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

4 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-resourcemanager\target\test-classes\org\apache\hadoop\yarn\server\resourcemanager\monitor\capacity\TestProportionalCapacityPreemptionPolicy$IsPreemptionRequestFor.class:

The total length of the path and file name cannot exceed 260 characters

The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

5 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-resourcemanager\target\test-classes\org\apache\hadoop\yarn\server\resourcemanager\recovery\TestFSRMStateStore$TestFSRMStateStoreTester$TestFileSystemRMStore.class: The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

6 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-resourcemanager\target\test-classes\org\apache\hadoop\yarn\server\resourcemanager\recovery\TestZKRMStateStore$TestZKRMStateStoreTester$TestZKRMStateStoreInternal.class:

The total length of the path and file name cannot exceed 260 characters

The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

7 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-resourcemanager\target\test-classes\org\apache\hadoop\yarn\server\resourcemanager\recovery\TestZKRMStateStoreZKClientConnections$TestZKClient$TestForwardingWatcher.class:

The total length of the path and file name cannot exceed 260 characters

The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

8 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-resourcemanager\target\test-classes\org\apache\hadoop\yarn\server\resourcemanager\recovery\TestZKRMStateStoreZKClientConnections$TestZKClient$TestZKRMStateStore.class:

The total length of the path and file name cannot exceed 260 characters

The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip

9 : Cannot create file: D:\hadoop2\hadoop-2.4.0-src\hadoop-yarn-project\hadoop-yarn\hadoop-yarn-server\hadoop-yarn-server-resourcemanager\target\test-classes\org\apache\hadoop\yarn\server\resourcemanager\rmapp\attempt\TestRMAppAttemptTransitions$TestApplicationAttemptEventDispatcher.class:

The total length of the path and file name cannot exceed 260 characters

The system cannot find the path specified. D:\hadoop2\hadoop-2.4.0-src.zip
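If you want to know in advance which archive entries will hit the limit for a given destination folder, a small check like the one below can list them. It is a sketch under a few assumptions: the archive is a zip (the errors above come from hadoop-2.4.0-src.zip), Java 11+ is available, and the class and method names (PathLengthCheck, overLimit) are made up for illustration. Unpacking into a shorter folder, such as D:\h, shortens every path and can reduce how many entries fail.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// Hypothetical helper: reports archive entries whose unpacked Windows path would be too long.
class PathLengthCheck {
    // MAX_PATH is 260 and counts the terminating NUL, so a usable path has at most 259 characters.
    static final int MAX_PATH = 260;

    // Returns the entries whose full path under destRoot would reach `limit` characters.
    static List<String> overLimit(String destRoot, List<String> entryNames, int limit) {
        String root = destRoot.endsWith("\\") ? destRoot : destRoot + "\\";
        List<String> tooLong = new ArrayList<>();
        for (String entry : entryNames) {
            // Zip entries use '/', Windows paths use '\'.
            String full = root + entry.replace('/', '\\');
            if (full.length() >= limit) {
                tooLong.add(entry);
            }
        }
        return tooLong;
    }

    // Usage: java PathLengthCheck D:\hadoop2\hadoop-2.4.0-src.zip D:\hadoop2
    public static void main(String[] args) throws IOException {
        List<String> names = new ArrayList<>();
        try (ZipFile zip = new ZipFile(args[0])) {
            zip.stream().map(ZipEntry::getName).forEach(names::add);
        }
        for (String entry : overLimit(args[1], names, MAX_PATH)) {
            System.out.println(entry);
        }
    }
}
```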

2. Get the source code with Maven

Note that before using Maven you need to install the JDK and protoc. If you haven't installed them yet, see: How to install Maven and protoc on Win7
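Before running Maven it is worth confirming that protoc is on the PATH and reports the version the build expects. As far as I know, Hadoop 2.x (including 2.4) builds against Protocol Buffers 2.5.0, but treat that as an assumption and check BUILDING.txt in your source tree. The sketch below runs `protoc --version` and compares the reported version; the class name ProtocCheck and the EXPECTED constant are illustrative.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.stream.Collectors;

// Hypothetical pre-build check for the protoc prerequisite.
class ProtocCheck {
    // Assumed required version; confirm against BUILDING.txt in hadoop-2.4.0-src.
    static final String EXPECTED = "2.5.0";

    // Extracts "2.5.0" from output such as "libprotoc 2.5.0".
    static String parseVersion(String output) {
        String s = output.trim();
        int space = s.lastIndexOf(' ');
        return space < 0 ? s : s.substring(space + 1);
    }

    // Throws IOException if protoc cannot be started, e.g. because it is not on the PATH.
    public static void main(String[] args) throws IOException, InterruptedException {
        Process p = new ProcessBuilder("protoc", "--version").redirectErrorStream(true).start();
        String output;
        try (BufferedReader r = new BufferedReader(
                new InputStreamReader(p.getInputStream(), StandardCharsets.UTF_8))) {
            output = r.lines().collect(Collectors.joining("\n"));
        }
        p.waitFor();
        String version = parseVersion(output);
        System.out.println("protoc " + version
                + (EXPECTED.equals(version) ? " OK" : " (expected " + EXPECTED + ")"));
    }
}
```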

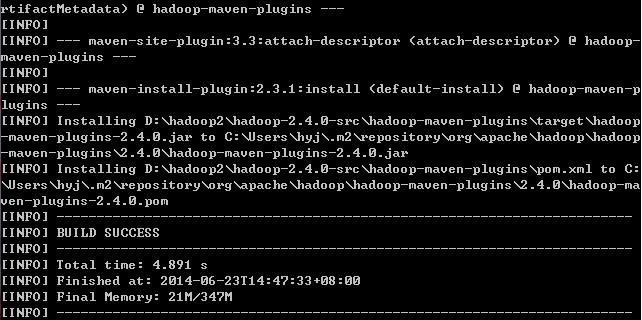

(1) Go into hadoop-2.4.0-src\hadoop-maven-plugins and run mvn install

- D:\hadoop2\hadoop-2.4.0-src\hadoop-maven-plugins>mvn install

The output is as follows:

- [INFO] Scanning for projects...

- [WARNING]

- [WARNING] Some problems were encountered while building the effective model for org.apache.hadoop:hadoop-maven-plugins:maven-plugin:2.4.0

- [WARNING] 'build.plugins.plugin.(groupId:artifactId)' must be unique but found duplicate declaration of plugin org.apache.maven.plugins:maven-enforcer-plugin @ org.apache.hadoop:hadoop-project:2.4.0, D:\hadoop2\hadoop-2.4.0-src\hadoop-project\pom.xml, line 1015, column 15

- [WARNING]

- [WARNING] It is highly recommended to fix these problems because they threaten the stability of your build.

- [WARNING]

- [WARNING] For this reason, future Maven versions might no longer support building such malformed projects.

- [WARNING]

- [INFO]

- [INFO] Using the builder org.apache.maven.lifecycle.internal.builder.singlethreaded.SingleThreadedBuilder with a thread count of 1

- [INFO]

- [INFO] ------------------------------------------------------------------------

- [INFO] Building Apache Hadoop Maven Plugins 2.4.0

- [INFO] ------------------------------------------------------------------------

- [INFO]

- [INFO] --- maven-antrun-plugin:1.7:run (create-testdirs) @ hadoop-maven-plugins ---

- [INFO] Executing tasks

- main:

- [INFO] Executed tasks

- [INFO]

- [INFO] --- maven-plugin-plugin:3.0:descriptor (default-descriptor) @ hadoop-maven-plugins ---

- [INFO] Using 'UTF-8' encoding to read mojo metadata.

- [INFO] Applying mojo extractor for language: java-annotations

- [INFO] Mojo extractor for language: java-annotations found 2 mojo descriptors.

- [INFO] Applying mojo extractor for language: java

- [INFO] Mojo extractor for language: java found 0 mojo descriptors.

- [INFO] Applying mojo extractor for language: bsh

- [INFO] Mojo extractor for language: bsh found 0 mojo descriptors.

- [INFO]

- [INFO] --- maven-resources-plugin:2.2:resources (default-resources) @ hadoop-maven-plugins ---

- [INFO] Using default encoding to copy filtered resources.

- [INFO]

- [INFO] --- maven-compiler-plugin:2.5.1:compile (default-compile) @ hadoop-maven-plugins ---

- [INFO] Nothing to compile - all classes are up to date

- [INFO]

- [INFO] --- maven-plugin-plugin:3.0:descriptor (mojo-descriptor) @ hadoop-maven-plugins ---

- [INFO] Using 'UTF-8' encoding to read mojo metadata.

- [INFO] Applying mojo extractor for language: java-annotations

- [INFO] Mojo extractor for language: java-annotations found 2 mojo descriptors.

- [INFO] Applying mojo extractor for language: java

- [INFO] Mojo extractor for language: java found 0 mojo descriptors.

- [INFO] Applying mojo extractor for language: bsh

- [INFO] Mojo extractor for language: bsh found 0 mojo descriptors.

- [INFO]

- [INFO] --- maven-resources-plugin:2.2:testResources (default-testResources) @ hadoop-maven-plugins ---

- [INFO] Using default encoding to copy filtered resources.

- [INFO]

- [INFO] --- maven-compiler-plugin:2.5.1:testCompile (default-testCompile) @ hadoop-maven-plugins ---

- [INFO] No sources to compile

- [INFO]

- [INFO] --- maven-surefire-plugin:2.16:test (default-test) @ hadoop-maven-plugins ---

- [INFO] No tests to run.

- [INFO]

- [INFO] --- maven-jar-plugin:2.3.1:jar (default-jar) @ hadoop-maven-plugins ---

- [INFO] Building jar: D:\hadoop2\hadoop-2.4.0-src\hadoop-maven-plugins\target\hadoop-maven-plugins-2.4.0.jar

- [INFO]

- [INFO] --- maven-plugin-plugin:3.0:addPluginArtifactMetadata (default-addPluginArtifactMetadata) @ hadoop-maven-plugins ---

- [INFO]

- [INFO] --- maven-site-plugin:3.3:attach-descriptor (attach-descriptor) @ hadoop-maven-plugins ---

- [INFO]

- [INFO] --- maven-install-plugin:2.3.1:install (default-install) @ hadoop-maven-plugins ---

- [INFO] Installing D:\hadoop2\hadoop-2.4.0-src\hadoop-maven-plugins\target\hadoop-maven-plugins-2.4.0.jar to C:\Users\hyj\.m2\repository\org\apache\hadoop\hadoop-maven-plugins\2.4.0\hadoop-maven-plugins-2.4.0.jar

- [INFO] Installing D:\hadoop2\hadoop-2.4.0-src\hadoop-maven-plugins\pom.xml to C:\Users\hyj\.m2\repository\org\apache\hadoop\hadoop-maven-plugins\2.4.0\hadoop-maven-plugins-2.4.0.pom

- [INFO] ------------------------------------------------------------------------

- [INFO] BUILD SUCCESS

- [INFO] ------------------------------------------------------------------------

- [INFO] Total time: 4.891 s

- [INFO] Finished at: 2014-06-23T14:47:33+08:00

- [INFO] Final Memory: 21M/347M

- [INFO] ------------------------------------------------------------------------

Partial screenshots:
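The last "Installing" lines show what mvn install actually did: it copied the plugin jar and its pom into the local Maven repository, at a path built from the groupId, artifactId and version. That is part of the answer to question 3: Maven builds each module and shares the results through this repository, which is presumably why hadoop-maven-plugins is installed first, so the full build can resolve it in the next step. The sketch below computes that path and checks whether the jar is there. It assumes the default repository location ~/.m2/repository (a localRepository setting in settings.xml can move it); the class name is illustrative.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper mapping Maven coordinates to a local-repository path.
class LocalRepoPath {
    // Standard layout: groupId dots become directories, then artifactId/version/artifactId-version.ext.
    static String artifactPath(String groupId, String artifactId, String version, String ext) {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version + "/"
                + artifactId + "-" + version + "." + ext;
    }

    public static void main(String[] args) {
        // Assumes the default local repository under the user's home directory.
        Path repo = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        Path jar = repo.resolve(artifactPath("org.apache.hadoop", "hadoop-maven-plugins", "2.4.0", "jar"));
        System.out.println(jar + (Files.exists(jar) ? " exists" : " is missing"));
    }
}
```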

(2) Run

- mvn eclipse:eclipse -DskipTests

Note that this time you run it from hadoop_home, the top-level source directory, which for me is D:\hadoop2\hadoop-2.4.0-src.
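The two Maven steps (install hadoop-maven-plugins first, then run eclipse:eclipse from the top-level directory) can be scripted so the working directories can't be mixed up. A minimal sketch, assuming mvn is on the PATH. On Windows it is launched through cmd /c because mvn is a batch script there. The class name and argument handling are illustrative.

```java
import java.io.IOException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical driver for the two Maven steps described above.
class PrepareEclipseProjects {
    // Builds the command line; on Windows, mvn is a .bat/.cmd script, so it goes through cmd /c.
    static List<String> mvnCommand(boolean windows, String... mvnArgs) {
        List<String> cmd = new ArrayList<>();
        if (windows) {
            cmd.add("cmd");
            cmd.add("/c");
        }
        cmd.add("mvn");
        cmd.addAll(Arrays.asList(mvnArgs));
        return cmd;
    }

    // Runs a command in `dir`, streaming Maven's output to this console; fails on a non-zero exit code.
    static void run(Path dir, List<String> cmd) throws IOException, InterruptedException {
        int code = new ProcessBuilder(cmd).directory(dir.toFile()).inheritIO().start().waitFor();
        if (code != 0) {
            throw new IllegalStateException("Failed with exit code " + code + ": " + cmd);
        }
    }

    // Usage: java PrepareEclipseProjects D:\hadoop2\hadoop-2.4.0-src
    public static void main(String[] args) throws IOException, InterruptedException {
        boolean windows = System.getProperty("os.name").startsWith("Windows");
        Path srcRoot = Paths.get(args[0]);
        // Step (1): install the build's own Maven plugins into the local repository.
        run(srcRoot.resolve("hadoop-maven-plugins"), mvnCommand(windows, "install"));
        // Step (2): generate Eclipse project files, running from the top-level directory.
        run(srcRoot, mvnCommand(windows, "eclipse:eclipse", "-DskipTests"));
    }
}
```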

Part of the mvn eclipse:eclipse output:

- [INFO]

- [INFO] ------------------------------------------------------------------------

- [INFO] Reactor Summary:

- [INFO]

- [INFO] Apache Hadoop Main ................................ SUCCESS [ 0.684 s]

- [INFO] Apache Hadoop Project POM ......................... SUCCESS [ 0.720 s]

- [INFO] Apache Hadoop Annotations ......................... SUCCESS [ 0.276 s]

- [INFO] Apache Hadoop Project Dist POM .................... SUCCESS [ 0.179 s]

- [INFO] Apache Hadoop Assemblies .......................... SUCCESS [ 0.121 s]

- [INFO] Apache Hadoop Maven Plugins ....................... SUCCESS [ 1.680 s]

- [INFO] Apache Hadoop MiniKDC ............................. SUCCESS [ 1.802 s]

- [INFO] Apache Hadoop Auth ................................ SUCCESS [ 1.024 s]

- [INFO] Apache Hadoop Auth Examples ....................... SUCCESS [ 0.160 s]

- [INFO] Apache Hadoop Common .............................. SUCCESS [ 1.061 s]

- [INFO] Apache Hadoop NFS ................................. SUCCESS [ 0.489 s]

- [INFO] Apache Hadoop Common Project ...................... SUCCESS [ 0.056 s]

- [INFO] Apache Hadoop HDFS ................................ SUCCESS [ 2.770 s]

- [INFO] Apache Hadoop HttpFS .............................. SUCCESS [ 0.965 s]

- [INFO] Apache Hadoop HDFS BookKeeper Journal ............. SUCCESS [ 0.629 s]

- [INFO] Apache Hadoop HDFS-NFS ............................ SUCCESS [ 0.284 s]

- [INFO] Apache Hadoop HDFS Project ........................ SUCCESS [ 0.061 s]

- [INFO] hadoop-yarn ....................................... SUCCESS [ 0.052 s]

- [INFO] hadoop-yarn-api ................................... SUCCESS [ 0.842 s]

- [INFO] hadoop-yarn-common ................................ SUCCESS [ 0.322 s]

- [INFO] hadoop-yarn-server ................................ SUCCESS [ 0.065 s]

- [INFO] hadoop-yarn-server-common ......................... SUCCESS [ 0.972 s]

- [INFO] hadoop-yarn-server-nodemanager .................... SUCCESS [ 0.580 s]

- [INFO] hadoop-yarn-server-web-proxy ...................... SUCCESS [ 0.379 s]

- [INFO] hadoop-yarn-server-applicationhistoryservice ...... SUCCESS [ 0.281 s]

- [INFO] hadoop-yarn-server-resourcemanager ................ SUCCESS [ 0.378 s]

- [INFO] hadoop-yarn-server-tests .......................... SUCCESS [ 0.534 s]

- [INFO] hadoop-yarn-client ................................ SUCCESS [ 0.307 s]

- [INFO] hadoop-yarn-applications .......................... SUCCESS [ 0.050 s]

- [INFO] hadoop-yarn-applications-distributedshell ......... SUCCESS [ 0.202 s]

- [INFO] hadoop-yarn-applications-unmanaged-am-launcher .... SUCCESS [ 0.194 s]

- [INFO] hadoop-yarn-site .................................. SUCCESS [ 0.057 s]

- [INFO] hadoop-yarn-project ............................... SUCCESS [ 0.066 s]

- [INFO] hadoop-mapreduce-client ........................... SUCCESS [ 0.091 s]

- [INFO] hadoop-mapreduce-client-core ...................... SUCCESS [ 1.321 s]

- [INFO] hadoop-mapreduce-client-common .................... SUCCESS [ 0.786 s]

- [INFO] hadoop-mapreduce-client-shuffle ................... SUCCESS [ 0.456 s]

- [INFO] hadoop-mapreduce-client-app ....................... SUCCESS [ 0.508 s]

- [INFO] hadoop-mapreduce-client-hs ........................ SUCCESS [ 0.834 s]

- [INFO] hadoop-mapreduce-client-jobclient ................. SUCCESS [ 0.541 s]

- [INFO] hadoop-mapreduce-client-hs-plugins ................ SUCCESS [ 0.284 s]

- [INFO] Apache Hadoop MapReduce Examples .................. SUCCESS [ 0.851 s]

- [INFO] hadoop-mapreduce .................................. SUCCESS [ 0.099 s]

- [INFO] Apache Hadoop MapReduce Streaming ................. SUCCESS [ 0.742 s]

- [INFO] Apache Hadoop Distributed Copy .................... SUCCESS [ 0.335 s]

- [INFO] Apache Hadoop Archives ............................ SUCCESS [ 0.397 s]

- [INFO] Apache Hadoop Rumen ............................... SUCCESS [ 0.371 s]

- [INFO] Apache Hadoop Gridmix ............................. SUCCESS [ 0.230 s]

- [INFO] Apache Hadoop Data Join ........................... SUCCESS [ 0.184 s]

- [INFO] Apache Hadoop Extras .............................. SUCCESS [ 0.217 s]

- [INFO] Apache Hadoop Pipes ............................... SUCCESS [ 0.048 s]

- [INFO] Apache Hadoop OpenStack support ................... SUCCESS [ 0.244 s]

- [INFO] Apache Hadoop Client .............................. SUCCESS [ 0.590 s]

- [INFO] Apache Hadoop Mini-Cluster ........................ SUCCESS [ 0.230 s]

- [INFO] Apache Hadoop Scheduler Load Simulator ............ SUCCESS [ 0.650 s]

- [INFO] Apache Hadoop Tools Dist .......................... SUCCESS [ 0.334 s]

- [INFO] Apache Hadoop Tools ............................... SUCCESS [ 0.042 s]

- [INFO] Apache Hadoop Distribution ........................ SUCCESS [ 0.144 s]

- [INFO] ------------------------------------------------------------------------

- [INFO] BUILD SUCCESS

- [INFO] ------------------------------------------------------------------------

- [INFO] Total time: 31.234 s

- [INFO] Finished at: 2014-06-23T14:55:08+08:00

- [INFO] Final Memory: 84M/759M

- [INFO] ------------------------------------------------------------------------

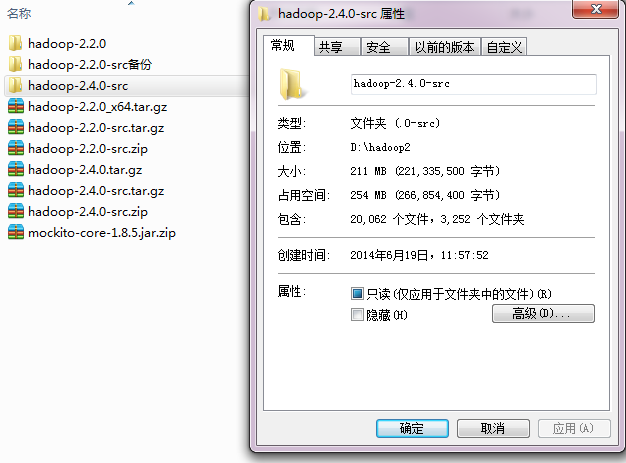

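At this point the source tree is ready. Maven has resolved the dependencies and generated Eclipse project files (.project and .classpath) for the modules, and you will notice that the folder has grown noticeably in size.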
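To confirm the eclipse:eclipse run covered every module, you can look for module directories (those containing a pom.xml) that still lack .project or .classpath. This sketch assumes that pom-packaged aggregator modules such as hadoop-project are skipped by maven-eclipse-plugin, which is how it behaves as far as I know. The packaging test is a crude string match rather than real XML parsing, and the class name is illustrative.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical check that every Maven module got its Eclipse project files.
class EclipseFilesCheck {
    // True for a non-aggregator module directory that lacks .project or .classpath.
    static boolean missingEclipseFiles(Set<String> names, String pomText) {
        if (!names.contains("pom.xml")) {
            return false;
        }
        // Assumption: modules with <packaging>pom</packaging> are skipped by eclipse:eclipse.
        if (pomText.replaceAll("\\s", "").contains("<packaging>pom</packaging>")) {
            return false;
        }
        return !(names.contains(".project") && names.contains(".classpath"));
    }

    static List<Path> findIncompleteModules(Path root) throws IOException {
        List<Path> dirs;
        try (Stream<Path> s = Files.walk(root)) {
            dirs = s.filter(Files::isDirectory).collect(Collectors.toList());
        }
        List<Path> incomplete = new ArrayList<>();
        for (Path dir : dirs) {
            Path pom = dir.resolve("pom.xml");
            if (!Files.isRegularFile(pom)) {
                continue;
            }
            Set<String> names = new HashSet<>();
            try (Stream<Path> s = Files.list(dir)) {
                s.forEach(p -> names.add(p.getFileName().toString()));
            }
            // ISO-8859-1 decodes any byte sequence; the markers searched for are plain ASCII.
            String pomText = new String(Files.readAllBytes(pom), StandardCharsets.ISO_8859_1);
            if (missingEclipseFiles(names, pomText)) {
                incomplete.add(dir);
            }
        }
        return incomplete;
    }

    // Usage: java EclipseFilesCheck D:\hadoop2\hadoop-2.4.0-src
    public static void main(String[] args) throws IOException {
        List<Path> incomplete = findIncompleteModules(Paths.get(args[0]));
        incomplete.forEach(d -> System.out.println("missing Eclipse files: " + d));
        System.out.println(incomplete.size() + " module(s) incomplete");
    }
}
```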

3. Attach the source in Eclipse

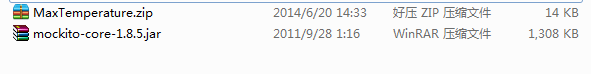

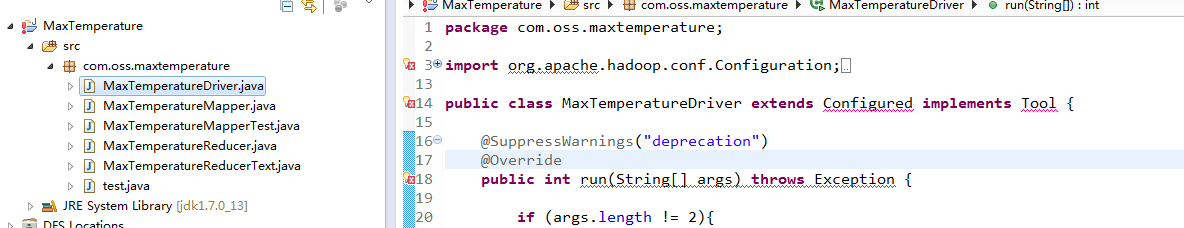

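Take the following program as an example:

Attachment: hadoop2.2mapreduce例子.rar (the Hadoop 2.2 MapReduce example)

As shown in the figure, the archive bundles two files: MaxTemperature.zip is the MapReduce example, and mockito-core-1.8.5.jar is a library that the example depends on.

(Note: the MapReduce example targets Hadoop 2.2. That doesn't affect attaching the source; the point here is to show you how to do it.)

Unpack the archive and import the project into Eclipse.

(If you're not familiar with importing projects, see: Importing an Eclipse project, from scratch)

After importing, you'll see lots of red underlines. They are all caused by missing library references, so next we fix these compile errors.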

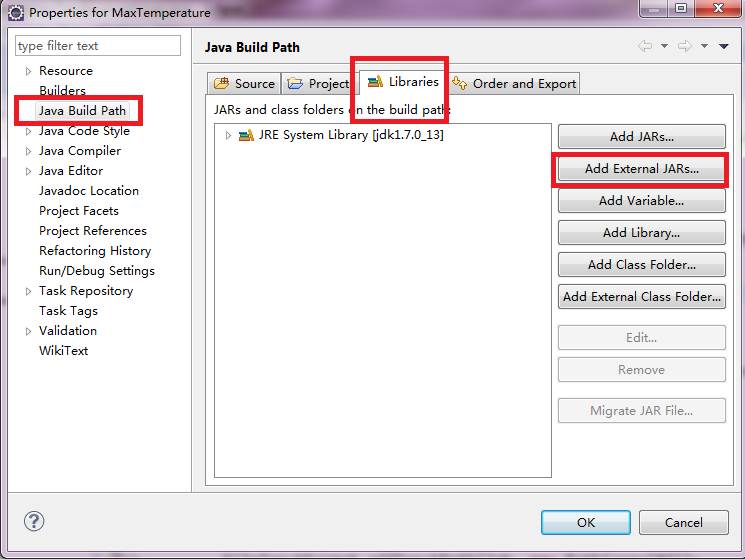

I. Add the jar files

(1) Add mockito-core-1.8.5.jar

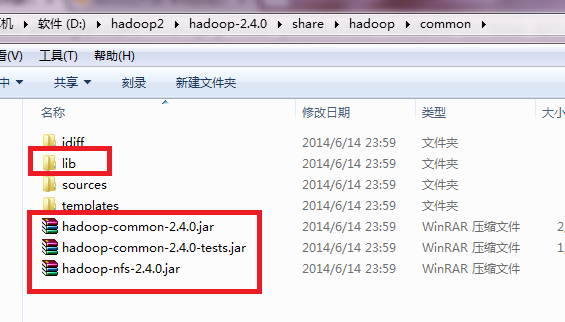

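(2) Add the jar files from the compiled Hadoop 2.4 distribution. They are located here:

In the share\hadoop folder under hadoop_home; for me that is D:\hadoop2\hadoop-2.4.0\share\hadoop

Add the jars you find there to the build path: for example, the jars inside each lib folder as well as the jars next to it.

If you're not sure how to add jars to the build path, see: A summary of Hadoop development approaches, with step-by-step guidance.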

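(Note that the jars added here come from the compiled (binary) package, not the source package. The compiled package, hadoop--642.4.0.tar.gz, can be downloaded from:

Link: http://pan.baidu.com/s/1c0vPjG0 Password: xj6l)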

For more downloads, see: A collection of jar and installer downloads for the Hadoop family, Storm, Spark, Linux, Flume and more
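Adding jars folder by folder through the Eclipse dialog gets tedious, because share\hadoop has one subfolder per component (common, hdfs, mapreduce, yarn, ...), most with their own lib folder. The sketch below walks that tree and prints one classpathentry line per jar, the form Eclipse uses in a project's .classpath file, so you can review the list or paste it in. Skipping *-sources.jar files (which hold .java files rather than classes) is my own assumption, not part of the original steps, and the class name is illustrative.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: lists binary-distribution jars as Eclipse .classpath entries.
class HadoopJars {
    // Class jars only; source jars are skipped (an assumption, see the text above).
    static boolean isBuildPathJar(String fileName) {
        return fileName.endsWith(".jar") && !fileName.endsWith("-sources.jar");
    }

    // One library entry for .classpath, written with forward slashes.
    static String classpathEntry(String jarPath) {
        return "<classpathentry kind=\"lib\" path=\"" + jarPath.replace('\\', '/') + "\"/>";
    }

    // Usage: java HadoopJars D:\hadoop2\hadoop-2.4.0\share\hadoop
    public static void main(String[] args) throws IOException {
        List<Path> jars;
        try (Stream<Path> s = Files.walk(Paths.get(args[0]))) {
            jars = s.filter(Files::isRegularFile)
                    .filter(p -> isBuildPathJar(p.getFileName().toString()))
                    .sorted()
                    .collect(Collectors.toList());
        }
        for (Path jar : jars) {
            System.out.println(classpathEntry(jar.toAbsolutePath().toString()));
        }
    }
}
```

The same third-party jar can appear in several lib folders, so you may want to de-duplicate the list before pasting it.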

二、关联源码

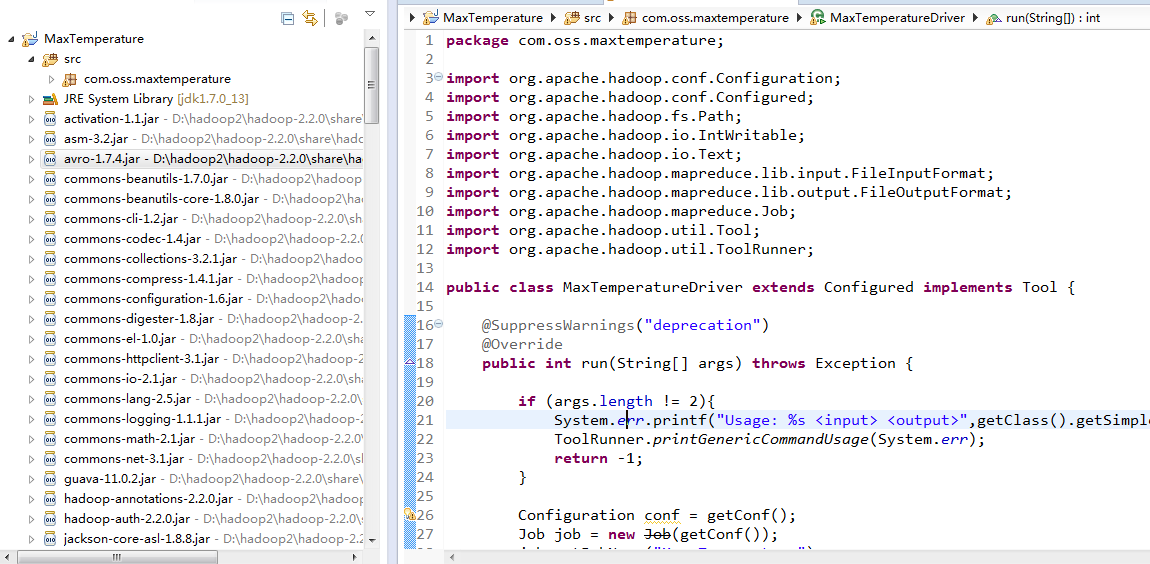

1.我们导入jar包之后,就没有错误了,如下图所示

<ignore_js_op>

2.找不到源码

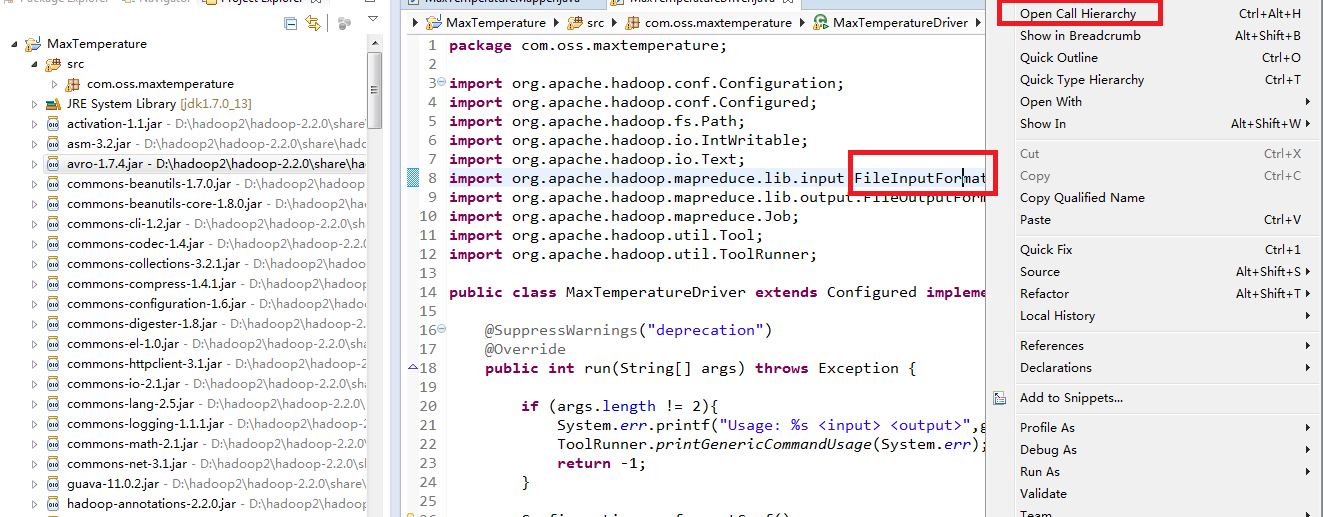

当我们想看一个类或则函数怎么实现的时候,通过Open Call Hierarchy,却找不到源文件。

<ignore_js_op>

<ignore_js_op>

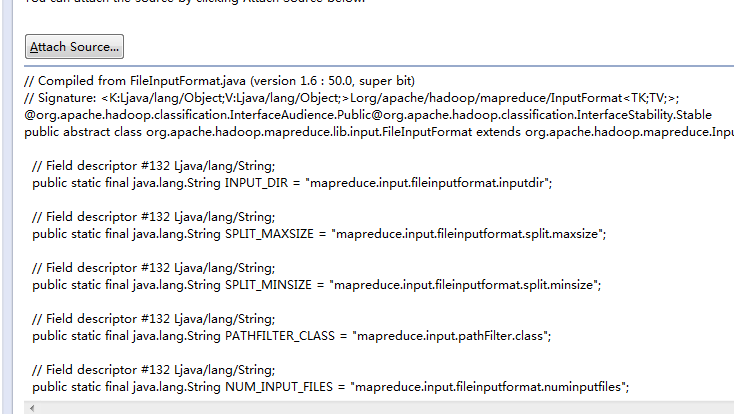

3.Attach Source

Fill in the three marked places in order. After selecting the archive, click OK, and the job is done.

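Note: hadoop-2.2.0-src.zip here is the source prepared with Maven above, compressed into an archive (for this walkthrough, the compressed hadoop-2.4.0-src folder). Make sure it is in zip format.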
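If you'd rather build the zip with a program than by hand, the sketch below packs the source tree into an archive, keeping each file's path relative to the source root. Two assumptions: it includes only .java files, which keeps the archive small and leaves out the long target paths seen during unpacking (zipping the whole folder, as done above, also works), and it relies on Eclipse locating the package roots (the src/main/java folders) inside the archive, as it did for the whole-folder zip. The class name is illustrative; write to a new file name so the downloaded hadoop-2.4.0-src.zip is not overwritten.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

// Hypothetical helper that packs the Maven-prepared source tree into a zip for Attach Source.
class SourceZipper {
    // Zip entry names always use '/', whatever the platform separator.
    static String entryName(Path root, Path file) {
        return root.relativize(file).toString().replace('\\', '/');
    }

    // Writes every .java file under root into zipFile; returns the number of files written.
    static int zipJavaSources(Path root, Path zipFile) throws IOException {
        List<Path> sources;
        try (Stream<Path> s = Files.walk(root)) {
            sources = s.filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".java"))
                    .sorted()
                    .collect(Collectors.toList());
        }
        try (ZipOutputStream zos = new ZipOutputStream(Files.newOutputStream(zipFile))) {
            for (Path file : sources) {
                zos.putNextEntry(new ZipEntry(entryName(root, file)));
                Files.copy(file, zos);
                zos.closeEntry();
            }
        }
        return sources.size();
    }

    // Usage: java SourceZipper D:\hadoop2\hadoop-2.4.0-src D:\hadoop2\hadoop-2.4.0-src-java.zip
    public static void main(String[] args) throws IOException {
        int n = zipJavaSources(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("Wrote " + n + " .java files to " + args[1]);
    }
}
```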

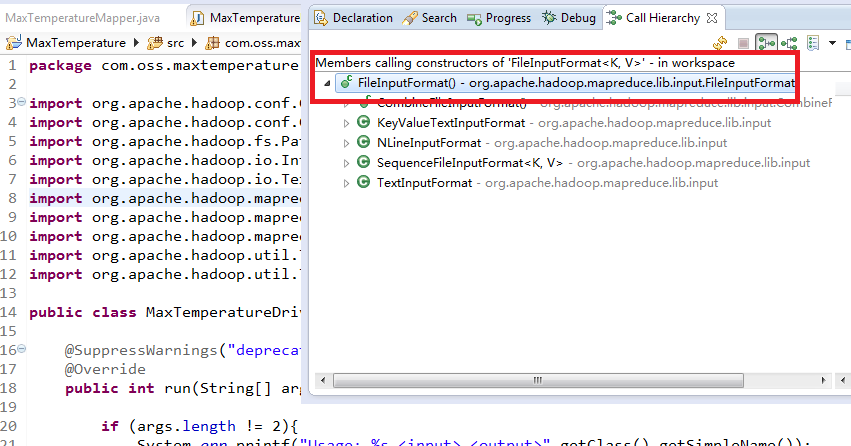

4. Verify by viewing the source after attaching it

Repeat the earlier step with Open Call Hierarchy, and you'll see the following:

Then double-click the main class in the figure above (the part in red), and you'll see:

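Question:

Careful readers may spot a question here: what we are looking at is a .class file, not a .java file. Could it differ from the .java source?

It is the same. Eclipse opens the class in its class-file editor, but the text it displays comes from the .java file in the attached source archive. If you're interested, you can verify this yourself.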
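One way to check: Eclipse finds the source for a class roughly by looking for the .java file whose path matches the class's package, and nested classes (the Outer$Inner names in the unpacking errors above) live in their outer class's file. The sketch below does the same lookup against the zip, so you can open that file and compare it with what Eclipse displays. It is a simplification that ignores the rare case of several top-level classes in one .java file; the class name is illustrative.

```java
import java.io.IOException;
import java.util.List;
import java.util.stream.Collectors;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// Hypothetical helper: finds the .java file in a source zip that holds a given class.
class SourceLookup {
    // Maps a binary class name to its source path, dropping any $Nested suffix.
    static String sourcePathFor(String binaryClassName) {
        int dollar = binaryClassName.indexOf('$');
        String outer = dollar < 0 ? binaryClassName : binaryClassName.substring(0, dollar);
        return outer.replace('.', '/') + ".java";
    }

    // Matches the path at the archive root or below any module's source folder.
    static List<String> findInZip(String zipPath, String binaryClassName) throws IOException {
        String rel = sourcePathFor(binaryClassName);
        try (ZipFile zip = new ZipFile(zipPath)) {
            return zip.stream()
                    .map(ZipEntry::getName)
                    .filter(name -> name.equals(rel) || name.endsWith("/" + rel))
                    .collect(Collectors.toList());
        }
    }

    // Usage: java SourceLookup D:\hadoop2\hadoop-2.4.0-src.zip org.apache.hadoop.mapreduce.Job
    public static void main(String[] args) throws IOException {
        findInZip(args[0], args[1]).forEach(System.out::println);
    }
}
```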