import nltk

from nltk.corpus import stopwords

from nltk.stem import WordNetLemmatizer

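def preprocessing(text):
    # Split the text into sentences, then into word tokens
    tokens=[word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]
    # Lowercase first: NLTK's stop-word list is lowercase, so "The" would otherwise survive
    tokens=[token.lower() for token in tokens]
    stops=set(stopwords.words('english'))
    # Drop stop words and tokens shorter than 3 characters (len(token), not len(tokens))
    tokens=[token for token in tokens if token not in stops and len(token)>=3]
    # Reduce each token to its WordNet lemma
    lmtzr=WordNetLemmatizer()
    tokens=[lmtzr.lemmatize(token) for token in tokens]
    preprocessed_text=' '.join(tokens)
    return preprocessed_text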
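The order of the filtering steps matters, because NLTK's English stop-word list contains only lowercase words. The sketch below illustrates this with plain Python and no NLTK dependency. `TOY_STOPS`, `filter_tokens` and `filter_then_lower` are hypothetical names introduced for illustration only, and the stop-word set is a tiny stand-in for the real list.

```python
# Hypothetical, tiny stand-in for NLTK's English stop-word list (all lowercase).
TOY_STOPS = {"the", "and", "you", "for"}

def filter_tokens(tokens, stops=TOY_STOPS, min_len=3):
    """Lowercase first, then drop stop words and tokens shorter than min_len."""
    lowered = [t.lower() for t in tokens]
    return [t for t in lowered if t not in stops and len(t) >= min_len]

def filter_then_lower(tokens, stops=TOY_STOPS, min_len=3):
    """Same filters, but lowercasing last: capitalized stop words slip through."""
    kept = [t for t in tokens if t not in stops and len(t) >= min_len]
    return [t.lower() for t in kept]

sample = ["The", "prize", "is", "waiting", "for", "You"]
print(filter_tokens(sample))      # ['prize', 'waiting']
print(filter_then_lower(sample))  # ['the', 'prize', 'waiting', 'you']
```

Note that the length check is applied to each token, not to the token list as a whole.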

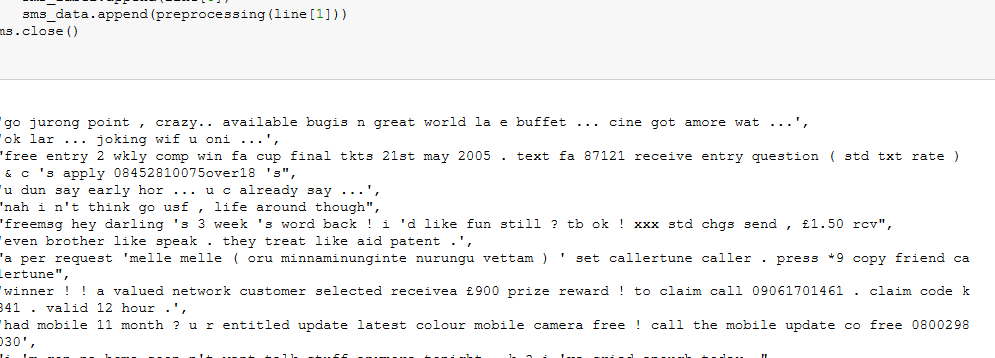

import csv

file_path=r'F:duymaisms.txt'

sms=open(file_path,'r',encoding='utf-8')

sms_data=[]

sms_label=[]

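# Assumption: label and message are tab-separated; with a space delimiter, line[1] would hold only the first word of each message
csv_reader=csv.reader(sms,delimiter='\t')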

for line in csv_reader:
    # Column 0 is the label, column 1 is the message text
    sms_label.append(line[0])
    sms_data.append(preprocessing(line[1]))

sms.close()

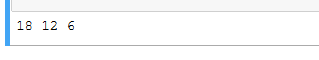

from sklearn.model_selection import train_test_split

# Hold out 30% of the messages for testing, keeping the label ratio the same in both splits
x_train,x_test,y_train,y_test=train_test_split(sms_data,sms_label,test_size=0.3,random_state=0,stratify=sms_label)

print(len(sms_data),len(x_train),len(x_test))

# Vectorize the text with TF-IDF
from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer=TfidfVectorizer(min_df=2,ngram_range=(1,2),stop_words='english',strip_accents='unicode')  # norm='l2' is the default

# Fit the vocabulary on the training set only, then reuse it for the test set
X_train=vectorizer.fit_transform(x_train)

X_test=vectorizer.transform(x_test)

# Naive Bayes classifier
from sklearn.naive_bayes import MultinomialNB

clf=MultinomialNB().fit(X_train,y_train)

y_nb_pred=clf.predict(X_test)

# Display the classification results

from sklearn.metrics import confusion_matrix

from sklearn.metrics import classification_report

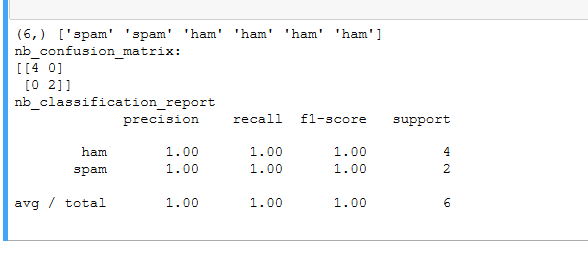

print(y_nb_pred.shape,y_nb_pred)

print('nb_confusion_matrix:')

cm=confusion_matrix(y_test,y_nb_pred)

print(cm)

print('nb_classification_report')

cr=classification_report(y_test,y_nb_pred)

print(cr)
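To make the link between the confusion matrix and the report explicit, here is a minimal sketch in plain Python that derives precision, recall and F1 for one class from a 2x2 matrix. `binary_metrics` is a hypothetical helper and `toy_cm` holds made-up counts, not results from the SMS data. The layout (rows are true classes, columns are predicted classes) matches what `confusion_matrix` returns.

```python
def binary_metrics(cm):
    """Return (precision, recall, f1) for the class in row/column 1 of a 2x2 matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    tn, fp = cm[0]
    fn, tp = cm[1]
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

toy_cm = [[90, 10], [5, 45]]  # made-up counts for illustration
precision, recall, f1 = binary_metrics(toy_cm)
print('precision=%.3f recall=%.3f f1=%.3f' % (precision, recall, f1))
```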

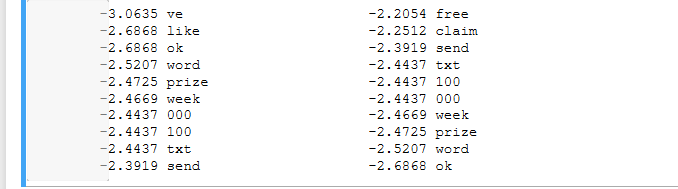

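# Recent scikit-learn releases replace get_feature_names() with get_feature_names_out()
feature_names=vectorizer.get_feature_names_out()

# MultinomialNB no longer exposes coef_ / intercept_ in recent releases;
# for two classes they corresponded to feature_log_prob_[1:] and class_log_prior_[1:]
coefs=clf.feature_log_prob_[1:]

intercept=clf.class_log_prior_[1:]

# Sort (log probability, feature name) pairs in ascending order
coefs_with_fns=sorted(zip(coefs[0],feature_names))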

n=10

top=zip(coefs_with_fns[:n],coefs_with_fns[:-(n+1):-1])

for (coef_1,fn_1),(coef_2,fn_2) in top:
    print(' %.4f %-15s %.4f %-15s'%(coef_1,fn_1,coef_2,fn_2))
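The two slices used to build `top` can be hard to read at a glance. The sketch below shows what they select on a small sorted list: `[:n]` takes the `n` lowest-scoring pairs, and `[:-(n+1):-1]` walks backwards from the end to take the `n` highest-scoring pairs, best first. The `pairs` values are hypothetical and only stand in for `coefs_with_fns`.

```python
# Hypothetical (score, feature) pairs standing in for coefs_with_fns.
pairs = sorted([(-9.1, 'meeting'), (-3.2, 'free'), (-7.5, 'lunch'),
                (-4.0, 'prize'), (-8.3, 'home')])
n = 2

lowest = pairs[:n]             # the n smallest scores, ascending
highest = pairs[:-(n + 1):-1]  # the n largest scores, descending

print(lowest)   # [(-9.1, 'meeting'), (-8.3, 'home')]
print(highest)  # [(-3.2, 'free'), (-4.0, 'prize')]
```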