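Ever since I started learning web scraping, I've wanted to crawl some data from Bilibili (B站). Recently I noticed a few uploaders (up主) gaining followers very quickly, which made me curious about uploaders' follower counts, hence the title~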

At first, I naively assumed I could scrape the numbers straight from an uploader's personal space page:

https://space.bilibili.com/137952

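But things didn't go as planned. A request to this address doesn't return the page data we want; what comes back looks like an error page asking the user to switch browsers.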

We can use Postman to simulate this request.

This is the page that comes back:

    <!DOCTYPE html>
    <html>
    <head>
      <meta name=spm_prefix content=333.999>
      <meta charset=UTF-8>
      <meta http-equiv=X-UA-Compatible content="IE=edge,chrome=1">
      <meta name=renderer content=webkit|ie-comp|ie-stand>
      <link rel=stylesheet type=text/css href=//at.alicdn.com/t/font_438759_ivzgkauwm755qaor.css>
      <script type=text/javascript>
        var ua = window.navigator.userAgent
        var agents = ["Android","iPhone","SymbianOS","Windows Phone","iPod"]
        var pathname = /\d+/.exec(window.location.pathname)
        var getCookie = function(sKey) {
          return decodeURIComponent(
            document.cookie.replace(
              new RegExp('(?:(?:^|.*;)\\s*' + encodeURIComponent(sKey).replace(/[-.+*]/g, '\\$&') + '\\s*\\=\\s*([^;]*).*$)|^.*$'),
              '$1'
            )
          ) || null
        }
        var DedeUserID = getCookie('DedeUserID')
        var mid = pathname ? +pathname[0] : DedeUserID === null ? 0 : +DedeUserID
        if (mid < 1) {
          window.location.href = 'https://passport.bilibili.com/login?gourl=https://space.bilibili.com'
        } else {
          window._bili_space_mid = mid
          window._bili_space_mymid = DedeUserID === null ? 0 : +DedeUserID
          var prefix = /^\/v/.test(pathname) ? '/v' : ''
          window.history.replaceState({}, '', prefix + '/' + mid + '/' + (pathname ? window.location.hash : '#/'))
          for (var i = 0; i < agents.length; i++) {
            if (ua.indexOf(agents[i]) > -1) {
              window.location.href = 'https://m.bilibili.com/space/' + mid
              break
            }
          }
        }
      </script>
      <link href=//s1.hdslb.com/bfs/static/jinkela/space/css/space.25.bbaa2f1b5482f89caf23662936077cf2ae130dd9.css rel=stylesheet>
      <link href=//s1.hdslb.com/bfs/static/jinkela/space/css/space.26.bbaa2f1b5482f89caf23662936077cf2ae130dd9.css rel=stylesheet>
    </head>
    <body>
      <div class="z-top-container has-top-search"></div>
      <!--[if lt IE 9]>
      <div id="browser-version-tip">
        <div class="wrapper">
          抱歉,您正在使用不支持的浏览器访问个人空间。推荐您
          <a href="//www.google.cn/chrome/browser/desktop/index.html">安装 Chrome 浏览器</a>以获得更好的体验 ヾ(o◕∀◕)ノ
        </div>
      </div>
      <![endif]-->
      <div id=space-app></div>
      <script type=text/javascript>
        //日志上报
        window.spaceReport = {}
        window.reportConfig = {
          sample: 1,
          scrollTracker: true,
          msgObjects: 'spaceReport'
        }
        var reportScript = document.createElement('script')
        reportScript.src = '//s1.hdslb.com/bfs/seed/log/report/log-reporter.js'
        document.getElementsByTagName('body')[0].appendChild(reportScript)
        reportScript.onerror = function() {
          console.warn('log-reporter.js加载失败,放弃上报')
          var noop = function() {}
          window.reportObserver = {
            sendPV: noop,
            forceCommit: noop
          }
        }
      </script>
      <script src=//static.hdslb.com/js/jquery.min.js></script>
      <script src=//s1.hdslb.com/bfs/seed/jinkela/header/header.js></script>
      <script type=text/javascript src=//s1.hdslb.com/bfs/static/jinkela/space/manifest.bbaa2f1b5482f89caf23662936077cf2ae130dd9.js></script>
      <script type=text/javascript src=//s1.hdslb.com/bfs/static/jinkela/space/vendor.bbaa2f1b5482f89caf23662936077cf2ae130dd9.js></script>
      <script type=text/javascript src=//s1.hdslb.com/bfs/static/jinkela/space/space.bbaa2f1b5482f89caf23662936077cf2ae130dd9.js></script>
    </body>
    </html>

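What's going on here? Look at the markup: the "unsupported browser" notice sits inside an `<!--[if lt IE 9]>` conditional comment, so only old versions of IE ever display it. Everything else is an empty shell: `<div id=space-app></div>` is filled in by the JavaScript bundles loaded at the bottom of the page. A plain HTTP client like Postman doesn't run that JavaScript, so the follower count never shows up in the response.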

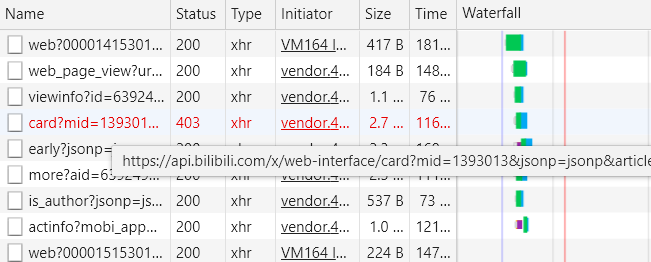

Let's try a different approach. Open any uploader's article column (专栏) page, press F12, and you can see all the requests the page sends.

This one is the request we need:

https://api.bilibili.com/x/web-interface/card?mid=1393013&jsonp=jsonp&article=true

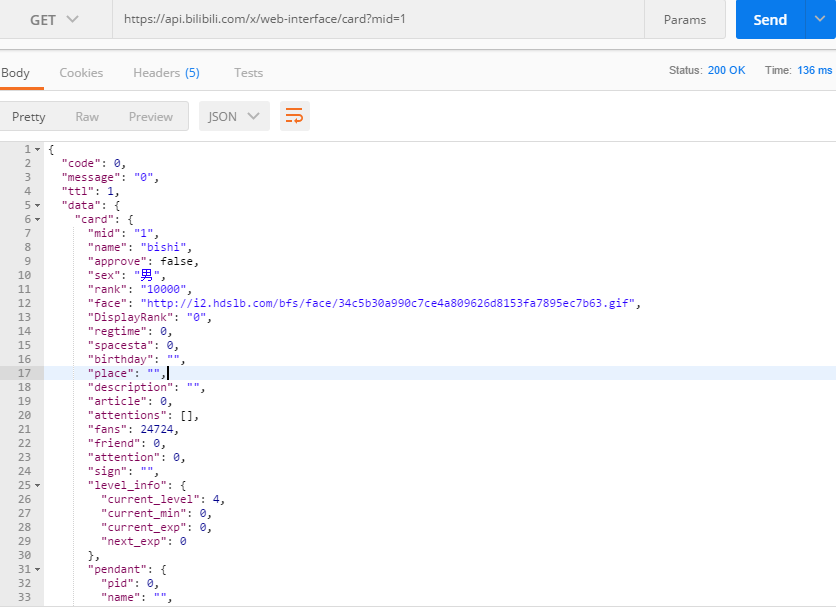

Let's test it with Postman again.

As the screenshot shows, the returned JSON string contains exactly the data we want.
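To make the response shape concrete, here is a minimal Go sketch that decodes a card response into only the fields the crawlers below read (`mid`, `name`, `face`, `fans`, `attention`, `sign`, `level_info.current_level`). It assumes `mid` is a JSON string and `fans`/`attention` are JSON numbers, as the article's own Go struct declares; the sample body is hand-written with made-up values, not a captured response, and `parseCard` is a hypothetical helper name.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// cardResponse models only the parts of the card API response read in this
// article (an assumption based on the fields the Java and Go crawlers use).
type cardResponse struct {
	Code int `json:"code"`
	Data struct {
		Card struct {
			Mid       string `json:"mid"`
			Name      string `json:"name"`
			Face      string `json:"face"`
			Fans      int64  `json:"fans"`
			Attention int64  `json:"attention"`
			Sign      string `json:"sign"`
			LevelInfo struct {
				CurrentLevel int `json:"current_level"`
			} `json:"level_info"`
		} `json:"card"`
	} `json:"data"`
}

// parseCard decodes a raw body and treats a non-zero "code" as an error,
// e.g. for a UID that does not exist.
func parseCard(body []byte) (*cardResponse, error) {
	var r cardResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, err
	}
	if r.Code != 0 {
		return nil, fmt.Errorf("api returned code %d", r.Code)
	}
	return &r, nil
}

func main() {
	// Hypothetical sample body with made-up values.
	sample := `{"code":0,"data":{"card":{"mid":"42","name":"example","face":"","fans":12345,"attention":7,"sign":"hi","level_info":{"current_level":5}}}}`
	r, err := parseCard([]byte(sample))
	if err != nil {
		panic(err)
	}
	fmt.Println(r.Data.Card.Name, r.Data.Card.Fans, r.Data.Card.LevelInfo.CurrentLevel)
}
```

Checking `code` before reading `data` keeps a missing user from being mistaken for a user with zero followers.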

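Next, we'll use WebMagic, a Java crawler framework, to write a crawler that fetches the info of users with UIDs 1~1000 (the `mid` query parameter), keeping only those with at least 10000 followers.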
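The plan boils down to two small pieces: one request URL per UID, and an inclusive follower threshold. A minimal Go sketch, with hypothetical helper names `cardURLs` and `keep`:

```go
package main

import (
	"fmt"
	"strconv"
)

// urlPrefix is the card endpoint found above.
const urlPrefix = "https://api.bilibili.com/x/web-interface/card?mid="

// minFans is inclusive: a user with exactly 10000 followers is kept.
const minFans = 10000

// cardURLs returns one request URL for every UID in [start, end].
func cardURLs(start, end int) []string {
	if end < start {
		return nil
	}
	urls := make([]string, 0, end-start+1)
	for uid := start; uid <= end; uid++ {
		urls = append(urls, urlPrefix+strconv.Itoa(uid))
	}
	return urls
}

// keep reports whether a user should be stored (fans >= 10000).
func keep(fans int64) bool {
	return fans >= minFans
}

func main() {
	urls := cardURLs(1, 1000)
	fmt.Println(len(urls), urls[0])
	fmt.Println(keep(9999), keep(10000))
}
```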

    package com.tangzhe.spider.webmagic;

    import com.alibaba.fastjson.JSONObject;
    import com.mongodb.BasicDBObject;
    import com.mongodb.DBObject;
    import com.mongodb.MongoClient;
    import com.mongodb.MongoClientOptions;
    import com.mongodb.ServerAddress;
    import com.mongodb.client.MongoCollection;
    import com.mongodb.client.MongoDatabase;
    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.Spider;
    import us.codecraft.webmagic.processor.PageProcessor;

    import java.util.ArrayList;
    import java.util.List;

    /**
     * Created by 唐哲
     * 2018-06-21 17:36
     * Crawls Bilibili follower counts
     */
    public class BilibiliPageProcess implements PageProcessor {

        private static MongoCollection<DBObject> collection = null;

        static {
            MongoClientOptions options = MongoClientOptions.builder().connectTimeout(60000).build();
            MongoClient client = new MongoClient(new ServerAddress("localhost", 27017), options);
            // Same database as mongo.db in the Spring Boot config, so the web app can read the results
            MongoDatabase db = client.getDatabase("test");
            collection = db.getCollection("up_master", DBObject.class);
        }

        private Site site = Site.me()
                .setRetrySleepTime(3);
                //.addHeader("Cookie", "<your cookie here>")
                //.addHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.87 Safari/537.36");

        @Override
        public Site getSite() {
            return this.site;
        }

        @Override
        public void process(Page page) {
            JSONObject jsonObject = JSONObject.parseObject(page.getJson().toString());
            JSONObject data = jsonObject.getJSONObject("data");
            // UIDs that don't exist come back without a usable "data" object
            if (data == null || data.getJSONObject("card") == null) {
                return;
            }
            JSONObject card = data.getJSONObject("card");
            String mid = card.getString("mid");       // user ID
            String name = card.getString("name");     // nickname
            String face = card.getString("face");     // avatar URL
            String fans = card.getString("fans");     // follower count
            // Keep users with at least 10000 followers (10000 itself included)
            if (Long.parseLong(fans) < 10000) {
                return;
            }
            String attention = card.getString("attention"); // number of users followed
            String sign = card.getString("sign");           // profile signature
            JSONObject levelInfo = card.getJSONObject("level_info");
            String level = levelInfo.getString("current_level"); // account level

            BasicDBObject document = new BasicDBObject();
            // mid as a string and uid as a number, matching the UpMaster entity read by the web app
            document.append("mid", mid)
                    .append("uid", Long.parseLong(mid))
                    .append("name", name)
                    .append("face", face)
                    .append("fans", Long.parseLong(fans))
                    .append("attention", Long.parseLong(attention))
                    .append("sign", sign)
                    .append("level", Integer.parseInt(level));
            collection.insertOne(document);
        }

        public static void main(String[] args) {
            List<String> urls = new ArrayList<>();
            for (int i = 1; i <= 1000; i++) {
                urls.add("https://api.bilibili.com/x/web-interface/card?mid=" + i);
            }
            Spider.create(new BilibiliPageProcess())
                    .addUrl(urls.toArray(new String[0]))
                    .thread(10)
                    .run();
        }
    }

The crawled data is stored in MongoDB. Open MongoDB to look at what was saved:

All uploaders with UIDs 1~1000 and 10000 or more followers are now stored in the database.

We can write a web application with Spring Boot to browse this data more conveniently through a page and an API.

The pom file:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.tangzhe</groupId>
        <artifactId>spider-demo</artifactId>
        <version>1.0</version>
        <packaging>jar</packaging>

        <name>spider-demo</name>
        <description>this is my spider demo</description>

        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>1.5.10.RELEASE</version>
        </parent>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
            <java.version>1.8</java.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <!-- webmagic -->
            <dependency>
                <groupId>us.codecraft</groupId>
                <artifactId>webmagic-core</artifactId>
                <version>0.6.1</version>
            </dependency>
            <dependency>
                <groupId>us.codecraft</groupId>
                <artifactId>webmagic-extension</artifactId>
                <version>0.6.1</version>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <version>1.16.12</version>
            </dependency>
            <dependency>
                <groupId>commons-logging</groupId>
                <artifactId>commons-logging</artifactId>
                <version>1.2</version>
            </dependency>
            <dependency>
                <groupId>net.sourceforge.htmlunit</groupId>
                <artifactId>htmlunit</artifactId>
                <version>2.23</version>
            </dependency>
            <dependency>
                <groupId>org.apache.commons</groupId>
                <artifactId>commons-lang3</artifactId>
                <version>3.7</version>
            </dependency>
            <dependency>
                <groupId>commons-io</groupId>
                <artifactId>commons-io</artifactId>
                <version>2.6</version>
            </dependency>
            <dependency>
                <groupId>org.seleniumhq.selenium</groupId>
                <artifactId>selenium-java</artifactId>
                <version>2.3.0</version>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-thymeleaf</artifactId>
            </dependency>
            <dependency>
                <groupId>org.jsoup</groupId>
                <artifactId>jsoup</artifactId>
                <version>1.11.3</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>1.2.47</version>
            </dependency>
            <!-- mongodb -->
            <dependency>
                <groupId>org.mongodb</groupId>
                <artifactId>mongo-java-driver</artifactId>
                <version>3.3.0</version>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-data-mongodb</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-freemarker</artifactId>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>

Configuration file:

    server:
      port: 8888
    spring:
      application:
        name: spider-demo
      profiles:
        active: dev
      http:
        encoding:
          force: true
          charset: UTF-8
          enabled: true
      thymeleaf:
        encoding: UTF-8
        cache: false
        mode: HTML5
      # freemarker lives under the same spring key: a second top-level "spring:" would be a duplicate YAML key
      freemarker:
        allow-request-override: false
        cache: true
        check-template-location: true
        charset: UTF-8
        content-type: text/html
        expose-request-attributes: false
        expose-session-attributes: false
        expose-spring-macro-helpers: false
    mongo:
      host: localhost
      port: 27017
      timeout: 60000
      db: test

MongoDB configuration class:

    package com.tangzhe.spider.webmagic.config;

    import com.mongodb.MongoClient;
    import com.mongodb.MongoClientOptions;
    import com.mongodb.ServerAddress;
    import lombok.Data;
    import org.springframework.boot.context.properties.ConfigurationProperties;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.data.mongodb.core.MongoTemplate;
    import org.springframework.data.mongodb.core.SimpleMongoDbFactory;
    import org.springframework.data.mongodb.core.convert.DefaultMongoTypeMapper;
    import org.springframework.data.mongodb.core.convert.MappingMongoConverter;
    import org.springframework.data.mongodb.core.mapping.MongoMappingContext;
    import org.springframework.data.mongodb.gridfs.GridFsTemplate;

    /**
     * MongoDB configuration
     */
    @Configuration
    @ConfigurationProperties(prefix = "mongo")
    @Data
    public class MongoDBConfiguration {

        // MongoDB host
        private String host;
        // MongoDB port
        private Integer port;
        // connection timeout (ms)
        private Integer timeout;
        // MongoDB database name
        private String db;

        /**
         * MongoDB template
         */
        @Bean
        public MongoTemplate mongoTemplate(SimpleMongoDbFactory mongoDbFactory, MappingMongoConverter mappingMongoConverter) {
            return new MongoTemplate(mongoDbFactory, mappingMongoConverter);
        }

        /**
         * Auto-increment ID listener (disabled)
         */
        // @Bean
        // public SaveMongoEventListener saveMongoEventListener() {
        //     return new SaveMongoEventListener();
        // }

        /**
         * GridFS template for file upload and download
         */
        @Bean
        public GridFsTemplate gridFsTemplate(SimpleMongoDbFactory mongoDbFactory, MappingMongoConverter mappingMongoConverter) {
            return new GridFsTemplate(mongoDbFactory, mappingMongoConverter);
        }

        /**
         * mongoDbFactory built from the mongo.* properties
         */
        @Bean
        public SimpleMongoDbFactory mongoDbFactory() {
            MongoClientOptions options = MongoClientOptions.builder().connectTimeout(timeout).build();
            MongoClient client = new MongoClient(new ServerAddress(host, port), options);
            return new SimpleMongoDbFactory(client, db);
        }

        /**
         * mongoMappingContext
         */
        @Bean
        public MongoMappingContext mongoMappingContext() {
            return new MongoMappingContext();
        }

        /**
         * defaultMongoTypeMapper
         */
        @Bean
        public DefaultMongoTypeMapper defaultMongoTypeMapper() {
            // a null type key stops Spring Data from writing the _class field
            return new DefaultMongoTypeMapper(null);
        }

        /**
         * mappingMongoConverter
         */
        @Bean
        public MappingMongoConverter mappingMongoConverter(SimpleMongoDbFactory mongoDbFactory, MongoMappingContext mongoMappingContext, DefaultMongoTypeMapper defaultMongoTypeMapper) {
            MappingMongoConverter mappingMongoConverter = new MappingMongoConverter(mongoDbFactory, mongoMappingContext);
            mappingMongoConverter.setTypeMapper(defaultMongoTypeMapper);
            return mappingMongoConverter;
        }
    }

First, write an entity class that maps to the MongoDB collection:

    package com.tangzhe.spider.webmagic.entity;

    import lombok.Data;
    import org.springframework.data.mongodb.core.mapping.Document;

    @Document(collection = "up_master")
    @Data
    public class UpMaster {
        // user ID as a string, as returned by the API
        private String mid;
        // the same user ID as a number
        private Long uid;
        private String name;
        // avatar URL
        private String face;
        // follower count
        private Long fans;
        // number of users followed
        private Long attention;
        // profile signature
        private String sign;
        // account level
        private Integer level;
    }

Persistence layer:

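It declares three methods:

The first returns all users ordered by mid (the UID)

The second returns all users sorted by follower count, highest first

The third returns the top ten users by follower count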

    package com.tangzhe.spider.webmagic.repository;

    import com.tangzhe.spider.webmagic.entity.UpMaster;
    import org.springframework.data.repository.CrudRepository;

    import java.util.List;

    public interface UpMasterRepository extends CrudRepository<UpMaster, Long> {

        List<UpMaster> findAllByOrderByMid();

        List<UpMaster> findAllByOrderByFansDesc();

        List<UpMaster> findTop10ByOrderByFansDesc();
    }
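Spring Data derives these queries from the method names: `findTop10ByOrderByFansDesc` sorts by `fans` in descending order and keeps at most ten documents. For readers new to the naming convention, this minimal in-memory Go sketch (the hypothetical `topByFans`, not part of the project) computes the same result over a slice instead of a MongoDB collection:

```go
package main

import (
	"fmt"
	"sort"
)

// upMaster holds just the fields needed for the ranking.
type upMaster struct {
	Name string
	Fans int64
}

// topByFans sorts a copy of ups by Fans, highest first, and keeps at most n entries,
// mirroring what findTop10ByOrderByFansDesc asks MongoDB for when n is 10.
func topByFans(ups []upMaster, n int) []upMaster {
	sorted := make([]upMaster, len(ups))
	copy(sorted, ups)
	sort.SliceStable(sorted, func(i, j int) bool { return sorted[i].Fans > sorted[j].Fans })
	if len(sorted) > n {
		sorted = sorted[:n]
	}
	return sorted
}

func main() {
	ups := []upMaster{{"a", 10000}, {"b", 50000}, {"c", 20000}}
	for i, u := range topByFans(ups, 2) {
		fmt.Printf("NO.%d %s %d\n", i+1, u.Name, u.Fans)
	}
}
```

In the real application the sort and limit run inside MongoDB, so only ten documents cross the wire.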

Service layer:

Query the top 10 uploaders:

    package com.tangzhe.spider.webmagic.service;

    import com.tangzhe.spider.webmagic.entity.UpMaster;
    import com.tangzhe.spider.webmagic.repository.UpMasterRepository;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Service;

    import java.util.List;

    @Service
    public class UpMasterServiceImpl implements UpMasterService {

        @Autowired
        private UpMasterRepository upMasterRepository;

        // Despite its name, this returns the top 10 uploaders by follower count
        @Override
        public List<UpMaster> findAllOrderByMid() {
            return upMasterRepository.findTop10ByOrderByFansDesc();
        }

        @Override
        public void add(UpMaster upMaster) {
            upMasterRepository.save(upMaster);
        }
    }

Controller layer:

This endpoint returns the top 10 uploaders currently stored in MongoDB:

    package com.tangzhe.spider.webmagic.controller;

    import com.tangzhe.spider.webmagic.entity.UpMaster;
    import com.tangzhe.spider.webmagic.service.UpMasterService;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.util.List;

    @RestController
    @RequestMapping("/up")
    public class UpMasterController {

        @Autowired
        private UpMasterService upMasterService;

        @GetMapping("/list")
        public List<UpMaster> list() {
            List<UpMaster> list = upMasterService.findAllOrderByMid();
            return list;
        }
    }

Finally, a front-end page that shows the follower ranking:

    <!DOCTYPE html>
    <html lang="en">
    <head>
      <meta charset="UTF-8" />
      <title>Bilibili uploader follower ranking</title>
      <style type="text/css">
        .face { width: 128px; height: 128px; }
        .content { position: absolute; }
        .info { float: left; padding-right: 250px; }
      </style>
      <script type="text/javascript" src="/jquery-1.8.3.js"></script>
      <script type="text/javascript">
        // Wait for the DOM so that .content exists when the results arrive
        $(function () {
          $.ajax({
            url: "/up/list",
            type: "GET",
            success: function (data) {
              $.each(data, function (i, up) {
                var no = i + 1;
                var name = "<div>NO." + no + " " + up.name + "</div>";
                var uid = "<div>UID: " + up.uid + "</div>";
                var face = "<img class=\"face\" src=\"" + up.face + "\" />";
                var fans = "<div>Fans: " + up.fans + "</div><br/>";
                var info = "<div class=\"info\">" + name + uid + face + fans + "</div>";
                $(".content").append(info);
              });
            }
          });
        });
      </script>
    </head>
    <body>
      <div class="content"></div>
    </body>
    </html>

Now run the Spring Boot main class to start the project.

Visit http://localhost:8888/

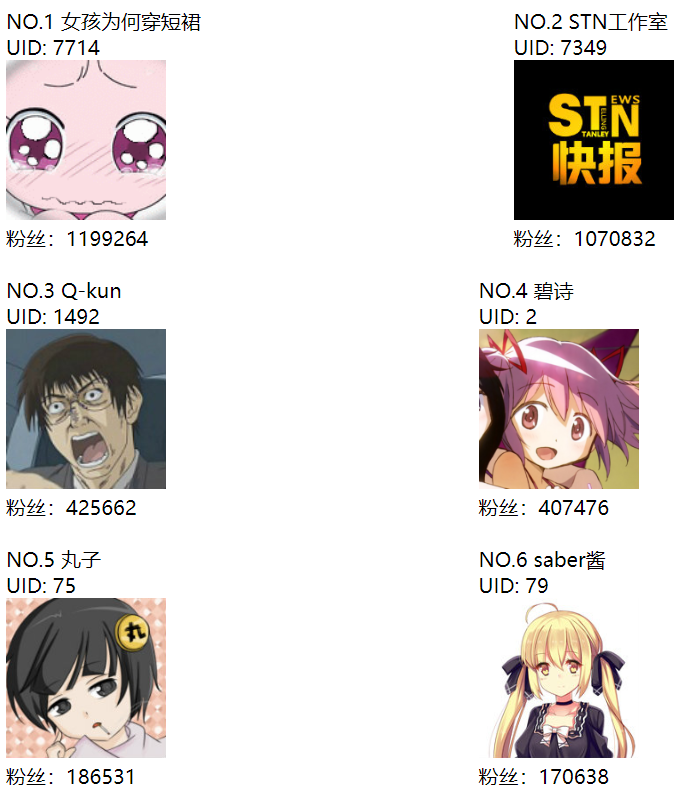

The screenshot shows the top ten uploaders, among Bilibili users with UIDs 1~5000, who have 10K or more followers.

Looks pretty good: a crawler plus a web page to display the results, all done~

However, when crawling tens of thousands or even millions of Bilibili users, the crawler is slow, frankly very slow, and that bothered me a lot...

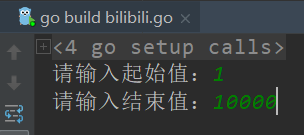

So I decided to write the same crawler in Go to see whether it would be faster. No sooner said than done~

For simplicity, I didn't split it into layers; the whole crawler lives in a single file:

    package main

    import (
        "encoding/json"
        "fmt"
        "log"
        "net/http"
        "strconv"

        "gopkg.in/mgo.v2"
    )

    // Result is the response shape of the Bilibili card API
    type Result struct {
        Code    int    `json:"code"`
        Message string `json:"message"`
        Ttl     int    `json:"ttl"`
        Data    Data   `json:"data"`
    }

    type Data struct {
        Card UpMaster `json:"card"`
    }

    // UpMaster is an uploader; mgo stores the fields under lowercased names (mid, uid, name, ...)
    type UpMaster struct {
        Mid       string `json:"mid"`
        Uid       int64  `json:"uid"`
        Name      string `json:"name"`
        Face      string `json:"face"`
        Fans      int64  `json:"fans"`
        Attention int64  `json:"attention"`
        Sign      string `json:"sign"`
    }

    const urlPrefix = "https://api.bilibili.com/x/web-interface/card?mid=" // URL prefix

    var conn *mgo.Collection

    func init() {
        // Connect to MongoDB
        session, err := mgo.Dial("localhost:27017")
        if err != nil {
            panic(err)
        }
        session.SetMode(mgo.Monotonic, true)
        conn = session.DB("test").C("up_master")
    }

    // Work reads a UID range from stdin and runs f for every UID in its own goroutine
    func Work(f func(i int, page chan<- int)) {
        var start, end int
        fmt.Printf("Enter the start UID: ")
        fmt.Scan(&start)
        fmt.Printf("Enter the end UID: ")
        fmt.Scan(&end)
        fmt.Printf("Crawling follower counts for Bilibili UIDs %d~%d\n", start, end)

        page := make(chan int)
        for i := start; i <= end; i++ {
            go f(i, page)
        }
        // Wait until every goroutine has reported back
        for i := start; i <= end; i++ {
            fmt.Printf("%d done\n", <-page)
        }
    }

    // SpideBilibiliFansByUid fetches one user's card and stores it if fans >= 10000
    func SpideBilibiliFansByUid(i int, page chan<- int) {
        // Always report completion, even on errors; otherwise Work would block forever
        defer func() { page <- i }()

        url := urlPrefix + strconv.Itoa(i)
        resp, err := http.Get(url)
        if err != nil {
            log.Println("http.Get err =", err)
            return
        }
        defer resp.Body.Close()

        // Decode the JSON body straight into a Result
        var res Result
        if err := json.NewDecoder(resp.Body).Decode(&res); err != nil {
            log.Println("decode err =", err)
            return
        }
        if res.Code != 0 {
            return // e.g. the UID does not exist
        }

        up := res.Data.Card
        uid, _ := strconv.Atoi(up.Mid)
        up.Uid = int64(uid)

        // Store uploaders with at least 10000 followers
        if up.Fans >= 10000 {
            if err := conn.Insert(up); err != nil {
                log.Println("insert err =", err)
            }
        }
    }

    func main() {
        Work(SpideBilibiliFansByUid)
    }

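Go supports concurrency at the language level: put the `go` keyword in front of a function call and it runs in a new goroutine, a lightweight thread managed by the Go runtime, e.g. `go func() { ... }()`.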
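The crawler above starts one goroutine per UID, so a range of 1~10000 fires up to 10000 requests at once. If that runs into rate limiting or connection limits, a fixed-size worker pool is a common alternative. A minimal sketch, assuming a hypothetical `fetch` callback in place of the real HTTP request and MongoDB insert; `crawlRange` is likewise an illustrative name:

```go
package main

import (
	"fmt"
	"sync"
)

// crawlRange calls fetch for every UID in [start, end] using at most `workers`
// goroutines at a time and returns the UIDs for which fetch returned true.
func crawlRange(start, end, workers int, fetch func(uid int) bool) []int {
	if workers < 1 {
		workers = 1 // at least one worker, or sending on jobs would block forever
	}
	jobs := make(chan int)
	var (
		mu   sync.Mutex
		wg   sync.WaitGroup
		kept []int
	)
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for uid := range jobs {
				if fetch(uid) {
					mu.Lock()
					kept = append(kept, uid)
					mu.Unlock()
				}
			}
		}()
	}
	for uid := start; uid <= end; uid++ {
		jobs <- uid
	}
	close(jobs) // lets the workers' range loops finish
	wg.Wait()
	return kept
}

func main() {
	// Hypothetical fetch: "keep" every UID divisible by 100.
	kept := crawlRange(1, 1000, 10, func(uid int) bool { return uid%100 == 0 })
	fmt.Println(len(kept))
}
```

The pool size caps concurrent requests regardless of how wide the UID range is; the order of the returned UIDs is not deterministic.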

Run the program (start MongoDB first):

The two numbers entered are the start and end Bilibili UIDs; in the screenshot they are 1~10000.

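In my tests the Go version was faster than the Java WebMagic version by a wide margin, so I chose Go for the crawler and kept Spring Boot for the web project. Keep in mind that the two setups differ in more than language: the WebMagic spider runs 10 threads and never adjusts the `Site`'s `sleepTime` (the pause between requests), while the Go program starts one goroutine per UID with no limit.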

Finally, here is the data for uploaders with UIDs 1~10000 and more than 10K followers:

The top ten as shown on the web page:

---

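DuangDuangDuangDuang! Space is limited, so not every uploader is shown here; after all, Bilibili has an enormous number of users (how long would it take to crawl them all...)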

More rankings will follow, gradually getting closer to crawling the follower counts of every uploader.

Stay tuned~