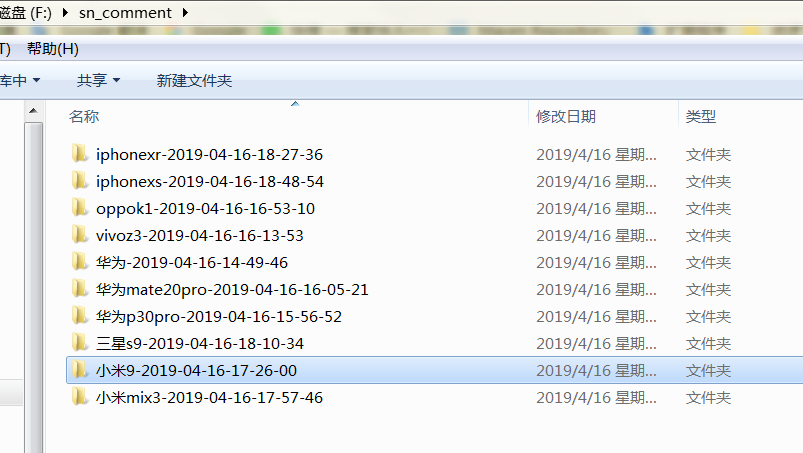

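My graduation project needs a large number of product reviews. The datasets I found online are fairly dated, so I decided to scrape them myself.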

The proxy pool is proxy_pool, on GitHub: https://github.com/jhao104/proxy_pool

User agents come from fake_useragent.

    # Shops with many reviews (Suning recommendations)
    https://tuijian.suning.com/recommend-portal/recommendv2/biz.jsonp?parameter=%E5%8D%8E%E4%B8%BA&sceneIds=2-1&count=10

    # Reviews
    https://review.suning.com/ajax/cluster_review_lists/general-30259269-000000010748901691-0000000000-total-1-default-10-----reviewList.htm?callback=reviewList

    # clusterId
    # It sits in the href of the "view all reviews" link. Testing showed that the href is added by JavaScript, so there are two options:
    # 1. Match the clusterId in the JavaScript source
    # 2. Or use selenium/phantomjs to request the page after the JavaScript has run, then parse the HTML to get the href
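Option 1 and the review URL above can be tried offline before touching the network. The snippet below is only a sketch: the sample page fragment and JSONP string are made up for illustration, the helper names (`extract_cluster_id`, `build_review_url`, `unwrap_jsonp`) are my own, and the 8-character clusterId is an assumption carried over from the regex used in the spider.

```python
import json
import re

# Assumption: the product page embeds the id as "clusterId":"<8 characters>".
CLUSTER_ID_RE = re.compile(r'"clusterId":"(.{8})"')
# Matches a JSONP wrapper such as reviewList({...}) and captures the payload.
JSONP_RE = re.compile(r"^\s*reviewList\((.*)\)\s*;?\s*$", re.S)

REVIEW_URL = ("https://review.suning.com/ajax/cluster_review_lists/"
              "general-{}-{}-0000000000-total-{}-default-10-----reviewList.htm")


def extract_cluster_id(page_source):
    """Return the clusterId found in the page source, or None."""
    match = CLUSTER_ID_RE.search(page_source)
    return match.group(1) if match else None


def build_review_url(cluster_id, sug_goods_code, page):
    """Fill the review URL template: clusterId, sugGoodsCode, page number."""
    return REVIEW_URL.format(cluster_id, sug_goods_code, page)


def unwrap_jsonp(text):
    """Strip the reviewList(...) wrapper and parse the JSON inside."""
    match = JSONP_RE.match(text)
    if match is None:
        raise ValueError("not a reviewList(...) JSONP response")
    return json.loads(match.group(1))


# Made-up fragments for illustration, not real Suning responses.
sample_page = 'var sn = {"clusterId":"30259269","other":"x"};'
sample_jsonp = 'reviewList({"commodityReviews": [{"content": "ok"}]})'

cluster_id = extract_cluster_id(sample_page)
url = build_review_url(cluster_id, "000000010748901691", 1)
data = unwrap_jsonp(sample_jsonp)
print(cluster_id)
print(url)
print(data["commodityReviews"][0]["content"])
```

Returning `None` when nothing matches lets the caller skip a product instead of crashing on an `IndexError`.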

Code:

    # -*- coding: utf-8 -*-
    # @author: Tele
    # @Time : 2019/04/15 8:20 PM
    import json
    import os
    import re
    import time

    import requests
    from fake_useragent import UserAgent


    class SNSplider:
        # Loop switch: parse_url sets it to False when a product has no more reviews
        flag = True
        # The page source embeds the id as "clusterId":"<8 characters>"
        regex_cluser_id = re.compile(r'"clusterId":"(.{8})"')
        # Captures the JSON payload inside the reviewList(...) JSONP wrapper
        regex_comment = re.compile(r"reviewList\((.*)\)")

        @staticmethod
        def get_proxy():
            # Ask the local proxy_pool service for a proxy address
            return requests.get("http://127.0.0.1:5010/get/").content.decode()

        @staticmethod
        def get_ua():
            ua = UserAgent()
            return ua.random

        def __init__(self, kw_list):
            self.kw_list = kw_list
            # Review URL; placeholder order: cluster_id, sugGoodsCode, page number
            self.url_temp = "https://review.suning.com/ajax/cluster_review_lists/general-{}-{}-0000000000-total-{}-default-10-----reviewList.htm"
            self.headers = {
                "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",
            }
            # Note: requests picks the proxy by URL scheme, so only an "https" entry
            # would apply to the https URLs requested below
            self.proxies = {
                "http": None
            }
            self.parent_dir = None
            self.file_dir = None

        # Refresh the User-Agent and the proxy
        def check(self):
            self.headers["User-Agent"] = SNSplider.get_ua()
            proxy = "http://" + SNSplider.get_proxy()
            self.proxies["http"] = proxy
            print("ua:", self.headers["User-Agent"])
            print("proxy:", self.proxies["http"])

        # Reviews
        def parse_url(self, cluster_id, sugGoodsCode, page):
            url = self.url_temp.format(cluster_id, sugGoodsCode, page)
            response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)
            if response.status_code != 200:
                print("review page request failed")
                # Stop paging this product; otherwise the caller's while loop never ends
                SNSplider.flag = False
                return
            print(url)
            matches = SNSplider.regex_comment.findall(response.content.decode())
            # An empty body, a missing JSONP wrapper, or an empty review list
            # all mean there is nothing more to fetch
            data = json.loads(matches[0]) if matches else {}
            comment_list = data.get("commodityReviews") or []
            if not comment_list:
                SNSplider.flag = False
                return
            item_list = list()
            for comment in comment_list:
                item = dict()
                try:
                    # Product name
                    item["referenceName"] = comment["commodityInfo"]["commodityName"]
                except (KeyError, TypeError):
                    item["referenceName"] = None
                # Review time
                item["creationTime"] = comment["publishTime"]
                # Content
                item["content"] = comment["content"]
                # Labels
                item["label"] = comment["labelNames"]
                item_list.append(item)

            # Save: append one JSON array per page
            with open(self.file_dir, "a", encoding="utf-8") as file:
                file.write(json.dumps(item_list, ensure_ascii=False, indent=2))
                file.write("\n")
            time.sleep(5)

        # Extract product info
        def get_product_info(self):
            url_temp = "https://tuijian.suning.com/recommend-portal/recommendv2/biz.jsonp?parameter={}&sceneIds=2-1&count=10"
            result_list = list()
            for kw in self.kw_list:
                url = url_temp.format(kw)
                response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)
                if response.status_code == 200:
                    kw_dict = dict()
                    id_list = list()
                    data = json.loads(response.content.decode())
                    skus_list = data["sugGoods"][0]["skus"]
                    for skus in skus_list:
                        item = dict()
                        sugGoodsCode = skus["sugGoodsCode"]
                        item["sugGoodsCode"] = sugGoodsCode
                        # Request the clusterId from the product page
                        item["cluster_id"] = self.get_cluster_id(sugGoodsCode)
                        id_list.append(item)
                    kw_dict["title"] = kw
                    kw_dict["id_list"] = id_list
                    result_list.append(kw_dict)
            return result_list

        # clusterId
        def get_cluster_id(self, sugGoodsCode):
            self.check()
            url = "https://product.suning.com/0000000000/{}.html".format(sugGoodsCode[6:])
            response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)
            if response.status_code == 200:
                cluser_id = None
                try:
                    cluser_id = SNSplider.regex_cluser_id.findall(response.content.decode())[0]
                except (IndexError, UnicodeDecodeError):
                    # No clusterId found in the page source
                    pass
                return cluser_id
            else:
                print("cluster id request failed")

128 def get_comment(self, item_list):

129 if len(item_list) > 0:

130 for item in item_list:

131 id_list = item["id_list"]

132 item_title = item["title"]

133 if len(id_list) > 0:

134 self.parent_dir = "f:/sn_comment/" + item_title + time.strftime("-%Y-%m-%d-%H-%M-%S",

135 time.localtime(time.time()))

136 if not os.path.exists(self.parent_dir):

137 os.makedirs(self.parent_dir)

138 for product_code in id_list:

139 # 检查proxy,ua

140 sugGoodsCode = product_code["sugGoodsCode"]

141 cluster_id = product_code["cluster_id"]

142 if not cluster_id:

143 continue

144 page = 1

145 # 检查目录

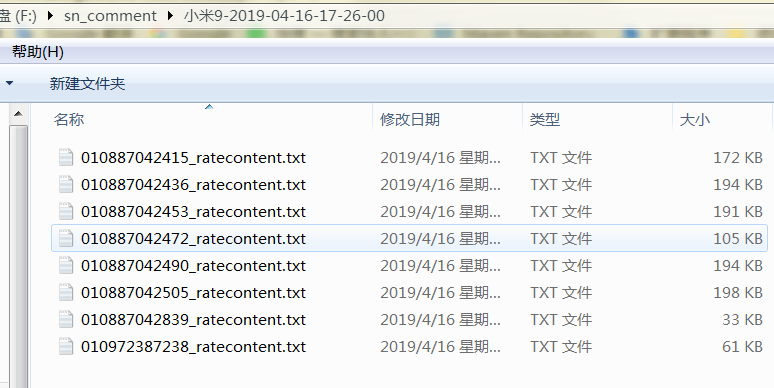

146 self.file_dir = self.parent_dir + "/" + sugGoodsCode[6::] + "_ratecontent.txt"

147 self.check()

148 while SNSplider.flag:

149 self.parse_url(cluster_id, sugGoodsCode, page)

150 page += 1

151 SNSplider.flag = True

152 else:

153 print("---error,empty id list---")

154 else:

155 print("---error,empty item list---")

156

        def run(self):
            self.check()
            item_list = self.get_product_info()
            print(item_list)
            self.get_comment(item_list)


    def main():
        # "华为mate20pro", "vivoz3", "oppok1", "荣耀8x", "小米9", "小米mix3", "三星s9", "iphonexr", "iphonexs"
        # "华为p30pro", "华为mate20pro", "vivoz3", "oppok1", "荣耀8x", "小米9"
        kw_list = ["小米mix3", "三星s9", "iphonexr", "iphonexs"]
        splider = SNSplider(kw_list)
        splider.run()


    if __name__ == '__main__':
        main()
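Because each page is appended to the `_ratecontent.txt` file as its own JSON array, the file as a whole is not a single valid JSON document and `json.load` will refuse it. Here is a rough sketch of one way to read the reviews back, using `json.JSONDecoder.raw_decode` from the standard library. The helper name `iter_saved_reviews` and the sample text are my own, for illustration only.

```python
import json


def iter_saved_reviews(text):
    """Yield every review dict from text made of back-to-back JSON arrays."""
    decoder = json.JSONDecoder()
    pos = 0
    while pos < len(text):
        # raw_decode does not skip leading whitespace, so step over the
        # newlines that separate consecutive pages.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            break
        page, pos = decoder.raw_decode(text, pos)
        for review in page:
            yield review


# Made-up sample mimicking two pages appended one after the other.
sample = '[{"content": "good"}]\n[{"content": "bad"}, {"content": "fine"}]\n'
reviews = list(iter_saved_reviews(sample))
print(len(reviews))
```

For a real file, read it with `open(path, encoding="utf-8").read()` and pass the text to the helper.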