Environment

zk: 3.4.10

hadoop 2.7.7

jdk8

hbase 2.0.2

Three machines, hadoop002, hadoop003 and hadoop004, with Hadoop already installed and configured

1. Upload hbase-2.1.1-bin.tar.gz to hadoop002 and extract it to /opt/module/hbase-2.1.1 (for example with `tar -zxvf hbase-2.1.1-bin.tar.gz -C /opt/module/`).

2. Configuration files

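In hbase-env.sh, change two lines.

JAVA_HOME points to the JDK. HBASE_MANAGES_ZK=false tells HBase not to start its bundled ZooKeeper, so it uses the existing external ZooKeeper cluster instead.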

```bash
export JAVA_HOME=/opt/module/jdk1.8.0_181
export HBASE_MANAGES_ZK=false
```

hbase-site.xml

```xml
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://hadoop002:9000/hbase</value>
  </property>

  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>

  <property>
    <name>hbase.master</name>
    <value>hadoop002:16000</value>
  </property>

  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>hadoop002:2181,hadoop003:2181,hadoop004:2181</value>
  </property>

  <!-- Only used when HBase manages its own ZooKeeper (HBASE_MANAGES_ZK=true) -->
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/opt/module/zookeeper-3.4.10/zkData</value>
  </property>
</configuration>
```

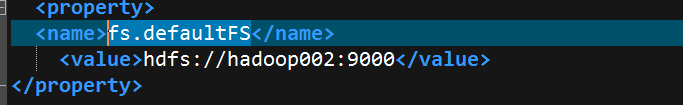

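Note that hbase.rootdir must match fs.defaultFS in Hadoop's core-site.xml: the same scheme, host and port, followed by the HBase directory. In my setup fs.defaultFS is hdfs://hadoop002:9000, so hbase.rootdir is hdfs://hadoop002:9000/hbase.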
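A mismatch here is easy to miss, so it can help to compare the two values before starting HBase. The sketch below is a grep/sed approximation, not a real XML parser: it assumes each `<name>` is directly followed by its `<value>`, as in the files above. The helper names `get_xml_prop` and `check_rootdir` are hypothetical, and the Hadoop path in the usage line is an assumption about where Hadoop is installed.

```bash
# Print the <value> of the Hadoop-style property NAME found in FILE.
# Assumes <name> is immediately followed by <value> inside each <property>.
get_xml_prop() {
  local file=$1 name=$2
  tr -d '\n\r' < "$file" \
    | grep -o "<name>[[:space:]]*${name}[[:space:]]*</name>[[:space:]]*<value>[^<]*</value>" \
    | sed -e 's/.*<value>[[:space:]]*//' -e 's/[[:space:]]*<\/value>.*//' \
    | head -n 1
}

# Succeed only if hbase.rootdir lives under the file system named by fs.defaultFS.
check_rootdir() {
  local hbase_site=$1 core_site=$2 rootdir fs
  rootdir=$(get_xml_prop "$hbase_site" hbase.rootdir)
  fs=$(get_xml_prop "$core_site" fs.defaultFS)
  fs=${fs%/}
  if [ -z "$fs" ]; then
    echo "fs.defaultFS not found in $core_site" >&2
    return 1
  fi
  case "$rootdir" in
    "$fs"/?*) echo "OK: $rootdir is under $fs" ;;
    *) echo "MISMATCH: hbase.rootdir=$rootdir fs.defaultFS=$fs" >&2
       return 1 ;;
  esac
}
```

Usage (hypothetical Hadoop path): `check_rootdir /opt/module/hbase-2.1.1/conf/hbase-site.xml /opt/module/hadoop-2.7.7/etc/hadoop/core-site.xml`. A non-zero exit status means the two values disagree.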

regionservers (one RegionServer host per line)

```
hadoop002
hadoop003
hadoop004
```

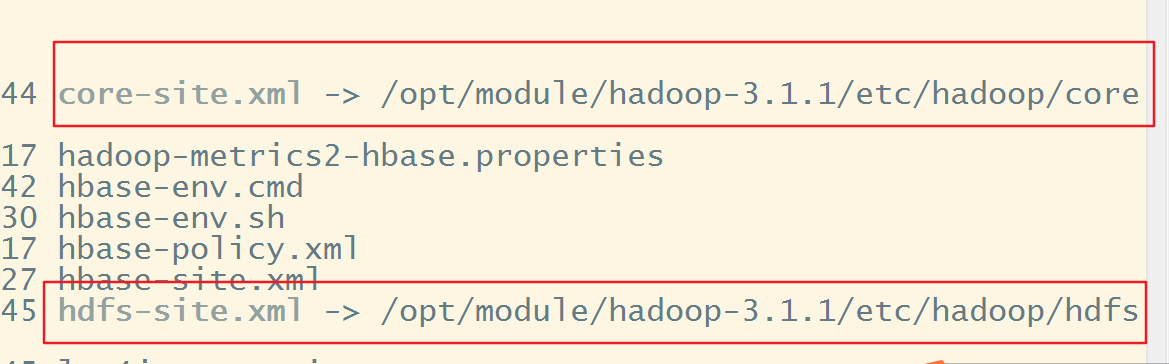
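In a multi-host setup like this one, start-hbase.sh starts the RegionServers listed in this file over ssh, hadoop002 included, so each host must accept a password-less (key-based) login. The sketch below checks that before the first start. `read_regionservers` and `check_ssh` are hypothetical helpers; `BatchMode=yes` makes ssh fail instead of prompting for a password.

```bash
# Print the hosts in a regionservers file, one per line,
# dropping "#" comments, surrounding whitespace and blank lines.
read_regionservers() {
  sed -e 's/#.*$//' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e '/^$/d' "$1"
}

# Try a non-interactive ssh login to every listed host; fail if any host refuses.
check_ssh() {
  local host rc=0
  for host in $(read_regionservers "$1"); do
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "ok: $host"
    else
      echo "FAILED: $host" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_ssh /opt/module/hbase-2.1.1/conf/regionservers`, run as the user that will start HBase on hadoop002.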

Copy Hadoop's core-site.xml and hdfs-site.xml into HBase's conf directory, or create symbolic links to them instead.
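A minimal sketch of the symbolic-link option. The function name `link_hadoop_conf` is hypothetical, and the Hadoop configuration directory in the usage line (/opt/module/hadoop-2.7.7/etc/hadoop) is an assumed install path. Links have the advantage that later edits to Hadoop's files are picked up by HBase (after a restart) without copying again.

```bash
# link_hadoop_conf HADOOP_CONF_DIR HBASE_CONF_DIR
# Symlink core-site.xml and hdfs-site.xml from Hadoop's conf dir into HBase's.
link_hadoop_conf() {
  local src=$1 dst=$2 f
  for f in core-site.xml hdfs-site.xml; do
    if [ ! -f "$src/$f" ]; then
      echo "not found: $src/$f" >&2
      return 1
    fi
    # -s: create a symbolic link; -f: replace an existing file or link of that name
    ln -sf "$src/$f" "$dst/$f"
  done
}
```

Usage: `link_hadoop_conf /opt/module/hadoop-2.7.7/etc/hadoop /opt/module/hbase-2.1.1/conf`. Because the links use absolute paths, they stay valid on hadoop003 and hadoop004 as long as Hadoop is installed at the same path there.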

3. Replacing jars

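The Hadoop jars bundled in hbase-2.1.1-bin.tar.gz are all version 2.7.7. If your Hadoop is a different version, replace every jar whose name starts with hadoop.

To do so, go into HBase's lib directory, run `rm -rf hadoop-*.jar`, and then upload the jars from your own Hadoop version. You also need to replace the ZooKeeper jar with the one matching your ZooKeeper version.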

The jars involved (3.1.1 in my case; change them to your version):

```
hadoop-annotations-3.1.1.jar
hadoop-auth-3.1.1.jar
hadoop-client-3.1.1.jar
hadoop-common-3.1.1.jar
hadoop-hdfs-3.1.1.jar
hadoop-mapreduce-client-app-3.1.1.jar
hadoop-mapreduce-client-common-3.1.1.jar
hadoop-mapreduce-client-core-3.1.1.jar
hadoop-mapreduce-client-hs-3.1.1.jar
hadoop-mapreduce-client-hs-plugins-3.1.1.jar
hadoop-mapreduce-client-jobclient-3.1.1-tests.jar
hadoop-mapreduce-client-jobclient-3.1.1.jar
hadoop-mapreduce-client-shuffle-3.1.1.jar
hadoop-yarn-api-3.1.1.jar
hadoop-yarn-applications-distributedshell-3.1.1.jar
hadoop-yarn-applications-unmanaged-am-launcher-3.1.1.jar
hadoop-yarn-client-3.1.1.jar
hadoop-yarn-common-3.1.1.jar
hadoop-yarn-server-applicationhistoryservice-3.1.1.jar
hadoop-yarn-server-common-3.1.1.jar
hadoop-yarn-server-nodemanager-3.1.1.jar
hadoop-yarn-server-resourcemanager-3.1.1.jar
hadoop-yarn-server-tests-3.1.1.jar
hadoop-yarn-server-web-proxy-3.1.1.jar
```
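Instead of uploading each jar by hand, they can be located inside the Hadoop installation on the same machine. This is a sketch under assumptions: `replace_hadoop_jars` is a hypothetical helper, and it assumes the jars sit somewhere under Hadoop's share/hadoop directory. Not every name in the list is necessarily shipped with a given Hadoop binary distribution, so the function reports any jar it cannot find rather than guessing.

```bash
# replace_hadoop_jars HBASE_LIB HADOOP_SHARE JAR...
# Remove HBase's bundled hadoop-*.jar files, then copy each named JAR found under HADOOP_SHARE.
# Returns 1 if any requested jar could not be found.
replace_hadoop_jars() {
  local lib=$1 share=$2 jar src missing=0
  shift 2
  # Same effect as: cd lib && rm -rf hadoop-*.jar
  rm -f "$lib"/hadoop-*.jar
  for jar in "$@"; do
    src=$(find "$share" -type f -name "$jar" | head -n 1)
    if [ -n "$src" ]; then
      cp "$src" "$lib/"
    else
      echo "not found under $share: $jar" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage (paths assumed): `replace_hadoop_jars /opt/module/hbase-2.1.1/lib /opt/module/hadoop-3.1.1/share/hadoop hadoop-annotations-3.1.1.jar hadoop-auth-3.1.1.jar ...` with the full list above. The ZooKeeper jar is handled separately; in a ZooKeeper 3.4.x distribution it sits at the top of the install directory (for example zookeeper-3.4.10.jar).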

4. Distribute HBase to hadoop003 and hadoop004
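One way to do the distribution is to copy the whole directory to the same path on each node. This sketch uses rsync, which must be installed on all three machines (scp -r is an alternative); `distribute_dir` and the `RUN=echo` dry-run switch are hypothetical conveniences, not part of HBase. With `-a`, rsync preserves symbolic links, such as the core-site.xml/hdfs-site.xml links mentioned above, as links.

```bash
# distribute_dir DIR HOST...: copy DIR into the same parent directory on every HOST.
# Set RUN=echo to print the commands instead of running them.
distribute_dir() {
  local src=${1%/} host
  shift
  for host in "$@"; do
    # No trailing slash on $src, so rsync recreates the directory itself on the target
    $RUN rsync -a "$src" "$host:$(dirname "$src")/" || return 1
  done
}
```

Usage: `distribute_dir /opt/module/hbase-2.1.1 hadoop003 hadoop004`, or prefix `RUN=echo` to preview the commands first.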

5. Time synchronization

See https://www.cnblogs.com/tele-share/p/9513300.html
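Clock sync matters because the HMaster rejects a RegionServer whose clock differs from its own by more than `hbase.master.maxclockskew` (30000 ms by default). A rough check after syncing is sketched below; `max_skew` and `cluster_skew` are hypothetical helpers, and because hosts are queried one after another over ssh, the result is only accurate to about a second plus connection latency.

```bash
# max_skew EPOCH_SECONDS...: print the spread (max - min) of the given timestamps.
max_skew() {
  [ "$#" -gt 0 ] || return 1
  local t min=$1 max=$1
  for t in "$@"; do
    if [ "$t" -lt "$min" ]; then min=$t; fi
    if [ "$t" -gt "$max" ]; then max=$t; fi
  done
  echo $((max - min))
}

# cluster_skew HOST...: read each host's clock (Unix seconds) over ssh and print the spread.
cluster_skew() {
  local host t times=()
  for host in "$@"; do
    t=$(ssh -n "$host" date +%s) || return 1
    times+=("$t")
  done
  max_skew "${times[@]}"
}
```

Usage: `cluster_skew hadoop002 hadoop003 hadoop004` should print a value far below 30.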

6. Start the cluster

Start ZooKeeper first, then Hadoop; once both are fully up, start HBase:

start-hbase.sh
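The start order above can be wrapped in a small script run on hadoop002. This is a sketch under several assumptions: the install paths are hypothetical defaults, password-less ssh is set up, and JAVA_HOME is exported in /etc/profile on every node (non-interactive ssh shells may not read it, hence the explicit `. /etc/profile`). `start_cluster` and `RUN=echo` are illustrative names, not HBase tooling.

```bash
# Hypothetical install locations; override via the environment if yours differ.
ZK_HOME=${ZK_HOME:-/opt/module/zookeeper-3.4.10}
HADOOP_HOME=${HADOOP_HOME:-/opt/module/hadoop-2.7.7}
HBASE_HOME=${HBASE_HOME:-/opt/module/hbase-2.1.1}

# start_cluster ZK_HOST...: start ZooKeeper on each host, then HDFS, then HBase.
# Set RUN=echo to print the commands instead of executing them.
start_cluster() {
  local host
  for host in "$@"; do
    $RUN ssh -n "$host" ". /etc/profile; $ZK_HOME/bin/zkServer.sh start" || return 1
  done
  $RUN "$HADOOP_HOME/sbin/start-dfs.sh" || return 1
  # Block until the NameNode has left safe mode, so HBase can write to hbase.rootdir
  $RUN "$HADOOP_HOME/bin/hdfs" dfsadmin -safemode wait || return 1
  $RUN "$HBASE_HOME/bin/start-hbase.sh"
}
```

Usage: `start_cluster hadoop002 hadoop003 hadoop004`. HBase itself only needs HDFS; add start-yarn.sh if you also run MapReduce jobs.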

Then check the running processes with jps:

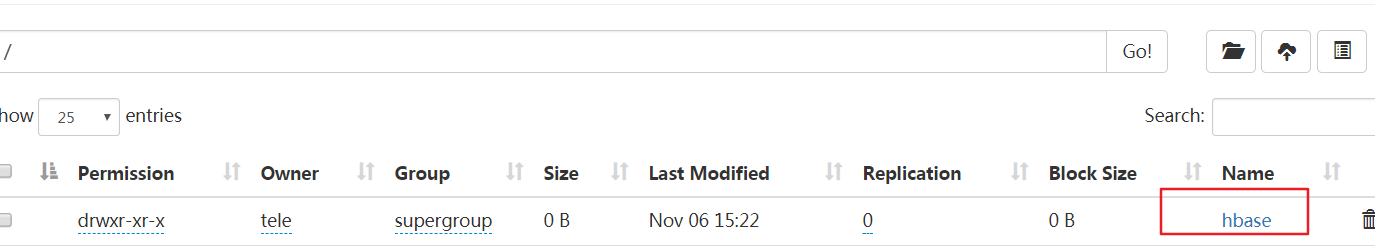

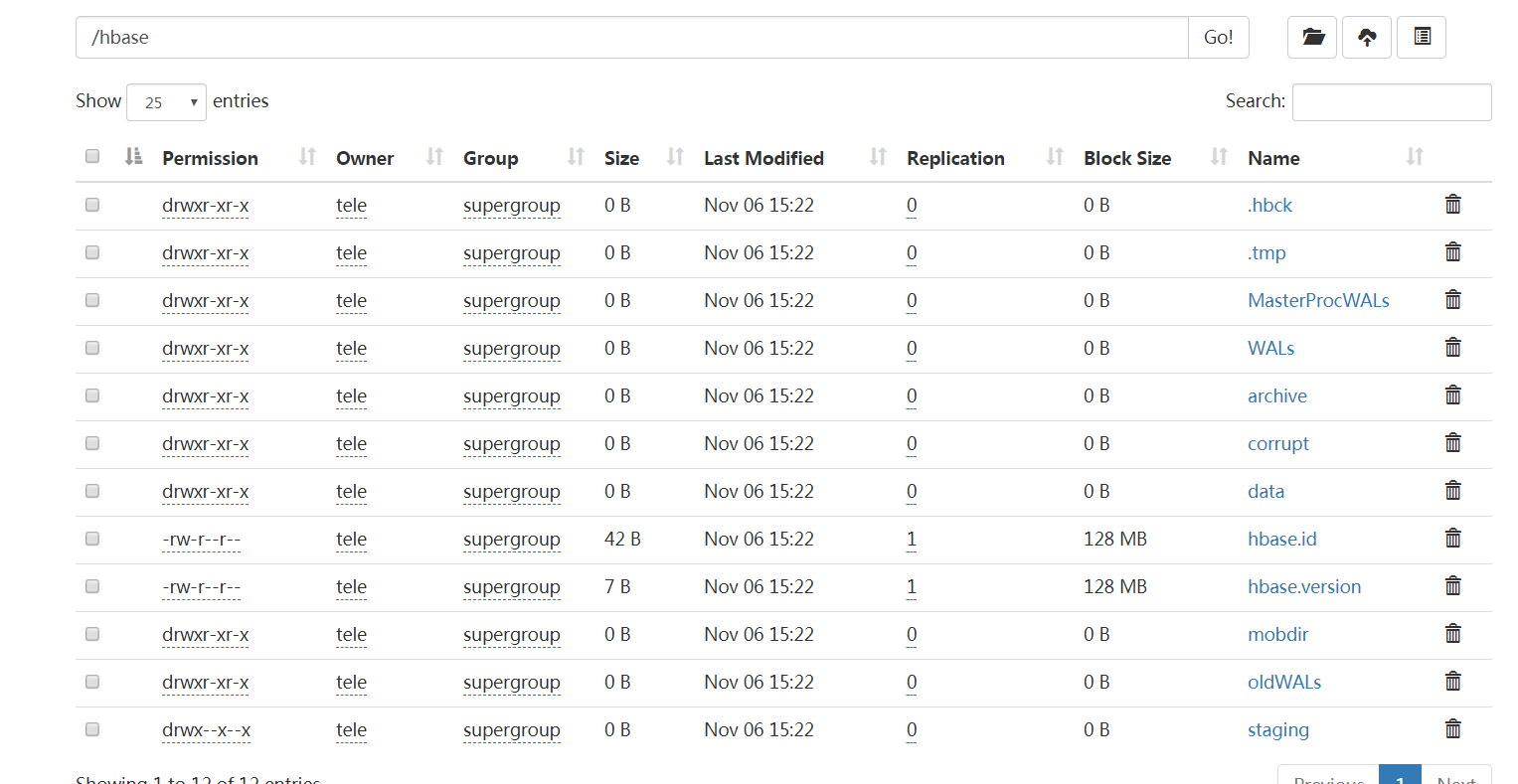
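With the configuration above, hadoop002 should show HMaster, HRegionServer, QuorumPeerMain and NameNode, while hadoop003 and hadoop004 should show HRegionServer and QuorumPeerMain, plus whatever other Hadoop daemons your Hadoop setup places there. The hypothetical helper below reads `jps` output on stdin and reports any expected process that is missing.

```bash
# check_jps NAME...: read `jps` output on stdin; fail if any NAME is not running.
check_jps() {
  local jps_out name rc=0
  jps_out=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the class name exactly
    if printf '%s\n' "$jps_out" | awk '{print $2}' | grep -qx "$name"; then
      echo "running: $name"
    else
      echo "MISSING: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on hadoop002: `jps | check_jps HMaster HRegionServer QuorumPeerMain NameNode`; on the other two nodes: `jps | check_jps HRegionServer QuorumPeerMain`.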

Check HDFS; the /hbase directory (hbase.rootdir) should now exist:

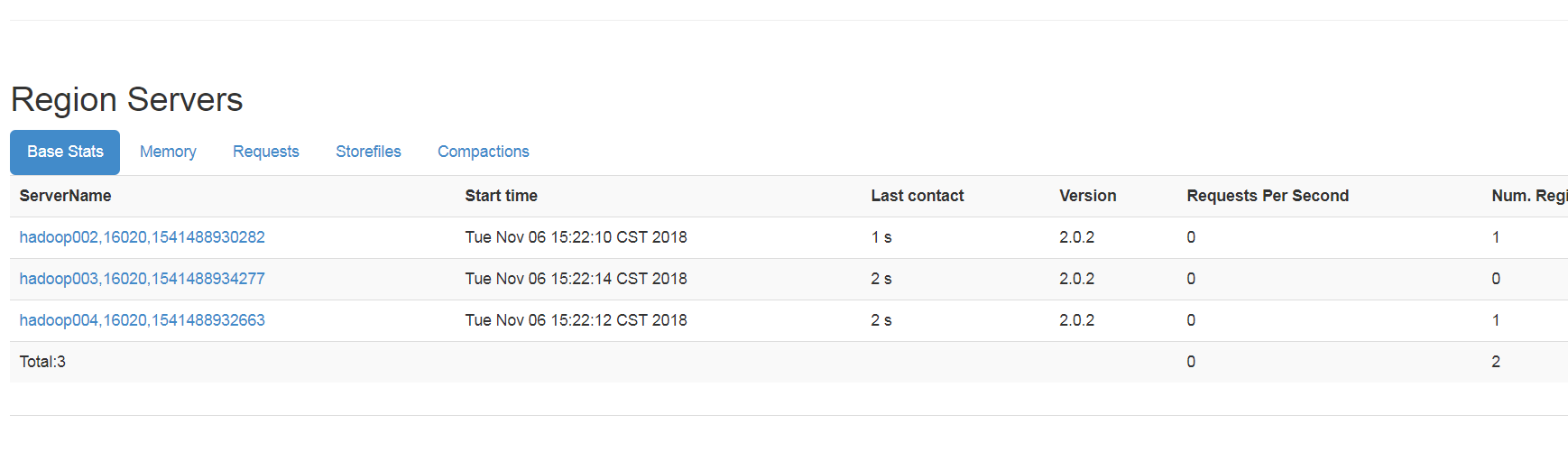

The web UI listens on port 16010:

http://hadoop002:16010

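PS: I originally used Hadoop 3.1.1 with HBase 2.1.1, but kept running into problems. After checking version compatibility, I rebuilt the cluster with Hadoop 2.7.7 and downloaded HBase 2.0.2 instead, and that deployment succeeded.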

For version compatibility, see: https://blog.csdn.net/vtopqx/article/details/77882491