在HDFS中,提供了fsck命令,用于检查HDFS上文件和目录的健康状态、获取文件的block信息和位置信息等。

我们在master机器上执行hdfs fsck就可以看到这个命令的用法。

[hadoop-twq@master ~]$ hdfs fsck Usage: hdfs fsck <path> [-list-corruptfileblocks | [-move | -delete | -openforwrite] [-files [-blocks [-locations | -racks]]]] [-includeSnapshots] [-storagepolicies] [-blockId <blk_Id>] <path> start checking from this path -move move corrupted files to /lost+found -delete delete corrupted files -files print out files being checked -openforwrite print out files opened for write -includeSnapshots include snapshot data if the given path indicates a snapshottable directory or there are snapshottable directories under it -list-corruptfileblocks print out list of missing blocks and files they belong to -blocks print out block report -locations print out locations for every block -racks print out network topology for data-node locations -storagepolicies print out storage policy summary for the blocks -blockId print out which file this blockId belongs to, locations (nodes, racks) of this block, and other diagnostics info (under replicated, corrupted or not, etc)

查看文件目录的健康信息

执行如下的命令:

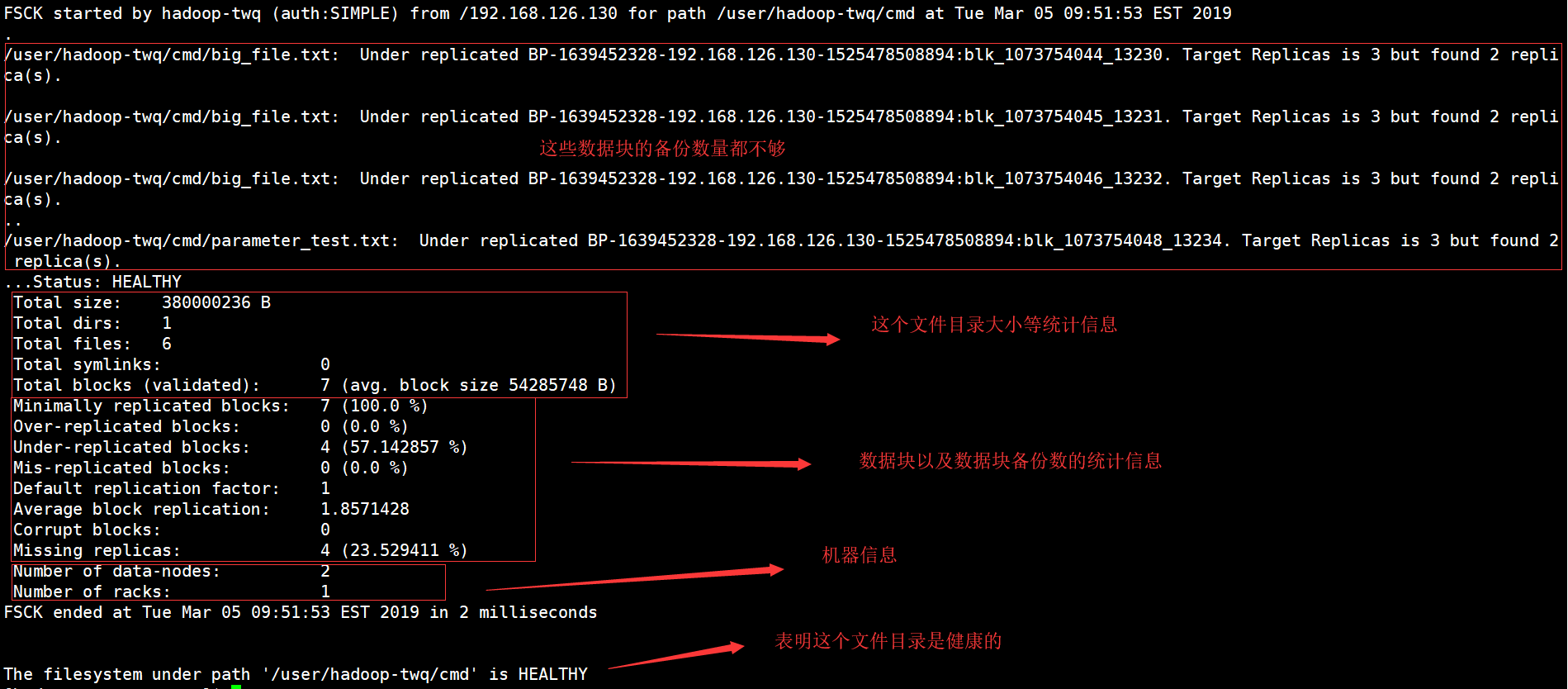

hdfs fsck /user/hadoop-twq/cmd

可以查看/user/hadoop-twq/cmd目录的健康信息:

其中有一个比较重要的信息,就是Corrupt blocks,表示损坏的数据块的数量

查看文件中损坏的块 (-list-corruptfileblocks)

[hadoop-twq@master ~]$ hdfs fsck /user/hadoop-twq/cmd -list-corruptfileblocks Connecting to namenode via http://master:50070/fsck?ugi=hadoop-twq&listcorruptfileblocks=1&path=%2Fuser%2Fhadoop-twq%2Fcmd The filesystem under path '/user/hadoop-twq/cmd' has 0 CORRUPT files

上面的命令可以找到某个目录下面的损坏的数据块,但是上面表示没有看到坏的数据块

损坏文件的处理

将损坏的文件移动至/lost+found目录 (-move)

hdfs fsck /user/hadoop-twq/cmd -move

删除有损坏数据块的文件 (-delete)

hdfs fsck /user/hadoop-twq/cmd -delete

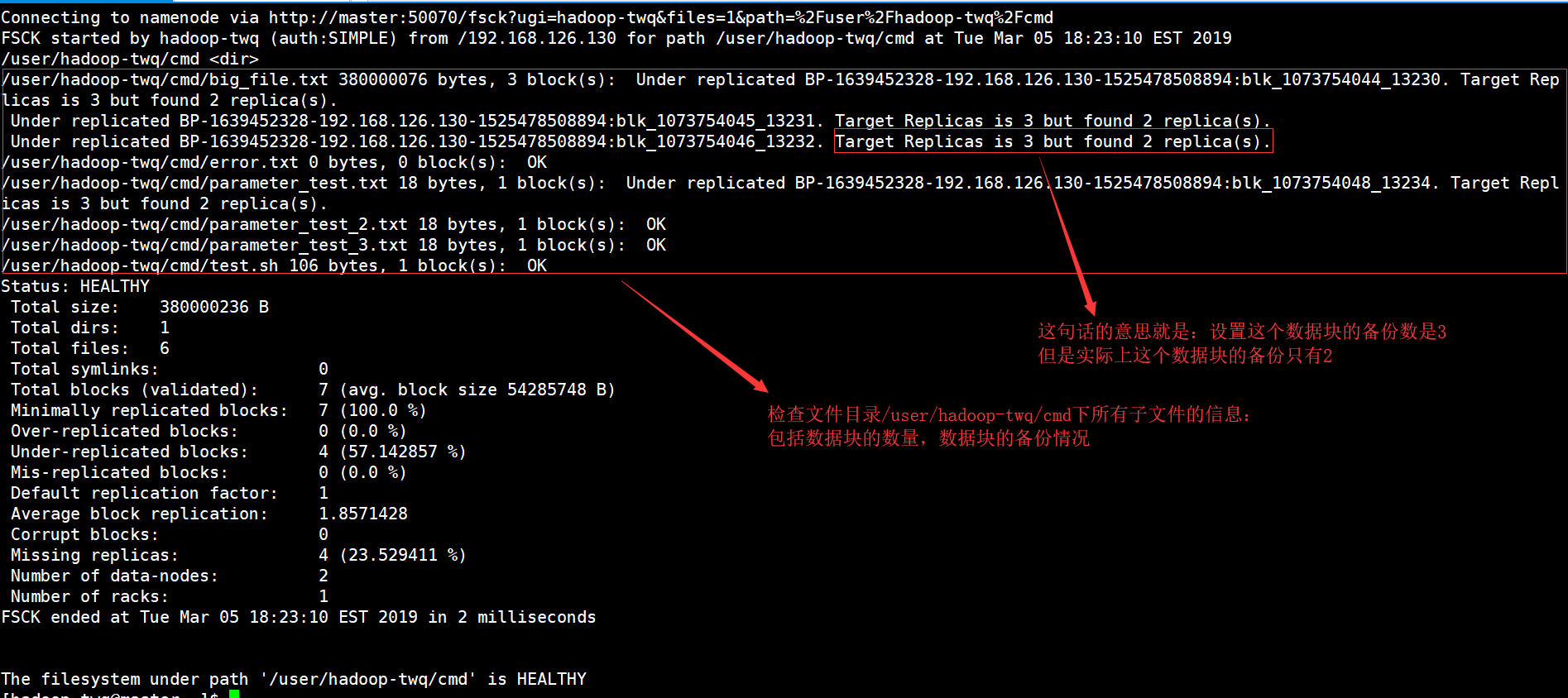

检查并列出所有文件状态(-files)

执行如下的命令:

hdfs fsck /user/hadoop-twq/cmd -files

显示结果如下:

上面的命令可以检查指定路径下的所有文件的信息,包括:数据块的数量以及数据块的备份情况

检查并打印正在被打开执行写操作的文件(-openforwrite)

执行下面的命令可以检查指定路径下面的哪些文件正在执行写操作:

hdfs fsck /user/hadoop-twq/cmd -openforwrite

打印文件的Block报告(-blocks)

执行下面的命令,可以查看一个指定文件的所有的Block详细信息,需要和-files一起使用:

hdfs fsck /user/hadoop-twq/cmd/big_file.txt -files -blocks

结果如下:

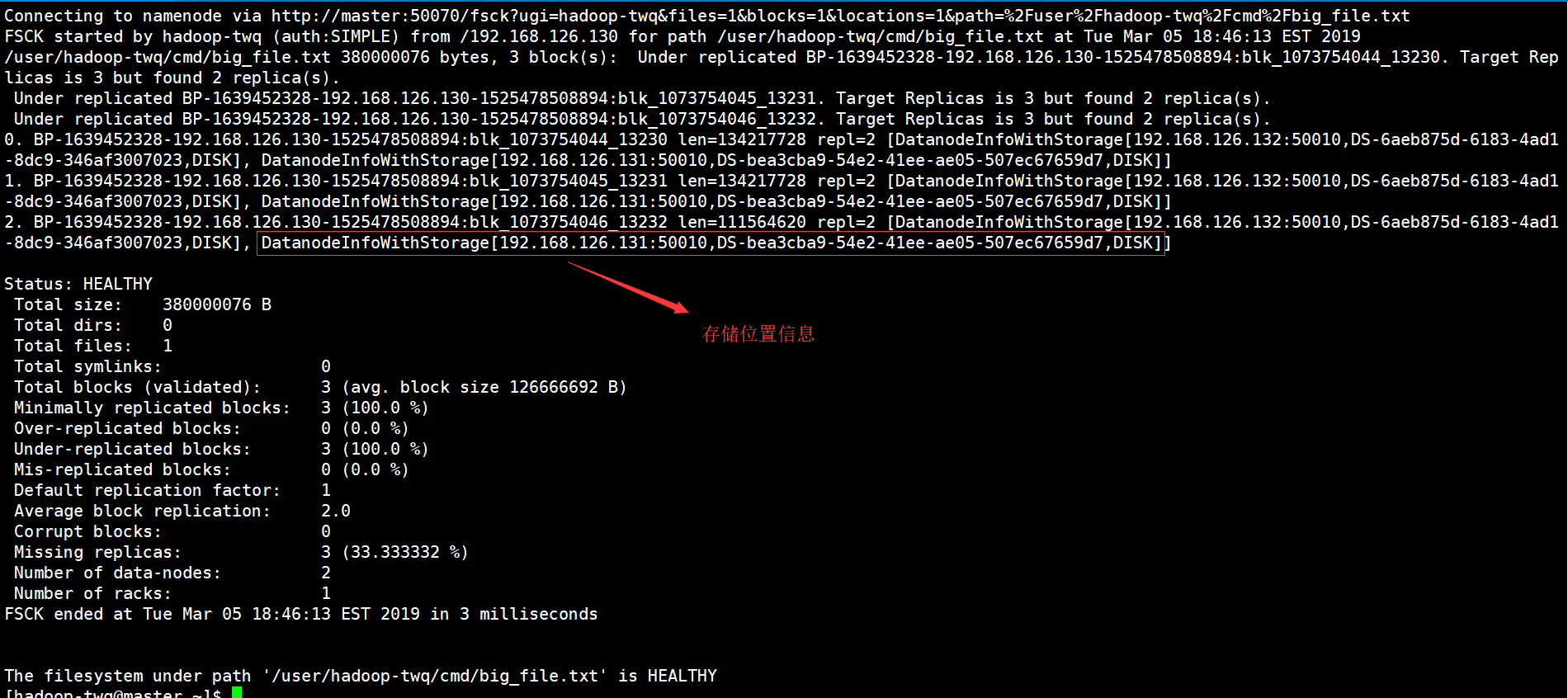

如果,我们在上面的命令再加上-locations的话,就是表示还需要打印每一个数据块的位置信息,如下命令:

hdfs fsck /user/hadoop-twq/cmd/big_file.txt -files -blocks -locations

结果如下:

如果,我们在上面的命令再加上-racks的话,就是表示还需要打印每一个数据块的位置所在的机架信息,如下命令:

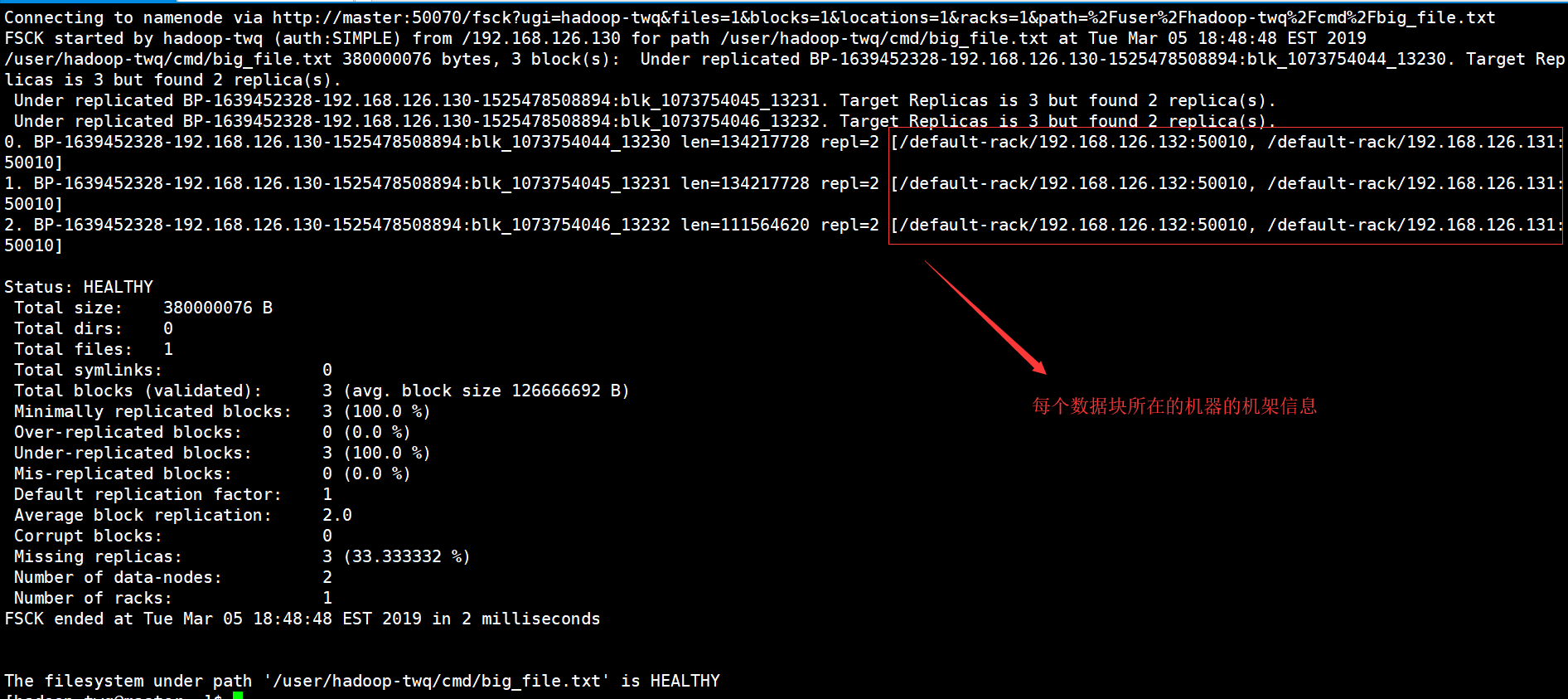

hdfs fsck /user/hadoop-twq/cmd/big_file.txt -files -blocks -locations -racks

结果如下:

hdfs fsck的使用场景

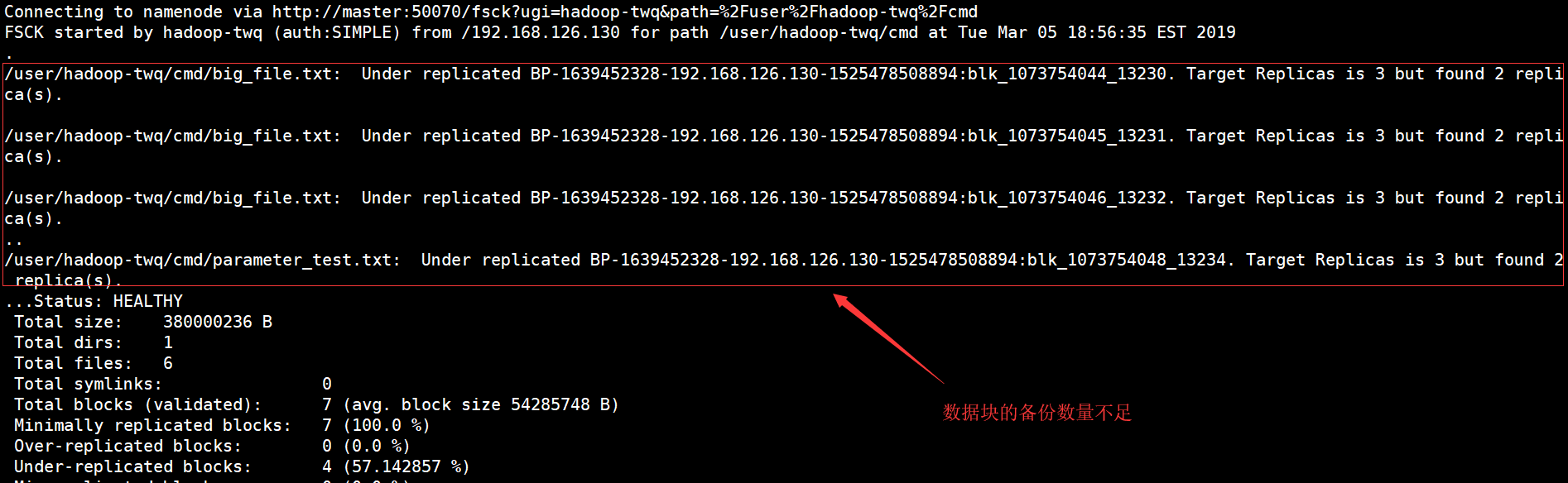

场景一

当我们执行如下的命令:

hdfs fsck /user/hadoop-twq/cmd

可以查看/user/hadoop-twq/cmd目录的健康信息:

我们可以看出,有两个文件的数据块的备份数量不足,这个我们可以通过如下的命令,重新设置两个文件数据块的备份数:

## 将文件big_file.txt对应的数据块备份数设置为1 hadoop fs -setrep -w 1 /user/hadoop-twq/cmd/big_file.txt ## 将文件parameter_test.txt对应的数据块备份数设置为1 hadoop fs -setrep -w 1 /user/hadoop-twq/cmd/parameter_test.txt

上面命令中 -w 参数表示等待备份数到达指定的备份数,加上这个参数后再执行的话,则需要比较长的时间

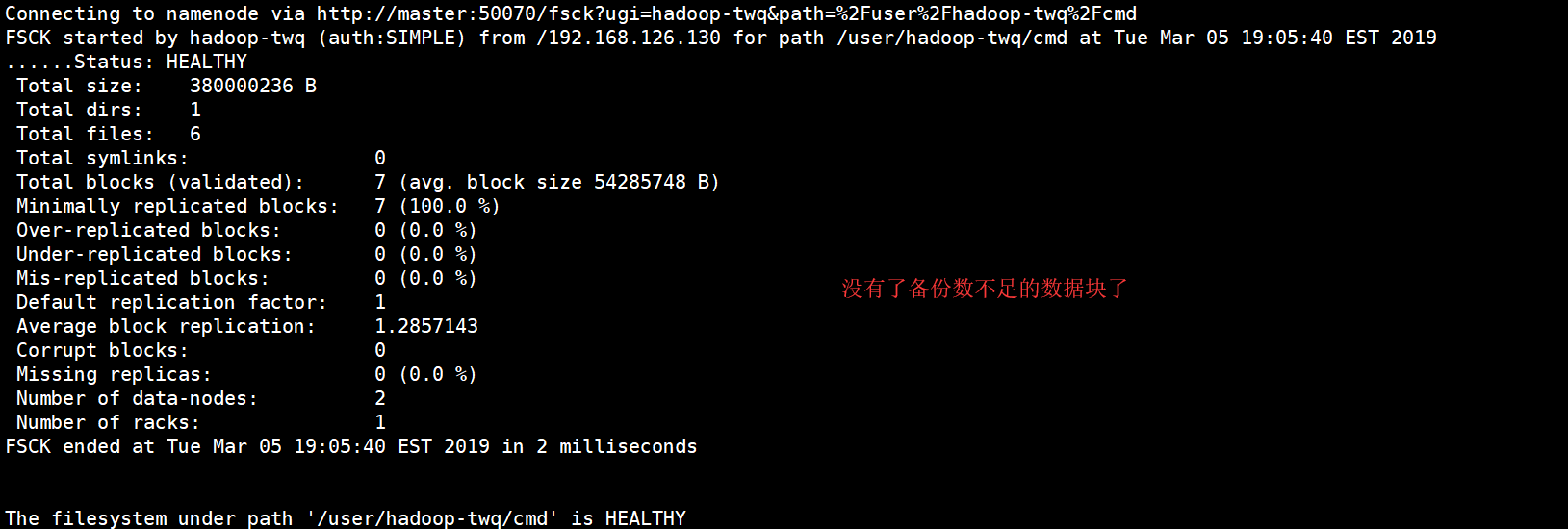

执行完上面的命令后,我们再来执行下面的命令:

hdfs fsck /user/hadoop-twq/cmd

结果如下:

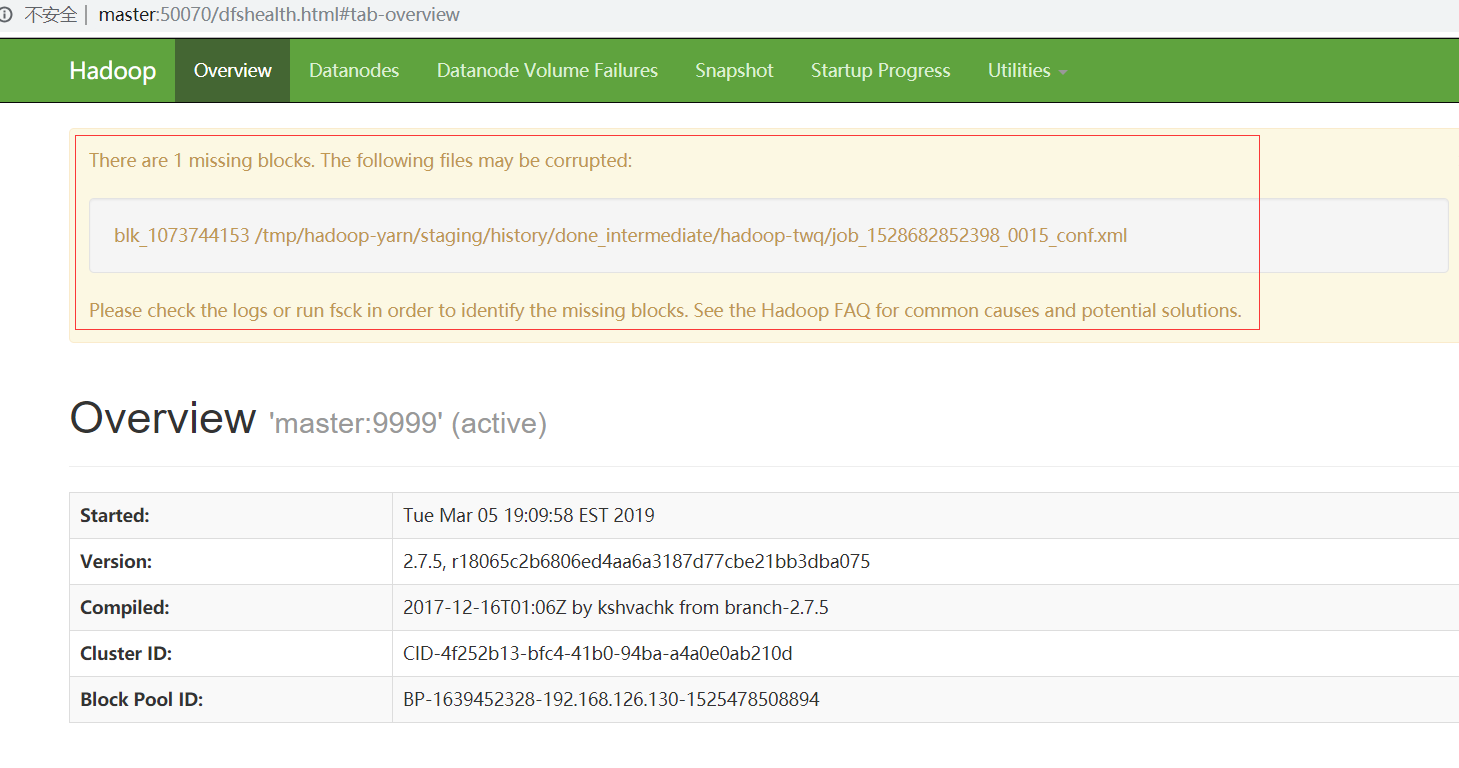

场景二

当我们访问HDFS的WEB UI的时候,出现了如下的警告信息:

表明有一个数据块丢失了,这个时候我们执行下面的命令来确定是哪一个文件的数据块丢失了:

[hadoop-twq@master ~]$ hdfs fsck / -list-corruptfileblocks Connecting to namenode via http://master:50070/fsck?ugi=hadoop-twq&listcorruptfileblocks=1&path=%2F The list of corrupt files under path '/' are: blk_1073744153 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop-twq/job_1528682852398_0015_conf.xml The filesystem under path '/' has 1 CORRUPT files

发现是数据块blk_1073744153丢失了,这个数据块是淑文文件/tmp/hadoop-yarn/staging/history/done_intermediate/hadoop-twq/job_1528682852398_0015_conf.xml的。

如果出现这种场景是因为在DataNode中没有这个数据块,但是在NameNode的元数据中有这个数据块的信息,我们可以执行下面的命令,把这些没用的数据块信息删除掉,如下:

[hadoop-twq@master ~]$ hdfs fsck /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop-twq/ -delete Connecting to namenode via http://master:50070/fsck?ugi=hadoop-twq&delete=1&path=%2Ftmp%2Fhadoop-yarn%2Fstaging%2Fhistory%2Fdone_intermediate%2Fhadoop-twq FSCK started by hadoop-twq (auth:SIMPLE) from /192.168.126.130 for path /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop-twq at Tue Mar 05 19:18:00 EST 2019 .................................................................................................... .. /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop-twq/job_1528682852398_0015_conf.xml: CORRUPT blockpool BP-1639452328-192.168.126.130-1525478508894 block blk_1073744153 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop-twq/job_1528682852398_0015_conf.xml: MISSING 1 blocks of total size 220262 B................................................................................................... .................................................................................................... ........................Status: CORRUPT Total size: 28418833 B Total dirs: 1 Total files: 324 Total symlinks: 0 Total blocks (validated): 324 (avg. block size 87712 B) ******************************** UNDER MIN REPL'D BLOCKS: 1 (0.30864197 %) dfs.namenode.replication.min: 1 CORRUPT FILES: 1 MISSING BLOCKS: 1 MISSING SIZE: 220262 B CORRUPT BLOCKS: 1 ******************************** Minimally replicated blocks: 323 (99.69136 %) Over-replicated blocks: 0 (0.0 %) Under-replicated blocks: 0 (0.0 %) Mis-replicated blocks: 0 (0.0 %) Default replication factor: 1 Average block replication: 0.99691355 Corrupt blocks: 1 Missing replicas: 0 (0.0 %) Number of data-nodes: 2 Number of racks: 1 FSCK ended at Tue Mar 05 19:18:01 EST 2019 in 215 milliseconds

然后执行:

[hadoop-twq@master ~]$ hdfs fsck / -list-corruptfileblocks Connecting to namenode via http://master:50070/fsck?ugi=hadoop-twq&listcorruptfileblocks=1&path=%2F The filesystem under path '/' has 0 CORRUPT files

丢失的数据块没有的,被删除了。我们也可以刷新WEB UI,也没有了警告信息: