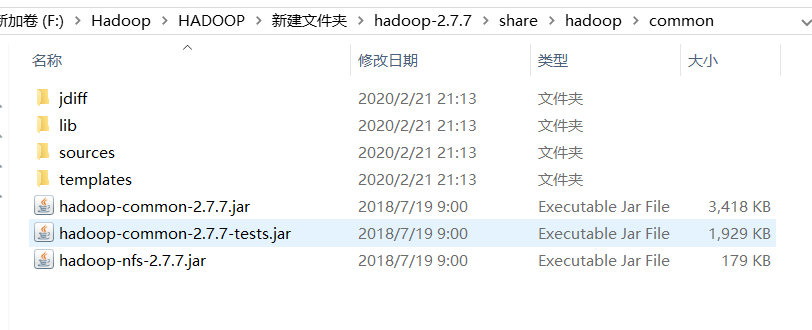

1. Import the jar packages

Extract hadoop-2.7.7.tar.gz and locate the three jar packages shown in the figure under the following path:

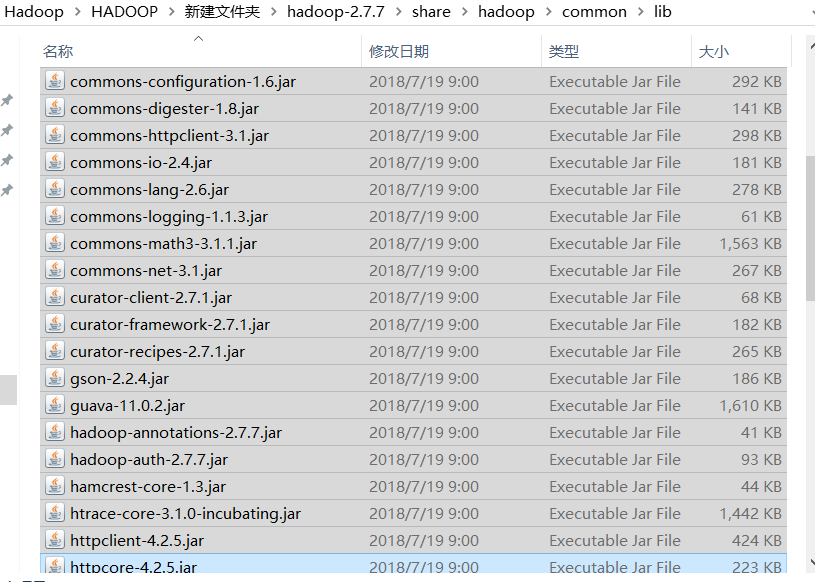

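All the jar packages under the path shown in the figure below:

The following three jar packages are also needed:

All the jar packages under the path shown in the figure below:

Add all of the jar packages above to the project.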

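2. View file information

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.junit.Test; // assumes JUnit 4

    @Test
    public void connectHDFS() {
        Configuration conf = new Configuration(); // create a client configuration
        conf.set("fs.defaultFS", "hdfs://192.168.0.51:9000"); // server address and port
        try {
            FileSystem fileSystem = FileSystem.get(conf);
            FileStatus fileStatus = fileSystem.getFileStatus(new Path("/upload/hello.txt"));
            System.out.println(fileStatus.isFile());      // whether it is a file
            System.out.println(fileStatus.isDirectory()); // whether it is a folder
            System.out.println(fileStatus.getPath());     // full path of the file
            System.out.println(fileStatus.getLen());      // file size in bytes
            fileSystem.close(); // close the connection
        } catch (IOException e) {
            e.printStackTrace();
        }
    }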

3. Rename a file

    @Test
    public void rename() {
        Configuration configuration = new Configuration();
        configuration.set("fs.defaultFS", "hdfs://192.168.0.51:9000"); // server address and port
        // Tell Hadoop to access HDFS as the user root
        System.setProperty("HADOOP_USER_NAME", "root");
        try {
            FileSystem fileSystem = FileSystem.get(configuration);
            boolean renamed = fileSystem.rename(
                    new Path("/upload/jdk-8u221-linux-x64.tar.gz"),
                    new Path("/upload/jdk1.8.tar.gz"));
            System.out.println(renamed ? "Rename succeeded!" : "Rename failed!");
            fileSystem.close(); // close the connection
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
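The HADOOP_USER_NAME setting matters only when the cluster uses simple (non-Kerberos) authentication. In that mode the client, to the best of my understanding, looks first at an environment variable named HADOOP_USER_NAME, then at the Java system property of the same name, and finally falls back to the operating system login user. The sketch below imitates that lookup order with plain JDK classes so the precedence is easy to see. `UserNameLookup` and `resolve` are hypothetical names for illustration and are not Hadoop APIs.

```java
import java.util.Map;
import java.util.Properties;

// Hypothetical helper, not part of Hadoop: imitates the assumed lookup order
// environment variable -> system property -> fallback user.
class UserNameLookup {
    static final String KEY = "HADOOP_USER_NAME";

    static String resolve(Map<String, String> env, Properties props, String fallback) {
        String user = env.get(KEY);            // 1. the environment variable wins
        if (user == null) {
            user = props.getProperty(KEY);     // 2. then the system property
        }
        return user != null ? user : fallback; // 3. otherwise the fallback user
    }

    public static void main(String[] args) {
        System.setProperty(KEY, "root");
        String user = resolve(System.getenv(), System.getProperties(),
                System.getProperty("user.name"));
        System.out.println("effective user: " + user);
    }
}
```

If that ordering holds, an environment variable set in the IDE or shell would override the property set inside the test method, which is worth checking when a permission error persists.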

4. Create a folder

    @Test
    public void mkdir() {
        Configuration configuration = new Configuration();
        configuration.set("fs.defaultFS", "hdfs://192.168.0.51:9000");
        // Tell Hadoop to access HDFS as the user root
        System.setProperty("HADOOP_USER_NAME", "root");
        try {
            FileSystem fileSystem = FileSystem.get(configuration);
            fileSystem.mkdirs(new Path("/user"));
            fileSystem.mkdirs(new Path("/bailiban/yw")); // missing parent folders are created too
            fileSystem.close(); // close the connection
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

5. Upload a file

    import java.io.FileInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;

    @Test
    public void upload() {
        Configuration configuration = new Configuration();
        configuration.set("fs.defaultFS", "hdfs://192.168.0.51:9000");
        // Tell Hadoop to access HDFS as the user root
        System.setProperty("HADOOP_USER_NAME", "root");
        try {
            FileSystem fileSystem = FileSystem.get(configuration);
            FSDataOutputStream out = fileSystem.create(new Path("/doupo.txt")); // target file on HDFS
            // Local source file; the backslash must be escaped in a Java string literal
            FileInputStream in = new FileInputStream("F:\\doupo.txt");
            byte[] b = new byte[1024];
            int len;
            while ((len = in.read(b)) != -1) {
                out.write(b, 0, len); // write only the bytes actually read
            }
            in.close();
            out.close();
            fileSystem.close(); // close the connection
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
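The upload loop closes its streams only when nothing goes wrong, so an exception in the middle of the copy leaves them open. A minimal sketch of the same read/write loop wrapped in try-with-resources follows. It uses only `java.io`, so it runs without a cluster; `StreamCopy` and `copy` are hypothetical names introduced here.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: the same buffered copy loop, with both streams closed automatically.
class StreamCopy {
    static long copy(InputStream source, OutputStream target) throws IOException {
        long total = 0;
        // try-with-resources closes both streams even if read or write throws
        try (InputStream in = source; OutputStream out = target) {
            byte[] buffer = new byte[1024];
            int len;
            while ((len = in.read(buffer)) != -1) {
                out.write(buffer, 0, len); // write only the bytes actually read
                total += len;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long copied = copy(
                new ByteArrayInputStream("doupo".getBytes(StandardCharsets.UTF_8)), out);
        System.out.println(copied + " bytes copied");
    }
}
```

Since the HDFS streams are ordinary `InputStream` and `OutputStream` subclasses, a call such as `copy(new FileInputStream("F:\\doupo.txt"), fileSystem.create(new Path("/doupo.txt")))` should be able to stand in for the loop and the two `close()` calls in the test method.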

6. Download a file

    import java.io.File;
    import java.io.FileOutputStream;
    import org.apache.hadoop.fs.FSDataInputStream;

    @Test
    public void download() {
        Configuration configuration = new Configuration();
        configuration.set("fs.defaultFS", "hdfs://192.168.0.51:9000");
        // Tell Hadoop to access HDFS as the user root
        System.setProperty("HADOOP_USER_NAME", "root");
        try {
            FileSystem fileSystem = FileSystem.get(configuration);
            FSDataInputStream in = fileSystem.open(new Path("/upload/hello.txt")); // source file on HDFS
            // Local target file; the backslash must be escaped in a Java string literal
            FileOutputStream out = new FileOutputStream(new File("F:\\hello.txt"));
            byte[] b = new byte[1024];
            int len;
            while ((len = in.read(b)) != -1) {
                out.write(b, 0, len); // write only the bytes actually read
            }
            in.close();
            out.close();
            fileSystem.close(); // close the connection
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

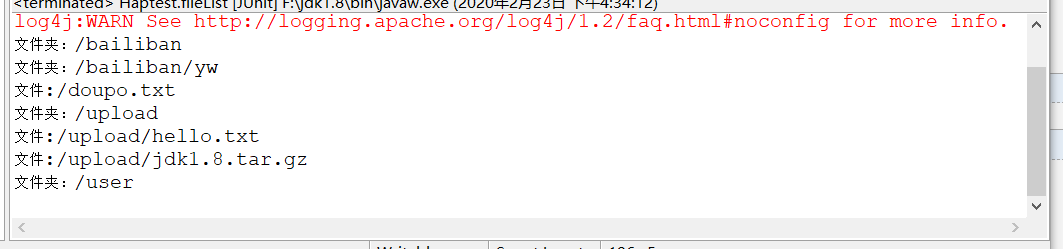

7. Recursively traverse all files and folders

    @Test
    public void fileList() {
        Configuration configuration = new Configuration();
        configuration.set("fs.defaultFS", "hdfs://192.168.0.51:9000");
        // Tell Hadoop to access HDFS as the user root
        System.setProperty("HADOOP_USER_NAME", "root");
        try {
            FileSystem fileSystem = FileSystem.get(configuration);
            FileStatus[] listStatus = fileSystem.listStatus(new Path("/")); // start at the root
            for (FileStatus fileStatus : listStatus) {
                digui(fileStatus, fileSystem);
            }
            fileSystem.close(); // close the connection
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // digui ("recursion"): prints one entry and, if it is a folder, descends into it
    private void digui(FileStatus fileStatus, FileSystem fileSystem) {
        // Path without the hdfs://host:port prefix; unlike splitting the string
        // on "9000", this also works when a file name contains "9000"
        String name = fileStatus.getPath().toUri().getPath();
        if (fileStatus.isDirectory()) {
            System.out.println("Folder: " + name);
            try {
                for (FileStatus child : fileSystem.listStatus(fileStatus.getPath())) {
                    digui(child, fileSystem);
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        } else {
            System.out.println("File: " + name);
        }
    }
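Printing each entry without the hdfs://host:port prefix comes down to taking the path component of a URI. Splitting the full string on the port number is fragile, because "9000" may also appear inside a file name. A small sketch of the extraction using only `java.net.URI` is shown here; `HdfsPaths` and `pathOnly` are hypothetical names, and the second URI is a made-up example chosen to show the edge case.

```java
import java.net.URI;

// Hypothetical helper: returns only the path component of a full HDFS URI string.
class HdfsPaths {
    static String pathOnly(String fullUri) {
        // URI parsing separates scheme, host and port from the path,
        // so digits in the file name cannot be mistaken for the port
        return URI.create(fullUri).getPath();
    }

    public static void main(String[] args) {
        System.out.println(pathOnly("hdfs://192.168.0.51:9000/upload/hello.txt"));
        // prints /upload/hello.txt
        System.out.println(pathOnly("hdfs://192.168.0.51:9000/backup/port9000.log"));
        // prints /backup/port9000.log, where split("9000")[1] would give /backup/port
    }
}
```

Inside the Hadoop code the equivalent call is `fileStatus.getPath().toUri().getPath()`, which avoids building the string by hand.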