    torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros')

Parameters

- in_channels (int) – Number of channels in the input image
- out_channels (int) – Number of channels produced by the convolution
- kernel_size (int or tuple) – Size of the convolving kernel
- stride (int or tuple, optional) – Stride of the convolution. Default: 1
- padding (int or tuple, optional) – Zero-padding added to both sides of the input. Default: 0
- padding_mode (string, optional) – 'zeros', 'reflect', 'replicate' or 'circular'. Default: 'zeros'
- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1
- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1
- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True
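A minimal sketch of how some of the less obvious parameters above behave. The tensor sizes and variable names here are chosen only for illustration; they do not come from the original examples.

```python
import torch
import torch.nn as nn

# Illustrative input: 1 image, 4 channels, 8x8 pixels (sizes are an assumption)
x = torch.randn(1, 4, 8, 8)

# groups=2 splits the 4 input channels into 2 groups of 2 channels each,
# so every filter only spans in_channels / groups = 2 channels.
grouped = nn.Conv2d(4, 8, kernel_size=3, padding=1, groups=2)
print(grouped.weight.shape)  # torch.Size([8, 2, 3, 3])

# dilation=2 spreads the 3x3 kernel over a 5x5 window;
# padding=2 then keeps the 8x8 spatial size.
dilated = nn.Conv2d(4, 8, kernel_size=3, padding=2, dilation=2)
print(dilated(x).shape)  # torch.Size([1, 8, 8, 8])

# bias=False removes the learnable bias term entirely.
no_bias = nn.Conv2d(4, 8, kernel_size=3, bias=False)
print(no_bias.bias)  # None
```

Both in_channels and out_channels must be divisible by groups, otherwise the constructor raises an error.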

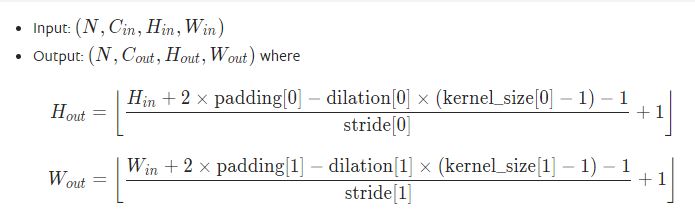

Shape
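For an input of shape (N, C_in, H_in, W_in) the output has shape (N, C_out, H_out, W_out), where each spatial size is floor((size + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1). The helper below is a small sketch of that formula for one dimension; the name `conv2d_output_size` is hypothetical and not part of PyTorch.

```python
def conv2d_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension of a 2D convolution.

    Hypothetical helper (not a PyTorch function) that applies the
    Conv2d shape formula with integer floor division.
    """
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# 3x3 kernel, stride 1, padding 1: spatial size is preserved
print(conv2d_output_size(112, 3, stride=1, padding=1))  # 112

# 3x3 kernel, stride 2, padding 1: spatial size is halved
print(conv2d_output_size(224, 3, stride=2, padding=1))  # 112
```

When kernel_size, stride, padding, or dilation is given as a tuple, the same formula applies separately to height and width with the corresponding tuple element.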

Examples

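[32, 3, 112, 112] -> [32, 64, 112, 112]

    import torch
    import torch.nn as nn

    # Batch of 32 three-channel images, 112x112 each
    x = torch.randn(32, 3, 112, 112)
    # A 3x3 kernel with stride 1 and padding 1 keeps the spatial size
    conv2d = nn.Conv2d(3, 64, 3, stride=1, padding=1, bias=False)
    print(conv2d(x).size())  # torch.Size([32, 64, 112, 112])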

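[32, 3, 224, 224] -> [32, 64, 112, 112]

    import torch
    import torch.nn as nn

    # Batch of 32 three-channel images, 224x224 each
    x = torch.randn(32, 3, 224, 224)
    # A 3x3 kernel with stride 2 and padding 1 halves the spatial size
    conv2d = nn.Conv2d(3, 64, 3, stride=2, padding=1, bias=False)
    print(conv2d(x).size())  # torch.Size([32, 64, 112, 112])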