- Goal: scrape Zhihu user information

Spider source code:

```python
# -*- coding: utf-8 -*-
import json

import scrapy

from zhihuuser.items import ZhihuItem


class ZhihuSpider(scrapy.Spider):
    name = 'zhihu'
    allowed_domains = ['www.zhihu.com']
    start_urls = ['http://www.zhihu.com/']

    # URL templates for the user-detail and followee-list endpoints
    user_url = 'https://www.zhihu.com/api/v4/members/{user}?include={include}'
    follows_url = 'https://www.zhihu.com/api/v4/members/{user}/followees?include={include}&offset={offset}&limit={limit}'

    start_user = 'excited-vczh'
    users_qurey = 'allow_message%2Cis_followed%2Cis_following%2Cis_org%2Cis_blocking%2Cemployments%2Canswer_count%2Cfollower_count%2Carticles_count%2Cgender%2Cbadge%5B%3F(type%3Dbest_answerer)%5D.topics'
    follows_qurey = 'data[*].answer_count,articles_count,gender,follower_count,is_followed,is_following,badge[?(type=best_answerer)].topics'

    def start_requests(self):
        # Request the start user's profile and the first page of their followees
        yield scrapy.Request(self.user_url.format(user=self.start_user, include=self.users_qurey), self.parse_user)
        yield scrapy.Request(self.follows_url.format(user=self.start_user, include=self.follows_qurey, limit=20, offset=0), self.parse_follows)

    def parse_user(self, response):
        result = json.loads(response.text)
        item = ZhihuItem()
        # Copy only the fields that the item declares
        for field in item.fields:
            if field in result.keys():
                item[field] = result.get(field)
        yield item

    def parse_follows(self, response):
        results = json.loads(response.text)

        # Request the profile of every followee on this page
        if 'data' in results.keys():
            for result in results.get('data'):
                yield scrapy.Request(self.user_url.format(user=result.get('url_token'), include=self.users_qurey), self.parse_user)

        # Follow the pagination link until the last page
        if 'paging' in results.keys() and results.get('paging').get('is_end') == False:
            next_page = results.get('paging').get('next')
            yield scrapy.Request(next_page, self.parse_follows)
```

In settings.py, set a User-Agent header and turn off robots.txt compliance.
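A minimal sketch of those two settings, assuming a standard Scrapy project layout. `USER_AGENT` and `ROBOTSTXT_OBEY` are Scrapy's own setting names; the User-Agent string is only a placeholder, not the value used in this project.

```python
# settings.py (fragment)

# Send a browser-like User-Agent header with every request.
# Placeholder value (an assumption): substitute the string of a real browser.
USER_AGENT = (
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
    'AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36'
)

# Do not fetch or honor robots.txt before crawling.
ROBOTSTXT_OBEY = False
```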

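Only name and id are collected. The AJAX endpoint I found is not the same as the one in the video: there, the response came with almost everything preloaded, while the endpoint I found returns very little information. So I simply scraped id and name and treated it as a practice exercise.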

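The logic is recursive: scrape the information of the people a user follows, then scrape the people that those "followed people" follow in turn. However, after roughly 1,700 records the IP gets banned.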
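The recursive idea can be sketched without Scrapy as a traversal of a follow graph. Everything here is made up for illustration: the `FOLLOWS` graph, the `crawl` function, and the `max_users` cap are hypothetical names, and the `seen` set plays the role that Scrapy's default duplicate-request filter plays in a real spider.

```python
from collections import deque

# Hypothetical follow graph: user -> users they follow.
FOLLOWS = {
    'a': ['b', 'c'],
    'b': ['c', 'd'],
    'c': ['a'],
    'd': [],
}


def crawl(start_user, follows, max_users=None):
    """Visit a user, then the users they follow, then those users' followees."""
    seen = {start_user}            # users already scheduled, to avoid loops
    queue = deque([start_user])
    visited = []
    while queue:
        user = queue.popleft()
        visited.append(user)       # "scrape" this user
        if max_users is not None and len(visited) >= max_users:
            break                  # stop early, e.g. to stay under a ban threshold
        for followee in follows.get(user, []):
            if followee not in seen:
                seen.add(followee)
                queue.append(followee)
    return visited


print(crawl('a', FOLLOWS))               # ['a', 'b', 'c', 'd']
print(crawl('a', FOLLOWS, max_users=2))  # ['a', 'b']
```

In the spider as listed, `parse_follows` is only requested for the start user and its later pages. For the crawl to continue past that user's followees, `parse_user` would presumably also need to yield a followees request for each user it parses.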

- Small things I learned:

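1) If the spider defines start_requests(self), Scrapy uses it to generate the initial requests, so the crawl starts from start_requests() rather than from start_urls.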

2) About the json library

json.loads decodes a JSON-encoded string into a Python object

Get all of the object's keys: result.keys()

Get the value for a given key: result.get('key_name')
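A small example of the three calls above. The JSON text is invented for illustration and is not a real Zhihu response.

```python
import json

# Made-up response body, shaped loosely like a user record.
text = '{"id": "abc123", "name": "example-user", "gender": 1}'

result = json.loads(text)        # JSON string -> Python dict

print(list(result.keys()))       # ['id', 'name', 'gender']
print(result.get('name'))        # example-user
print(result.get('headline'))    # None (missing key, no exception raised)
```

Because `get` returns `None` for a missing key instead of raising `KeyError`, it is convenient when a response may omit fields.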

3) Using str.format to fill in URL templates
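For example, the spider's URL templates are filled in with named placeholders. The templates are the ones from the spider; the shortened `include` value is only an assumption to keep the example readable.

```python
user_url = 'https://www.zhihu.com/api/v4/members/{user}?include={include}'
follows_url = ('https://www.zhihu.com/api/v4/members/{user}/followees'
               '?include={include}&offset={offset}&limit={limit}')

# Shortened include value, for illustration only.
include = 'answer_count,gender'

profile = user_url.format(user='excited-vczh', include=include)
page = follows_url.format(user='excited-vczh', include=include, offset=0, limit=20)

print(profile)
print(page)
```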

4)

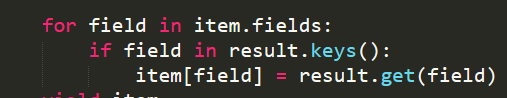

Here, iterating over item.fields yields the names of all the fields declared on the item.
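The filtering loop in `parse_user` can be sketched in plain Python. To keep it runnable without Scrapy, a plain dict stands in for `item.fields` (in Scrapy it is likewise a dict keyed by field name), and the `result` data is made up.

```python
# Stand-in for item.fields: keys are the declared field names.
# In a real project these would come from ZhihuItem's scrapy.Field() declarations.
fields = {'id': {}, 'name': {}}

# Made-up parsed response with one extra key the item does not declare.
result = {'id': 'abc123', 'name': 'example-user', 'gender': 1}

item = {}
for field in fields:             # iterating a dict yields its keys
    if field in result:
        item[field] = result[field]

print(item)  # {'id': 'abc123', 'name': 'example-user'}
```

Keys that the item does not declare, such as `gender` here, are simply skipped.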

- Finally, if you would like the video tutorial for learning web scraping, leave a comment below and I will send it to you.