import os

import numpy as np

import matplotlib.pyplot as plt

from skimage import color,data,transform,io

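# Each subfolder of the Training directory holds the images of one fruit class.
labelList = os.listdir(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Training")

# Collect the image file names of every class.
allFruitsImageName = []
for i in range(len(labelList)):
    allFruitsImageName.append(os.listdir(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Training", labelList[i])))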

# Sort the file names of each class: plain "<n>_100.jpg" names first,
# then "r_<n>_100.jpg", then "r2_<n>_100.jpg", each group in numeric order.
allsortImageName = []
for i in range(len(allFruitsImageName)):
    oneClass = allFruitsImageName[i]
    nr = []   # numbers taken from "<n>_100.jpg"
    r = []    # numbers taken from "r_<n>_100.jpg"
    r2 = []   # numbers taken from "r2_<n>_100.jpg"
    for k in range(len(oneClass)):
        if oneClass[k].split("_")[0].isdigit():
            nr.append(int(oneClass[k].split("_")[0]))
        else:
            if len(oneClass[k].split("_")[0]) == 1:
                r.append(int(oneClass[k].split("_")[1]))
            else:
                r2.append(int(oneClass[k].split("_")[1]))

    # Rebuild the file names from the sorted numbers.
    sortnr = sorted(nr)
    sortnrImageName = []
    for k in range(len(sortnr)):
        sortnrImageName.append(str(sortnr[k]) + "_100.jpg")

    sortr = sorted(r)
    sortrImageName = []
    for k in range(len(sortr)):
        sortrImageName.append("r_" + str(sortr[k]) + "_100.jpg")

    sortr2 = sorted(r2)
    sortr2ImageName = []
    for k in range(len(sortr2)):
        sortr2ImageName.append("r2_" + str(sortr2[k]) + "_100.jpg")

    sortnrImageName.extend(sortrImageName)
    sortnrImageName.extend(sortr2ImageName)
    allsortImageName.append(sortnrImageName)
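The grouping and sorting done in the loop above can also be expressed as a single sort key. This is only a minimal sketch, assuming the file names follow the `<n>_100.jpg`, `r_<n>_100.jpg`, and `r2_<n>_100.jpg` patterns that the loop handles. The names `fruit_sort_key` and `sort_fruit_names` are hypothetical helpers, not part of the original script.

```python
def fruit_sort_key(file_name):
    """Return (group, number): plain names first, then "r_", then longer prefixes."""
    parts = file_name.split("_")
    if parts[0].isdigit():
        return (0, int(parts[0]))
    if len(parts[0]) == 1:
        return (1, int(parts[1]))
    return (2, int(parts[1]))


def sort_fruit_names(file_names):
    """Sort file names by group and then numerically inside each group."""
    return sorted(file_names, key=fruit_sort_key)


names = ["r_2_100.jpg", "10_100.jpg", "r2_1_100.jpg", "2_100.jpg", "r_10_100.jpg"]
print(sort_fruit_names(names))
```

Unlike the loop, this sketch keeps the original file names instead of rebuilding them from the parsed numbers.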

# Read every training image, convert it to grayscale, and resize it to 64x64.
trainData = []
for i in range(len(allsortImageName)):
    one = []
    for j in range(len(allsortImageName[i])):
        rgb = io.imread(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Training", labelList[i], allsortImageName[i][j]))  # read the image
        gray = color.rgb2gray(rgb)  # convert the color image to grayscale
        dst = transform.resize(gray, (64, 64))  # resize to a resolution of 64x64
        one.append(dst)
    trainData.append(one)

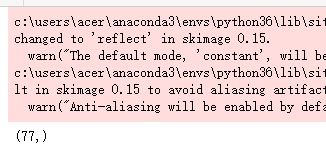

print(np.shape(trainData))

# Save every grayscale image as "<class index>_<image index>.jpg"
# and record its class index as the label.
trainLabelNum = []
for i in range(len(trainData)):
    for j in range(len(trainData[i])):
        trainLabelNum.append(i)
        imageGray = trainData[i][j]
        io.imsave(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\trainGrayImage", str(i) + "_" + str(j) + ".jpg"), imageGray)

np.save(r"F:\MachineLearn\ML-xiaoxueqi\fruits\trainLabelNum", trainLabelNum)
print("Image processing finished")

# Repeat the same steps for the Test directory.
testLabelList = os.listdir(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Test")

testallFruitsImageName = []
for i in range(len(testLabelList)):
    testallFruitsImageName.append(os.listdir(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Test", testLabelList[i])))

# Sort the test file names of each class the same way as the training names.
testallsortImageName = []
for i in range(len(testallFruitsImageName)):
    oneClass = testallFruitsImageName[i]
    nr = []   # numbers taken from "<n>_100.jpg"
    r = []    # numbers taken from "r_<n>_100.jpg"
    r2 = []   # numbers taken from "r2_<n>_100.jpg"
    for k in range(len(oneClass)):
        if oneClass[k].split("_")[0].isdigit():
            nr.append(int(oneClass[k].split("_")[0]))
        else:
            if len(oneClass[k].split("_")[0]) == 1:
                r.append(int(oneClass[k].split("_")[1]))
            else:
                r2.append(int(oneClass[k].split("_")[1]))

    # Rebuild the file names from the sorted numbers.
    sortnr = sorted(nr)
    sortnrImageName = []
    for k in range(len(sortnr)):
        sortnrImageName.append(str(sortnr[k]) + "_100.jpg")

    sortr = sorted(r)
    sortrImageName = []
    for k in range(len(sortr)):
        sortrImageName.append("r_" + str(sortr[k]) + "_100.jpg")

    sortr2 = sorted(r2)
    sortr2ImageName = []
    for k in range(len(sortr2)):
        sortr2ImageName.append("r2_" + str(sortr2[k]) + "_100.jpg")

    sortnrImageName.extend(sortrImageName)
    sortnrImageName.extend(sortr2ImageName)
    testallsortImageName.append(sortnrImageName)

# Read every test image, convert it to grayscale, and resize it to 64x64.
testData = []
for i in range(len(testallsortImageName)):
    one = []
    for j in range(len(testallsortImageName[i])):
        rgb = io.imread(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Test", testLabelList[i], testallsortImageName[i][j]))
        gray = color.rgb2gray(rgb)
        dst = transform.resize(gray, (64, 64))
        one.append(dst)
    testData.append(one)

print(np.shape(testData))

# Save every grayscale test image and record its class index as the label.
testLabelNum = []
for i in range(len(testData)):
    for j in range(len(testData[i])):
        testLabelNum.append(i)
        imageGray = testData[i][j]
        io.imsave(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\testGrayImage", str(i) + "_" + str(j) + ".jpg"), imageGray)

np.save(r"F:\MachineLearn\ML-xiaoxueqi\fruits\testLabelNum", testLabelNum)
print("Image processing finished")

import os

import numpy as np

import matplotlib.pyplot as plt

from skimage import color,data,transform,io

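# Load the saved grayscale images. The names are sorted by the
# (class index, image index) pair parsed from "<class>_<image>.jpg" so that the
# images line up with the saved labels; os.listdir alone does not guarantee that order.
trainDataDirList = sorted(
    os.listdir(r"F:\MachineLearn\ML-xiaoxueqi\fruits\trainGrayImage"),
    key=lambda name: tuple(int(part) for part in os.path.splitext(name)[0].split("_")),
)
trainDataList = []
for i in range(len(trainDataDirList)):
    image = io.imread(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\trainGrayImage", trainDataDirList[i]))
    trainDataList.append(image)
trainLabelNum = np.load(r"F:\MachineLearn\ML-xiaoxueqi\fruits\trainLabelNum.npy")

testDataDirList = sorted(
    os.listdir(r"F:\MachineLearn\ML-xiaoxueqi\fruits\testGrayImage"),
    key=lambda name: tuple(int(part) for part in os.path.splitext(name)[0].split("_")),
)
testDataList = []
for i in range(len(testDataDirList)):
    image = io.imread(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\testGrayImage", testDataDirList[i]))
    testDataList.append(image)
testLabelNum = np.load(r"F:\MachineLearn\ML-xiaoxueqi\fruits\testLabelNum.npy")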

import tensorflow as tf

from random import shuffle

INPUT_NODE = 64*64

OUT_NODE = 77

IMAGE_SIZE = 64

NUM_CHANNELS = 1

NUM_LABELS = 77

# Kernel size and depth of the first convolutional layer

CONV1_DEEP = 64

CONV1_SIZE = 5

# Kernel size and depth of the second convolutional layer

CONV2_DEEP = 128

CONV2_SIZE = 5

# Number of nodes in the first fully connected layer

FC_SIZE = 1024

def inference(input_tensor, train, regularizer):
    # Convolution 1
    with tf.variable_scope('layer1-conv1'):
        conv1_weights = tf.Variable(tf.random_normal([CONV1_SIZE,CONV1_SIZE,NUM_CHANNELS,CONV1_DEEP],stddev=0.1),name='weight')
        conv1_biases = tf.Variable(tf.random_normal([CONV1_DEEP]),name="bias")
        conv1 = tf.nn.conv2d(input_tensor,conv1_weights,strides=[1,1,1,1],padding='SAME')
        relu1 = tf.nn.relu(tf.nn.bias_add(conv1,conv1_biases))

    # Pooling 1
    with tf.variable_scope('layer2-pool1'):
        pool1 = tf.nn.max_pool(relu1,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')

    # Convolution 2
    with tf.variable_scope('layer3-conv2'):
        conv2_weights = tf.Variable(tf.random_normal([CONV2_SIZE,CONV2_SIZE,CONV1_DEEP,CONV2_DEEP],stddev=0.1),name='weight')
        conv2_biases = tf.Variable(tf.random_normal([CONV2_DEEP]),name="bias")
        # Forward pass of the convolution
        conv2 = tf.nn.conv2d(pool1,conv2_weights,strides=[1,1,1,1],padding='SAME')
        relu2 = tf.nn.relu(tf.nn.bias_add(conv2,conv2_biases))

    # Pooling 2
    with tf.variable_scope('layer4-pool2'):
        pool2 = tf.nn.max_pool(relu2,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')

    # Convolution 3
    with tf.variable_scope('layer5-conv3'):
        conv3_weights = tf.Variable(tf.random_normal([5,5,CONV2_DEEP,512],stddev=0.1),name='weight')
        conv3_biases = tf.Variable(tf.random_normal([512]),name="bias")
        # Forward pass of the convolution
        conv3 = tf.nn.conv2d(pool2,conv3_weights,strides=[1,1,1,1],padding='SAME')
        relu3 = tf.nn.relu(tf.nn.bias_add(conv3,conv3_biases))

    # Pooling 3
    with tf.variable_scope('layer6-pool3'):
        pool3 = tf.nn.max_pool(relu3,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')

    # Convolution 4
    with tf.variable_scope('layer7-conv4'):
        conv4_weights = tf.Variable(tf.random_normal([5,5,512,64],stddev=0.1),name='weight')
        conv4_biases = tf.Variable(tf.random_normal([64]),name="bias")
        # Forward pass of the convolution
        conv4 = tf.nn.conv2d(pool3,conv4_weights,strides=[1,1,1,1],padding='SAME')
        relu4 = tf.nn.relu(tf.nn.bias_add(conv4,conv4_biases))

    # Pooling 4 (takes the output of convolution 4)
    with tf.variable_scope('layer8-pool4'):
        pool4 = tf.nn.max_pool(relu4,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')

    # Reshape
    pool_shape = pool4.get_shape().as_list()
    # Number of values per example after the last pooling layer (height * width * depth)
    nodes = pool_shape[1]*pool_shape[2]*pool_shape[3]
    # Flatten the last pooling result pool4 into a matrix with one row per example and nodes columns
    reshaped = tf.reshape(pool4,[-1,nodes])

    # Fully connected layer 1
    with tf.variable_scope('layer9-fc1'):
        fc1_weights = tf.Variable(tf.random_normal([nodes,FC_SIZE],stddev=0.1),name='weight')
        if regularizer is not None:
            tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(0.03)(fc1_weights))
        fc1_biases = tf.Variable(tf.random_normal([FC_SIZE]),name="bias")
        # Forward pass
        fc1 = tf.nn.relu(tf.matmul(reshaped,fc1_weights)+fc1_biases)
        if train:
            fc1 = tf.nn.dropout(fc1,0.5)

    # Fully connected layer 2
    with tf.variable_scope('layer10-fc2'):
        fc2_weights = tf.Variable(tf.random_normal([FC_SIZE,64],stddev=0.1),name="weight")
        if regularizer is not None:
            tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(0.03)(fc2_weights))
        fc2_biases = tf.Variable(tf.random_normal([64]),name="bias")
        # Forward pass
        fc2 = tf.nn.relu(tf.matmul(fc1,fc2_weights)+fc2_biases)
        if train:
            fc2 = tf.nn.dropout(fc2,0.5)

    # Fully connected layer 3 (output logits, one per class)
    with tf.variable_scope('layer11-fc3'):
        fc3_weights = tf.Variable(tf.random_normal([64,NUM_LABELS],stddev=0.1),name="weight")
        if regularizer is not None:
            tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(0.03)(fc3_weights))
        fc3_biases = tf.Variable(tf.random_normal([NUM_LABELS]),name="bias")
        # Forward pass
        logit = tf.matmul(fc2,fc3_weights)+fc3_biases

    return logit
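The value of `nodes` that feeds the first fully connected layer can be checked by hand. The sketch below is only an illustration, assuming that each SAME-padded convolution with stride 1 keeps the spatial size, that each SAME-padded 2x2 pooling with stride 2 produces `ceil(size / 2)`, and that the fourth pooling layer is applied to the 64-channel output of the fourth convolution. The helper names `same_pool_output` and `flattened_nodes` are hypothetical.

```python
import math


def same_pool_output(size, stride=2):
    """Spatial size after a SAME-padded pooling layer: ceil(size / stride)."""
    return math.ceil(size / stride)


def flattened_nodes(image_size, num_pools, last_depth):
    """Values per example after num_pools pooling layers, flattened."""
    size = image_size
    for _ in range(num_pools):
        size = same_pool_output(size)
    return size * size * last_depth


# 64 -> 32 -> 16 -> 8 -> 4, so 4 * 4 * 64 values per example
print(flattened_nodes(64, 4, 64))
```

Under these assumptions, the weight matrix of the first fully connected layer has shape `[1024, FC_SIZE]`.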

import keras

import time

from keras.utils import np_utils

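# Stack the images into an [N, 64, 64, 1] array and the labels into an [N, 1] column.
X = np.vstack(trainDataList).reshape(-1, 64,64,1)
Y = np.vstack(trainLabelNum).reshape(-1, 1)

# Shuffle the images and labels with the same random index order.
Xrandom = []
Yrandom = []
index = [i for i in range(len(X))]
shuffle(index)
for i in range(len(index)):
    Xrandom.append(X[index[i]])
    Yrandom.append(Y[index[i]])

# Keep a copy of the shuffled data; images and labels go to separate files.
np.save(r"E:\Xrandom",Xrandom)
np.save(r"E:\Yrandom",Yrandom)

X = Xrandom
Y = Yrandom

# One-hot encode the labels.
Y=keras.utils.to_categorical(Y,OUT_NODE)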
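The shuffle and one-hot steps above can be reproduced with NumPy alone, which may help when checking that images and labels stay paired. This is a minimal sketch on toy data, not the script's own method; `shuffle_together` and `one_hot` are hypothetical helper names.

```python
import numpy as np


def shuffle_together(features, labels, seed=None):
    """Apply one random permutation to both arrays so that pairs stay aligned."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(features))
    return features[order], labels[order]


def one_hot(labels, num_classes):
    """Turn integer class labels into rows with a single 1.0 per row."""
    labels = np.asarray(labels).reshape(-1)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


# Toy data: row k holds the values (2k, 2k + 1) and has label k % 3.
features = np.arange(12).reshape(6, 2)
labels = np.array([0, 1, 2, 0, 1, 2])
shuffled_x, shuffled_y = shuffle_together(features, labels, seed=0)
print(one_hot(shuffled_y, 3).shape)
```

Indexing both arrays with the same permutation avoids the explicit Python loop and makes the pairing easy to verify.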

batch_size = 200

n_classes=77

epochs=20  # number of training epochs

learning_rate=1e-4

batch_num=int(np.shape(X)[0]/batch_size)

dropout=0.75

x=tf.placeholder(tf.float32,[None,64,64,1])

y=tf.placeholder(tf.float32,[None,n_classes])

# keep_prob = tf.placeholder(tf.float32)

pred=inference(x,1,"regularizer")

cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred,labels=y))

# Any one of the three optimizers below can be used

optimizer=tf.train.AdamOptimizer(1e-4).minimize(cost)

# train_step = tf.train.GradientDescentOptimizer(0.001).minimize(cost)

# train_step = tf.train.MomentumOptimizer(0.001,0.9).minimize(cost)

keep_prob = tf.placeholder(dtype=tf.float32, name="keep_prob")

correct_pred=tf.equal(tf.argmax(pred,1),tf.argmax(y,1))

accuracy=tf.reduce_mean(tf.cast(correct_pred,tf.float32))

# merged = tf.summary.merge_all()

init=tf.global_variables_initializer()

start_time = time.time()

with tf.Session() as sess:

sess.run(init)

# writer = tf.summary.FileWriter('./fruit', sess.graph)

for i in range(epochs):

for j in range(batch_num):

start = (j*batch_size)

end = start+batch_size

sess.run(optimizer, feed_dict={x:X[start:end],y:Y[start:end],keep_prob: 0.5})

loss,acc = sess.run([cost,accuracy],feed_dict={x:X[start:end],y:Y[start:end],keep_prob: 1})

# result = sess.run(merged, feed_dict={x:X[start:end],y:Y[start:end]})

# writer.add_summary(result, i)

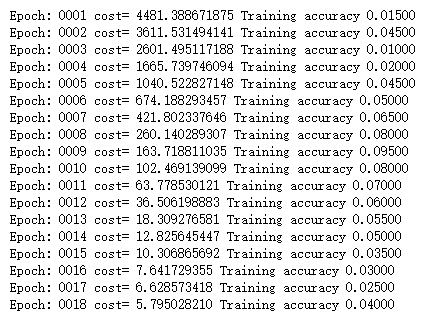

if epochs % 1 == 0:

print("Epoch:", '%04d' % (i+1),"cost=", "{:.9f}".format(loss),"Training accuracy","{:.5f}".format(acc*100))

end_time = time.time()

print('运行时间:',(end_time-start_time))

print('Optimization Completed')

def gen_small_data(inputs,batch_size):
    # Yield consecutive slices of batch_size items until the input is exhausted.
    i=0
    j = True
    while j:
        small_data=inputs[i:(batch_size+i)]
        i+=batch_size
        if len(small_data)!=0:
            yield small_data
        if len(small_data)==0:
            j=False
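A batch generator like the one above is usually consumed with a loop that walks through every batch of inputs and targets together. The following is a minimal sketch of that pattern on plain Python lists; `iterate_batches` is a hypothetical helper that yields both slices at once, not a function from the original script.

```python
def iterate_batches(inputs, targets, batch_size):
    """Yield (inputs, targets) slices of at most batch_size items each."""
    for start in range(0, len(inputs), batch_size):
        end = start + batch_size
        yield inputs[start:end], targets[start:end]


# Five samples with a batch size of two give two full batches and one partial batch.
batches = list(iterate_batches(list(range(5)), list("abcde"), 2))
for batch_inputs, batch_targets in batches:
    print(batch_inputs, batch_targets)
```

Yielding both slices from one generator keeps inputs and targets aligned and leaves the full data set untouched between epochs.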

with tf.Session() as sess:
    sess.run(init)
    # writer = tf.summary.FileWriter('./fruit', sess.graph)
    for i in range(epochs):
        # Create fresh batch generators every epoch and walk through all batches,
        # without overwriting the full data set X and Y.
        x_=gen_small_data(X,batch_size)
        y_=gen_small_data(Y,batch_size)
        for batch_x, batch_y in zip(x_, y_):
            sess.run(optimizer, feed_dict={x:batch_x,y:batch_y})
            loss,acc = sess.run([cost,accuracy],feed_dict={x:batch_x,y:batch_y})
            # result = sess.run(merged, feed_dict={x:batch_x,y:batch_y})
            # writer.add_summary(result, i)
        # Report the loss and accuracy of the last batch after every epoch.
        if i % 1 == 0:
            print("Epoch:", '%04d' % (i+1),"cost=", "{:.9f}".format(loss),"Training accuracy","{:.5f}".format(acc))

# Build a title for every class.
labelNameList = []
for i in range(len(labelList)):
    labelNameList.append("label:"+labelList[i])

# Use the fifth image of every class as its sample.
theFireImage = []
for i in range(len(allsortImageName)):
    theFireImage.append(plt.imread(os.path.join(r"F:\MachineLearn\ML-xiaoxueqi\fruits\Training", labelList[i], allsortImageName[i][4])))

# Show the 77 samples in an 11x7 grid.
gs = plt.GridSpec(11,7)
fig = plt.figure(figsize=(10,10))
imageIndex = 0
ax = plt.gca()
for i in range(11):
    for j in range(7):
        fi = fig.add_subplot(gs[i,j])
        fi.imshow(theFireImage[imageIndex])
        plt.xticks(())
        plt.yticks(())
        plt.axis('off')
        plt.title(labelNameList[imageIndex],fontsize=7)
        # Hide the ticks and frame of the background axes.
        ax.set_xticks([])
        ax.set_yticks([])
        ax.spines['top'].set_color('none')
        ax.spines['left'].set_color('none')
        ax.spines['right'].set_color('none')
        ax.spines['bottom'].set_color('none')
        imageIndex += 1
plt.show()