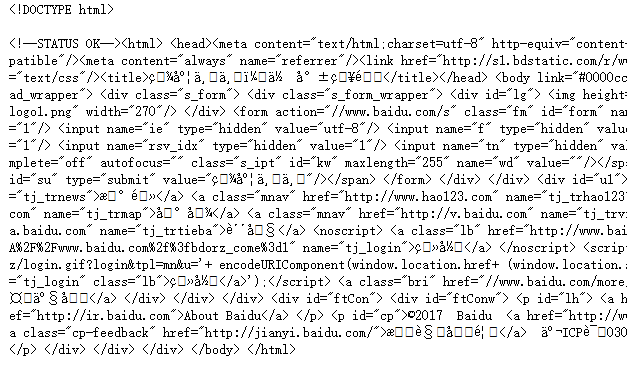

import requests from bs4 import BeautifulSoup url = 'http://www.baidu.com' html = requests.get(url) sp = BeautifulSoup(html.text, 'html.parser') print(sp)

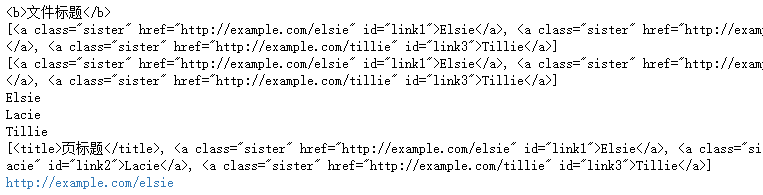

html_doc = """ <html><head><title>页标题</title></head> <p class="title"><b>文件标题</b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """ from bs4 import BeautifulSoup sp = BeautifulSoup(html_doc,'html.parser') print(sp.find('b')) # 返回值:<b>文件标题</b> print(sp.find_all('a')) #返回值: [<b>文件标题</b>] print(sp.find_all("a", {"class":"sister"})) data1=sp.find("a", {"href":"http://example.com/elsie"}) print(data1.text) # 返回值:Elsie data2=sp.find("a", {"id":"link2"}) print(data2.text) # 返回值:Lacie data3 = sp.select("#link3") print(data3[0].text) # 返回值:Tillie print(sp.find_all(['title','a'])) data1=sp.find("a", {"id":"link1"}) print(data1.get("href")) #返回值: http://example.com/elsie

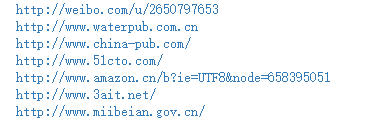

import requests from bs4 import BeautifulSoup url = 'http://www.wsbookshow.com/' html = requests.get(url) html.encoding="gbk" sp=BeautifulSoup(html.text,"html.parser") links=sp.find_all(["a","img"]) # 同时读取 <a> 和 <img> for link in links: href=link.get("href") # 读取 href 属性的值 # 判断值是否为非 None,以及是不是以http://开头 if((href != None) and (href.startswith("http://"))): print(href)

import requests from bs4 import BeautifulSoup url = 'http://www.taiwanlottery.com.tw/' html = requests.get(url) sp = BeautifulSoup(html.text, 'html.parser') data1 = sp.select("#rightdown") print(data1)

data2 = data1[0].find('div', {'class':'contents_box02'}) print(data2) print() data3 = data2.find_all('div', {'class':'ball_tx'}) print(data3)

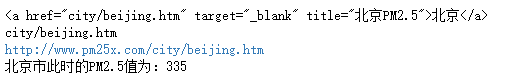

import requests from bs4 import BeautifulSoup url1 = 'http://www.pm25x.com/' #获得主页面链接 html = requests.get(url1) #抓取主页面数据 sp1 = BeautifulSoup(html.text, 'html.parser') #把抓取的数据进行解析 city = sp1.find("a",{"title":"北京PM2.5"}) #从解析结果中找出title属性值为"北京PM2.5"的标签 print(city) citylink=city.get("href") #从找到的标签中取href属性值 print(citylink) url2=url1+citylink #生成二级页面完整的链接地址 print(url2) html2=requests.get(url2) #抓取二级页面数据 sp2=BeautifulSoup(html2.text,"html.parser") #二级页面数据解析 #print(sp2) data1=sp2.select(".aqivalue") #通过类名aqivalue抓取包含北京市pm2.5数值的标签 pm25=data1[0].text #获取标签中的pm2.5数据 print("北京市此时的PM2.5值为:"+pm25) #显示pm2.5值

import requests,os from bs4 import BeautifulSoup from urllib.request import urlopen url = 'http://www.tooopen.com/img/87.aspx' html = requests.get(url) html.encoding="utf-8" sp = BeautifulSoup(html.text, 'html.parser') # 建立images目录保存图片 images_dir="E:\images\" if not os.path.exists(images_dir): os.mkdir(images_dir) # 取得所有 <a> 和 <img> 标签 all_links=sp.find_all(['a','img']) for link in all_links: # 读取 src 和 href 属性内容 src=link.get('src') href = link.get('href') attrs=[src,src] for attr in attrs: # 读取 .jpg 和 .png 檔 if attr != None and ('.jpg' in attr or '.png' in attr): # 设置图片文件完整路径 full_path = attr filename = full_path.split('/')[-1] # 取得图片名 ext = filename.split('.')[-1] #取得扩展名 filename = filename.split('.')[-2] #取得主文件名 if 'jpg' in ext: filename = filename + '.jpg' else: filename = filename + '.png' print(attr) # 保存图片 try: image = urlopen(full_path) f = open(os.path.join(images_dir,filename),'wb') f.write(image.read()) f.close() except: print("{} 无法读取!".format(filename))