import os

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
from tensorflow.contrib.tensorboard.plugins import projector

# Network dimensions: 28x28 input pixels, 10 digit classes, one hidden layer.
INPUT_NODE = 784
OUTPUT_NODE = 10
LAYER1_NODE = 500


def get_weight_variable(shape, regularizer):
    weights = tf.get_variable(
        "weights", shape,
        initializer=tf.truncated_normal_initializer(stddev=0.1))
    # Collect the regularization term so it can be added to the loss later.
    if regularizer is not None:
        tf.add_to_collection('losses', regularizer(weights))
    return weights


def inference(input_tensor, regularizer):
    # Hidden layer: fully connected with ReLU activation.
    with tf.variable_scope('layer1'):
        weights = get_weight_variable([INPUT_NODE, LAYER1_NODE], regularizer)
        biases = tf.get_variable(
            "biases", [LAYER1_NODE], initializer=tf.constant_initializer(0.0))
        layer1 = tf.nn.relu(tf.matmul(input_tensor, weights) + biases)

    # Output layer: returns raw logits (no softmax).
    with tf.variable_scope('layer2'):
        weights = get_weight_variable([LAYER1_NODE, OUTPUT_NODE], regularizer)
        biases = tf.get_variable(
            "biases", [OUTPUT_NODE], initializer=tf.constant_initializer(0.0))
        layer2 = tf.matmul(layer1, weights) + biases
    return layer2


# Training hyperparameters.
BATCH_SIZE = 100
LEARNING_RATE_BASE = 0.8
LEARNING_RATE_DECAY = 0.99
REGULARIZATION_RATE = 0.0001
TRAINING_STEPS = 10000
MOVING_AVERAGE_DECAY = 0.99

# Output locations. Forward slashes are used because backslashes in a normal
# string literal are escape characters ('\t' is a tab, and a trailing '\'
# escapes the closing quote, which is a syntax error).
LOG_DIR = 'F:/temp/log/'
SPRITE_FILE = 'F:/temp/log/mnist_sprite.jpg'
META_FIEL = "F:/temp/log/mnist_meta.tsv"
TENSOR_NAME = "FINAL_LOGITS"

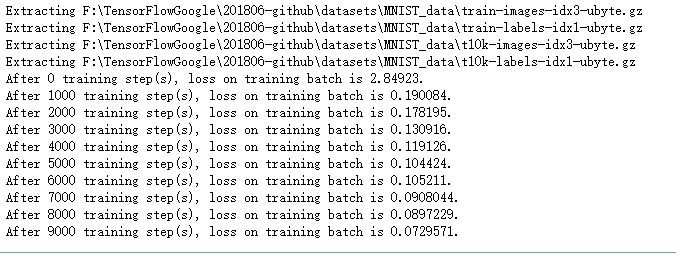

def train(mnist):
    # Name scope for the input placeholders.
    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, [None, INPUT_NODE], name='x-input')
        y_ = tf.placeholder(tf.float32, [None, OUTPUT_NODE], name='y-input')

    regularizer = tf.contrib.layers.l2_regularizer(REGULARIZATION_RATE)
    y = inference(x, regularizer)
    global_step = tf.Variable(0, trainable=False)

    # Name scope for the moving-average operations.
    with tf.name_scope("moving_average"):
        variable_averages = tf.train.ExponentialMovingAverage(
            MOVING_AVERAGE_DECAY, global_step)
        variables_averages_op = variable_averages.apply(
            tf.trainable_variables())

    # Name scope for the loss computation.
    with tf.name_scope("loss_function"):
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
            logits=y, labels=tf.argmax(y_, 1))
        cross_entropy_mean = tf.reduce_mean(cross_entropy)
        loss = cross_entropy_mean + tf.add_n(tf.get_collection('losses'))

    # Name scope for the learning rate, the optimizer, and the per-step
    # training operation.
    with tf.name_scope("train_step"):
        learning_rate = tf.train.exponential_decay(
            LEARNING_RATE_BASE,
            global_step,
            mnist.train.num_examples / BATCH_SIZE,
            LEARNING_RATE_DECAY,
            staircase=True)

        train_step = tf.train.GradientDescentOptimizer(
            learning_rate).minimize(loss, global_step=global_step)

        # Group the gradient update and the moving-average update into one op.
        with tf.control_dependencies([train_step, variables_averages_op]):
            train_op = tf.no_op(name='train')

    # Train the model.
    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        for i in range(TRAINING_STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            _, loss_value, step = sess.run(
                [train_op, loss, global_step], feed_dict={x: xs, y_: ys})

            if i % 1000 == 0:
                print("After %d training step(s), loss on training batch is %g."
                      % (i, loss_value))

        # Logits for the whole test set; these are what gets visualised.
        final_result = sess.run(y, feed_dict={x: mnist.test.images})

    return final_result
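The `train_step` scope above uses `tf.train.exponential_decay` with `staircase=True`. As a rough illustration of the schedule this produces, the pure-Python sketch below recomputes the documented formula `base * decay ** floor(step / decay_steps)`. The helper name `staircase_decay` and the figure of 55,000 training examples are assumptions made for this example only; the script itself reads the count from `mnist.train.num_examples`.

```python
import math


def staircase_decay(base_rate, global_step, decay_steps, decay_rate):
    """Learning rate after `global_step` steps when the decay is applied
    in discrete jumps, once every `decay_steps` steps."""
    return base_rate * decay_rate ** math.floor(global_step / decay_steps)


# Assumption: 55000 training examples, with the script's batch size of 100,
# so the rate drops once per pass over the training data.
decay_steps = 55000 / 100

for step in (0, 549, 550, 9999):
    rate = staircase_decay(0.8, step, decay_steps, 0.99)
    print("step %5d -> learning rate %.6f" % (step, rate))
```

Under these assumptions the rate stays at 0.8 for the first 550 steps and is multiplied by 0.99 only 18 times over the full 10,000 training steps, so the decay is gentle.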

def visualisation(final_result):
    # Store the test-set logits in a variable so the projector can load them
    # from the checkpoint.
    y = tf.Variable(final_result, name=TENSOR_NAME)
    summary_writer = tf.summary.FileWriter(LOG_DIR)

    config = projector.ProjectorConfig()
    embedding = config.embeddings.add()
    embedding.tensor_name = y.name

    # Specify where you find the metadata
    embedding.metadata_path = META_FIEL

    # Specify where you find the sprite (we will create this later)
    embedding.sprite.image_path = SPRITE_FILE
    embedding.sprite.single_image_dim.extend([28, 28])

    # Say that you want to visualise the embeddings
    projector.visualize_embeddings(summary_writer, config)

    sess = tf.InteractiveSession()
    sess.run(tf.global_variables_initializer())
    saver = tf.train.Saver()
    saver.save(sess, os.path.join(LOG_DIR, "model"), TRAINING_STEPS)

    summary_writer.close()

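def main(argv=None):
    # Forward slashes avoid accidental escape sequences: in a normal string
    # literal, "\201" would be read as an octal character escape.
    mnist = input_data.read_data_sets(
        "F:/TensorFlowGoogle/201806-github/datasets/MNIST_data", one_hot=True)
    final_result = train(mnist)
    visualisation(final_result)


if __name__ == '__main__':
    main()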
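The script points the projector at a sprite image and a metadata file but does not create either. The following is a minimal sketch of one way to build them with NumPy, not the original author's code. The helper names `create_sprite_image` and `write_metadata` are hypothetical, and it assumes images arrive as an `(N, 28, 28)` array (for example `mnist.test.images` reshaped) and labels are one-hot encoded as in `main()`.

```python
import numpy as np


def create_sprite_image(images):
    """Tile an (N, H, W) array into one square sprite image, row by row.

    Unused cells in the grid are left white (value 1.0).
    """
    images = np.asarray(images)
    n_images, img_h, img_w = images.shape
    # Side length of the smallest square grid that holds all N images.
    side = int(np.ceil(np.sqrt(n_images)))
    sprite = np.ones((img_h * side, img_w * side))
    for idx in range(n_images):
        row, col = divmod(idx, side)
        sprite[row * img_h:(row + 1) * img_h,
               col * img_w:(col + 1) * img_w] = images[idx]
    return sprite


def write_metadata(one_hot_labels, path):
    """Write a tab-separated file with one 'index, label' row per example."""
    labels = np.argmax(np.asarray(one_hot_labels), axis=1)
    with open(path, "w") as f:
        f.write("Index\tLabel\n")
        for index, label in enumerate(labels):
            f.write("%d\t%d\n" % (index, label))


# Small demonstration with placeholder data: 5 blank 28x28 images need a
# 3x3 grid, so the sprite is 84x84 pixels.
demo_sprite = create_sprite_image(np.zeros((5, 28, 28)))
print(demo_sprite.shape)
```

In the script, the sprite array would still have to be saved to `SPRITE_FILE` with an image library of your choice, and `write_metadata(mnist.test.labels, META_FIEL)` would produce the metadata. The row order must match the order of `mnist.test.images`, since the projector pairs rows by position.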