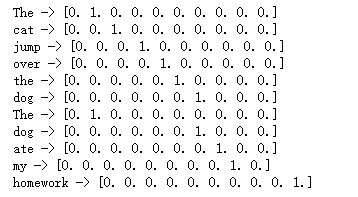

import numpy as np samples = ['The cat jump over the dog', 'The dog ate my homework'] #我们先将每个单词放置到一个哈希表中 token_index = {} for sample in samples: #将一个句子分解成多个单词 for word in sample.split(): if word not in token_index: token_index[word] = len(token_index) + 1 #设置句子的最大长度 max_length = 10 results = np.zeros((len(samples), max_length, max(token_index.values()) + 1)) for i, sample in enumerate(samples): for j, word in list(enumerate(sample.split()))[: max_length]: index = token_index.get(word) results[i, j, index] = 1. print("{0} -> {1}".format(word, results[i, j]))

from keras.preprocessing.text import Tokenizer def oneHotEncode(samples): #只考虑最常使用的前1000个单词 tokenizer = Tokenizer(num_words = 1000) tokenizer.fit_on_texts(samples) #把句子分解成单词数组 sequences = tokenizer.texts_to_sequences(samples) return sequences samples = ['The cat jump over the dog', 'The dog ate my homework'] vecs = oneHotEncode(samples) print(vecs)

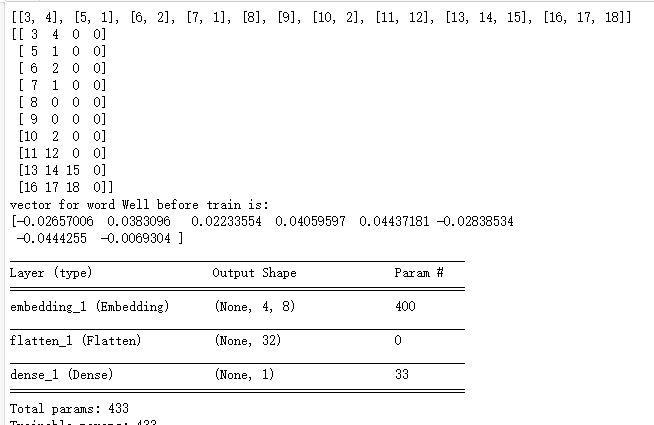

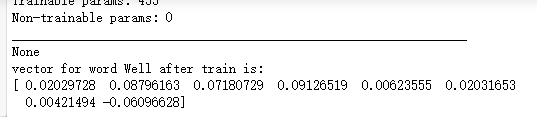

from numpy import array from keras.preprocessing.text import one_hot from keras.preprocessing.sequence import pad_sequences from keras.models import Sequential from keras.layers import Dense from keras.layers import Flatten from keras.layers.embeddings import Embedding # 定义一系列简单文本,前一半表示赞美,后一半表示批判 docs = ['Well done', 'Good work', 'Great effort', 'nice work', 'Excellent', 'Weak', 'Poor effort', 'quit bad', 'it is terrible', 'like a shit'] # 属于赞美性质的文本用1表示,属于匹配性质的文本用0表示 labels = array([1,1,1,1,1,0,0,0,0,0]) # 假定单词量有50个 vocab_size = 50 encoded_docs = oneHotEncode(docs) print(encoded_docs) # 规定每个文本4个单词,不足4个的用0补足 max_length = 4 padded_docs = pad_sequences(encoded_docs, maxlen=max_length, padding='post') print(padded_docs) model = Sequential() ''' Embedding层本质上是一个矩阵,高位vocab_size, 宽为8,矩阵的每一行对应每个单词向量 由于我们设定每篇文本的单词量为4个,每个单词对应一个8元素的向量, 因此我们把一篇文本对应的向量也就是一个含有4个元素的向量输入Embedding层后, 得到4*8的一个二维矩阵,其中的4对应输入文本向量中元素个数,8对应每个单词的向量维度 ''' emebdding_layer = Embedding(vocab_size, 8, input_length=max_length) model.add(emebdding_layer) print("vector for word Well before train is:") print(emebdding_layer.get_weights()[0][0]) model.add(Flatten()) model.add(Dense(1, activation='sigmoid')) model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['acc']) print(model.summary()) # 训练网络 model.fit(padded_docs, labels, epochs=50, verbose=0) print("vector for word Well after train is:") print(emebdding_layer.get_weights()[0][0])

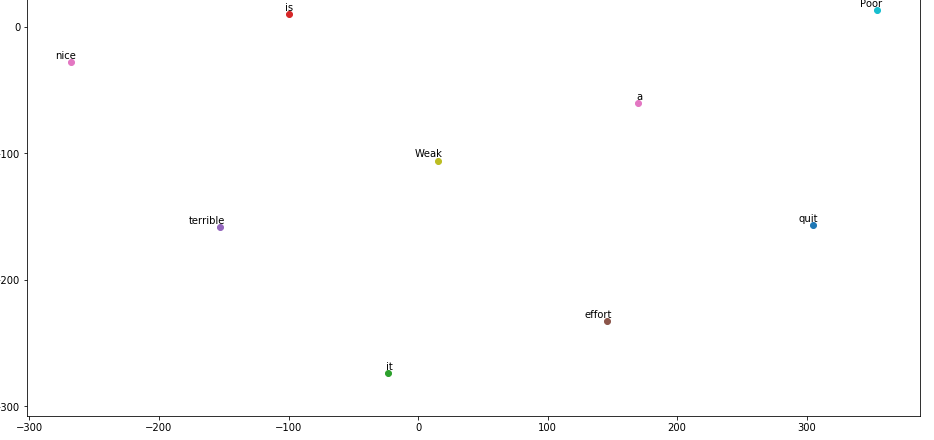

from sklearn.manifold import TSNE import matplotlib.pyplot as plt #建立单词与编号之间的对应关系 word2Num = {} for idx, doc in enumerate(docs): words = doc.split() for i, word in enumerate(words): print("{0} => {1}".format(word, encoded_docs[idx][i])) word2Num[word] = encoded_docs[idx][i] embeddings = emebdding_layer.get_weights()[0] #建立单词与向量之间的连续 vectors = [] words = [] for word, num in word2Num.items(): print("{0} => {1}".format(word, embeddings[num])) words.append(word) vectors.append(embeddings[num])

tsne_model = TSNE(perplexity=40, n_components=2, init='pca', n_iter=2500, random_state=23) new_values = tsne_model.fit_transform(vectors) x = [] y = [] for value in new_values: x.append(value[0]) y.append(value[1]) plt.figure(figsize=(16, 16)) for i in range(len(x)): plt.scatter(x[i], y[i]) plt.annotate(words[i], xy=(x[i], y[i]), xytext=(5,2), textcoords='offset points', ha='right',va='bottom') plt.show()