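I. Environment Overview

I have just started learning k8s and wanted to build a cluster from binaries. Most tutorials online target v1.20, so I decided to take the harder route and deploy v1.22.2, the latest release at the time of writing, figuring that fixing errors as they came up would prepare me for meeting them at work.

It ended up taking nearly 15 days, far longer than I expected.

This setup follows an existing blog post; many thanks to its author for clearing things up.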

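1.1 Planning

Deploy a single-master environment first, then scale out to multiple masters and multiple worker nodes.

| Node | IP | Also hosts |
|---|---|---|
| k8s-master01 | 10.154.0.111 | etcd01 |
| k8s-node01 | 10.154.0.112 | etcd02 |
| k8s-node02 | 10.154.0.113 | etcd03 |

The nodes are shared: the etcd cluster runs on these same three machines.

If the IPs in your lab differ from mine, do not edit them by hand. Use find-and-replace (Ctrl+H) to swap in your own IPs, and do it carefully, so that no occurrence is missed or mistyped.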
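A scripted alternative to the editor's find-and-replace is sketched below. `retarget_ip` is a hypothetical helper, not part of any tool used in this post. It assumes a `sed` that supports `-E`, escapes the dots so they match literally, and refuses to match when the old address is followed by another digit, so a shorter address is never rewritten inside a longer one.

```shell
# Hypothetical helper: replace one IP with another in text read from stdin.
# $1 = old IP, $2 = new IP
retarget_ip() {
  # Escape the dots so "." only matches a literal dot
  old_re=$(printf '%s' "$1" | sed 's/\./\\./g')
  # Require a non-digit (or end of line) right after the address
  sed -E "s/${old_re}([^0-9]|\$)/$2\\1/g"
}

# Example on a sample line (no file is modified)
echo '10.154.0.111 k8s-master01' | retarget_ip 10.154.0.111 192.168.1.11
# prints: 192.168.1.11 k8s-master01
```

To apply it to a saved copy of these notes, something like `retarget_ip 10.154.0.111 192.168.1.11 < notes.txt > notes.new` should work, where `notes.txt` is a placeholder file name.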

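1.2 Environment setup

Unless stated otherwise, run everything below on every machine. In Xshell, Tools --> Send Key Input to All Sessions makes this convenient.

If your Linux kernel is older than 5.x, upgrade the kernel first.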

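# Set the time zone and sync the clock

yum install ntpdate -y

ntpdate time2.aliyun.com

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

# Open the crontab editor and add the entry below to resync every day at 12:00

crontab -e

0 12 * * * /usr/sbin/ntpdate time2.aliyun.com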

# Disable the firewall, SELinux and swap

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

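# Kernel tuning

# The net.bridge.* and nf_conntrack keys only exist once their modules are loaded, so load them now and on every boot

cat > /etc/modules-load.d/k8s.conf << EOF
br_netfilter
nf_conntrack
EOF

modprobe br_netfilter

modprobe nf_conntrack

# net.ipv4.tcp_tw_recycle was removed in Linux 4.12, so it is left out here

cat > /etc/sysctl.d/k8s_better.conf << EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF

sysctl -p /etc/sysctl.d/k8s_better.conf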

# Update the hosts file

cat >> /etc/hosts << "EOF"
10.154.0.111 k8s-master01
10.154.0.112 k8s-node01
10.154.0.113 k8s-node02
EOF

# Make sure every machine has a unique UUID; on cloned machines, edit the NIC configuration file and delete the UUID line

cat /sys/class/dmi/id/product_uuid

# Set the hostname; run only the matching command on each machine

# on k8s-master01
hostnamectl set-hostname k8s-master01

# on k8s-node01
hostnamectl set-hostname k8s-node01

# on k8s-node02
hostnamectl set-hostname k8s-node02

# Set up passwordless SSH; run on master01

ssh-keygen -t rsa

ssh-copy-id root@10.154.0.112

ssh-copy-id root@10.154.0.113

# Reboot

reboot
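The `sed -ri 's/.*swap.*/#&/' /etc/fstab` step above prefixes every line that mentions swap with `#`; in the replacement, `&` stands for the whole matched line. Running it a second time adds a second `#`, which is harmless but untidy. A variant that skips lines already commented out could look like this; `comment_swap` is a hypothetical name and the fstab lines are made-up sample data.

```shell
# Hypothetical filter: comment out swap entries that are not already commented.
comment_swap() {
  sed -E 's/^[^#].*swap.*/#&/'
}

# Sample fstab content (illustrative only, nothing on disk is touched)
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' \
  '#/dev/sdb1 swap swap defaults 0 0' | comment_swap
```

Only the second sample line changes: the first does not mention swap and the third already starts with `#`.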

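II. Install Docker

This section installs Docker from the binary tarball; installing from a yum repository works too.

Download Docker: official download page

I'm using v20.10.9, the latest version at the time of writing.

1. Remove old Docker versions

Run on all machines.

yum remove -y docker \
  docker-client \
  docker-client-latest \
  docker-common \
  docker-latest \
  docker-latest-logrotate \
  docker-logrotate \
  docker-engine \
  docker-ce*

rm -rf /var/lib/docker

2. Unpack the binary tarball

Run on master01.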

mkdir ~/tools

cd ~/tools

tar zxvf docker-20.10.9.tgz

cp docker/* /usr/bin

scp -r docker/* root@10.154.0.112:/usr/bin

scp -r docker/* root@10.154.0.113:/usr/bin

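3. Manage Docker with systemd

Run on master01. The heredoc delimiter is quoted so that the shell writes $MAINPID into the unit file literally instead of expanding it to an empty string.

cat > /usr/lib/systemd/system/docker.service << "EOF"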

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

scp -r /usr/lib/systemd/system/docker.service root@10.154.0.112:/usr/lib/systemd/system/docker.service

scp -r /usr/lib/systemd/system/docker.service root@10.154.0.113:/usr/lib/systemd/system/docker.service

4. Configure a registry mirror

Run on master01.

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << "EOF"

{

"exec-opts": [

"native.cgroupdriver=systemd"

],

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "1000m",

"max-file": "3"

},

"registry-mirrors": [

"https://xxxxxx.mirror.aliyuncs.com"

],

"insecure-registries": [],

"selinux-enabled": false

}

EOF

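ssh root@10.154.0.112 mkdir -p /etc/docker

scp -r /etc/docker/daemon.json root@10.154.0.112:/etc/docker/daemon.json

ssh root@10.154.0.113 mkdir -p /etc/docker

scp -r /etc/docker/daemon.json root@10.154.0.113:/etc/docker/daemon.json

Set the cgroup driver to systemd here. kubelet is configured with cgroupDriver: systemd later on, and it fails to start if Docker uses a different driver.

The registry mirror is an Alibaba Cloud accelerator address; you need to apply for your own.

5. Start Docker and enable it at boot

Run on all machines.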

systemctl daemon-reload

systemctl start docker

systemctl enable docker

systemctl status docker

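III. Deploy etcd

Download etcd: official download page

I'm using 3.5.1, the latest version at the time of writing.

3.1 Issue certificates

Unless noted otherwise, everything below runs on master01.

1. Create directories (create these on all three machines: the later scp commands and the service units on the nodes expect the same paths)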

mkdir -p /opt/cluster/ssl/{rootca,etcd,kubernetes}

mkdir -p /opt/cluster/kubelet/ssl

mkdir -p /opt/cluster/log/{kube-apiserver,kube-controller-manager,kube-scheduler,kube-proxy,kubelet}

mkdir -p /opt/cluster/plugins/{calico,coredns}

mkdir -p /opt/cluster/etcd/{data,wal}

2. Upload the tools

cd ~/tools

mv cfssl_1.6.1_linux_amd64 cfssl

mv cfssl-certinfo_1.6.1_linux_amd64 cfssl-certinfo

mv cfssljson_1.6.1_linux_amd64 cfssljson

chmod +x cfssl*

cp cfssl* /usr/local/bin

3. Generate certificates

cd /opt/cluster/ssl

cat > cfssl-conf.json << "EOF"

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"common": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

cd /opt/cluster/ssl

cat > rootca/rootca-csr.json << "EOF"

{

"CN": "rootca",

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "ROOTCA",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

cat > etcd/etcd-csr.json << "EOF"

{

"CN": "etcd-cluster",

"hosts": [

"127.0.0.1",

"10.154.0.111",

"10.154.0.112",

"10.154.0.113"

],

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "KUBERNETES-ETCD",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

cfssl gencert -initca rootca/rootca-csr.json | cfssljson -bare rootca/rootca

cfssl gencert \
  -ca=rootca/rootca.pem \
  -ca-key=rootca/rootca-key.pem \
  --config=cfssl-conf.json \
  -profile=common etcd/etcd-csr.json | cfssljson -bare etcd/etcd

4. Copy the certificates to the other machines

scp -r /opt/cluster/ssl 10.154.0.112:/opt/cluster/

scp -r /opt/cluster/ssl 10.154.0.113:/opt/cluster/

3.2 Deploy etcd

1. Upload the tools

cd ~/tools/

tar zxvf etcd-v3.5.1-linux-amd64.tar.gz

cp etcd-v3.5.1-linux-amd64/{etcd,etcdctl} /usr/local/bin

chmod +x /usr/local/bin/{etcd,etcdctl}

scp -r etcd-v3.5.1-linux-amd64/{etcd,etcdctl} root@10.154.0.112:/usr/local/bin

scp -r etcd-v3.5.1-linux-amd64/{etcd,etcdctl} root@10.154.0.113:/usr/local/bin

2. Write the systemd unit files

Unit file for k8s-master01:

cat > /usr/lib/systemd/system/etcd.service << "EOF"

[Unit]

Description=Kubernetes:Etcd

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/etcd \
  --name=etcd01 \
  --data-dir=/opt/cluster/etcd/data \
  --wal-dir=/opt/cluster/etcd/wal \
  --listen-peer-urls=https://10.154.0.111:2380 \
  --listen-client-urls=https://10.154.0.111:2379,http://127.0.0.1:2379 \
  --initial-advertise-peer-urls=https://10.154.0.111:2380 \
  --initial-cluster=etcd01=https://10.154.0.111:2380,etcd02=https://10.154.0.112:2380,etcd03=https://10.154.0.113:2380 \
  --initial-cluster-state=new \
  --initial-cluster-token=373b3543a301630c \
  --advertise-client-urls=https://10.154.0.111:2379 \
  --cert-file=/opt/cluster/ssl/etcd/etcd.pem \
  --key-file=/opt/cluster/ssl/etcd/etcd-key.pem \
  --peer-cert-file=/opt/cluster/ssl/etcd/etcd.pem \
  --peer-key-file=/opt/cluster/ssl/etcd/etcd-key.pem \
  --trusted-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --peer-trusted-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --client-cert-auth=true \
  --peer-client-cert-auth=true \
  --logger=zap \
  --log-outputs=default \
  --log-level=info \
  --listen-metrics-urls=https://10.154.0.111:2381 \
  --enable-pprof=false

[Install]

WantedBy=multi-user.target

EOF

Unit file for k8s-node01:

cat > /usr/lib/systemd/system/etcd.service << "EOF"

[Unit]

Description=Kubernetes:Etcd

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/etcd \
  --name=etcd02 \
  --data-dir=/opt/cluster/etcd/data \
  --wal-dir=/opt/cluster/etcd/wal \
  --listen-peer-urls=https://10.154.0.112:2380 \
  --listen-client-urls=https://10.154.0.112:2379,http://127.0.0.1:2379 \
  --initial-advertise-peer-urls=https://10.154.0.112:2380 \
  --initial-cluster=etcd01=https://10.154.0.111:2380,etcd02=https://10.154.0.112:2380,etcd03=https://10.154.0.113:2380 \
  --initial-cluster-state=new \
  --initial-cluster-token=373b3543a301630c \
  --advertise-client-urls=https://10.154.0.112:2379 \
  --cert-file=/opt/cluster/ssl/etcd/etcd.pem \
  --key-file=/opt/cluster/ssl/etcd/etcd-key.pem \
  --peer-cert-file=/opt/cluster/ssl/etcd/etcd.pem \
  --peer-key-file=/opt/cluster/ssl/etcd/etcd-key.pem \
  --trusted-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --peer-trusted-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --client-cert-auth=true \
  --peer-client-cert-auth=true \
  --logger=zap \
  --log-outputs=default \
  --log-level=info \
  --listen-metrics-urls=https://10.154.0.112:2381 \
  --enable-pprof=false

[Install]

WantedBy=multi-user.target

EOF

Unit file for k8s-node02:

cat > /usr/lib/systemd/system/etcd.service << "EOF"

[Unit]

Description=Kubernetes:Etcd

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/etcd \
  --name=etcd03 \
  --data-dir=/opt/cluster/etcd/data \
  --wal-dir=/opt/cluster/etcd/wal \
  --listen-peer-urls=https://10.154.0.113:2380 \
  --listen-client-urls=https://10.154.0.113:2379,http://127.0.0.1:2379 \
  --initial-advertise-peer-urls=https://10.154.0.113:2380 \
  --initial-cluster=etcd01=https://10.154.0.111:2380,etcd02=https://10.154.0.112:2380,etcd03=https://10.154.0.113:2380 \
  --initial-cluster-state=new \
  --initial-cluster-token=373b3543a301630c \
  --advertise-client-urls=https://10.154.0.113:2379 \
  --cert-file=/opt/cluster/ssl/etcd/etcd.pem \
  --key-file=/opt/cluster/ssl/etcd/etcd-key.pem \
  --peer-cert-file=/opt/cluster/ssl/etcd/etcd.pem \
  --peer-key-file=/opt/cluster/ssl/etcd/etcd-key.pem \
  --trusted-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --peer-trusted-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --client-cert-auth=true \
  --peer-client-cert-auth=true \
  --logger=zap \
  --log-outputs=default \
  --log-level=info \
  --listen-metrics-urls=https://10.154.0.113:2381 \
  --enable-pprof=false

[Install]

WantedBy=multi-user.target

EOF
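The three unit files differ only in `--name` and in the node's own IP; the `--initial-cluster` line is identical everywhere and must stay untouched. Under that assumption, the node files could be derived from the master01 file instead of being typed by hand. `per_node_etcd_unit` is a hypothetical helper, shown here on a three-line excerpt rather than the real unit file.

```shell
# Hypothetical helper: derive a node's etcd flags from the master01 version.
# $1 = etcd member name, $2 = node IP; reads the master01 unit on stdin.
per_node_etcd_unit() {
  # Swap the master IP everywhere except on the --initial-cluster= line,
  # then rename the member
  sed -e '/--initial-cluster=/!s/10\.154\.0\.111/'"$2"'/g' \
      -e 's/--name=etcd01/--name='"$1"'/'
}

# Excerpt of the master01 flags (sample input only)
printf '%s\n' \
  '--name=etcd01' \
  '--listen-peer-urls=https://10.154.0.111:2380' \
  '--initial-cluster=etcd01=https://10.154.0.111:2380,etcd02=https://10.154.0.112:2380' |
  per_node_etcd_unit etcd02 10.154.0.112
```

On master01 the result could be sent straight to a node, for example `per_node_etcd_unit etcd02 10.154.0.112 < /usr/lib/systemd/system/etcd.service | ssh root@10.154.0.112 'cat > /usr/lib/systemd/system/etcd.service'`.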

3. Start etcd

Run on all machines.

systemctl daemon-reload &&

systemctl enable etcd.service &&

systemctl start etcd.service &&

systemctl status etcd.service

If it fails, check the journal:

journalctl -u etcd >error.log

vim error.log

If you redeploy, be sure to clear the leftover data first:

rm -rf /opt/cluster/etcd/wal/

rm -rf /opt/cluster/etcd/data/

rm -rf /opt/cluster/ssl/etcd/

4. Verify

This can be run from any of the machines.

ETCDCTL_API=3 /usr/local/bin/etcdctl \
  --cacert=/opt/cluster/ssl/rootca/rootca.pem \
  --cert=/opt/cluster/ssl/etcd/etcd.pem \
  --key=/opt/cluster/ssl/etcd/etcd-key.pem \
  --endpoints="https://10.154.0.111:2379,https://10.154.0.112:2379,https://10.154.0.113:2379" \
  endpoint health --write-out=table

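IV. Deploy the API server

This subsection follows an existing blog post; many thanks to its author for clearing things up.

4.1 Overall plan

The earlier section covered the single-master environment; this is the full target architecture.

| Node | IP | Also hosts |
|---|---|---|
| k8s-master01 | 10.154.0.111 | etcd01 |
| k8s-master02 | 10.154.0.115 | |
| k8s-node01 | 10.154.0.112 | etcd02 |
| k8s-node02 | 10.154.0.113 | etcd03 |
| k8s-node03 | 10.154.0.114 | |
| k8s-lb01 | 10.154.0.116 | |
| k8s-lb02 | 10.154.0.117 | |
| VIP | 10.154.0.118 | |

The plan uses two master nodes, three worker nodes and two load balancers, seven machines in total.

The load balancers are built with Keepalived + LVS and need one virtual IP address.

The etcd cluster shares one master and two worker nodes.

I mention all of this here mainly because the kube-apiserver certificate needs these IP addresses.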

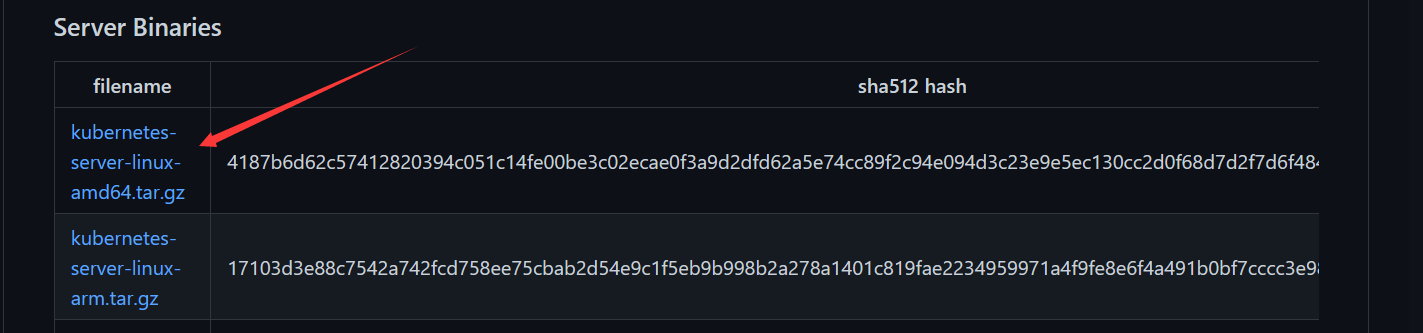

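4.2 Download the API server

I'm using v1.22.2, the latest version at the time of writing.

Download: official download page

Note: the link lists many packages. The Server Binaries package alone is enough; it contains the binaries for both master and worker nodes.

4.3 Issue certificates

1. Upload the tools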

cd ~/tools/

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kube-apiserver kube-scheduler kube-controller-manager /usr/local/bin

cp kubectl /usr/local/bin

2. Generate certificates

cd /opt/cluster/ssl

cat > kubernetes/kube-apiserver-csr.json << "EOF"

{

"CN": "kube-apiserver",

"hosts": [

"127.0.0.1",

"10.154.0.111",

"10.154.0.115",

"10.154.0.116",

"10.154.0.117",

"10.154.0.118",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

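cfssl gencert \
  -ca=rootca/rootca.pem \
  -ca-key=rootca/rootca-key.pem \
  --config=cfssl-conf.json \
  -profile=common kubernetes/kube-apiserver-csr.json | cfssljson -bare kubernetes/kube-apiserver

Worker nodes get their certificates issued through the API server rather than signed by hand, so the hosts list must contain every IP in the plan except those of the worker nodes.

10.96.0.1 is the first IP of the service cluster IP range.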
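Because every master, load balancer and VIP address has to appear in `hosts`, it can help to build that array from a list instead of editing JSON by hand. A minimal sketch, with `csr_hosts_json` as a hypothetical helper name:

```shell
# Hypothetical helper: print the arguments as a JSON array of strings.
csr_hosts_json() {
  out=""
  for h in "$@"; do
    # Add a comma before every element except the first
    out="${out}${out:+,}\"${h}\""
  done
  printf '[%s]\n' "$out"
}

csr_hosts_json 127.0.0.1 10.154.0.111 10.96.0.1
# prints: ["127.0.0.1","10.154.0.111","10.96.0.1"]
```

The output can be pasted into the `"hosts"` field of the CSR file. The helper does no JSON escaping, which is fine for IPs and DNS names but not for arbitrary strings.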

4.4 Deploy the API server

1. Generate token.csv

cd /opt/cluster/ssl

echo $(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap" > kubernetes/kube-apiserver.token.csv

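Worker nodes need this when they request certificates. The line registers a low-privilege user, kubelet-bootstrap, which the worker nodes use to ask the API server for a certificate.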
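Each line of the token file has the form token,user,uid,"group". The sketch below builds such a line in two steps. `new_token` and `token_csv_line` are hypothetical helper names, and `od -tx1` is used instead of the `-t x` above so that the hex digits come out byte by byte.

```shell
# Hypothetical helper: 16 random bytes as 32 lowercase hex characters.
new_token() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Hypothetical helper: format one token file line for the bootstrap user.
# $1 = token
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

token_csv_line "$(new_token)"
```

Redirecting the last command to `kubernetes/kube-apiserver.token.csv` would give a file in the same format as the one-liner above.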

2. Write the systemd unit file

cat > /usr/lib/systemd/system/kube-apiserver.service << "EOF"

[Unit]

Description=Kubernetes:Apiserver

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/kube-apiserver \
  --runtime-config=api/all=true \
  --anonymous-auth=false \
  --bind-address=0.0.0.0 \
  --advertise-address=10.154.0.111 \
  --secure-port=6443 \
  --tls-cert-file=/opt/cluster/ssl/kubernetes/kube-apiserver.pem \
  --tls-private-key-file=/opt/cluster/ssl/kubernetes/kube-apiserver-key.pem \
  --client-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --etcd-cafile=/opt/cluster/ssl/rootca/rootca.pem \
  --etcd-certfile=/opt/cluster/ssl/etcd/etcd.pem \
  --etcd-keyfile=/opt/cluster/ssl/etcd/etcd-key.pem \
  --etcd-servers=https://10.154.0.111:2379,https://10.154.0.112:2379,https://10.154.0.113:2379 \
  --kubelet-client-certificate=/opt/cluster/ssl/kubernetes/kube-apiserver.pem \
  --kubelet-client-key=/opt/cluster/ssl/kubernetes/kube-apiserver-key.pem \
  --service-account-key-file=/opt/cluster/ssl/rootca/rootca-key.pem \
  --service-account-signing-key-file=/opt/cluster/ssl/rootca/rootca-key.pem \
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \
  --enable-bootstrap-token-auth=true \
  --token-auth-file=/opt/cluster/ssl/kubernetes/kube-apiserver.token.csv \
  --allow-privileged=true \
  --service-cluster-ip-range=10.96.0.0/16 \
  --service-node-port-range=30000-50000 \
  --authorization-mode=RBAC,Node \
  --enable-aggregator-routing=true \
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/opt/cluster/log/kube-apiserver/audit.log \
  --logtostderr=false \
  --v=2 \
  --log-dir=/opt/cluster/log/kube-apiserver

[Install]

WantedBy=multi-user.target

EOF

3. Start

systemctl daemon-reload &&

systemctl enable --now kube-apiserver.service &&

systemctl status kube-apiserver.service

If it fails, check the journal:

journalctl -u kube-apiserver > error.log

vim error.log

V. Deploy kubectl

kubectl is the command-line tool for managing the cluster.

1. Generate the certificate

cd /opt/cluster/ssl

cat > kubernetes/kubectl-csr.json << "EOF"

{

"CN": "clusteradmin",

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

cfssl gencert -ca=rootca/rootca.pem \
  -ca-key=rootca/rootca-key.pem \
  --config=cfssl-conf.json \
  -profile=common kubernetes/kubectl-csr.json | cfssljson -bare kubernetes/kubectl

2. Generate the kubeconfig file

I deployed the single-master cluster first and have no load balancer yet, so --server points at k8s-master01 here. Once a load balancer is in place, use the VIP address instead.

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true \
  --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kubectl.kubeconfig

kubectl config set-credentials clusteradmin \
  --client-certificate=/opt/cluster/ssl/kubernetes/kubectl.pem \
  --client-key=/opt/cluster/ssl/kubernetes/kubectl-key.pem \
  --embed-certs=true \
  --kubeconfig=kubernetes/kubectl.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=clusteradmin \
  --kubeconfig=kubernetes/kubectl.kubeconfig

kubectl config use-context default \
  --kubeconfig=kubernetes/kubectl.kubeconfig

mkdir -p /root/.kube

cp /opt/cluster/ssl/kubernetes/kubectl.kubeconfig /root/.kube/config

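kubectl is a command-line client, not a systemd service, so it has no journal to inspect. Errors are printed straight to the terminal; adding -v=6 to a kubectl command shows more detail about the requests it makes.

If you redeploy, delete the related certificates first: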

rm -rf /opt/cluster/ssl/kubernetes/kubectl*

rm -rf /opt/cluster/ssl/kubernetes/kube-api*

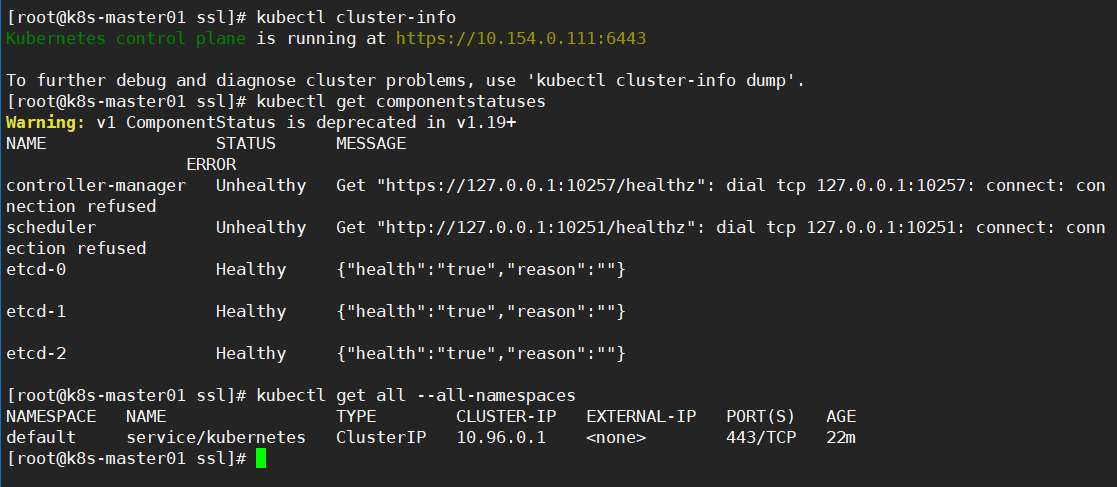

3. Get cluster information

kubectl cluster-info

kubectl get cs

kubectl get all --all-namespaces

# Command completion (log out of the shell and back in for it to take effect)

kubectl completion bash > /usr/share/bash-completion/completions/kubectl

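VI. Deploy controller-manager

This subsection follows an existing blog post; many thanks to its author for clearing things up.

6.1 Issue the certificate

The certificate is issued for the master nodes.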

cd /opt/cluster/ssl

cat > kubernetes/kube-controller-manager-csr.json << "EOF"

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"10.154.0.111",

"10.154.0.115"

],

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "KUBERNETES",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

cfssl gencert -ca=rootca/rootca.pem \
  -ca-key=rootca/rootca-key.pem \
  --config=cfssl-conf.json \
  -profile=common kubernetes/kube-controller-manager-csr.json | cfssljson -bare kubernetes/kube-controller-manager

6.2 Deploy controller-manager

1. Write the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

kubectl config set-credentials kube-controller-manager --client-certificate=kubernetes/kube-controller-manager.pem \
  --client-key=kubernetes/kube-controller-manager-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-controller-manager \
  --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-controller-manager.kubeconfig
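The same four `kubectl config` commands come back for kube-scheduler and kube-proxy with only the component name changed. One way to avoid retyping them is a small generator that prints the commands for review. `kubeconfig_cmds` is a hypothetical helper; it assumes the certificate files are named after the component, as they are for these three components in this post, and it only prints text.

```shell
# Hypothetical helper: print (not run) the four kubectl commands for one component.
# $1 = component name (also the user and certificate base name), $2 = API server URL
kubeconfig_cmds() {
  ssl=/opt/cluster/ssl
  cfg="kubernetes/$1.kubeconfig"
  echo "kubectl config set-cluster kubernetes --certificate-authority=$ssl/rootca/rootca.pem --embed-certs=true --server=$2 --kubeconfig=$cfg"
  echo "kubectl config set-credentials $1 --client-certificate=kubernetes/$1.pem --client-key=kubernetes/$1-key.pem --embed-certs=true --kubeconfig=$cfg"
  echo "kubectl config set-context default --cluster=kubernetes --user=$1 --kubeconfig=$cfg"
  echo "kubectl config use-context default --kubeconfig=$cfg"
}

# Review the output first; nothing is executed here
kubeconfig_cmds kube-controller-manager https://10.154.0.111:6443
```

Once the output looks right, piping it to `sh` from `/opt/cluster/ssl` would run it, for example `cd /opt/cluster/ssl && kubeconfig_cmds kube-scheduler https://10.154.0.111:6443 | sh`.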

2. Write the systemd unit file

cat > /usr/lib/systemd/system/kube-controller-manager.service << "EOF"

[Unit]

Description=Kubernetes:Kube-Controller-Manager

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/kube-controller-manager \
  --cluster-name=kubernetes \
  --secure-port=10257 \
  --bind-address=127.0.0.1 \
  --service-cluster-ip-range=10.96.0.0/16 \
  --allocate-node-cidrs=true \
  --cluster-cidr=10.97.0.0/16 \
  --leader-elect=true \
  --controllers=*,bootstrapsigner,tokencleaner \
  --kubeconfig=/opt/cluster/ssl/kubernetes/kube-controller-manager.kubeconfig \
  --tls-cert-file=/opt/cluster/ssl/kubernetes/kube-controller-manager.pem \
  --tls-private-key-file=/opt/cluster/ssl/kubernetes/kube-controller-manager-key.pem \
  --cluster-signing-cert-file=/opt/cluster/ssl/rootca/rootca.pem \
  --cluster-signing-key-file=/opt/cluster/ssl/rootca/rootca-key.pem \
  --cluster-signing-duration=87600h0m0s \
  --use-service-account-credentials=true \
  --root-ca-file=/opt/cluster/ssl/rootca/rootca.pem \
  --service-account-private-key-file=/opt/cluster/ssl/rootca/rootca-key.pem \
  --logtostderr=false \
  --v=2 \
  --log-dir=/opt/cluster/log/kube-controller-manager

[Install]

WantedBy=multi-user.target

EOF

3. Start

systemctl daemon-reload &&

systemctl enable --now kube-controller-manager.service &&

systemctl status kube-controller-manager.service

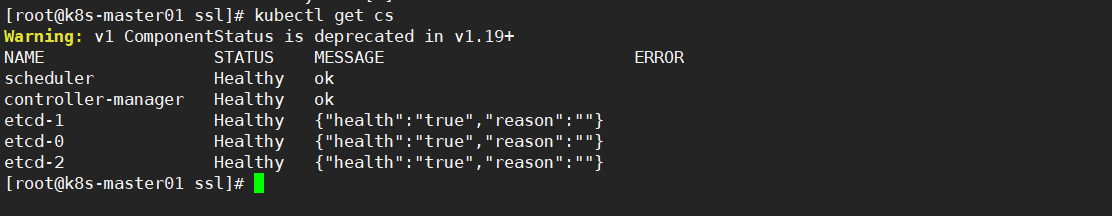

Verify

kubectl get componentstatuses

If it fails, check the journal:

journalctl -u kube-controller-manager > error.log

vim error.log

VII. Deploy scheduler

7.1 Issue the certificate

The certificate is issued for the master nodes.

cd /opt/cluster/ssl

cat > kubernetes/kube-scheduler-csr.json << "EOF"

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"10.154.0.111",

"10.154.0.115"

],

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "KUBERNETES",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

cfssl gencert \
  -ca=rootca/rootca.pem \
  -ca-key=rootca/rootca-key.pem \
  --config=cfssl-conf.json \
  -profile=common kubernetes/kube-scheduler-csr.json | cfssljson -bare kubernetes/kube-scheduler

7.2 Deploy kube-scheduler

1. Write the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kube-scheduler.kubeconfig

kubectl config set-credentials kube-scheduler --client-certificate=kubernetes/kube-scheduler.pem \
  --client-key=kubernetes/kube-scheduler-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-scheduler.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-scheduler \
  --kubeconfig=kubernetes/kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-scheduler.kubeconfig

2. Write the systemd unit file

cat > /usr/lib/systemd/system/kube-scheduler.service << "EOF"

[Unit]

Description=Kubernetes:Kube-Scheduler

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/kube-scheduler \
  --kubeconfig=/opt/cluster/ssl/kubernetes/kube-scheduler.kubeconfig \
  --address=127.0.0.1 \
  --leader-elect=true \
  --logtostderr=false \
  --v=2 \
  --log-dir=/opt/cluster/log/kube-scheduler

[Install]

WantedBy=multi-user.target

EOF

3. Start

systemctl daemon-reload &&

systemctl enable --now kube-scheduler.service &&

systemctl status kube-scheduler.service

Verify

kubectl get cs

If it fails, check the journal:

journalctl -u kube-scheduler > error.log

vim error.log

VIII. Deploy kubelet

This subsection follows an existing blog post; many thanks to its author for clearing things up.

1. Upload the tools

cd /root/tools/kubernetes/server/bin

cp kubelet kube-proxy /usr/local/bin

scp -r kubelet kube-proxy root@10.154.0.112:/usr/local/bin

scp -r kubelet kube-proxy root@10.154.0.113:/usr/local/bin

2. Write the kubeconfig file

cd /opt/cluster/ssl

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=$(awk -F "," '{print $1}' /opt/cluster/ssl/kubernetes/kube-apiserver.token.csv) \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

3. Write the kubelet.conf configuration file

cd /opt/cluster/ssl

cat > kubernetes/kubelet.conf << "EOF"

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 0
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/cluster/ssl/rootca/rootca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
healthzBindAddress: 127.0.0.1
healthzPort: 10248
rotateCertificates: true
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

EOF

4. Write the systemd unit file

cat > /usr/lib/systemd/system/kubelet.service << "EOF"

[Unit]

Description=Kubernetes:Kubelet

After=network.target network-online.target docker.service

Requires=docker.service

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/opt/cluster/ssl/kubernetes/kubelet-bootstrap.kubeconfig \
  --config=/opt/cluster/ssl/kubernetes/kubelet.conf \
  --kubeconfig=/opt/cluster/kubelet/kubelet.kubeconfig \
  --cert-dir=/opt/cluster/kubelet/ssl \
  --network-plugin=cni \
  --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 \
  --logtostderr=false \
  --v=2 \
  --log-dir=/opt/cluster/log/kubelet

[Install]

WantedBy=multi-user.target

EOF

5. Copy the certificates and configuration files to the other nodes

scp -r /opt/cluster/ssl root@10.154.0.112:/opt/cluster/

scp -r /opt/cluster/ssl root@10.154.0.113:/opt/cluster/

scp -r /usr/lib/systemd/system/kubelet.service root@10.154.0.112:/usr/lib/systemd/system/kubelet.service

scp -r /usr/lib/systemd/system/kubelet.service root@10.154.0.113:/usr/lib/systemd/system/kubelet.service

6. Start

Run on all nodes.

systemctl daemon-reload &&

systemctl enable --now kubelet.service &&

systemctl status kubelet.service

If it fails, check the journal:

journalctl -u kubelet > error.log

vim error.log

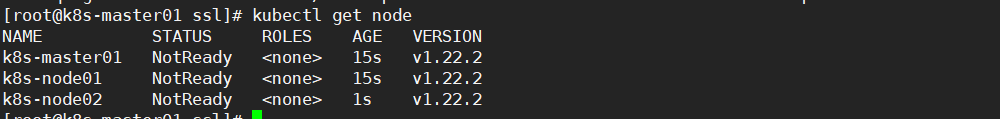

7. Approve the certificates

# List the certificate signing requests waiting for approval

kubectl get csr

# Approve a request

kubectl certificate approve <CSR_NAME>

kubectl get node
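With several nodes joining at once, copying each CSR name by hand gets tedious. One possible shortcut is to filter the `kubectl get csr` table for rows whose last column is Pending. `pending_csrs` is a hypothetical helper, and the table below is made-up sample data in the column layout I would expect from v1.22, so check the real output before relying on it.

```shell
# Hypothetical filter: print the names of CSRs whose last column is "Pending".
pending_csrs() {
  # NR > 1 skips the header row; $NF is the last column (CONDITION)
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Made-up sample standing in for real `kubectl get csr` output
printf '%s\n' \
  'NAME        AGE   SIGNERNAME                                    REQUESTOR           REQUESTEDDURATION   CONDITION' \
  'csr-aaaaa   10s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Pending' \
  'csr-bbbbb   5m    kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Approved,Issued' |
  pending_csrs
# prints: csr-aaaaa
```

Against a live cluster this could be chained as `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve` (`-r` is a GNU xargs option that skips the call when there is nothing to approve). Approving everything blindly is only reasonable in a lab like this one.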

IX. Deploy kube-proxy

1. Generate the certificate

cd /opt/cluster/ssl

cat > kubernetes/kube-proxy-csr.json << "EOF"

{

"CN": "system:kube-proxy",

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "KUBERNETES",

"OU": "tz"

}]

}

EOF

cd /opt/cluster/ssl

cfssl gencert \
  -ca=rootca/rootca.pem \
  -ca-key=rootca/rootca-key.pem \
  --config=cfssl-conf.json \
  -profile=common kubernetes/kube-proxy-csr.json | cfssljson -bare kubernetes/kube-proxy

2. Write the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=/opt/cluster/ssl/kubernetes/kube-proxy.pem \
  --client-key=/opt/cluster/ssl/kubernetes/kube-proxy-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-proxy.kubeconfig

3. Write the kube-proxy configuration file

cat > kubernetes/kube-proxy.conf << "EOF"

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: /opt/cluster/ssl/kubernetes/kube-proxy.kubeconfig
bindAddress: 0.0.0.0
clusterCIDR: "10.97.0.0/16"
healthzBindAddress: "0.0.0.0:10256"
metricsBindAddress: "0.0.0.0:10249"
mode: ipvs
ipvs:
  scheduler: "rr"

EOF

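4. Write the systemd unit file

Note that master01 is also deployed as a worker node here, so that it can be monitored like the other nodes and can run Pods. If you don't want Pods scheduled on the master, add a taint to it.

This unit is for master01, so --hostname-override is set to k8s-master01.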

cat > /usr/lib/systemd/system/kube-proxy.service << "EOF"

[Unit]

Description=Kubernetes:Kube-Proxy

After=network.target network-online.target

Wants=network-online.target

[Service]

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/kube-proxy \
  --config=/opt/cluster/ssl/kubernetes/kube-proxy.conf \
  --logtostderr=false \
  --v=2 \
  --log-dir=/opt/cluster/log/kube-proxy \
  --hostname-override=k8s-master01

[Install]

WantedBy=multi-user.target

EOF

5. Copy the certificates and configuration files to the other nodes

scp -r /opt/cluster/ssl 10.154.0.112:/opt/cluster/

scp -r /opt/cluster/ssl 10.154.0.113:/opt/cluster/

scp -r /usr/lib/systemd/system/kube-proxy.service root@10.154.0.112:/usr/lib/systemd/system/kube-proxy.service

scp -r /usr/lib/systemd/system/kube-proxy.service root@10.154.0.113:/usr/lib/systemd/system/kube-proxy.service

Then adjust the copied unit file on k8s-node01 and k8s-node02.

# On node01

vim /usr/lib/systemd/system/kube-proxy.service

...

--hostname-override=k8s-node01

...

# On node02

vim /usr/lib/systemd/system/kube-proxy.service

...

--hostname-override=k8s-node02

...
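The per-node edit only changes the value after `--hostname-override=`, so it can also be done without opening an editor. `set_hostname_override` below is a hypothetical helper shown on a single sample line; the pattern stops at the first space, so a trailing line-continuation backslash would be left alone.

```shell
# Hypothetical filter: rewrite the --hostname-override value in text from stdin.
# $1 = new hostname
set_hostname_override() {
  sed "s/--hostname-override=[^ ]*/--hostname-override=$1/"
}

printf '%s\n' '  --hostname-override=k8s-master01' | set_hostname_override k8s-node01
```

On each node the same substitution could be applied in place with `sed -i "s/--hostname-override=[^ ]*/--hostname-override=$(hostname)/" /usr/lib/systemd/system/kube-proxy.service`, assuming the hostnames were set as in section 1.2.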

6. Start

systemctl daemon-reload &&

systemctl enable --now kube-proxy.service &&

systemctl status kube-proxy.service

If it fails, check the journal:

journalctl -u kube-proxy > error.log

vim error.log

X. Deploy the network components

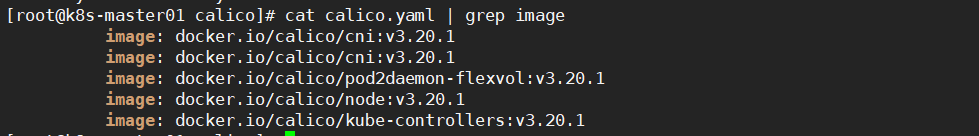

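10.1 Deploy the Calico plugin

This subsection follows an existing blog post; many thanks to its author for clearing things up.

The latest version at the time of writing is v3.20.

Download: official website

1. Edit calico.yaml

cd /opt/cluster/plugins/calico

# Around line 3878: uncomment these two lines if they are commented out, and set the value to the pod CIDR (the same range as --cluster-cidr)

vim calico.yaml

- name: CALICO_IPV4POOL_CIDR
  value: "10.97.0.0/16"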
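Upstream Calico manifests typically ship this variable commented out with a default of 192.168.0.0/16. Assuming your copy looks the same (do check it), the edit could be scripted instead of done in vim. `set_calico_cidr` is a hypothetical helper, and the two input lines are a sample, not the full file.

```shell
# Hypothetical filter: uncomment CALICO_IPV4POOL_CIDR and set its value.
# $1 = pod CIDR; assumes the default value line reads "192.168.0.0/16"
set_calico_cidr() {
  # Dropping "# " keeps the YAML indentation of both lines aligned
  sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
      -e 's|#   value: "192.168.0.0/16"|  value: "'"$1"'"|'
}

printf '%s\n' \
  '            # - name: CALICO_IPV4POOL_CIDR' \
  '            #   value: "192.168.0.0/16"' | set_calico_cidr 10.97.0.0/16
```

If the output looks right on the real file (`set_calico_cidr 10.97.0.0/16 < calico.yaml | grep -A1 CALICO_IPV4POOL_CIDR`), the result can be written to a new file and applied from there.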

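2. Apply the YAML file

kubectl apply -f calico.yaml

Calico runs as containers and needs four container images to be pulled.

If Calico fails to start, first try pulling those images manually with docker pull, to rule out a network problem as the cause.

3. Verify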

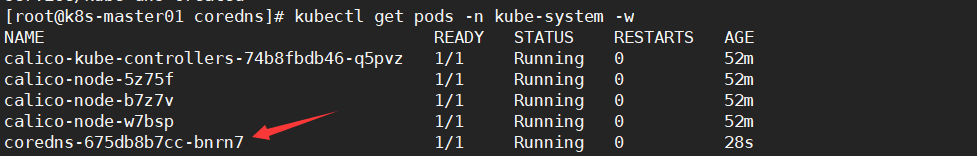

kubectl get pods -n kube-system

# -w watches for changes in real time

kubectl get pods -n kube-system -w

kubectl get node

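When everything is healthy, the Calico pods are Running and every node is Ready.

If something fails:

# Show the pod's events to troubleshoot (replace the pod name with your own)

kubectl describe pod -n kube-system calico-node-b7z7v

# Follow the Calico CNI log

tail -f /var/log/calico/cni/cni.log

If you redeploy, remove the Calico network environment first.

# Clean up the network environment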

kubectl delete -f calico.yaml

rm -rf /run/calico \
  /sys/fs/bpf/calico \
  /var/lib/calico \
  /var/log/calico \
  /opt/cluster/plugins/calico \
  /opt/cni/bin/calico

# Check whether any Calico pods are left over

kubectl get pods -n kube-system

# Force-delete a pod

kubectl delete pod <pod-name> -n kube-system --force --grace-period=0

10.2 The CoreDNS plugin

The latest version at the time of writing is v1.8.6.

Download: official website

1. Edit coredns.yaml

cd /opt/cluster/plugins/coredns

vim coredns.yaml

---
...
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {   # change "CLUSTER_DOMAIN" to "cluster.local" (the cluster domain) and "REVERSE_CIDRS" to "in-addr.arpa ip6.arpa" (used for reverse DNS lookups)
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . UPSTREAMNAMESERVER {   # change "UPSTREAMNAMESERVER" to "/etc/resolv.conf" (used for forward lookups)
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }STUBDOMAINS   # delete "STUBDOMAINS"; newer versions of the YAML file have this field (if it is absent, nothing needs doing)
---
...
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: CLUSTER_DNS_IP   # change "CLUSTER_DNS_IP" to "10.96.0.10", the address of the in-cluster DNS server; it must match clusterDNS in kubelet.conf

See also: Deploying CoreDNS
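The five placeholder edits described above can also be expressed as one `sed` filter. This is only a sketch: `fill_coredns_template` is a hypothetical name, the replacement values are the ones used in this post, and the input lines are an excerpt rather than the whole file.

```shell
# Hypothetical filter: fill in the CoreDNS template placeholders from stdin.
fill_coredns_template() {
  sed -e 's/CLUSTER_DOMAIN/cluster.local/' \
      -e 's/REVERSE_CIDRS/in-addr.arpa ip6.arpa/' \
      -e 's#UPSTREAMNAMESERVER#/etc/resolv.conf#' \
      -e 's/STUBDOMAINS//' \
      -e 's/CLUSTER_DNS_IP/10.96.0.10/'
}

# Excerpt of the template lines that carry placeholders
printf '%s\n' \
  '        kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {' \
  '        forward . UPSTREAMNAMESERVER {' \
  '    }STUBDOMAINS' \
  '  clusterIP: CLUSTER_DNS_IP' | fill_coredns_template
```

Writing the result to a new file, for example `fill_coredns_template < coredns.yaml > coredns.filled.yaml` (the output name is a placeholder), keeps the original template around for a later redeploy.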

2. Apply the YAML file

cd /opt/cluster/plugins/coredns

kubectl apply -f coredns.yaml

3. Verify

# -w watches for changes in real time

kubectl get pods -n kube-system -w

kubectl get node

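If something fails:

# Show the pod's events (replace the pod name with your own)

kubectl describe pod -n kube-system coredns-675db8b7cc-bnrn7

If you redeploy, remove the CoreDNS deployment first: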

kubectl delete -f coredns.yaml

That completes the single-master deployment. Follow-up posts will cover adding more master nodes and more worker nodes.