参考:《机器学习实战》- Machine Learning in Action

一、 基本思想

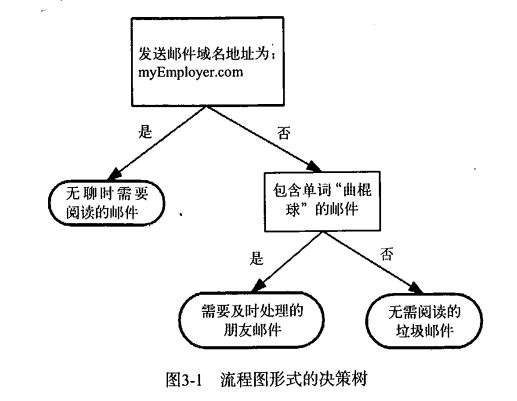

我们所熟知的决策树的形状可能如下:

使用决策树算法的目的就是生成类似于上图的分类效果。所以算法的主要步骤就是如何去选择结点。

划分数据集的最大原则是:将无序的数据变得更加有序。我们可以使用多种方法划分数据集,但是每种方法都有各自的优缺点。集合信息的度量方式称为香农熵。

伪代码如下:

检测数据集中的每个子项是否属于同一分类;

if so return 类标签;

else

寻找划分数据集的最好特征

划分数据集

创建分支节点

for 每个划分的子集

调用createBranch并增加返回结果到分支节点中

return 分支节点

一般而言,计算距离会采用欧式距离。

二、 代码

# -*- coding:utf8 -*-

import operator

from math import log

#计算信息熵

def calcShannonEnt(dataSet):

numEntries = len(dataSet)

labelCounts = {}

for featVec in dataSet:

currentLabel = featVec[-1]

if currentLabel not in labelCounts.keys():

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

shannonEnt = 0.0

for key in labelCounts:

prob = float(labelCounts[key])/numEntries

shannonEnt -= prob*log(prob, 2)

return shannonEnt

#按照给定特征划分数据集

def splitDataSet(dataSet, axis, value):

retDataSet = []

for featVec in dataSet:

if featVec[axis] == value:

reducedFeatVec = featVec[:axis]

reducedFeatVec.extend(featVec[axis+1:])

retDataSet.append(reducedFeatVec)

return retDataSet

#选择最好的数据集划分方式

def chooseBestFeatureToSplit(dataSet):

numFeatures = len(dataSet[0]) - 1

baseEntropy = calcShannonEnt(dataSet)

bestInfoGain = 0.0

bestFeature = -1

for i in range(numFeatures):

featList = [example[i] for example in dataSet]

uniqueVals = set(featList)

newEntropy = 0.0

for value in uniqueVals:

subDataSet = splitDataSet(dataSet, i, value)

prob = len(subDataSet)/float(len(dataSet))

newEntropy += prob * calcShannonEnt(subDataSet)

infoGain = baseEntropy - newEntropy

if (infoGain > bestInfoGain):

bestInfoGain = infoGain

bestFeature = i

return bestFeature

#构造决策树

def majorityCnt(classList):

classCount = {}

for vote in classList:

if vote not in classCount.keys():

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.items(),

key=lambda item:item[1], reverse=True)

return sortedClassCount[0][0]

def createTree(dataSet, labels):

classList = [example[-1] for example in dataSet]

if classList.count(classList[0]) == len(classList):

return classList[0]

if len(dataSet[0]) == 1:

return majorityCnt(classList)

bestFeat = chooseBestFeatureToSplit(dataSet)

bestFeatLabel = labels[bestFeat]

myTree = {bestFeatLabel:{}}

del labels[bestFeat]

featValues = [example[bestFeat] for example in dataSet]

uniqueVals = set(featValues)

for value in uniqueVals:

subLabels = labels[:]

myTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value), subLabels)

return myTree