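A pretrained model is a neural network that has already been trained on a large benchmark dataset such as ImageNet.

Here we take an existing model from PyTorch's torchvision.models module, ResNet, and use it to predict the class of a single image.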

1. Download the resources

Download any picture of a dog from the web.

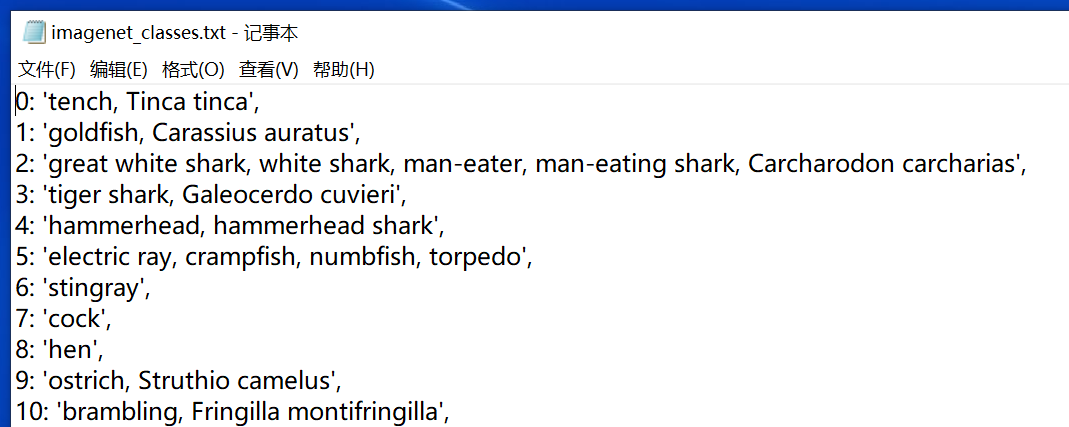

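For the class labels (IMAGENET1000), copy the list from https://blog.csdn.net/weixin_34304013/article/details/93708121 into an empty .txt file (saved below as imagenet_classes.txt) and delete the outermost {}.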

2. Load the pretrained ResNet model with TorchVision

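2.1 Import the models module from torchvision and list the available models and network architectures (the exact list depends on the installed torchvision version).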

```python
from torchvision import models
dir(models)
```

```
['AlexNet', 'DenseNet', 'GoogLeNet', 'GoogLeNetOutputs', 'Inception3', 'InceptionOutputs',
 'MNASNet', 'MobileNetV2', 'ResNet', 'ShuffleNetV2', 'SqueezeNet', 'VGG',
 '_GoogLeNetOutputs', '_InceptionOutputs', '__builtins__', '__cached__', '__doc__',
 '__file__', '__loader__', '__name__', '__package__', '__path__', '__spec__', '_utils',
 'alexnet', 'densenet', 'densenet121', 'densenet161', 'densenet169', 'densenet201',
 'detection', 'googlenet', 'inception', 'inception_v3', 'mnasnet', 'mnasnet0_5',
 'mnasnet0_75', 'mnasnet1_0', 'mnasnet1_3', 'mobilenet', 'mobilenet_v2', 'quantization',
 'resnet', 'resnet101', 'resnet152', 'resnet18', 'resnet34', 'resnet50',
 'resnext101_32x8d', 'resnext50_32x4d', 'segmentation', 'shufflenet_v2_x0_5',
 'shufflenet_v2_x1_0', 'shufflenet_v2_x1_5', 'shufflenet_v2_x2_0', 'shufflenetv2',
 'squeezenet', 'squeezenet1_0', 'squeezenet1_1', 'utils', 'vgg', 'vgg11', 'vgg11_bn',
 'vgg13', 'vgg13_bn', 'vgg16', 'vgg16_bn', 'vgg19', 'vgg19_bn', 'video',
 'wide_resnet101_2', 'wide_resnet50_2']
```

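Note: the capitalized names are Python classes that implement many popular models. Their architectures differ, that is, they arrange the operations between input and output differently.

Most lowercase names are functions that return models instantiated from these classes, sometimes with different sets of parameters. For example, `resnet101` returns a ResNet instance with 101 layers, `resnet18` one with 18 layers, and so on. A few lowercase entries, such as `detection`, `segmentation`, `video` and `quantization`, are subpackages rather than functions.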
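The "101" in `resnet101` counts the layers that carry weights. The sketch below reproduces that count in plain Python. It assumes the per-stage block counts of the standard ResNet variants (for ResNet-101, 3, 4, 23 and 3 blocks in `layer1` to `layer4`, which matches the architecture printed in 2.2), three convolutions per Bottleneck block and two per BasicBlock, and the usual convention of counting the stem convolution and the final fully connected layer but not the 1×1 `downsample` shortcut convolutions. `RESNET_CONFIGS` and `weighted_depth` are illustrative names, not part of torchvision.

```python
# Assumed block counts per stage (layer1..layer4) and convolutions per block.
RESNET_CONFIGS = {
    "resnet18": ([2, 2, 2, 2], 2),    # BasicBlock: two 3x3 convs
    "resnet34": ([3, 4, 6, 3], 2),
    "resnet50": ([3, 4, 6, 3], 3),    # Bottleneck: 1x1, 3x3, 1x1 convs
    "resnet101": ([3, 4, 23, 3], 3),
    "resnet152": ([3, 8, 36, 3], 3),
}


def weighted_depth(blocks_per_stage, convs_per_block):
    """Stem conv1 + convolutions inside the residual blocks + final fc layer.

    Shortcut (downsample) 1x1 convolutions are not counted, by convention.
    """
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


for name, (blocks, convs) in RESNET_CONFIGS.items():
    print(name, weighted_depth(blocks, convs))
```

For ResNet-101 this gives 1 + (3 + 4 + 23 + 3) × 3 + 1 = 101, which is where the name comes from.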

2.2 Load the pretrained model and create an instance

```python
resnet = models.resnet101(pretrained=True)
```

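Note: the downloaded weights file is cached in a directory under the user's home folder; here it was `C:\Users\Dell\.cache\torch\hub\checkpoints\resnet101-5d3b4d8f.pth`. In torchvision 0.13 and later, `pretrained=True` is deprecated; the equivalent call is `models.resnet101(weights=models.ResNet101_Weights.IMAGENET1K_V1)`.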

Printing the model (for example with `print(resnet)`) shows the network architecture:

1 ResNet( 2 (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False) 3 (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 4 (relu): ReLU(inplace=True) 5 (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False) 6 (layer1): Sequential( 7 (0): Bottleneck( 8 (conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) 9 (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 10 (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 11 (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 12 (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 13 (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 14 (relu): ReLU(inplace=True) 15 (downsample): Sequential( 16 (0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 17 (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 18 ) 19 ) 20 (1): Bottleneck( 21 (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) 22 (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 23 (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 24 (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 25 (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 26 (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 27 (relu): ReLU(inplace=True) 28 ) 29 (2): Bottleneck( 30 (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False) 31 (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 32 (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 33 (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True) 34 (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 35 (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 36 (relu): ReLU(inplace=True) 37 ) 38 ) 39 (layer2): Sequential( 40 (0): Bottleneck( 41 (conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False) 42 (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 43 (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False) 44 (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 45 (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False) 46 (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 47 (relu): ReLU(inplace=True) 48 (downsample): Sequential( 49 (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False) 50 (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 51 ) 52 ) 53 (1): Bottleneck( 54 (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False) 55 (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 56 (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 57 (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 58 (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False) 59 (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 60 (relu): ReLU(inplace=True) 61 ) 62 (2): Bottleneck( 63 (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False) 64 (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 65 (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 66 (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 67 (conv3): Conv2d(128, 512, 
kernel_size=(1, 1), stride=(1, 1), bias=False) 68 (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 69 (relu): ReLU(inplace=True) 70 ) 71 (3): Bottleneck( 72 (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False) 73 (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 74 (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 75 (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 76 (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False) 77 (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 78 (relu): ReLU(inplace=True) 79 ) 80 ) 81 (layer3): Sequential( 82 (0): Bottleneck( 83 (conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 84 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 85 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False) 86 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 87 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 88 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 89 (relu): ReLU(inplace=True) 90 (downsample): Sequential( 91 (0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False) 92 (1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 93 ) 94 ) 95 (1): Bottleneck( 96 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 97 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 98 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 99 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 100 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 101 
(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 102 (relu): ReLU(inplace=True) 103 ) 104 (2): Bottleneck( 105 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 106 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 107 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 108 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 109 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 110 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 111 (relu): ReLU(inplace=True) 112 ) 113 (3): Bottleneck( 114 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 115 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 116 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 117 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 118 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 119 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 120 (relu): ReLU(inplace=True) 121 ) 122 (4): Bottleneck( 123 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 124 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 125 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 126 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 127 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 128 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 129 (relu): ReLU(inplace=True) 130 ) 131 (5): Bottleneck( 132 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 133 (bn1): 
BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 134 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 135 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 136 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 137 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 138 (relu): ReLU(inplace=True) 139 ) 140 (6): Bottleneck( 141 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 142 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 143 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 144 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 145 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 146 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 147 (relu): ReLU(inplace=True) 148 ) 149 (7): Bottleneck( 150 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 151 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 152 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 153 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 154 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 155 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 156 (relu): ReLU(inplace=True) 157 ) 158 (8): Bottleneck( 159 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 160 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 161 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 162 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True) 163 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 164 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 165 (relu): ReLU(inplace=True) 166 ) 167 (9): Bottleneck( 168 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 169 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 170 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 171 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 172 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 173 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 174 (relu): ReLU(inplace=True) 175 ) 176 (10): Bottleneck( 177 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 178 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 179 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 180 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 181 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 182 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 183 (relu): ReLU(inplace=True) 184 ) 185 (11): Bottleneck( 186 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 187 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 188 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 189 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 190 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 191 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 192 (relu): ReLU(inplace=True) 193 ) 194 (12): 
Bottleneck( 195 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 196 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 197 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 198 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 199 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 200 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 201 (relu): ReLU(inplace=True) 202 ) 203 (13): Bottleneck( 204 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 205 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 206 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 207 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 208 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 209 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 210 (relu): ReLU(inplace=True) 211 ) 212 (14): Bottleneck( 213 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 214 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 215 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 216 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 217 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 218 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 219 (relu): ReLU(inplace=True) 220 ) 221 (15): Bottleneck( 222 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 223 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 224 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 
1), padding=(1, 1), bias=False) 225 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 226 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 227 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 228 (relu): ReLU(inplace=True) 229 ) 230 (16): Bottleneck( 231 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 232 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 233 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 234 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 235 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 236 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 237 (relu): ReLU(inplace=True) 238 ) 239 (17): Bottleneck( 240 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 241 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 242 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 243 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 244 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 245 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 246 (relu): ReLU(inplace=True) 247 ) 248 (18): Bottleneck( 249 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 250 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 251 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 252 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 253 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 254 (bn3): BatchNorm2d(1024, eps=1e-05, 
momentum=0.1, affine=True, track_running_stats=True) 255 (relu): ReLU(inplace=True) 256 ) 257 (19): Bottleneck( 258 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 259 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 260 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 261 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 262 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 263 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 264 (relu): ReLU(inplace=True) 265 ) 266 (20): Bottleneck( 267 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 268 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 269 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 270 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 271 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 272 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 273 (relu): ReLU(inplace=True) 274 ) 275 (21): Bottleneck( 276 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 277 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 278 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 279 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 280 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 281 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 282 (relu): ReLU(inplace=True) 283 ) 284 (22): Bottleneck( 285 (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False) 286 (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, 
affine=True, track_running_stats=True) 287 (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 288 (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 289 (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False) 290 (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 291 (relu): ReLU(inplace=True) 292 ) 293 ) 294 (layer4): Sequential( 295 (0): Bottleneck( 296 (conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False) 297 (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 298 (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False) 299 (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 300 (conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False) 301 (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 302 (relu): ReLU(inplace=True) 303 (downsample): Sequential( 304 (0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False) 305 (1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 306 ) 307 ) 308 (1): Bottleneck( 309 (conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False) 310 (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 311 (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 312 (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 313 (conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False) 314 (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 315 (relu): ReLU(inplace=True) 316 ) 317 (2): Bottleneck( 318 (conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False) 319 (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True) 320 (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False) 321 (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 322 (conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False) 323 (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True) 324 (relu): ReLU(inplace=True) 325 ) 326 ) 327 (avgpool): AdaptiveAvgPool2d(output_size=(1, 1)) 328 (fc): Linear(in_features=2048, out_features=1000, bias=True) 329 )
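The printout lists each layer's kernel size, stride and padding, which is enough to trace the spatial size of a 224×224 input through the network. A plain-Python sketch, assuming the usual output-size formula floor((n + 2·padding − kernel) / stride) + 1 for dilation 1 and `ceil_mode=False` (as printed); `conv_out` is a hypothetical helper name, and the channel counts are read off the printout:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one spatial axis of a conv/pool layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
trace = [("input", 3, size)]

size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1: 7x7, stride 2, padding 3
trace.append(("conv1", 64, size))
size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool: 3x3, stride 2, padding 1
trace.append(("maxpool", 64, size))

# In each stage only block (0) can shrink the map: its 3x3 conv2 has stride 2 in
# layer2..layer4 and stride 1 in layer1. The remaining blocks keep the size.
stages = [("layer1", 256, 1), ("layer2", 512, 2), ("layer3", 1024, 2), ("layer4", 2048, 2)]
for name, channels, stride in stages:
    size = conv_out(size, kernel=3, stride=stride, padding=1)
    trace.append((name, channels, size))

trace.append(("avgpool", 2048, 1))  # AdaptiveAvgPool2d(output_size=(1, 1))

for name, channels, s in trace:
    print(f"{name:8} {channels:5} x {s} x {s}")
```

The trace ends at 2048×7×7 after `layer4`; averaging each channel down to 1×1 leaves 2048 numbers, which is why `fc` has `in_features=2048`.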

2.3 Image preprocessing

```python
from torchvision import transforms
preprocess = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225]
    )])
```

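Note: the input image is preprocessed with `transforms` from TorchVision.

`preprocess`: a single transform that chains (composes) all the conversions applied to the input image, in order.

`transforms.Resize(256)`: resizes the image so that its shorter side is 256 pixels; the longer side is scaled to keep the aspect ratio, so only a square image ends up as 256×256.

`transforms.CenterCrop(224)`: crops a 224×224 region from the center of the image.

`transforms.ToTensor()`: converts the image to a PyTorch tensor of shape (channels, height, width) and scales pixel values from 0 to 255 down to 0.0 to 1.0.

`transforms.Normalize(...)`: normalizes each color channel by subtracting the given mean and dividing by the given standard deviation. These are the ImageNet statistics that the pretrained model expects its inputs to be normalized with.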
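To make the size changes concrete, here is a plain-Python sketch of the arithmetic behind `Resize(256)`, `CenterCrop(224)` and `Normalize` for a hypothetical 640×480 photo. The helper names are made up, and the rounding rules are assumptions modeled on torchvision's behavior; exact pixel offsets may differ between versions.

```python
def resize_shorter_side(width, height, size=256):
    """(width, height) after Resize(size) with an int size: the shorter side becomes
    `size` and the longer side is scaled to keep the aspect ratio (truncated with int())."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


def center_crop_box(width, height, crop=224):
    """(left, top, right, bottom) of a centered crop x crop window."""
    left = int(round((width - crop) / 2.0))
    top = int(round((height - crop) / 2.0))
    return left, top, left + crop, top + crop


def normalize(value, mean, std):
    """What Normalize does to one value of one channel: (x - mean) / std."""
    return (value - mean) / std


w, h = resize_shorter_side(640, 480)   # hypothetical 640x480 photo -> (341, 256)
box = center_crop_box(w, h)            # -> (58, 16, 282, 240), a 224x224 window

# ToTensor has already mapped pixel values 0..255 to 0.0..1.0; here the red channel
# of a white pixel and of a black pixel, using the red-channel mean/std from above.
white_r = normalize(255 / 255, mean=0.485, std=0.229)
black_r = normalize(0 / 255, mean=0.485, std=0.229)
print((w, h), box, round(white_r, 3), round(black_r, 3))  # (341, 256) (58, 16, 282, 240) 2.249 -2.118
```

After normalization the values are roughly centered on 0 instead of lying in 0.0 to 1.0, which is the input range the network saw during training.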

2.4 Load the image and preprocess it into the input format the model expects

```python
from PIL import Image
img = Image.open("C:/Users/Dell/Pictures/dog.jpg").convert("RGB")  # make sure the image has 3 channels
img
```

In a Jupyter notebook, the bare `img` on the last line displays the picture (the dog image from step 1).

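Note: PIL (Python Imaging Library) provides powerful image-processing functionality from Python. Today it is maintained as the Pillow fork, which is still imported as `PIL`.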

```python
import torch
img_t = preprocess(img)
batch_t = torch.unsqueeze(img_t, 0)
```

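Note: `torch.unsqueeze(img_t, 0)` inserts a new dimension at position 0. A single image tensor has only 3 dimensions (channels, height, width), but the model expects a 4-dimensional input, that is, a batch of images rather than a single image.

After this step, `batch_t` also represents a batch; it just contains only one image.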
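A short sketch of adding the batch dimension, using a random tensor in place of a real preprocessed image (an assumption made so the example runs without an image file). It also shows `torch.stack`, one way to build a batch from several images of the same size:

```python
import torch

# Stand-in for a preprocessed image: 3 channels x 224 x 224 (random values, illustration only).
img_t = torch.rand(3, 224, 224)

# Three equivalent ways to add a leading batch dimension of size 1.
batch_a = torch.unsqueeze(img_t, 0)
batch_b = img_t.unsqueeze(0)
batch_c = img_t[None]
print(batch_a.shape)  # torch.Size([1, 3, 224, 224])

# Several same-sized images are batched by stacking them along a new dimension 0.
batch_5 = torch.stack([torch.rand(3, 224, 224) for _ in range(5)])
print(batch_5.shape)  # torch.Size([5, 3, 224, 224])
```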

Check the shapes of `img_t` and `batch_t`:

```python
img_t.size()
# torch.Size([3, 224, 224])
```

```python
batch_t.size()
# torch.Size([1, 3, 224, 224])
```

2.5 Model inference

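Now use the pretrained model to see what it thinks the image shows. First put the model in evaluation mode with `eval()`, then run inference. In evaluation mode the BatchNorm layers use the running statistics stored during training instead of the statistics of the current batch (dropout, in models that have it, is also disabled).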

```python
resnet.eval()
with torch.no_grad():  # gradients are not needed for inference
    out = resnet(batch_t)
out.size()
```

```
torch.Size([1, 1000])
```

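Note: `out` is a 2-D tensor with 1 row and 1000 columns.

As mentioned above, the model takes a batch of images as input; with 5 input images, `out` would have 5 rows and 1000 columns, one column per class.

So each row corresponds to one image and each column to one of the 1000 classes. The 1000 values in a row are the model's raw, unnormalized scores (logits) for that image: the larger a value, the more likely the model considers that class. They are converted into probabilities further below.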
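To check the shape claim without downloading ResNet-101, the sketch below feeds a batch of 5 random images through a tiny hypothetical stand-in model that only reproduces the (N, 3, H, W) to (N, 1000) shape contract. Its scores are meaningless; only the shapes matter:

```python
import torch
from torch import nn

# Hypothetical stand-in for ResNet-101: it only mimics the input/output shapes
# (N, 3, H, W) -> (N, 1000); it is NOT the real network.
stand_in = nn.Sequential(
    nn.AdaptiveAvgPool2d((1, 1)),  # (N, 3, H, W) -> (N, 3, 1, 1)
    nn.Flatten(),                  # -> (N, 3)
    nn.Linear(3, 1000),            # -> (N, 1000): one score per class
)
stand_in.eval()

batch = torch.rand(5, 3, 224, 224)  # five random "images"
with torch.no_grad():
    out = stand_in(batch)
print(out.shape)  # torch.Size([5, 1000])

# Row i holds the 1000 class scores for image i.
scores_for_image_2 = out[2]
print(scores_for_image_2.shape)  # torch.Size([1000])
```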

Next, use the class-label file downloaded at the start, imagenet_classes.txt, and read the labels from it into a list:

```python
with open('C:/Users/Dell/Desktop/imagenet_classes.txt') as f:
    classes = [line.strip() for line in f.readlines()]
```

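Note: `classes` is a list of 1000 class-name strings (the ImageNet-1k classification dataset the model was trained on has 1000 classes).

A label's line number in the file is its class index, so the order of the lines must not be changed.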
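Because the label file keeps the dictionary syntax (each line looks like `207: 'golden retriever',`), the names read with `line.strip()` still contain the index and the quotes, as the outputs below show. If clean names are wanted, one option is to parse the text as a Python dict literal. A sketch, assuming the file is exactly the dictionary body with the outer `{}` removed; `parse_imagenet_labels` is a hypothetical helper:

```python
import ast


def parse_imagenet_labels(text):
    """Parse dict-style label lines such as "207: 'golden retriever'," into a list of names.

    The outer braces were removed when the file was created, so they are added back
    and the text is evaluated safely as a Python dict literal {index: name}.
    """
    mapping = ast.literal_eval("{" + text + "}")
    if sorted(mapping) != list(range(len(mapping))):
        raise ValueError("class indices must run from 0 to N-1 without gaps")
    # List position == class index, so the result can be indexed like `classes`.
    return [mapping[i] for i in range(len(mapping))]


sample = """0: 'tench, Tinca tinca',
1: 'goldfish, Carassius auratus',
2: 'great white shark, white shark, man-eater, man-eating shark, Carcharodon carcharias',"""
names = parse_imagenet_labels(sample)
print(names[1])  # goldfish, Carassius auratus
```

With the real file, `classes = parse_imagenet_labels(f.read())` inside the same `with open(...)` block should give `classes[207] == 'golden retriever'`, assuming the copied list is the standard ImageNet-1k mapping.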

Next, find the position of the largest score in the output `out`; the index of that position is the predicted class.

```python
_, index = torch.max(out, 1)
```

```python
index
# tensor([207])
```

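Note: `torch.max(out, 1)` returns, for each row of the 2-D tensor `out`, the maximum value and its index. `index` is a 1-D tensor holding the index of the maximum of each row, one entry per image.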
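What `torch.max(out, 1)` computes for the index can be sketched in plain Python on a made-up 2×4 score matrix (two images, four classes). `argmax_per_row` is an illustrative helper; in this sketch ties go to the first index:

```python
def argmax_per_row(scores):
    """Index of the largest value in each row of a 2-D list (ties: first index)."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


# Toy 2 x 4 score matrix: 2 "images", 4 "classes" (made-up numbers).
toy_out = [
    [0.1, 2.5, -1.0, 0.3],
    [1.2, 0.0, 0.4, 3.3],
]
print(argmax_per_row(toy_out))  # [1, 3]
```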

Next, convert the scores into probabilities, expressed as percentages.

```python
percentage = torch.nn.functional.softmax(out, dim=1)[0] * 100
classes[index[0]], percentage[index[0]].item()
```

```
("207: 'golden retriever',", 41.10982131958008)
```

The model predicts that the image shows a golden retriever, with a confidence of 41.11%.

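Note:

First line: softmax (the normalized exponential function) is applied along `dim=1`, that is, to each row of `out`, so the values in each row become probabilities that sum to 1. Taking row 0 and multiplying every element by 100 gives, for the dog image in this example, the probability (confidence) of each class as a percentage.

Second line: outputs the class name and its confidence.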

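`classes[index[0]]` is the name of the class with the highest confidence. Here `index[0]` is the index of the maximum in the first row, i.e. for the first image; `index[1]` would be the one for the second image, `index[2]` for the third, and so on. This lookup only works because the order of the `classes` list matches the class indices.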

In `percentage[index[0]].item()`, `index[0]` means the same as above: `percentage[index[0]]` is the highest-confidence entry, and `.item()` extracts its value as a Python number.
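A plain-Python sketch of the softmax applied to one row of `out`, using three made-up scores. Subtracting the row maximum before exponentiating is a common trick to avoid overflow and does not change the result. The sketch also checks that softmax keeps the highest score in first place, which is why the index found with `torch.max` on the raw scores also points at the highest percentage:

```python
import math


def softmax(row):
    """Softmax of one row of scores: exp(x_i) / sum_j exp(x_j).

    Subtracting the row maximum first avoids overflow and leaves the result unchanged.
    """
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.1]                       # made-up scores (logits) for 3 classes
percentage = [p * 100 for p in softmax(scores)]
print([round(p, 2) for p in percentage])       # [65.9, 24.24, 9.86]

# Softmax preserves order, so the best class is the same before and after it.
top_by_score = max(range(len(scores)), key=scores.__getitem__)
top_by_percentage = max(range(len(percentage)), key=percentage.__getitem__)
print(top_by_score, top_by_percentage)         # 0 0
```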

Next, look at the confidence the model assigns to the other top-ranked classes.

```python
_, indices = torch.sort(out, descending=True)
[(classes[idx], percentage[idx].item()) for idx in indices[0][:5]]
```

```
[("207: 'golden retriever',", 41.10982131958008),
 ("151: 'Chihuahua',", 20.436004638671875),
 ("154: 'Pekinese, Pekingese, Peke',", 8.29426097869873),
 ("852: 'tennis ball',", 7.233486175537109),
 ("259: 'Pomeranian',", 5.713674068450928)]
```

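Note:

`torch.sort` sorts `out`; for a 2-D tensor it sorts each row by default (along the last dimension), and `descending=True` puts the largest values first. Sorting the raw scores gives the same order as sorting the percentages, because softmax does not change the order of the values within a row.

In `[(classes[idx], percentage[idx].item()) for idx in indices[0][:5]]`, `indices[0][:5]` takes the first 5 entries of the first row of `indices`, i.e. the indices of the 5 classes with the highest confidence, and the list comprehension pairs each class name with its confidence.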
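When only the top few classes are needed, `torch.topk` returns the k largest values and their indices directly (in descending order by default). A sketch on a made-up 1×6 score tensor standing in for `out`, comparing it with the sort-and-slice approach above:

```python
import torch

# Made-up scores for one image and 6 classes, shaped (batch=1, classes=6) like `out`.
out = torch.tensor([[0.5, 3.0, -1.0, 2.0, 0.0, 1.5]])
percentage = torch.nn.functional.softmax(out, dim=1)[0] * 100

# The 3 largest scores in row 0 and their class indices.
top_values, top_indices = torch.topk(out, k=3, dim=1)
print(top_indices)  # tensor([[1, 3, 5]])

# Same indices as sorting the whole row in descending order and slicing.
_, sorted_indices = torch.sort(out, descending=True)
print(torch.equal(top_indices, sorted_indices[:, :3]))  # True

for idx in top_indices[0]:
    print(idx.item(), round(percentage[idx].item(), 2))
```

With the real `out`, `classes` and `percentage`, `[(classes[idx], percentage[idx].item()) for idx in torch.topk(out, 5).indices[0]]` should produce the same list as the `torch.sort` version.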

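References: 使用PyTorch中的预训练模型进行图像分类 (Image classification with pretrained models in PyTorch), u013679159's blog, CSDN

PyTorch预训练模型图像分类之一 (Image classification with PyTorch pretrained models, part 1), zhangzhifu2019's blog, CSDN (covers loading a .pth file earlier in the post)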