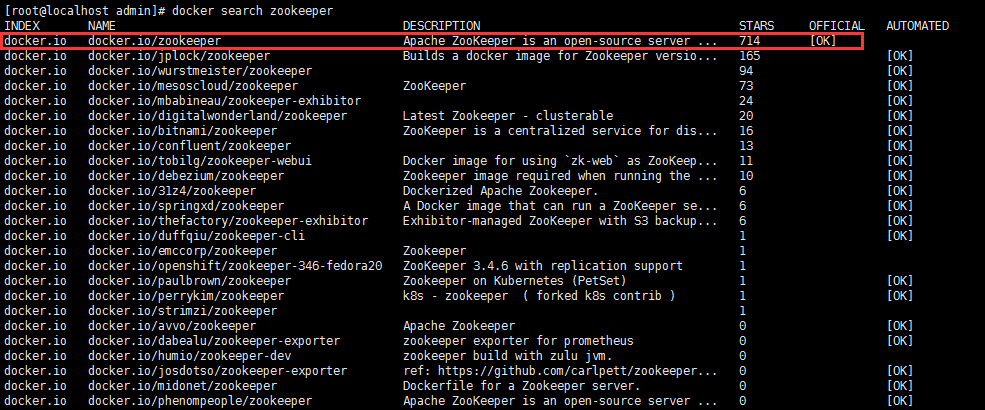

With Docker running, let's first see which images are available.

There is an official image, so that is the natural choice.

Pull it:

[root@localhost admin]# docker pull zookeeper

Using default tag: latest

Trying to pull repository docker.io/library/zookeeper ...

latest: Pulling from docker.io/library/zookeeper

1ab2bdfe9778: Already exists

7aaf9a088d61: Pull complete

80a55c9c9fe8: Pull complete

a0086b0e6eec: Pull complete

4165e7457cad: Pull complete

bcba13bcf3a1: Pull complete

41c03a109e47: Pull complete

4d5281c6b0d4: Pull complete

Digest: sha256:175d6bb1471e1e37a48bfa41a9da047c80fade60fd585eae3a0e08a4ce1d39ed

Status: Downloaded newer image for docker.io/zookeeper:latest

Inspect the image details:

[root@localhost admin]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.192.128:443/hello-2 latest 0c24558dd388 42 hours ago 660 MB

192.168.192.128:443/hello latest a3ba3d430bed 42 hours ago 660 MB

docker.io/nginx latest 5a3221f0137b 13 days ago 126 MB

docker.io/zookeeper latest 3487af26dee9 13 days ago 225 MB

docker.io/registry latest f32a97de94e1 5 months ago 25.8 MB

docker.io/mongo latest 8bf72137439e 12 months ago 380 MB

docker.io/influxdb latest 34de2bdc2d7f 12 months ago 213 MB

docker.io/centos latest 5182e96772bf 12 months ago 200 MB

docker.io/grafana/grafana latest 3e16e05be9a3 13 months ago 245 MB

docker.io/hello-world latest 2cb0d9787c4d 13 months ago 1.85 kB

docker.io/java latest d23bdf5b1b1b 2 years ago 643 MB

[root@localhost admin]# docker inspect 3487af26dee9

[

{

"Id": "sha256:3487af26dee9ef9eacee9a97521bc4f0243bef0b285247258c32f4a03cab92c5",

"RepoTags": [

"docker.io/zookeeper:latest"

],

"RepoDigests": [

"docker.io/zookeeper@sha256:175d6bb1471e1e37a48bfa41a9da047c80fade60fd585eae3a0e08a4ce1d39ed"

],

"Parent": "",

"Comment": "",

"Created": "2019-08-15T06:10:50.178554969Z",

"Container": "9a38467115f1952161d6075135d5c5287967282b834cfe68183339c810f9652b",

"ContainerConfig": {

"Hostname": "9a38467115f1",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"2181/tcp": {},

"2888/tcp": {},

"3888/tcp": {},

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/openjdk-8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/apache-zookeeper-3.5.5-bin/bin",

"LANG=C.UTF-8",

"JAVA_HOME=/usr/local/openjdk-8",

"JAVA_VERSION=8u222",

"JAVA_BASE_URL=https://github.com/AdoptOpenJDK/openjdk8-upstream-binaries/releases/download/jdk8u222-b10/OpenJDK8U-jre_",

"JAVA_URL_VERSION=8u222b10",

"ZOO_CONF_DIR=/conf",

"ZOO_DATA_DIR=/data",

"ZOO_DATA_LOG_DIR=/datalog",

"ZOO_LOG_DIR=/logs",

"ZOO_TICK_TIME=2000",

"ZOO_INIT_LIMIT=5",

"ZOO_SYNC_LIMIT=2",

"ZOO_AUTOPURGE_PURGEINTERVAL=0",

"ZOO_AUTOPURGE_SNAPRETAINCOUNT=3",

"ZOO_MAX_CLIENT_CNXNS=60",

"ZOO_STANDALONE_ENABLED=true",

"ZOO_ADMINSERVER_ENABLED=true",

"ZOOCFGDIR=/conf"

],

"Cmd": [

"/bin/sh",

"-c",

"#(nop) ",

"CMD ["zkServer.sh" "start-foreground"]"

],

"ArgsEscaped": true,

"Image": "sha256:20bf3cc1bd5b5766b79da5265e94007d0802ce241df1636d0f63e211a79a0e3e",

"Volumes": {

"/data": {},

"/datalog": {},

"/logs": {}

},

"WorkingDir": "/apache-zookeeper-3.5.5-bin",

"Entrypoint": [

"/docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": {}

},

"DockerVersion": "18.06.1-ce",

"Author": "",

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"2181/tcp": {},

"2888/tcp": {},

"3888/tcp": {},

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/openjdk-8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/apache-zookeeper-3.5.5-bin/bin",

"LANG=C.UTF-8",

"JAVA_HOME=/usr/local/openjdk-8",

"JAVA_VERSION=8u222",

"JAVA_BASE_URL=https://github.com/AdoptOpenJDK/openjdk8-upstream-binaries/releases/download/jdk8u222-b10/OpenJDK8U-jre_",

"JAVA_URL_VERSION=8u222b10",

"ZOO_CONF_DIR=/conf",

"ZOO_DATA_DIR=/data",

"ZOO_DATA_LOG_DIR=/datalog",

"ZOO_LOG_DIR=/logs",

"ZOO_TICK_TIME=2000",

"ZOO_INIT_LIMIT=5",

"ZOO_SYNC_LIMIT=2",

"ZOO_AUTOPURGE_PURGEINTERVAL=0",

"ZOO_AUTOPURGE_SNAPRETAINCOUNT=3",

"ZOO_MAX_CLIENT_CNXNS=60",

"ZOO_STANDALONE_ENABLED=true",

"ZOO_ADMINSERVER_ENABLED=true",

"ZOOCFGDIR=/conf"

],

"Cmd": [

"zkServer.sh",

"start-foreground"

],

"ArgsEscaped": true,

"Image": "sha256:20bf3cc1bd5b5766b79da5265e94007d0802ce241df1636d0f63e211a79a0e3e",

"Volumes": {

"/data": {},

"/datalog": {},

"/logs": {}

},

"WorkingDir": "/apache-zookeeper-3.5.5-bin",

"Entrypoint": [

"/docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": null

},

"Architecture": "amd64",

"Os": "linux",

"Size": 225126346,

"VirtualSize": 225126346,

"GraphDriver": {

"Name": "overlay2",

"Data": {

"LowerDir": "/var/lib/docker/overlay2/92185ebf7638a7b34180cfb87795dd758405cbad4fd0139b92a227d1a4b61847/diff:/var/lib/docker/overlay2/8787e91f5c03a7c03cee072019eca49a0402a0a0902be39ed0b5d651a79cce35/diff:/var/lib/docker/overlay2/ce5864ddfa4d1478047aa9fcaa03744e8a4078ebe43b41e7836c96c54c724044/diff:/var/lib/docker/overlay2/fc99437bcfbabb9e8234c06c90d1c60e58c34ac053aff1adc368b7ad3a50c158/diff:/var/lib/docker/overlay2/1779297a8980830229bd4bf58bd741730956d6797332fd07b863a1b48dcb6fa2/diff:/var/lib/docker/overlay2/ee735aa3608d890ac4751dd93581a67cb54a5dd4714081e9d09d0ebd9dbc3501/diff:/var/lib/docker/overlay2/cf6b3cbc42f3c8d1fb09b29db0dafbb4dceb120925970ab8a3871eaa8562414c/diff",

"MergedDir": "/var/lib/docker/overlay2/a7fcc1b78c472cde943f20d1d4495f145308507b5fe3da8800c33dc4ce426156/merged",

"UpperDir": "/var/lib/docker/overlay2/a7fcc1b78c472cde943f20d1d4495f145308507b5fe3da8800c33dc4ce426156/diff",

"WorkDir": "/var/lib/docker/overlay2/a7fcc1b78c472cde943f20d1d4495f145308507b5fe3da8800c33dc4ce426156/work"

}

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:1c95c77433e8d7bf0f519c9d8c9ca967e2603f0defbf379130d9a841cca2e28e",

"sha256:2bf534399acac9c6b09a0b1d931223808000b04400a749f08187ed9ee435738d",

"sha256:eb25e0278d41b9ac637d8cb2e391457cf44ce8d2bfe0646d0c9faefc96413f91",

"sha256:e54bd3566d9ef3e1309a5af6caf8682f32c6ac4d6adfcbd3e601cfee4e2e0e85",

"sha256:c79435051d529a7b86f5f9fc32e7e2ec401929434e5596f02a2af731f55c9f28",

"sha256:76e0d7b2d700e6d17924b985703c7b5b84fb39ddcc0a1181b41217c2a11dffc4",

"sha256:eecdc37df6afd77091641588f9639f63b65e8eb141e56529e00da44419c5bd04",

"sha256:36e788f2d91a89375df5901f31cca33776f887c00ddfd3cf9f2466fa4cb794d6"

]

}

}

]

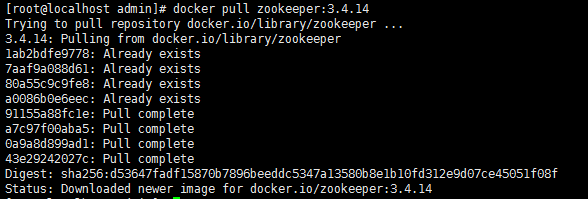

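By default the latest image is pulled, which was a 3.5.x release at the time of writing. If you need a 3.4.x release, you have to specify the tag explicitly.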
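Pulling a specific release line might look like the sketch below. The tag `3.4` is an assumption on my part, so check the tag list on Docker Hub for what is actually published. The script only prints the command so that you can review it before running it.

```shell
# Assumption: "3.4" is a published tag of the official zookeeper image.
ZK_TAG="3.4"
ZK_IMAGE="zookeeper:${ZK_TAG}"

# Print the pull command instead of running it, so nothing happens by accident.
echo "docker pull ${ZK_IMAGE}"
```

Using `name:tag` instead of the image ID in later `docker run` commands also keeps them readable.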

Standalone

The last argument of docker run is the image ID:

[root@localhost admin]# docker run -d -p 2181:2181 --name some-zookeeper --restart always 3487af26dee9

d5c6f857cd88c342acf63dd58e838a4cdf912daa6c8c0115091147136e819307

[root@localhost admin]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d5c6f857cd88 3487af26dee9 "/docker-entrypoin..." 4 seconds ago Up 3 seconds 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, 8080/tcp some-zookeeper

[root@localhost admin]# docker exec -it d5c6f857cd88 bash

root@d5c6f857cd88:/apache-zookeeper-3.5.5-bin# ./bin/zkCli.sh

Connecting to localhost:2181

2019-08-29 07:15:21,623 [myid:] - INFO [main:Environment@109] - Client environment:zookeeper.version=3.5.5-390fe37ea45dee01bf87dc1c042b5e3dcce88653, built on 05/03/2019 12:07 GMT

2019-08-29 07:15:21,679 [myid:] - INFO [main:Environment@109] - Client environment:host.name=d5c6f857cd88

2019-08-29 07:15:21,680 [myid:] - INFO [main:Environment@109] - Client environment:java.version=1.8.0_222

2019-08-29 07:15:21,717 [myid:] - INFO [main:Environment@109] - Client environment:java.vendor=Oracle Corporation

2019-08-29 07:15:21,718 [myid:] - INFO [main:Environment@109] - Client environment:java.home=/usr/local/openjdk-8

2019-08-29 07:15:21,725 [myid:] - INFO [main:Environment@109] - Client environment:java.class.path=/apache-zookeeper-3.5.5-bin/bin/../zookeeper-server/target/classes:/apache-zookeeper-3.5.5-bin/bin/../build/classes:/apache-zookeeper-3.5.5-bin/bin/../zookeeper-server/target/lib/*.jar:/apache-zookeeper-3.5.5-bin/bin/../build/lib/*.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/zookeeper-jute-3.5.5.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/zookeeper-3.5.5.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/slf4j-log4j12-1.7.25.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/slf4j-api-1.7.25.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/netty-all-4.1.29.Final.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/log4j-1.2.17.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/json-simple-1.1.1.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jline-2.11.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jetty-util-9.4.17.v20190418.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jetty-servlet-9.4.17.v20190418.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jetty-server-9.4.17.v20190418.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jetty-security-9.4.17.v20190418.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jetty-io-9.4.17.v20190418.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jetty-http-9.4.17.v20190418.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/javax.servlet-api-3.1.0.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jackson-databind-2.9.8.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jackson-core-2.9.8.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/jackson-annotations-2.9.0.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/commons-cli-1.2.jar:/apache-zookeeper-3.5.5-bin/bin/../lib/audience-annotations-0.5.0.jar:/apache-zookeeper-3.5.5-bin/bin/../zookeeper-*.jar:/apache-zookeeper-3.5.5-bin/bin/../zookeeper-server/src/main/resources/lib/*.jar:/conf:

2019-08-29 07:15:22,108 [myid:] - INFO [main:Environment@109] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2019-08-29 07:15:22,109 [myid:] - INFO [main:Environment@109] - Client environment:java.io.tmpdir=/tmp

2019-08-29 07:15:22,109 [myid:] - INFO [main:Environment@109] - Client environment:java.compiler=<NA>

2019-08-29 07:15:22,109 [myid:] - INFO [main:Environment@109] - Client environment:os.name=Linux

2019-08-29 07:15:22,109 [myid:] - INFO [main:Environment@109] - Client environment:os.arch=amd64

2019-08-29 07:15:22,110 [myid:] - INFO [main:Environment@109] - Client environment:os.version=3.10.0-862.9.1.el7.x86_64

2019-08-29 07:15:22,110 [myid:] - INFO [main:Environment@109] - Client environment:user.name=root

2019-08-29 07:15:22,110 [myid:] - INFO [main:Environment@109] - Client environment:user.home=/root

2019-08-29 07:15:22,110 [myid:] - INFO [main:Environment@109] - Client environment:user.dir=/apache-zookeeper-3.5.5-bin

2019-08-29 07:15:22,118 [myid:] - INFO [main:Environment@109] - Client environment:os.memory.free=11MB

2019-08-29 07:15:22,148 [myid:] - INFO [main:Environment@109] - Client environment:os.memory.max=247MB

2019-08-29 07:15:22,148 [myid:] - INFO [main:Environment@109] - Client environment:os.memory.total=15MB

2019-08-29 07:15:22,206 [myid:] - INFO [main:ZooKeeper@868] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@3b95a09c

2019-08-29 07:15:22,239 [myid:] - INFO [main:X509Util@79] - Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation

2019-08-29 07:15:22,285 [myid:] - INFO [main:ClientCnxnSocket@237] - jute.maxbuffer value is 4194304 Bytes

2019-08-29 07:15:22,366 [myid:] - INFO [main:ClientCnxn@1653] - zookeeper.request.timeout value is 0. feature enabled=

Welcome to ZooKeeper!

JLine support is enabled

2019-08-29 07:15:22,563 [myid:localhost:2181] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1112] - Opening socket connection to server localhost/0:0:0:0:0:0:0:1:2181. Will not attempt to authenticate using SASL (unknown error)

2019-08-29 07:15:23,443 [myid:localhost:2181] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@959] - Socket connection established, initiating session, client: /0:0:0:0:0:0:0:1:37198, server: localhost/0:0:0:0:0:0:0:1:2181

2019-08-29 07:15:23,520 [myid:localhost:2181] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1394] - Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, sessionid = 0x10001216d990000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 1] quit

WATCHER::

WatchedEvent state:Closed type:None path:null

2019-08-29 07:15:37,042 [myid:] - INFO [main:ZooKeeper@1422] - Session: 0x10001216d990000 closed

2019-08-29 07:15:37,043 [myid:] - INFO [main-EventThread:ClientCnxn$EventThread@524] - EventThread shut down for session: 0x10001216d990000

root@d5c6f857cd88:/apache-zookeeper-3.5.5-bin# exit

exit

[root@localhost admin]#

Access it from outside the host (192.168.192.128:2181):

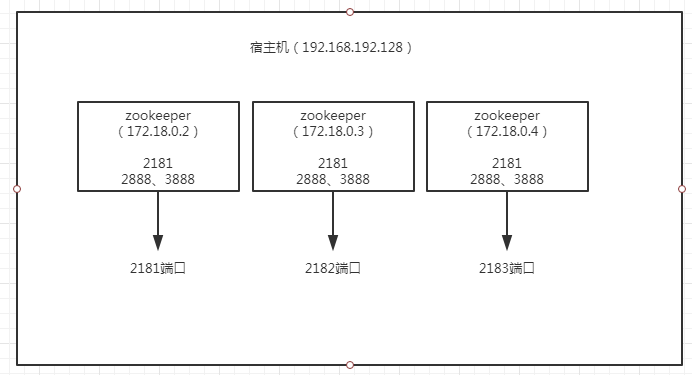

Cluster

Environment: a single host (192.168.192.128) running three ZooKeeper containers.

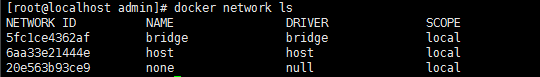

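This raises an important question: how do Docker containers communicate with each other?

On a single host, Docker has three basic network modes: bridge, host, and none. If you do not pass --network when creating a container, bridge is the default.

bridge: each container gets its own IP address and is attached to the docker0 virtual bridge, through which it communicates with the host. In other words, in this mode you cannot count on host IP + mapped port for communication between containers.

host: the container does not get a virtual network interface or an IP of its own; it uses the host's IP and ports directly. Containers can then reach each other through the host IP and the port each service listens on.

none: no networking.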

=====================================================

First create the directories on the host:

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster/node1

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster/node2

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster/node3

[root@localhost admin]# ll /usr/local/zookeeper-cluster/

total 0

drwxr-xr-x. 2 root root 6 Aug 28 23:02 node1

drwxr-xr-x. 2 root root 6 Aug 28 23:02 node2

drwxr-xr-x. 2 root root 6 Aug 28 23:02 node3

Then start the containers:

docker run -d -p 2181:2181 -p 2888:2888 -p 3888:3888 --name zookeeper_node1 --privileged --restart always -v /usr/local/zookeeper-cluster/node1/volumes/data:/data -v /usr/local/zookeeper-cluster/node1/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node1/volumes/logs:/logs -e ZOO_MY_ID=1 -e "ZOO_SERVERS=server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183" 3487af26dee9

docker run -d -p 2182:2181 -p 2889:2888 -p 3889:3888 --name zookeeper_node2 --privileged --restart always -v /usr/local/zookeeper-cluster/node2/volumes/data:/data -v /usr/local/zookeeper-cluster/node2/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node2/volumes/logs:/logs -e ZOO_MY_ID=2 -e "ZOO_SERVERS=server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183" 3487af26dee9

docker run -d -p 2183:2181 -p 2890:2888 -p 3890:3888 --name zookeeper_node3 --privileged --restart always -v /usr/local/zookeeper-cluster/node3/volumes/data:/data -v /usr/local/zookeeper-cluster/node3/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node3/volumes/logs:/logs -e ZOO_MY_ID=3 -e "ZOO_SERVERS=server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183" 3487af26dee9

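[Pitfall]

At first glance this looks fine: the ports are mapped to the host, and the three ZooKeeper nodes address each other as host IP:mapped port. What could go wrong?

The network modes described earlier hint at the problem: the IPs in ZOO_SERVERS are wrong. This mistake usually comes from not understanding Docker's network modes. Read on to see what goes wrong.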

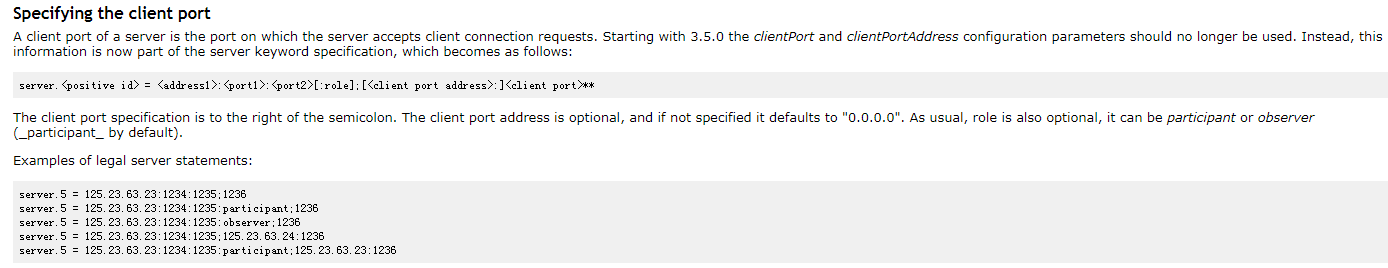

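About ZOO_SERVERS

What does this mean? Starting with 3.5.0, the clientPort and clientPortAddress configuration parameters should no longer be used. Instead, this information is now part of the server keyword specification.

The three containers map different host ports (2181/2182/2183) because they share one host and the ports must not conflict. If the nodes run on different machines, there is no need to change the ports.

The last argument is the image ID; an image name:TAG works as well.

The --privileged=true flag works around the error [chown: changing ownership of '/data': Permission denied]; the =true part can be omitted.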
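To make the format concrete, here is a minimal sketch that breaks a ZOO_SERVERS value into one entry per node, each of the form `server.<id>=<host>:<quorum port>:<election port>;<client port>`. The function name `print_server_lines` is made up for illustration. As far as I know the official image's entrypoint writes the entries into zoo.cfg in a similar way, but treat that as an assumption.

```shell
# Hypothetical helper: split a ZOO_SERVERS value into one line per server entry.
print_server_lines() {
  # Entries are separated by spaces; the unquoted $1 relies on word splitting.
  for entry in $1; do
    printf '%s\n' "$entry"
  done
}

ZOO_SERVERS="server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183"
print_server_lines "$ZOO_SERVERS"
```

The part after the semicolon is the client port that replaces the old clientPort setting.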

Result:

[root@localhost admin]# docker run -d -p 2181:2181 -p 2888:2888 -p 3888:3888 --name zookeeper_node1 --privileged --restart always -v /usr/local/zookeeper-cluster/node1/volumes/data:/data -v /usr/local/zookeeper-cluster/node1/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node1/volumes/logs:/logs -e ZOO_MY_ID=1 -e "ZOO_SERVERS=server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183" 3487af26dee9

4bfa6bbeb936037e178a577e5efbd06d4a963e91d67274413b933fd189917776

[root@localhost admin]# docker run -d -p 2182:2181 -p 2889:2888 -p 3889:3888 --name zookeeper_node2 --privileged --restart always -v /usr/local/zookeeper-cluster/node2/volumes/data:/data -v /usr/local/zookeeper-cluster/node2/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node2/volumes/logs:/logs -e ZOO_MY_ID=2 -e "ZOO_SERVERS=server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183" 3487af26dee9

dbb7f1f323a09869d043152a4995e73bad5f615fd81bf11143fd1c28180f9869

[root@localhost admin]# docker run -d -p 2183:2181 -p 2890:2888 -p 3890:3888 --name zookeeper_node3 --privileged --restart always -v /usr/local/zookeeper-cluster/node3/volumes/data:/data -v /usr/local/zookeeper-cluster/node3/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node3/volumes/logs:/logs -e ZOO_MY_ID=3 -e "ZOO_SERVERS=server.1=192.168.192.128:2888:3888;2181 server.2=192.168.192.128:2889:3889;2182 server.3=192.168.192.128:2890:3890;2183" 3487af26dee9

6dabae1d92f0e861cc7515c014c293f80075c2762b254fc56312a6d3b450a919

[root@localhost admin]#

List the running containers:

[root@localhost admin]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6dabae1d92f0 3487af26dee9 "/docker-entrypoin..." 31 seconds ago Up 29 seconds 8080/tcp, 0.0.0.0:2183->2181/tcp, 0.0.0.0:2890->2888/tcp, 0.0.0.0:3890->3888/tcp zookeeper_node3

dbb7f1f323a0 3487af26dee9 "/docker-entrypoin..." 36 seconds ago Up 35 seconds 8080/tcp, 0.0.0.0:2182->2181/tcp, 0.0.0.0:2889->2888/tcp, 0.0.0.0:3889->3888/tcp zookeeper_node2

4bfa6bbeb936 3487af26dee9 "/docker-entrypoin..." 46 seconds ago Up 45 seconds 0.0.0.0:2181->2181/tcp, 0.0.0.0:2888->2888/tcp, 0.0.0.0:3888->3888/tcp, 8080/tcp zookeeper_node1

[root@localhost admin]#

Wasn't there supposed to be an error? Why did the containers start successfully? Let's look at the startup log of node 1:

[root@localhost admin]# docker logs -f 4bfa6bbeb936

ZooKeeper JMX enabled by default

...

2019-08-29 09:20:22,665 [myid:1] - WARN [WorkerSender[myid=1]:QuorumCnxManager@677] - Cannot open channel to 2 at election address /192.168.192.128:3889

java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:648)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:705)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:618)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:477)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:456)

at java.lang.Thread.run(Thread.java:748)

2019-08-29 09:20:22,666 [myid:1] - WARN [WorkerSender[myid=1]:QuorumCnxManager@677] - Cannot open channel to 3 at election address /192.168.192.128:3890

java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:648)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:705)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:618)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:477)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:456)

at java.lang.Thread.run(Thread.java:748)

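Node 1 cannot connect to nodes 2 and 3. Why? Because under Docker's default network mode the peers cannot be found through host IP + mapped port. Each container has its own IP, as shown below: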

[root@localhost admin]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6dabae1d92f0 3487af26dee9 "/docker-entrypoin..." 5 minutes ago Up 5 minutes 8080/tcp, 0.0.0.0:2183->2181/tcp, 0.0.0.0:2890->2888/tcp, 0.0.0.0:3890->3888/tcp zookeeper_node3

dbb7f1f323a0 3487af26dee9 "/docker-entrypoin..." 6 minutes ago Up 6 minutes 8080/tcp, 0.0.0.0:2182->2181/tcp, 0.0.0.0:2889->2888/tcp, 0.0.0.0:3889->3888/tcp zookeeper_node2

4bfa6bbeb936 3487af26dee9 "/docker-entrypoin..." 6 minutes ago Up 6 minutes 0.0.0.0:2181->2181/tcp, 0.0.0.0:2888->2888/tcp, 0.0.0.0:3888->3888/tcp, 8080/tcp zookeeper_node1

[root@localhost admin]# docker inspect 4bfa6bbeb936

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "5fc1ce4362afe3d34fdf260ab0174c36fe4b7daf2189702eae48101a755079f3",

"EndpointID": "368237e4c903cc663111f1fe33ac4626a9100fb5a22aec85f5eccbc6968a1631",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02"

}

}

}

}

]

[root@localhost admin]# docker inspect dbb7f1f323a0

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "5fc1ce4362afe3d34fdf260ab0174c36fe4b7daf2189702eae48101a755079f3",

"EndpointID": "8a9734044a566d5ddcd7cbbf6661abb2730742f7c73bd8733ede9ed8ef106659",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:03"

}

}

}

}

]

[root@localhost admin]# docker inspect 6dabae1d92f0

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "5fc1ce4362afe3d34fdf260ab0174c36fe4b7daf2189702eae48101a755079f3",

"EndpointID": "b10329b9940a07aacb016d8d136511ec388de02bf3bd0e0b50f7f4cbb7f138ec",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.4",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:04"

}

}

}

}

]

node1---172.17.0.2

node2---172.17.0.3

node3---172.17.0.4
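Instead of reading through the full inspect output, the same name-to-IP mapping can be produced with docker inspect's `-f` template option. This is a sketch: the function name `print_container_ips` is hypothetical, and it assumes the three containers above exist.

```shell
# Print "<name>---<ip>" for each container given as an argument.
# Uses docker inspect's Go-template output; the containers must exist.
print_container_ips() {
  for name in "$@"; do
    ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$name")
    echo "${name}---${ip}"
  done
}

# Usage (needs a running Docker daemon):
#   print_container_ips zookeeper_node1 zookeeper_node2 zookeeper_node3
```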

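Now that we know each container has its own IP, another problem appears: the IP is assigned dynamically, so we cannot know it before the container starts. One solution is to create our own bridge network and assign a fixed IP when creating each container.

[The correct approach]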

[root@localhost admin]# docker network create --driver bridge --subnet=172.18.0.0/16 --gateway=172.18.0.1 zoonet

8257c501652a214d27efdf5ef71ff38bfe222c3a2a7898be24b8df9db1fb3b13

[root@localhost admin]# docker network ls

NETWORK ID NAME DRIVER SCOPE

5fc1ce4362af bridge bridge local

6aa33e21444e host host local

20e563b93ce9 none null local

8257c501652a zoonet bridge local

[root@localhost admin]# docker network inspect 8257c501652a

[

{

"Name": "zoonet",

"Id": "8257c501652a214d27efdf5ef71ff38bfe222c3a2a7898be24b8df9db1fb3b13",

"Created": "2019-08-29T06:08:01.442601483-04:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

Then we modify the commands that create the ZooKeeper containers:

docker run -d -p 2181:2181 --name zookeeper_node1 --privileged --restart always --network zoonet --ip 172.18.0.2 -v /usr/local/zookeeper-cluster/node1/volumes/data:/data -v /usr/local/zookeeper-cluster/node1/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node1/volumes/logs:/logs -e ZOO_MY_ID=1 -e "ZOO_SERVERS=server.1=172.18.0.2:2888:3888;2181 server.2=172.18.0.3:2888:3888;2181 server.3=172.18.0.4:2888:3888;2181" 3487af26dee9

docker run -d -p 2182:2181 --name zookeeper_node2 --privileged --restart always --network zoonet --ip 172.18.0.3 -v /usr/local/zookeeper-cluster/node2/volumes/data:/data -v /usr/local/zookeeper-cluster/node2/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node2/volumes/logs:/logs -e ZOO_MY_ID=2 -e "ZOO_SERVERS=server.1=172.18.0.2:2888:3888;2181 server.2=172.18.0.3:2888:3888;2181 server.3=172.18.0.4:2888:3888;2181" 3487af26dee9

docker run -d -p 2183:2181 --name zookeeper_node3 --privileged --restart always --network zoonet --ip 172.18.0.4 -v /usr/local/zookeeper-cluster/node3/volumes/data:/data -v /usr/local/zookeeper-cluster/node3/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node3/volumes/logs:/logs -e ZOO_MY_ID=3 -e "ZOO_SERVERS=server.1=172.18.0.2:2888:3888;2181 server.2=172.18.0.3:2888:3888;2181 server.3=172.18.0.4:2888:3888;2181" 3487af26dee9

1. Ports 2888 and 3888 do not need to be exposed to the host, so they are no longer mapped;

2. Each container joins our custom network with a fixed IP;

3. Each container has its own isolated environment, so they all use the same ports internally: 2181/2888/3888.
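Because the ZOO_SERVERS value is identical for all three containers, it can be generated once rather than typed three times. The sketch below builds it from the fixed IPs; `build_zoo_servers` is a made-up helper name, and the ports are the in-container defaults used above.

```shell
# Hypothetical helper: build a ZOO_SERVERS value from a list of node IPs.
# Node ids are assigned 1, 2, 3, ... in argument order.
build_zoo_servers() {
  id=1
  out=""
  for ip in "$@"; do
    # ${out:+ } adds a separating space only after the first entry.
    out="${out}${out:+ }server.${id}=${ip}:2888:3888;2181"
    id=$((id + 1))
  done
  printf '%s\n' "$out"
}

ZOO_SERVERS=$(build_zoo_servers 172.18.0.2 172.18.0.3 172.18.0.4)
echo "$ZOO_SERVERS"
```

The result can then be passed to each container as `-e "ZOO_SERVERS=$ZOO_SERVERS"`.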

Result:

[root@localhost admin]# docker run -d -p 2181:2181 --name zookeeper_node1 --privileged --restart always --network zoonet --ip 172.18.0.2 -v /usr/local/zookeeper-cluster/node1/volumes/data:/data -v /usr/local/zookeeper-cluster/node1/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node1/volumes/logs:/logs -e ZOO_MY_ID=1 -e "ZOO_SERVERS=server.1=172.18.0.2:2888:3888;2181 server.2=172.18.0.3:2888:3888;2181 server.3=172.18.0.4:2888:3888;2181" 3487af26dee9

50c07cf11fab2d3b4da6d8ce48d8ed4a7beaab7d51dd542b8309f781e9920c36

[root@localhost admin]# docker run -d -p 2182:2181 --name zookeeper_node2 --privileged --restart always --network zoonet --ip 172.18.0.3 -v /usr/local/zookeeper-cluster/node2/volumes/data:/data -v /usr/local/zookeeper-cluster/node2/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node2/volumes/logs:/logs -e ZOO_MY_ID=2 -e "ZOO_SERVERS=server.1=172.18.0.2:2888:3888;2181 server.2=172.18.0.3:2888:3888;2181 server.3=172.18.0.4:2888:3888;2181" 3487af26dee9

649a4dbfb694504acfe4b8e11b990877964477bb41f8a230bd191cba7d20996f

[root@localhost admin]# docker run -d -p 2183:2181 --name zookeeper_node3 --privileged --restart always --network zoonet --ip 172.18.0.4 -v /usr/local/zookeeper-cluster/node3/volumes/data:/data -v /usr/local/zookeeper-cluster/node3/volumes/datalog:/datalog -v /usr/local/zookeeper-cluster/node3/volumes/logs:/logs -e ZOO_MY_ID=3 -e "ZOO_SERVERS=server.1=172.18.0.2:2888:3888;2181 server.2=172.18.0.3:2888:3888;2181 server.3=172.18.0.4:2888:3888;2181" 3487af26dee9

c8bc1b9ae9adf86e9c7f6a3264f883206c6d0e4f6093db3200de80ef39f57160

[root@localhost admin]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c8bc1b9ae9ad 3487af26dee9 "/docker-entrypoin..." 17 seconds ago Up 16 seconds 2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2183->2181/tcp zookeeper_node3

649a4dbfb694 3487af26dee9 "/docker-entrypoin..." 22 seconds ago Up 21 seconds 2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2182->2181/tcp zookeeper_node2

50c07cf11fab 3487af26dee9 "/docker-entrypoin..." 33 seconds ago Up 32 seconds 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, 8080/tcp zookeeper_node1

[root@localhost admin]#

Enter each container to verify:

[root@localhost admin]# docker exec -it 50c07cf11fab bash

root@50c07cf11fab:/apache-zookeeper-3.5.5-bin# ./bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

root@50c07cf11fab:/apache-zookeeper-3.5.5-bin# exit

exit

[root@localhost admin]# docker exec -it 649a4dbfb694 bash

root@649a4dbfb694:/apache-zookeeper-3.5.5-bin# ./bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: leader

root@649a4dbfb694:/apache-zookeeper-3.5.5-bin# exit

exit

[root@localhost admin]# docker exec -it c8bc1b9ae9ad bash

root@c8bc1b9ae9ad:/apache-zookeeper-3.5.5-bin# ./bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

root@c8bc1b9ae9ad:/apache-zookeeper-3.5.5-bin# exit

exit

[root@localhost admin]#
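The same check can be done without opening an interactive shell in each container. The following is a rough sketch: both function names are hypothetical, and it relies on zkServer.sh being on the image's PATH, which the PATH entry in the inspect output above suggests.

```shell
# Hypothetical helper: pull the "Mode: ..." value out of `zkServer.sh status` output.
extract_mode() {
  sed -n 's/^Mode: //p'
}

# Ask each container for its status and print "<name>: <mode>".
print_cluster_modes() {
  for name in "$@"; do
    mode=$(docker exec "$name" zkServer.sh status 2>/dev/null | extract_mode)
    echo "${name}: ${mode}"
  done
}

# Usage (needs the three containers to be running):
#   print_cluster_modes zookeeper_node1 zookeeper_node2 zookeeper_node3
```

A healthy three-node ensemble should report exactly one leader and two followers.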

Next, verify the cluster again by creating a node.

Open the firewall ports so the cluster can be reached from outside:

firewall-cmd --zone=public --add-port=2181/tcp --permanent

firewall-cmd --zone=public --add-port=2182/tcp --permanent

firewall-cmd --zone=public --add-port=2183/tcp --permanent

systemctl restart firewalld

firewall-cmd --list-all
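With more ports the repeated firewall-cmd calls are easier to generate in a loop. This sketch only prints the commands so they can be reviewed first; `firewall_commands` is a made-up name, and it uses `firewall-cmd --reload` rather than restarting the service, which is a substitution on my part.

```shell
# Print (not run) the firewall-cmd calls for a list of client ports.
firewall_commands() {
  for port in "$@"; do
    echo "firewall-cmd --zone=public --add-port=${port}/tcp --permanent"
  done
  # --reload applies the permanent rules without restarting firewalld.
  echo "firewall-cmd --reload"
}

firewall_commands 2181 2182 2183
```

Once the output looks right, it can be piped to `sh` as root.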

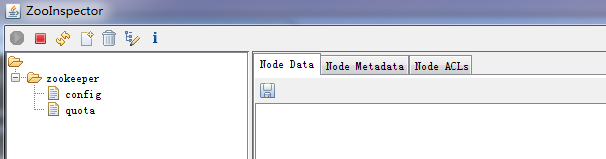

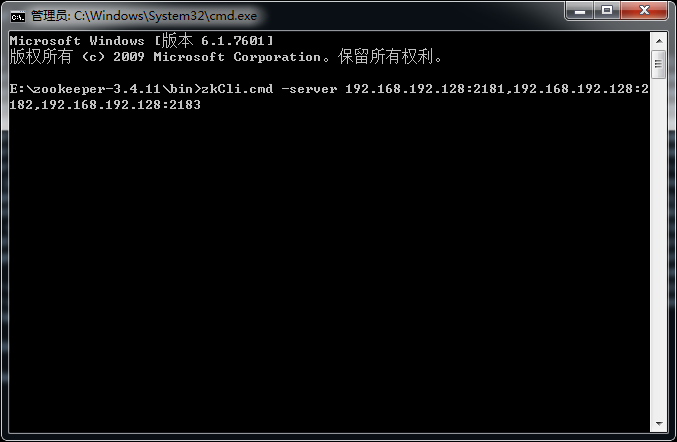

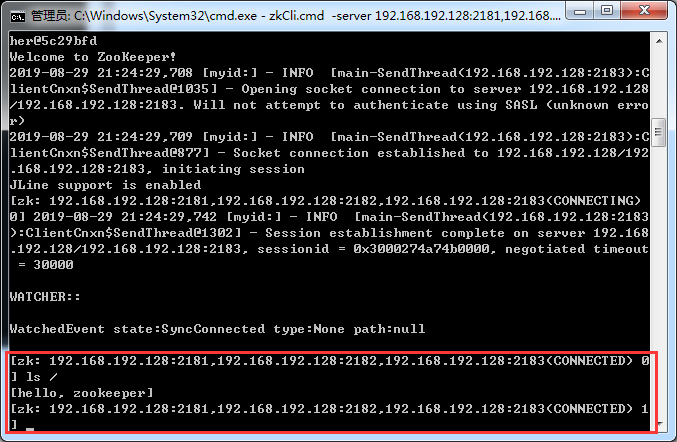

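From my local machine, I connect to the cluster on the virtual machine with a ZooKeeper client:

The connection succeeds!

Cluster installation, method 2: docker stack deploy or docker-compose

Here we use docker-compose. Install it first: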

[root@localhost admin]# curl -L "https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 617 0 617 0 0 145 0 --:--:-- 0:00:04 --:--:-- 145

100 15.4M 100 15.4M 0 0 131k 0 0:02:00 0:02:00 --:--:-- 136k

[root@localhost admin]# chmod +x /usr/local/bin/docker-compose

Check the version (to verify the installation):

[root@localhost admin]# docker-compose --version

docker-compose version 1.24.1, build 4667896b

To uninstall:

rm /usr/local/bin/docker-compose

Now the configuration. Create three new mount directories:

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster/node4

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster/node5

[root@localhost admin]# mkdir /usr/local/zookeeper-cluster/node6

Create a directory of your choice and a new file inside it:

[root@localhost admin]# mkdir DockerComposeFolder

[root@localhost admin]# cd DockerComposeFolder/

[root@localhost DockerComposeFolder]# vim docker-compose.yml

The file contents are as follows (the custom network zoonet was created above):

    version: '3.1'
    services:
      zoo1:
        image: zookeeper
        restart: always
        privileged: true
        hostname: zoo1
        ports:
          - 2181:2181
        volumes: # mount data
          - /usr/local/zookeeper-cluster/node4/data:/data
          - /usr/local/zookeeper-cluster/node4/datalog:/datalog
        environment:
          ZOO_MY_ID: 4
          ZOO_SERVERS: server.4=0.0.0.0:2888:3888;2181 server.5=zoo2:2888:3888;2181 server.6=zoo3:2888:3888;2181
        networks:
          default:
            ipv4_address: 172.18.0.14
      zoo2:
        image: zookeeper
        restart: always
        privileged: true
        hostname: zoo2
        ports:
          - 2182:2181
        volumes: # mount data
          - /usr/local/zookeeper-cluster/node5/data:/data
          - /usr/local/zookeeper-cluster/node5/datalog:/datalog
        environment:
          ZOO_MY_ID: 5
          ZOO_SERVERS: server.4=zoo1:2888:3888;2181 server.5=0.0.0.0:2888:3888;2181 server.6=zoo3:2888:3888;2181
        networks:
          default:
            ipv4_address: 172.18.0.15
      zoo3:
        image: zookeeper
        restart: always
        privileged: true
        hostname: zoo3
        ports:
          - 2183:2181
        volumes: # mount data
          - /usr/local/zookeeper-cluster/node6/data:/data
          - /usr/local/zookeeper-cluster/node6/datalog:/datalog
        environment:
          ZOO_MY_ID: 6
          ZOO_SERVERS: server.4=zoo1:2888:3888;2181 server.5=zoo2:2888:3888;2181 server.6=0.0.0.0:2888:3888;2181
        networks:
          default:
            ipv4_address: 172.18.0.16
    networks: # custom network
      default:
        external:
          name: zoonet

Note that a YAML file must not contain tabs for indentation, only spaces.
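A quick way to catch stray tabs before starting the stack is a grep for the tab character. This is a small sketch with a made-up function name; `docker-compose config`, listed in the help output further down, can additionally validate the file as a whole.

```shell
# Return success (exit code 0) if the file contains at least one tab character.
has_tabs() {
  grep -q "$(printf '\t')" "$1"
}

# Usage:
#   has_tabs docker-compose.yml && echo "tabs found, replace them with spaces"
```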

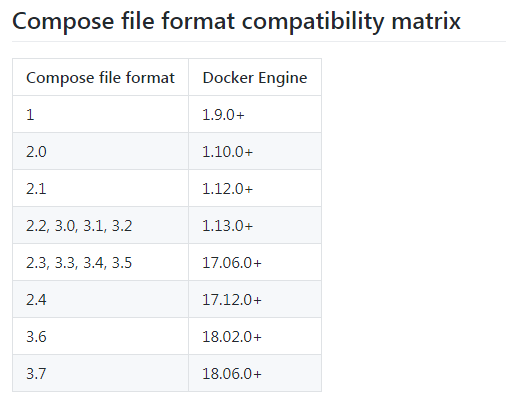

The relationship between the Compose file version and the Docker version is as follows:

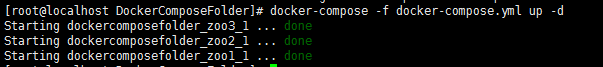

Then run the following (-d starts the containers in the background):

docker-compose -f docker-compose.yml up -d

List the started containers:

[root@localhost DockerComposeFolder]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a2c14814037d zookeeper "/docker-entrypoin..." 6 minutes ago Up About a minute 2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2183->2181/tcp dockercomposefolder_zoo3_1

50310229b216 zookeeper "/docker-entrypoin..." 6 minutes ago Up About a minute 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, 8080/tcp dockercomposefolder_zoo1_1

475d8a9e2d08 zookeeper "/docker-entrypoin..." 6 minutes ago Up About a minute 2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2182->2181/tcp dockercomposefolder_zoo2_1

Enter one container:

[root@localhost DockerComposeFolder]# docker exec -it a2c14814037d bash

root@zoo3:/apache-zookeeper-3.5.5-bin# ./bin/zkCli.sh

Connecting to localhost:2181

....

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0]

[zk: localhost:2181(CONNECTED) 1] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 2] create /hi

Created /hi

[zk: localhost:2181(CONNECTED) 3] ls /

[hi, zookeeper]

Enter another container:

[root@localhost DockerComposeFolder]# docker exec -it 50310229b216 bash

root@zoo1:/apache-zookeeper-3.5.5-bin# ./bin/zkCli.sh

Connecting to localhost:2181

...

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[hi, zookeeper]

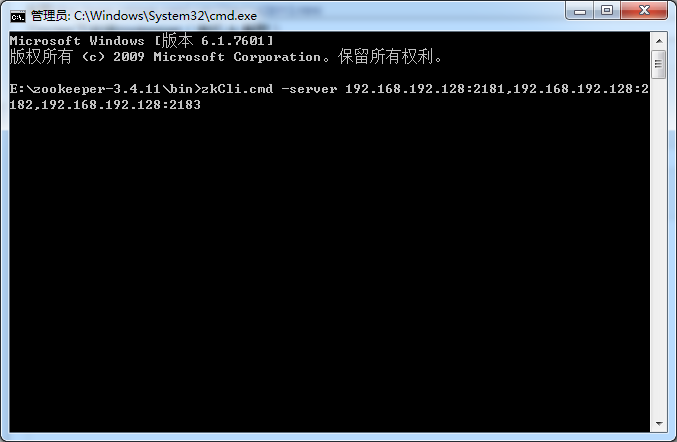

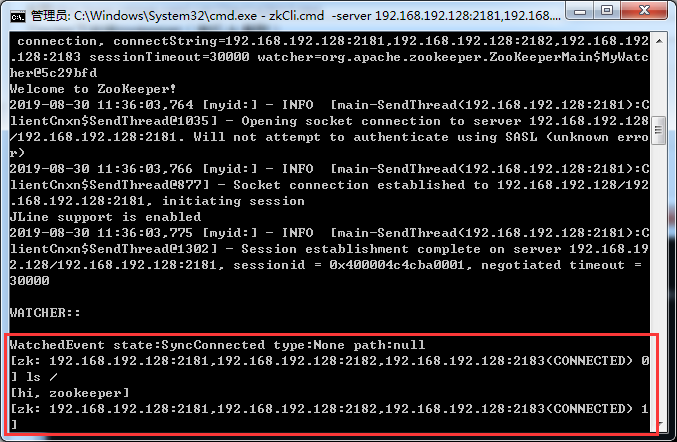

Connect to the cluster from a local client:

zkCli.cmd -server 192.168.192.128:2181,192.168.192.128:2182,192.168.192.128:2183

View the result:

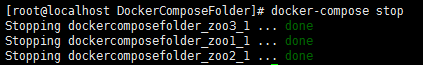

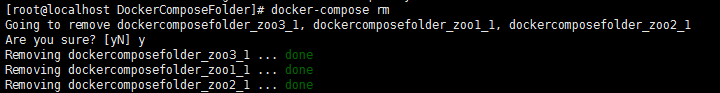

Stop all running containers

Remove all stopped containers
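The commands for these two cleanup steps are not shown here, so the following is only a sketch of one common way to do them, not necessarily what was originally used. The function names are hypothetical. Both act on every container on the host, not just the ZooKeeper ones, and `docker container prune` assumes a Docker release that includes that subcommand.

```shell
# Stop every running container (docker ps -q lists only running ones).
stop_all_containers() {
  ids=$(docker ps -q)
  # Skip the call when nothing is running; $ids is left unquoted on purpose
  # so that each container ID becomes its own argument.
  [ -z "$ids" ] || docker stop $ids
}

# Remove every stopped container; -f skips the confirmation prompt.
remove_stopped_containers() {
  docker container prune -f
}

# Usage:
#   stop_all_containers
#   remove_stopped_containers
```

For the Compose-managed cluster alone, `docker-compose stop` and `docker-compose rm` from the help output below are the narrower alternative.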

More docker-compose commands:

[root@localhost DockerComposeFolder]# docker-compose --help

Define and run multi-container applications with Docker.

Usage:

docker-compose [-f <arg>...] [options] [COMMAND] [ARGS...]

docker-compose -h|--help

Options:

-f, --file FILE Specify an alternate compose file

(default: docker-compose.yml)

-p, --project-name NAME Specify an alternate project name

(default: directory name)

--verbose Show more output

--log-level LEVEL Set log level (DEBUG, INFO, WARNING, ERROR, CRITICAL)

--no-ansi Do not print ANSI control characters

-v, --version Print version and exit

-H, --host HOST Daemon socket to connect to

--tls Use TLS; implied by --tlsverify

--tlscacert CA_PATH Trust certs signed only by this CA

--tlscert CLIENT_CERT_PATH Path to TLS certificate file

--tlskey TLS_KEY_PATH Path to TLS key file

--tlsverify Use TLS and verify the remote

--skip-hostname-check Don't check the daemon's hostname against the

name specified in the client certificate

--project-directory PATH Specify an alternate working directory

(default: the path of the Compose file)

--compatibility If set, Compose will attempt to convert keys

in v3 files to their non-Swarm equivalent

Commands:

build Build or rebuild services

bundle Generate a Docker bundle from the Compose file

config Validate and view the Compose file

create Create services

down Stop and remove containers, networks, images, and volumes

events Receive real time events from containers

exec Execute a command in a running container

help Get help on a command

images List images

kill Kill containers

logs View output from containers

pause Pause services

port Print the public port for a port binding

ps List containers

pull Pull service images

push Push service images

restart Restart services

rm Remove stopped containers

run Run a one-off command

scale Set number of containers for a service

start Start services

stop Stop services

top Display the running processes

unpause Unpause services

up Create and start containers

version Show the Docker-Compose version information