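1. Problem description:

Crawl the detailed information of all second-hand homes listed on Lianjia Shenzhen and store the crawled data in a CSV file.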

2. Approach:

(1) Target URL: https://sz.lianjia.com/ershoufang/

(2) Code structure:

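class LianjiaSpider(object):
    def __init__(self):
        pass

    def getMaxPage(self, url):  # get the maximum page number (maxPage)
        pass

    def parsePage(self, url):  # parse each listing page and collect the link of every house
        pass

    def parseDetail(self, url):  # follow a link and fetch the details of that house
        pass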

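(3) The __init__(self) initializer

· headers uses the fake_useragent library to generate a random User-Agent request header.

· datas is an empty list that collects the crawled records.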

def __init__(self):
    # pick a random User-Agent once per spider instance
    self.headers = {"User-Agent": UserAgent().random}
    # one dict per crawled listing
    self.datas = list()
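If fake_useragent is not installed or cannot load its data, the same idea can be approximated with a small hand-written pool. The sketch below is only an illustration: build_headers and FALLBACK_USER_AGENTS are hypothetical names, and the User-Agent strings are examples rather than values from the original spider.

```python
import random

# Hypothetical fallback pool; the strings are illustrative examples only.
FALLBACK_USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64; rv:63.0) Gecko/20100101 Firefox/63.0",
]


def build_headers():
    """Return request headers with a randomly chosen User-Agent."""
    try:
        # Prefer fake_useragent when it is available and working.
        from fake_useragent import UserAgent
        user_agent = UserAgent().random
    except Exception:
        # Otherwise fall back to the small static pool above.
        user_agent = random.choice(FALLBACK_USER_AGENTS)
    return {"User-Agent": user_agent}


headers = build_headers()
print(headers["User-Agent"])
```

The returned dict can be passed to requests.get(url, headers=headers) in the same way as self.headers.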

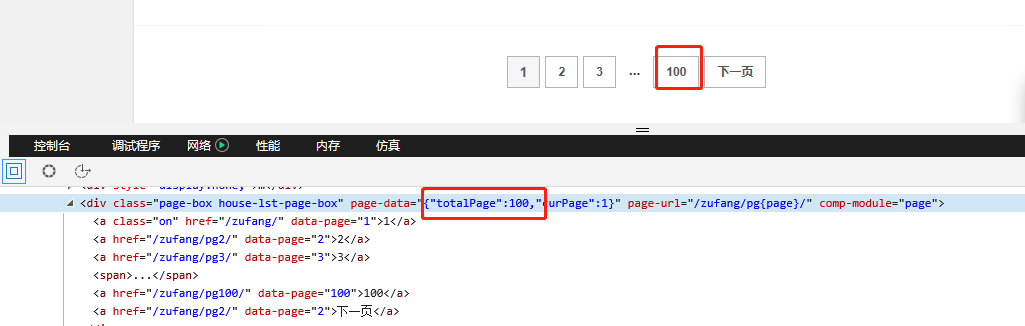

(4) The getMaxPage() function

It fetches the total number of pages in the second-hand listing index.

def getMaxPage(self, url):
    response = requests.get(url, headers=self.headers)
    if response.status_code == 200:
        source = response.text
        soup = BeautifulSoup(source, "html.parser")
        pageData = soup.find("div", class_="page-box house-lst-page-box")["page-data"]
        # pageData looks like '{"totalPage":100,"curPage":1}'; it is JSON text, so parse it
        # with json.loads() (needs "import json") rather than eval()
        maxPage = json.loads(pageData)["totalPage"]
        return maxPage
    else:
        print("Fail status: {}".format(response.status_code))
        return None
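The page-data attribute holds JSON text, so it can be decoded without executing it as Python code. A minimal sketch of that step in isolation, assuming the attribute has the shape shown in the comment above (parse_total_page is a hypothetical helper, not part of the spider):

```python
import json


def parse_total_page(page_data):
    """Extract totalPage from the JSON text stored in the page-data attribute."""
    info = json.loads(page_data)  # raises ValueError on malformed input
    return int(info["totalPage"])


sample = '{"totalPage":100,"curPage":1}'
print(parse_total_page(sample))  # 100
```

Unlike eval(), json.loads() only accepts JSON and never runs code embedded in the page, which matters when the input comes from a remote server.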

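(5) The parsePage() function

It handles pagination and collects the links of all second-hand homes on each page. A for loop rebuilds the URL for every page number, and the upper bound of the loop is the value returned by getMaxPage() above.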

def parsePage(self, url):
    maxPage = self.getMaxPage(url)
    if maxPage is None:  # the index page could not be fetched
        return
    # parse each page and collect the link of every second-hand home
    for pageNum in range(1, maxPage + 1):
        url = "https://sz.lianjia.com/ershoufang/pg{}/".format(pageNum)
        print("Crawling: {}".format(url))
        response = requests.get(url, headers=self.headers)
        soup = BeautifulSoup(response.text, "html.parser")
        links = soup.find_all("div", class_="info clear")
        for i in links:
            # each "info clear" div contains several <a> tags; only the first is needed, so use find()
            link = i.find("a")["href"]
            detail = self.parseDetail(link)
            if detail:  # skip listings whose detail page failed to load
                self.datas.append(detail)
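The URL rebuilding used for pagination can be looked at on its own. The sketch below follows the pg{n}/ pattern from the code above; build_page_urls is a hypothetical helper introduced only for illustration.

```python
def build_page_urls(max_page, base="https://sz.lianjia.com/ershoufang/"):
    """Return the listing-page URLs for pages 1..max_page."""
    return ["{}pg{}/".format(base, n) for n in range(1, max_page + 1)]


urls = build_page_urls(3)
for u in urls:
    print(u)
```

Building the list up front makes it easy to check the page range before any request is sent.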

(6) The parseDetail() function

It sends a request to each listing link collected by parsePage() and extracts the fields from the detail page.

def parseDetail(self, url):
    response = requests.get(url, headers=self.headers)
    detail = {}
    if response.status_code == 200:
        soup = BeautifulSoup(response.text, "html.parser")
        detail["价格"] = soup.find("span", class_="total").text  # total price
        detail["单价"] = soup.find("span", class_="unitPriceValue").text  # unit price
        detail["小区"] = soup.find("div", class_="communityName").find("a", class_="info").text  # community
        detail["位置"] = soup.find("div", class_="areaName").find("span", class_="info").text  # location
        detail["地铁"] = soup.find("div", class_="areaName").find("a", class_="supplement").text  # subway
        base = soup.find("div", class_="base").find_all("li")  # basic-information list
        # [4:] skips the first four characters of each item (the field label before the value)
        detail["户型"] = base[0].text[4:]  # layout
        detail["面积"] = base[2].text[4:]  # floor area
        detail["朝向"] = base[6].text[4:]  # orientation
        detail["电梯"] = base[10].text[4:]  # elevator
        return detail
    else:
        return None
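The fixed indexes and the [4:] slice assume every detail page lists the same items in the same order. A hedged sketch of a more defensive variant follows; base_field is a hypothetical helper, and the sample strings are made up to mimic a four-character label followed by a value.

```python
def base_field(items, index, label_len=4):
    """Return items[index] without its leading label, or None if the item is missing."""
    if index < 0 or index >= len(items):
        return None
    return items[index][label_len:].strip()


# Made-up sample texts, not real page content.
sample_items = ["房屋户型3室2厅1厨1卫", "所在楼层中楼层", "建筑面积89.5㎡"]

print(base_field(sample_items, 0))
print(base_field(sample_items, 2))
print(base_field(sample_items, 10))  # index out of range, so None
```

In the spider, the list would be built from the text of each li element, so a page with fewer items yields None for that field instead of raising IndexError.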

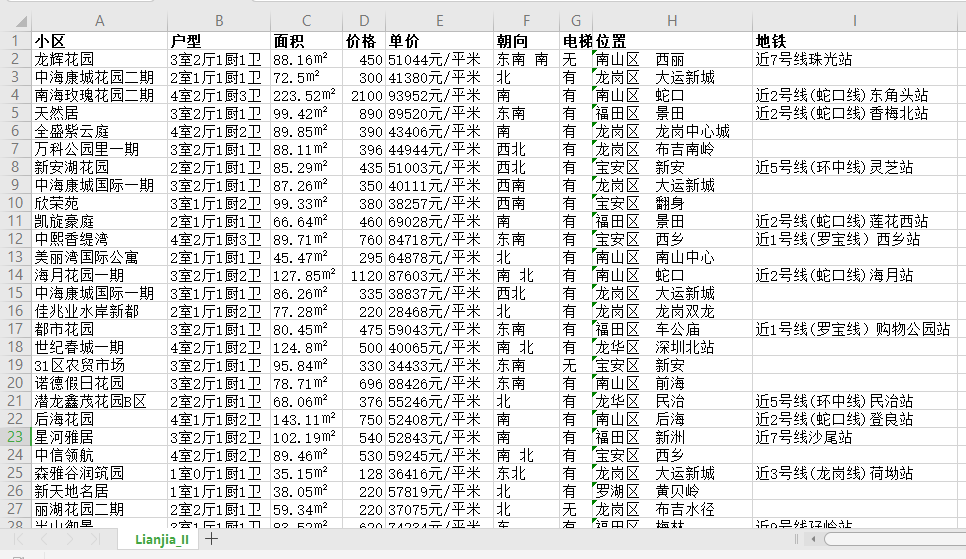

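(7) Storing the data in a CSV file

This step uses pandas' DataFrame(). In the pandas version used here, the columns are ordered alphabetically by column name by default; more recent pandas releases may keep the key order of the dicts instead. To set the column order yourself, pass the columns argument.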

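# store all crawled listings in a CSV file
data = pd.DataFrame(self.datas)
# columns: explicit column order (in the pandas version used here, DataFrame sorts columns by name by default)
columns = ["小区", "户型", "面积", "价格", "单价", "朝向", "电梯", "位置", "地铁"]
# utf_8_sig writes a byte order mark so that Excel detects the file as UTF-8
data.to_csv(".Lianjia_II.csv", encoding='utf_8_sig', index=False, columns=columns)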
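To see the effect of an explicit column order and of the utf_8_sig encoding without pandas, the same output can be sketched with the standard library's csv.DictWriter. This is a swapped-in technique for illustration, not the method used in this post; write_rows and the sample rows are hypothetical.

```python
import csv
import os
import tempfile


def write_rows(path, rows, columns):
    """Write dict rows to a CSV file with a fixed column order."""
    # utf-8-sig prepends a byte order mark, which helps Excel detect UTF-8
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=columns, extrasaction="ignore")
        writer.writeheader()
        for row in rows:
            if row:  # skip None entries left by failed detail pages
                writer.writerow(row)


# Made-up sample data; the None stands for a detail page that failed to load.
rows = [{"价格": "500", "小区": "示例小区"}, None]
columns = ["小区", "价格"]
out_path = os.path.join(tempfile.mkdtemp(), "sample.csv")
write_rows(out_path, rows, columns)
```

The header row follows the order of columns, not the key order inside each dict.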

3. Results

4. Full code:

# -*- coding: utf-8 -*-
# author: wangshx6
# date: 2018-11-07
# description: crawl the detailed information of all second-hand homes on Lianjia Shenzhen
#              and store the crawled data in a CSV file

import json

import requests
from bs4 import BeautifulSoup
import pandas as pd
from fake_useragent import UserAgent


class LianjiaSpider(object):

    def __init__(self):
        # pick a random User-Agent once per spider instance
        self.headers = {"User-Agent": UserAgent().random}
        # one dict per crawled listing
        self.datas = list()

    def getMaxPage(self, url):
        response = requests.get(url, headers=self.headers)
        if response.status_code == 200:
            source = response.text
            soup = BeautifulSoup(source, "html.parser")
            pageData = soup.find("div", class_="page-box house-lst-page-box")["page-data"]
            # pageData looks like '{"totalPage":100,"curPage":1}'; it is JSON text,
            # so parse it with json.loads() rather than eval()
            maxPage = json.loads(pageData)["totalPage"]
            return maxPage
        else:
            print("Fail status: {}".format(response.status_code))
            return None

    def parsePage(self, url):
        maxPage = self.getMaxPage(url)
        if maxPage is None:  # the index page could not be fetched
            return
        # parse each page and collect the link of every second-hand home
        for pageNum in range(1, maxPage + 1):
            url = "https://sz.lianjia.com/ershoufang/pg{}/".format(pageNum)
            print("Crawling: {}".format(url))
            response = requests.get(url, headers=self.headers)
            soup = BeautifulSoup(response.text, "html.parser")
            links = soup.find_all("div", class_="info clear")
            for i in links:
                # each "info clear" div contains several <a> tags; only the first is needed, so use find()
                link = i.find("a")["href"]
                detail = self.parseDetail(link)
                if detail:  # skip listings whose detail page failed to load
                    self.datas.append(detail)

        # store all crawled listings in a CSV file
        data = pd.DataFrame(self.datas)
        # columns: explicit column order (in the pandas version used here, DataFrame sorts columns by name by default)
        columns = ["小区", "户型", "面积", "价格", "单价", "朝向", "电梯", "位置", "地铁"]
        # utf_8_sig writes a byte order mark so that Excel detects the file as UTF-8
        data.to_csv(".Lianjia_II.csv", encoding='utf_8_sig', index=False, columns=columns)

    def parseDetail(self, url):
        response = requests.get(url, headers=self.headers)
        detail = {}
        if response.status_code == 200:
            soup = BeautifulSoup(response.text, "html.parser")
            detail["价格"] = soup.find("span", class_="total").text  # total price
            detail["单价"] = soup.find("span", class_="unitPriceValue").text  # unit price
            detail["小区"] = soup.find("div", class_="communityName").find("a", class_="info").text  # community
            detail["位置"] = soup.find("div", class_="areaName").find("span", class_="info").text  # location
            detail["地铁"] = soup.find("div", class_="areaName").find("a", class_="supplement").text  # subway
            base = soup.find("div", class_="base").find_all("li")  # basic-information list
            # [4:] skips the first four characters of each item (the field label before the value)
            detail["户型"] = base[0].text[4:]  # layout
            detail["面积"] = base[2].text[4:]  # floor area
            detail["朝向"] = base[6].text[4:]  # orientation
            detail["电梯"] = base[10].text[4:]  # elevator
            return detail
        else:
            return None


if __name__ == "__main__":
    Lianjia = LianjiaSpider()
    Lianjia.parsePage("https://sz.lianjia.com/ershoufang/")