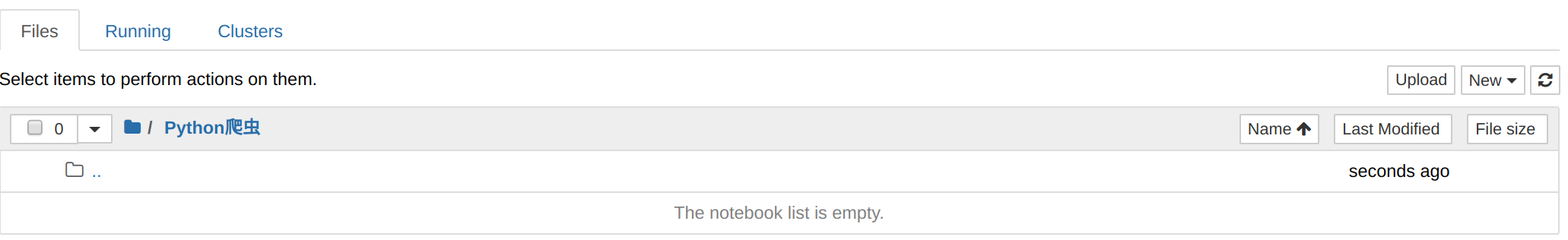

1 Basic Jupyter usage

1.1 Installation

Install Jupyter:

root@darren-virtual-machine:~# pip3 install Jupyter

Start the notebook server:

root@darren-virtual-machine:~# jupyter notebook --allow-root

[I 19:46:20.681 NotebookApp] Serving notebooks from local directory: /root
[I 19:46:20.681 NotebookApp] The Jupyter Notebook is running at:
[I 19:46:20.681 NotebookApp] http://localhost:8888/?token=64965e6933d9f6b4b94136448c2f3161863cda2e873b1013
[I 19:46:20.681 NotebookApp] or http://127.0.0.1:8888/?token=64965e6933d9f6b4b94136448c2f3161863cda2e873b1013

Open http://127.0.0.1:8888/?token=64965e6933d9f6b4b94136448c2f3161863cda2e873b1013 in a browser.

Create a new folder.

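1.2 Basic Jupyter operations

- Create a cell: a (insert above) and b (insert below)

- Delete a cell: x (cut) and dd (delete)

- Switch cell type: y and m (switch between code and markdown)

- Run a cell: shift + enter

- Show help for the object under the cursor: shift + Tab

- Complete code: Tab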

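2 Web crawler basics

2.1 Crawler concepts

Crawler: a program that imitates a browser going online and then fetches data from the internet.

Types of crawlers

- General-purpose crawler: crawls the data of an entire page; it is a key component of a search engine's crawling system.

- Focused crawler: built on top of the general-purpose crawler; it picks out specific content through data parsing (regular expressions, BeautifulSoup4, XPath).

- Incremental crawler: monitors data on the web and crawls only what has been updated.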
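To make the incremental crawler more concrete, here is a minimal sketch, assuming a page counts as "updated" when the hash of its body changes. The `SeenStore` class and the example.com URL are hypothetical names used only for illustration; a real crawler would also persist the fingerprints between runs.

```python
import hashlib


class SeenStore:
    """Remembers one fingerprint per URL so unchanged pages can be skipped."""

    def __init__(self):
        self._fingerprints = {}

    def is_new_or_changed(self, url, body):
        # Hash the page body; identical content always yields the same digest.
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if self._fingerprints.get(url) == digest:
            return False  # same content as last time, nothing to crawl
        self._fingerprints[url] = digest
        return True


store = SeenStore()
first = store.is_new_or_changed("https://example.com/a", "<p>v1</p>")   # never seen
second = store.is_new_or_changed("https://example.com/a", "<p>v1</p>")  # unchanged
third = store.is_new_or_changed("https://example.com/a", "<p>v2</p>")   # updated
print(first, second, third)
```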

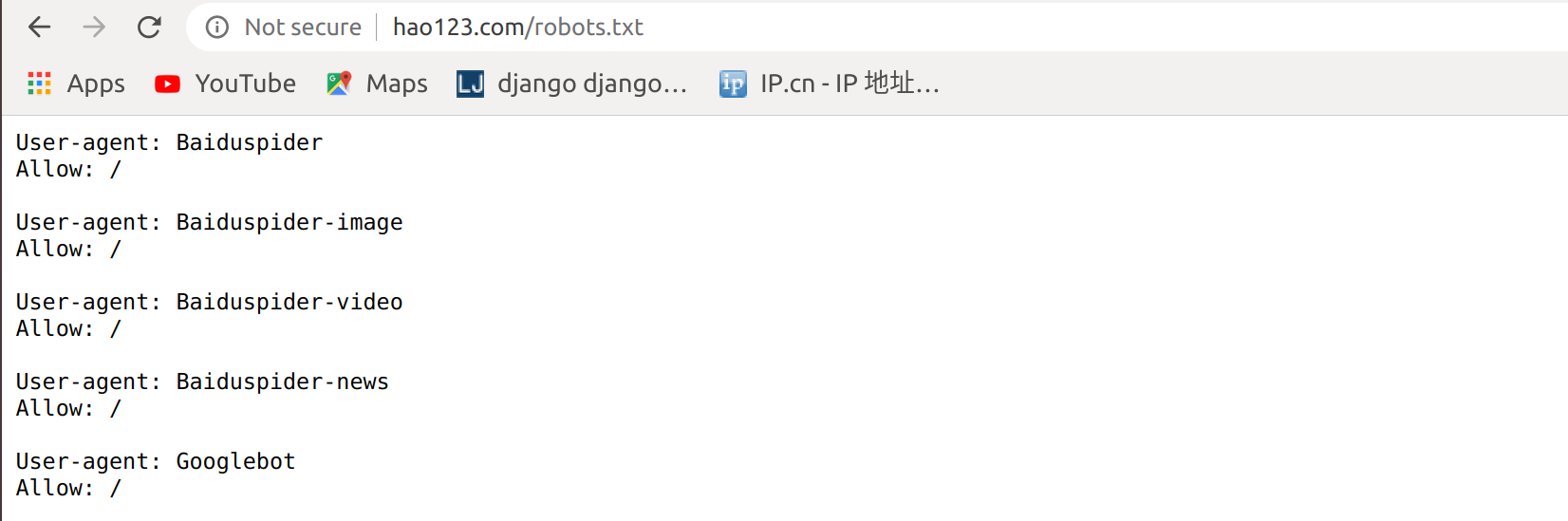

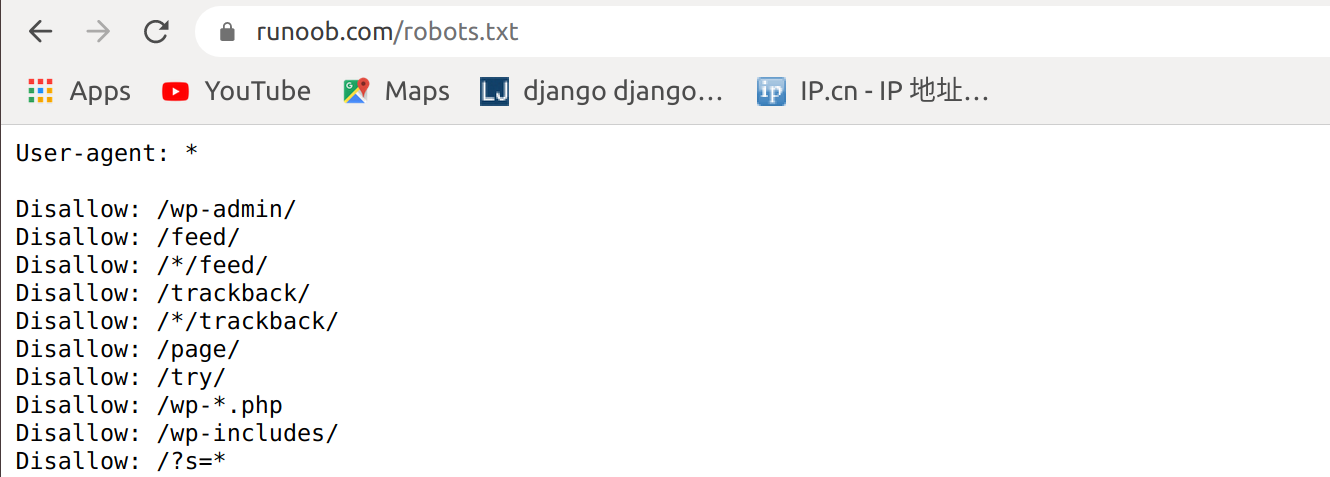

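Anti-crawling mechanisms

robots.txt protocol: a plain-text convention. It only holds back well-behaved crawlers and cannot stop anyone who chooses to ignore it.

Disallow

For study purposes, the Disallow rules can be ignored.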

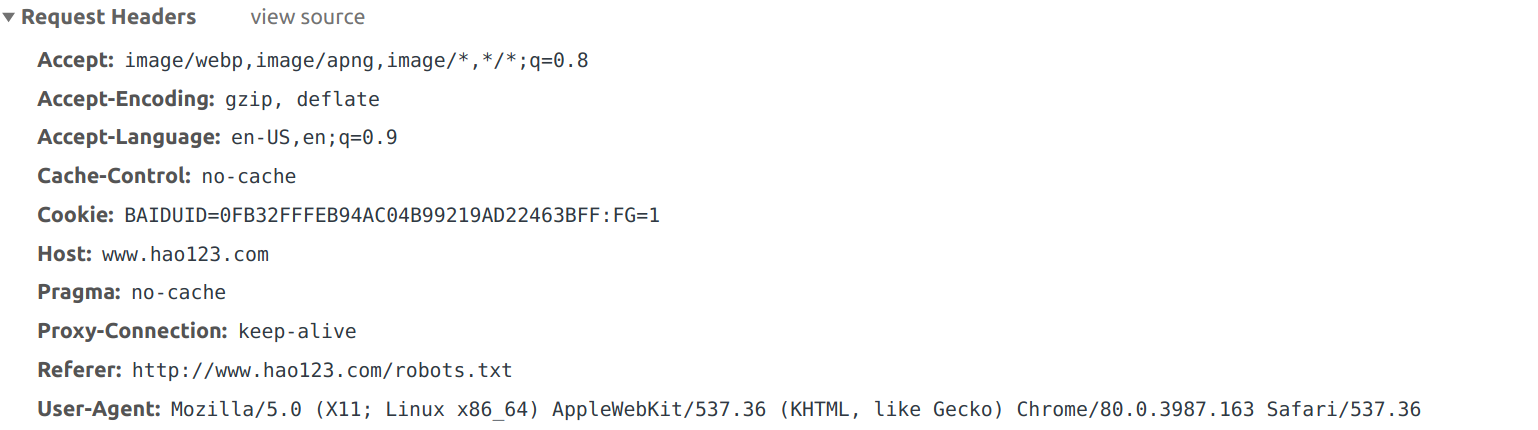

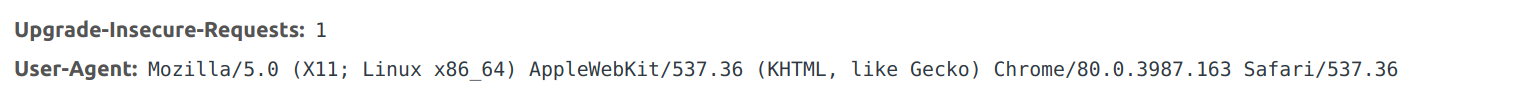
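For readers who do want to honor robots.txt, the standard library can evaluate its rules. The sketch below parses a made-up robots.txt from a string, so it runs offline; the rules, the `my-crawler` agent name, and the example.com URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: every agent is barred from /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch() answers: may this user agent request this URL?
allowed_home = parser.can_fetch("my-crawler", "https://example.com/index.html")
allowed_private = parser.can_fetch("my-crawler", "https://example.com/private/data.html")
print(allowed_home, allowed_private)
```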

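User-Agent detection (the User-Agent identifies the client that carries the request)

It contains the operating system, the browser, and the browser version.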
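As a rough illustration of what a User-Agent carries, the sketch below pulls the platform and the browser version out of the Chrome-on-Linux string used in the request examples later in this document. The two regular expressions are a simplification that assumes this particular layout; User-Agent strings in general are not this regular.

```python
import re

USER_AGENT = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"
)

# The first parenthesised group describes the platform / operating system.
platform = re.search(r"\(([^)]*)\)", USER_AGENT).group(1)

# "Chrome/<version>" names the browser and its version.
browser_version = re.search(r"Chrome/([\d.]+)", USER_AGENT).group(1)

print(platform)
print(browser_version)
```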

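Anti-anti-crawling strategy: use technical means to get around the anti-crawling mechanisms.

Protocols

robots.txt protocol

Request headers

- User-Agent: identifies the client that carries the request

- Connection: close

Response headers

Content-Type: the type of the response data

Fiddler is a packet-capture tool, used mainly for crawling data from mobile clients.

HTTP and HTTPS

Encryption schemes:

- Symmetric-key encryption: the client sends the encrypted data and the key together to the server (drawback: both the data and the key can be intercepted).

- Asymmetric-key encryption: the server generates a public/private key pair and sends the public key to the client; the client encrypts the data with the public key and sends it to the server, which decrypts it with the private key (drawback: the client cannot verify that the public key is genuine).

- Certificate-based encryption: on top of asymmetric-key encryption, a third-party certificate authority digitally signs the public key (so the public key can be verified).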
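The asymmetric scheme can be illustrated with textbook RSA on tiny numbers. This is a toy sketch only: the primes, the exponent, and the message value are chosen for readability, there is no padding, and nothing here is secure or taken from how HTTPS libraries actually implement it.

```python
# Toy key pair built from two tiny primes (real keys use very large primes).
p, q = 61, 53
n = p * q                    # public modulus
phi = (p - 1) * (q - 1)
e = 17                       # public exponent, handed to the client
d = pow(e, -1, phi)          # private exponent, kept on the server (Python 3.8+)

message = 65                         # a small number standing in for the data
ciphertext = pow(message, e, n)      # client encrypts with the public key (e, n)
recovered = pow(ciphertext, d, n)    # server decrypts with the private key (d, n)

print(ciphertext, recovered)
```

Anyone who intercepts `ciphertext` and knows only `(e, n)` still needs `d` to recover the message, which is the point of keeping the private key on the server.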

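2.2 Basic use of the requests module

Purpose of the requests module: send requests and get the response data

What the requests module does: simulates a browser sending requests

Installing the requests module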

root@darren-virtual-machine:~/tmp# pip3 install requests

Requirement already satisfied: requests in /usr/lib/python3/dist-packages (2.18.4)

Coding workflow with the requests module

- 1. Specify the URL

- 2. Send a request to the site

- 3. Get the response data

- 4. Persist the data
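The four steps can be sketched as one small function. To keep the sketch runnable without network access, the HTTP call is passed in as a `fetch` argument; `crawl_to_file` and `fake_fetch` are hypothetical names introduced here, not part of requests.

```python
import tempfile
from pathlib import Path


def crawl_to_file(url, out_path, fetch):
    # Step 1: the URL is supplied by the caller.
    # Steps 2 and 3: send the request and take the response text.
    page_text = fetch(url)
    # Step 4: persist the text to disk.
    Path(out_path).write_text(page_text, encoding="utf-8")
    return page_text


def fake_fetch(url):
    # Hypothetical stand-in for a real HTTP call, so the sketch runs offline.
    return f"<html><body>fetched {url}</body></html>"


out_file = Path(tempfile.mkdtemp()) / "page.html"
saved = crawl_to_file("https://www.sogou.com/", out_file, fake_fetch)
print(out_file.read_text(encoding="utf-8") == saved)
```

With requests installed, `fetch` could be something like `lambda u: requests.get(u).text`.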

2.2.1 Crawling the Sogou home page

Following the workflow above, crawl the data of the Sogou home page.

Crawler code

# Import the requests module
import requests

# 1. Specify the URL
sogou_url = "https://www.sogou.com/"

# 2. Send a request to the site
response = requests.get(url=sogou_url)
print(response)

# 3. Get the response data
# .text returns the page as text. Which accessor to use depends on the type of data
# the server returns: .json() parses JSON data, .content returns the raw bytes.
page_text = response.text
print(page_text)

# 4. Persist the data
with open("./sogou.html", 'w', encoding="utf-8") as f:
    f.write(page_text)

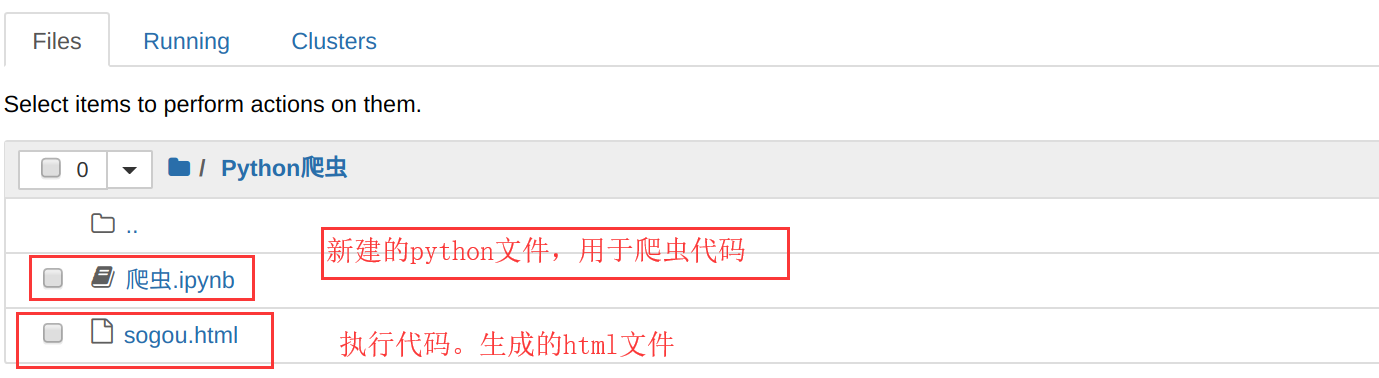

After it runs, check the generated HTML file.
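The difference between the three accessors mentioned in the code comment can be mimicked without a network call. The sketch below is only an analogy using the standard library: the byte string plays the role of `response.content`, decoding it corresponds roughly to `response.text`, and parsing it corresponds roughly to `response.json()`. The JSON payload is made up.

```python
import json

# Bytes as they would arrive over the wire (comparable to response.content).
raw_bytes = '{"word": "猫", "count": 1}'.encode("utf-8")

# Decoding the bytes gives a string (comparable to response.text).
text = raw_bytes.decode("utf-8")

# Parsing the string gives Python objects (comparable to response.json()).
data = json.loads(text)

print(type(raw_bytes).__name__, type(text).__name__, type(data).__name__)
```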

2.2.2 Crawling the Sogou results page for a given search term

# Task: crawl the Sogou results page for a given search term
import requests

# 1. Specify the URL
url = "https://www.sogou.com/web?query=周杰伦"

# 2. Send a request to the site
response = requests.get(url=url)
response.encoding = "utf-8"  # decode the response body as UTF-8; without this the text may be garbled

# 3. Get the response data
page_text = response.text

# 4. Persist the data
with open("./jay.html", 'w', encoding="utf-8") as f:
    f.write(page_text)
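Why setting the encoding matters can be shown with plain byte strings. The sketch assumes the server sends UTF-8 bytes while the client decodes them as ISO-8859-1, which is the fallback requests uses for text responses that declare no charset; whether Sogou triggers that fallback is not confirmed here.

```python
raw_bytes = "周杰伦".encode("utf-8")       # bytes as sent by the server

garbled = raw_bytes.decode("iso-8859-1")   # decoded with the wrong charset
correct = raw_bytes.decode("utf-8")        # decoded with the right charset

print(repr(garbled))
print(correct)
```

Each Chinese character occupies three bytes in UTF-8, so the wrong decoding turns three characters into nine unrelated ones.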

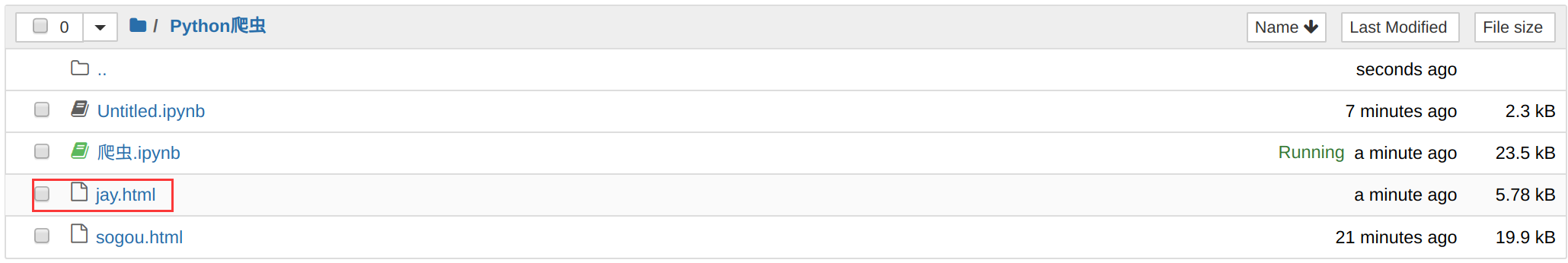

After running it, open jay.html.

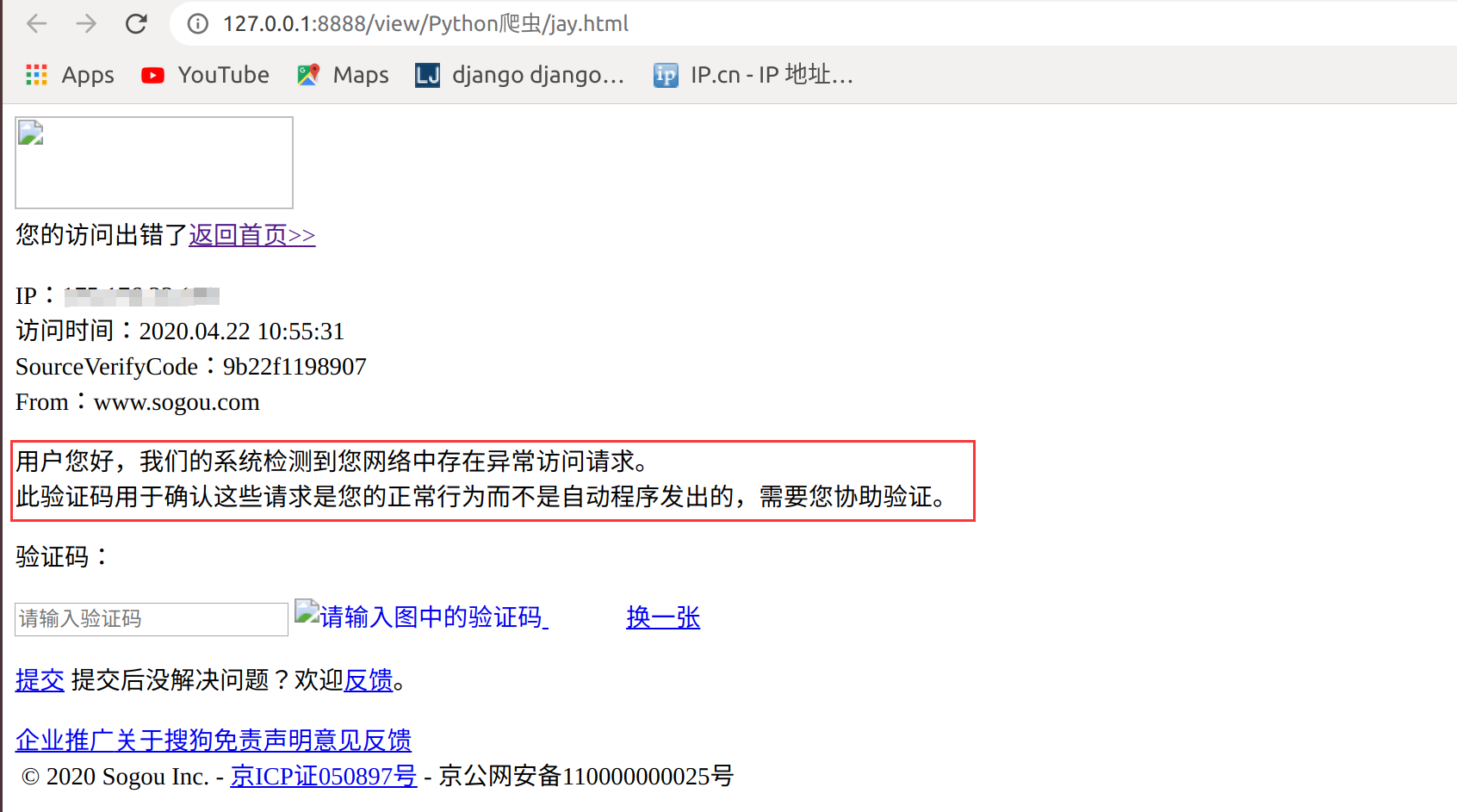

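The page looks like this because of an anti-crawling mechanism: the site checks the User-Agent. We therefore need to spoof the User-Agent by sending headers along with the request.

Anti-crawling mechanism: UA detection (User-Agent)

Anti-anti-crawling strategy: UA spoofing

Wrap the User-Agent in a dictionary and send it as the request headers: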

# Task: crawl the Sogou results page for a given search term
import requests

request_headers = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"
}

# 1. Specify the URL
url = "https://www.sogou.com/web?query=周杰伦"

# 2. Send a request to the site
# The site checks the User-Agent, so spoof it by passing headers with the request
response = requests.get(url=url, headers=request_headers)
response.encoding = "utf-8"  # decode the response body as UTF-8; without this the text may be garbled

# 3. Get the response data
page_text = response.text

# 4. Persist the data
with open("./jay.html", 'w', encoding="utf-8") as f:
    f.write(page_text)

Delete jay.html, run the script again, and open the new file.

The page now opens normally.

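2.2.3 Cracking Baidu Translate

When you change the word in the translator, only part of the page changes and the rest stays the same, because the page uses a dynamic AJAX request. Translate cat, then press F12 and look at the XHR entries.

They show the following:

Request URL: https://fanyi.baidu.com/sug
Request Method: POST
Status Code: 200
Remote Address: 117.34.61.202:443
Referrer Policy: no-referrer-when-downgrade
content-type: application/json; charset=utf-8
kw: ca

So we send a POST request: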

import requests

word = input("Please input a word: ")
url = "https://fanyi.baidu.com/sug"

request_headers = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"
}

form_data = {
    "kw": word
}

response = requests.post(url=url, headers=request_headers, data=form_data)
print(response.json())

Run it and enter dog:

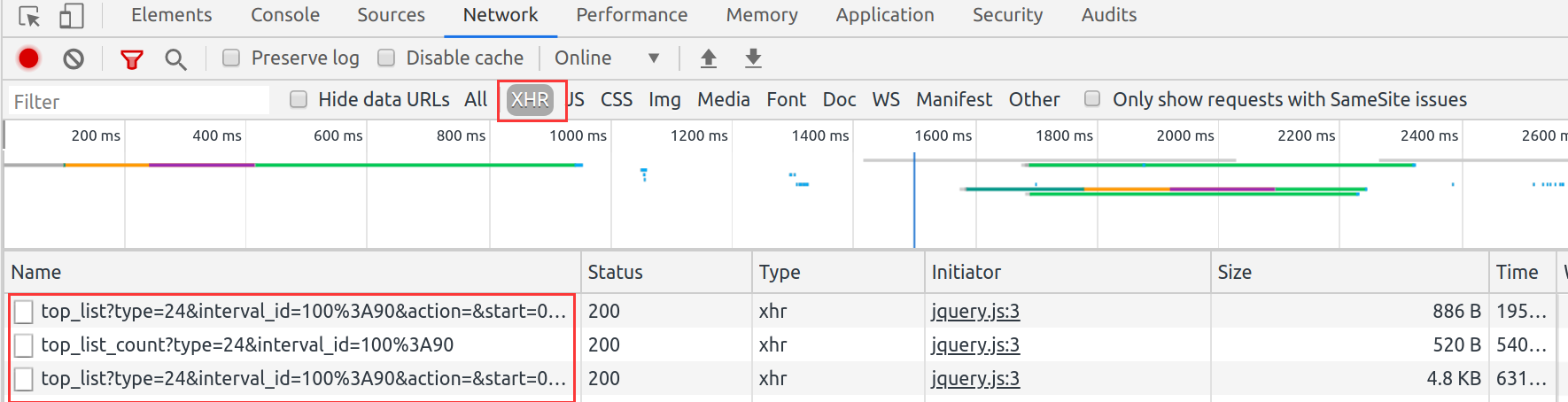

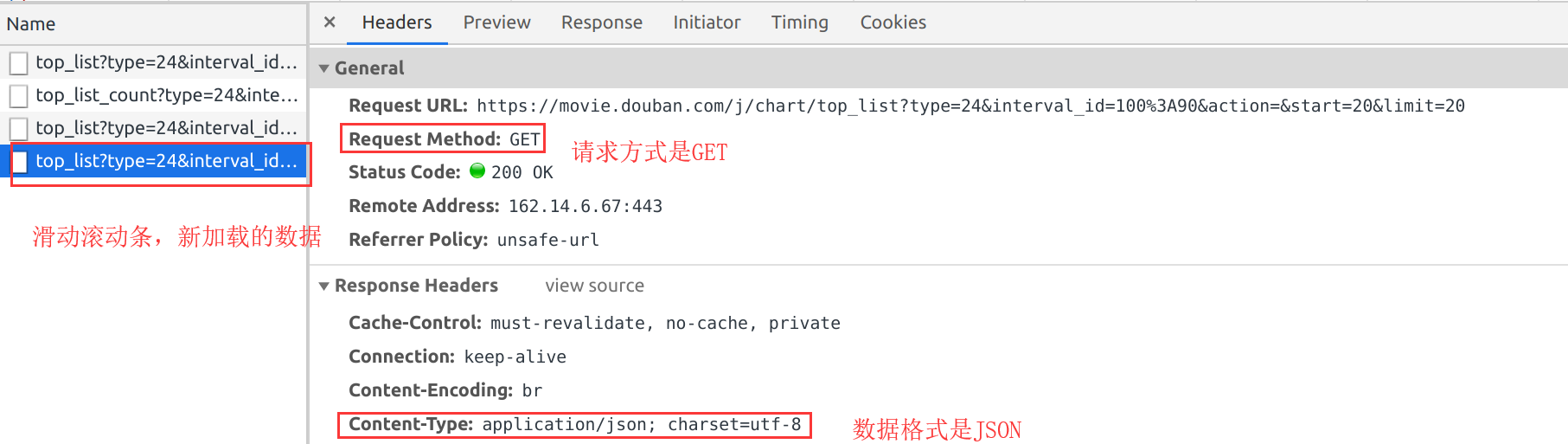
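It may help to see what actually travels in the POST body. When `data=` receives a dictionary, requests form-encodes it; the sketch below reproduces that encoding with the standard library and contrasts it with a JSON body, which is what the separate `json=` argument would send instead. The word "dog" is just the example input from above.

```python
import json
from urllib.parse import parse_qs, urlencode

form_data = {"kw": "dog"}

# Form encoding, as used for application/x-www-form-urlencoded bodies.
body = urlencode(form_data)

# JSON encoding, shown only for contrast.
json_body = json.dumps(form_data)

print(body)
print(json_body)
print(parse_qs(body))  # how a server would read the form body back
```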

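2.2.4 Crawling the Douban movie category chart

Target: the movie detail data on https://movie.douban.com/.

Page analysis

Open https://movie.douban.com/, click 排行榜 (Rankings), then 喜剧 (Comedy).

Press F12 and watch the data being loaded.

As you drag the scrollbar down, more data is loaded.

The data is fetched with a GET request.

Crawler code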

import requests

url = "https://movie.douban.com/j/chart/top_list"

request_headers = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"
}

form_data = {
    "type": "24",
    "interval_id": "100:90",
    "action": "",
    "start": "0",
    "limit": "200"
}

response = requests.get(url=url, headers=request_headers, params=form_data)
page_json = response.json()
print(len(page_json), page_json)

Execution result


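Response

On some sites the page does not contain all the data at first; the rest is loaded as you scroll down with the mouse wheel. That data is loaded dynamically (AJAX).

When sending a GET request, the query parameters can be passed with params.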
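To show how such parameters end up in the URL, here is a minimal sketch that builds the query string with the standard library, which is close to what requests does with `params`. It assumes, based only on the parameter names, that `start` is an offset and `limit` a page size; the helpers `page_params` and `page_urls` and the values 60 and 20 are made up for illustration.

```python
from urllib.parse import urlencode

BASE_URL = "https://movie.douban.com/j/chart/top_list"


def page_params(start, limit):
    # Same keys as the request above; only start and limit vary per page.
    return {
        "type": "24",
        "interval_id": "100:90",
        "action": "",
        "start": str(start),
        "limit": str(limit),
    }


def page_urls(total, limit):
    # One URL per page: start = 0, limit, 2 * limit, ...
    return [
        f"{BASE_URL}?{urlencode(page_params(start, limit))}"
        for start in range(0, total, limit)
    ]


urls = page_urls(total=60, limit=20)
for u in urls:
    print(u)
```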

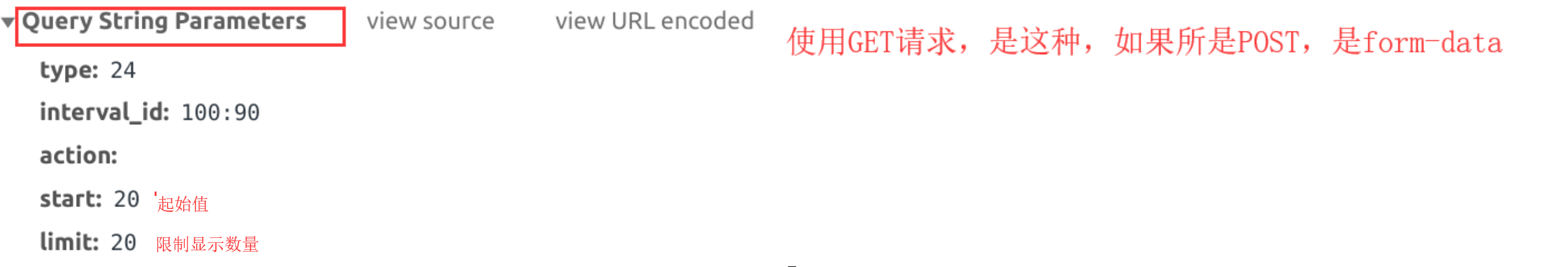

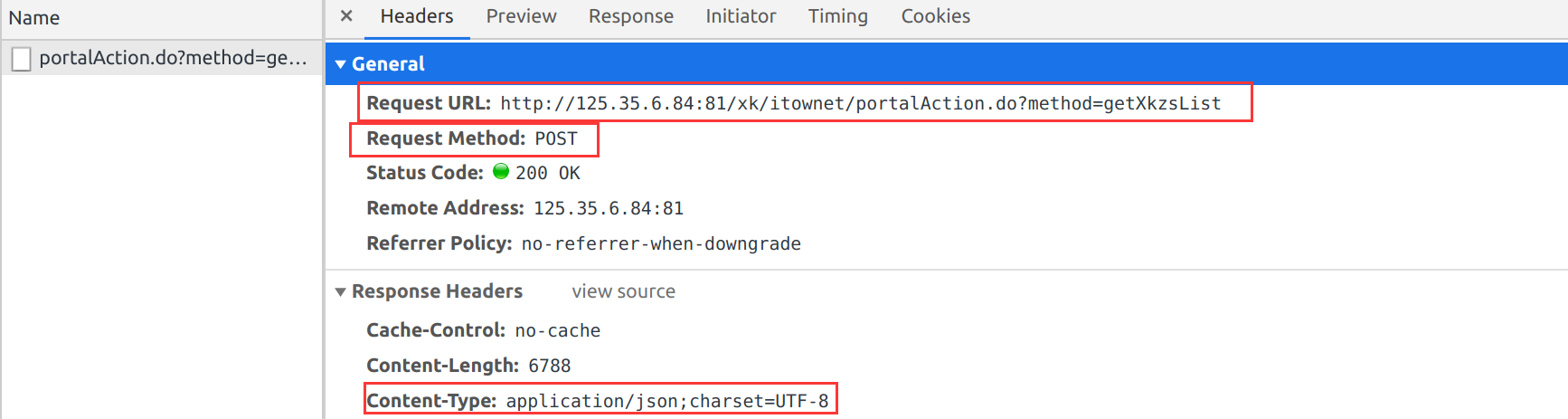

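2.2.5 Crawling data from a site

Crawl the cosmetics production license data of the People's Republic of China published by the National Medical Products Administration (国家药品监督管理总局): http://125.35.6.84:81/xk/

Analysis:

1. The list of company names on the home page is fetched through a dynamic AJAX request.

Request data:

2. Each entry in that list carries an ID, which is the important part: the ID is needed to build the URL of the detail page.

The response: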

{"filesize":"","keyword":"","list":[

{"ID":"ff83aff95c5541cdab5ca6e847514f88","EPS_NAME":"广东天姿化妆品科技有限公司","PRODUCT_SN":"粤妆20200022","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":17,"day":1,"hours":0,"minutes":0,"month":4,"nanos":0,"seconds":0,"time":1621180800000,"timezoneOffset":-480,"year":121},"XK_DATE":"2025-01-16","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91440101MA5CYUF0XX","XC_DATE":"2021-05-17","NUM_":1},

{"ID":"da523cec3df64ed9802cf806efda4056","EPS_NAME":"广州四季康妍生物科技有限公司","PRODUCT_SN":"粤妆20200063","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":21,"day":2,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587398400000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-04-20","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91440101MA5D1DMN7F","XC_DATE":"2020-04-21","NUM_":2},

{"ID":"e671d00b0ff149018f1faf5b897de3cf","EPS_NAME":"广州天香生物科技有限公司","PRODUCT_SN":"粤妆20160165","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":21,"day":2,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587398400000,"timezoneOffset":-480,"year":120},"XK_DATE":"2023-09-20","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"914401110589171106","XC_DATE":"2020-04-21","NUM_":3},

{"ID":"30c04cbe369c4ec387f0d18fd67b0778","EPS_NAME":"清远市英创生物科技有限公司","PRODUCT_SN":"粤妆20200059","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":20,"day":1,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587312000000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-04-07","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91441821MA53M9L4XY","XC_DATE":"2020-04-20","NUM_":4},

{"ID":"2d73c6a6178846b892a5a1c813e113e7","EPS_NAME":"广州莱梧生物科技有限公司","PRODUCT_SN":"粤妆20200030","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-01-19","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91440101MA5CXL0B4C","XC_DATE":"2020-04-17","NUM_":5},

{"ID":"f4f8ae8fc569402eb72b206a714ee231","EPS_NAME":"广州市美度化妆品有限公司","PRODUCT_SN":"粤妆20170096","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-04-16","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91440101766106562A","XC_DATE":"2020-04-17","NUM_":6},

{"ID":"5164661d6de24502b40ea5449a274393","EPS_NAME":"广州市白云区研美化妆品厂","PRODUCT_SN":"粤妆20160322","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2024-02-26","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"914401117733449561","XC_DATE":"2020-04-17","NUM_":7},

{"ID":"f360dc52327741e38f74791387d7b4cf","EPS_NAME":"广州市明颜生物科技有限公司","PRODUCT_SN":"粤妆20160090","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2023-10-31","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91440111304777644A","XC_DATE":"2020-04-17","NUM_":8},

{"ID":"20bdcf6625514214bc31166217049769","EPS_NAME":"长春呈实健康实业有限公司","PRODUCT_SN":"吉妆20200002","CITY_CODE":"275","XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-04-17","QF_MANAGER_NAME":"吉林省药品监督管理局","BUSINESS_LICENSE_NUMBER":"912201817025451953","XC_DATE":"2020-04-17","NUM_":9},

{"ID":"60006896fb6745a5a7b7bebe1b8896d7","EPS_NAME":"吉林洁洁宝生物科技有限公司","PRODUCT_SN":"吉妆20180008","CITY_CODE":"280","XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2023-06-25","QF_MANAGER_NAME":"吉林省食品药品监督管理局","BUSINESS_LICENSE_NUMBER":"9122080231679330X1","XC_DATE":"2020-04-17","NUM_":10},

{"ID":"ca1e0e50cdac4751bcc7aa7d76d7063c","EPS_NAME":"四川晶华生物科技有限公司","PRODUCT_SN":"川妆20160008","CITY_CODE":"165","XK_COMPLETE_DATE":{"date":17,"day":5,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1587052800000,"timezoneOffset":-480,"year":120},"XK_DATE":"2021-09-06","QF_MANAGER_NAME":"四川省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91510100052536587Y","XC_DATE":"2020-04-17","NUM_":11},

{"ID":"bbfa198050ec475889223abe5c4b4fe5","EPS_NAME":"广州发妍化妆品制造有限公司","PRODUCT_SN":"粤妆20200062","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":16,"day":4,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1586966400000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-04-15","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91440101MA59NBD02M","XC_DATE":"2020-04-16","NUM_":12},

{"ID":"6c5b9283cb9546e2b1bbbc8f386987cc","EPS_NAME":"广东柔泉生物科技有限公司","PRODUCT_SN":"粤妆20190027","CITY_CODE":null,"XK_COMPLETE_DATE":{"date":16,"day":4,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1586966400000,"timezoneOffset":-480,"year":120},"XK_DATE":"2024-01-24","QF_MANAGER_NAME":"广东省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91441821MA521RD189","XC_DATE":"2020-04-16","NUM_":13},

{"ID":"8937c86965f04e789a44cc7fdf3fa63c","EPS_NAME":"湖南百尔泰克生物科技有限公司","PRODUCT_SN":"湘妆20200004","CITY_CODE":"189","XK_COMPLETE_DATE":{"date":15,"day":3,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1586880000000,"timezoneOffset":-480,"year":120},"XK_DATE":"2025-04-14","QF_MANAGER_NAME":"湖南省药品监督管理局","BUSINESS_LICENSE_NUMBER":"91430624060114819G","XC_DATE":"2020-04-15","NUM_":14},

{"ID":"4ddf34fafdc54c0fa4c6d5b3be01f789","EPS_NAME":"湖南银华日用化妆品有限公司","PRODUCT_SN":"湘妆20170008","CITY_CODE":"189","XK_COMPLETE_DATE":{"date":15,"day":3,"hours":0,"minutes":0,"month":3,"nanos":0,"seconds":0,"time":1586880000000,"timezoneOffset":-480,"year":120},"XK_DATE":"2022-09-21","QF_MANAGER_NAME":"湖南省药品监督管理局","BUSINESS_LICENSE_NUMBER":"914306003256597516","XC_DATE":"2020-04-15","NUM_":15}],

"orderBy":"createDate","orderType":"desc","pageCount":354,"pageNumber":1,"pageSize":15,"property":"","totalCount":5309}

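3. The data on a company's detail page is also fetched dynamically through an AJAX request.

Open one of the detail pages, for example http://125.35.6.84:81/xk/itownet/portal/dzpz.jsp?id=ff83aff95c5541cdab5ca6e847514f88

4. The request that fetches the detail data carries this ID as a parameter; only through this request can we get the detail-page data.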
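The analysis above can be tried offline on a trimmed copy of the response. The sketch keeps only two entries and two fields per entry from the JSON shown earlier; `SAMPLE` and `DETAIL_PAGE` are names introduced here, and the page-count check simply assumes `pageCount` is `totalCount` divided by `pageSize`, rounded up.

```python
import json
import math

# Trimmed copy of the list response: two entries, two fields each.
SAMPLE = """{"list": [
  {"ID": "ff83aff95c5541cdab5ca6e847514f88", "EPS_NAME": "广东天姿化妆品科技有限公司"},
  {"ID": "da523cec3df64ed9802cf806efda4056", "EPS_NAME": "广州四季康妍生物科技有限公司"}],
  "pageCount": 354, "pageNumber": 1, "pageSize": 15, "totalCount": 5309}"""

DETAIL_PAGE = "http://125.35.6.84:81/xk/itownet/portal/dzpz.jsp?id="

page_json = json.loads(SAMPLE)

# Step 2 of the analysis: collect the ID of every company in the list.
ids = [entry["ID"] for entry in page_json["list"]]

# Step 3: each ID maps to one detail page.
detail_pages = [DETAIL_PAGE + company_id for company_id in ids]

# Sanity check on the paging fields.
expected_pages = math.ceil(page_json["totalCount"] / page_json["pageSize"])

print(ids)
print(detail_pages[0])
print(expected_pages)
```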

First collect the IDs:

import requests

url = "http://125.35.6.84:81/xk/itownet/portalAction.do?method=getXkzsList"

request_headers = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"
}

id_list = []

for i in range(1, 6):
    form_data = {
        "on": "true",
        "page": str(i),
        "pageSize": "15",
        "productName": "",
        "conditionType": "1",
        "applyname": "",
        "applysn": ""
    }
    # Send a request to the company list and collect the ID values
    page_json = requests.post(url=url, headers=request_headers, data=form_data).json()
    name_list = page_json["list"]
    for name in name_list:
        name_id = name["ID"]
        id_list.append(name_id)

print(id_list)

Output:

['ff83aff95c5541cdab5ca6e847514f88', 'da523cec3df64ed9802cf806efda4056', 'e671d00b0ff149018f1faf5b897de3cf', '30c04cbe369c4ec387f0d18fd67b0778', '2d73c6a6178846b892a5a1c813e113e7', 'f4f8ae8fc569402eb72b206a714ee231',

'5164661d6de24502b40ea5449a274393', 'f360dc52327741e38f74791387d7b4cf', '20bdcf6625514214bc31166217049769', '60006896fb6745a5a7b7bebe1b8896d7', 'ca1e0e50cdac4751bcc7aa7d76d7063c', 'bbfa198050ec475889223abe5c4b4fe5',

'6c5b9283cb9546e2b1bbbc8f386987cc', '8937c86965f04e789a44cc7fdf3fa63c', '4ddf34fafdc54c0fa4c6d5b3be01f789', '510b2339331749d2b820f3db249105c5', '37b9c5287bf84534abc6023d8acb8937', '23855b6486454b40bcaf2135a0700d5a',

'f211bc3808a2499da794eeaeb7729bed', '7afdfa6de55a4019818b7fb49460e19c', 'b81e4f82b4d34720b9eafcaf98d8e990', '9c0049652c4f4265a0edfd04a2fe92dd', '98187585f016448ca9624a736ebe7dfc', 'f8f938b56a4c4b1b99ffb8e6ed3ab505',

'c1ccfe6d4a6a415db24b28ed70e9a0aa', '42417a355d4c4c5e9b975255a80ce3d4', '057ca436be1d4b34a2ce8033963fd29b', '0b7974027f5743a5924c40a3818b2870', '52dff200c6e1426bbcd7aab2606c9d44', '14e7561b25b4439ba4ad16956d84353e',

'ce4800ecc82b40bbb8aeb964ec94b4bf', '43d70d602ff24033befdc6a8e607b7c4', '6e0df83e46ba4b96b8f3cde8986fd092', 'c677652ebd7f443d9ae3737c58eb65c5', '99f5fe0dff544c54a2823655cbb9798c', 'd8c72e8943894a65aac6b2a6e6a79726',

'fc33c5d6dac04bfba8d252e142fc537f', '2c673eede7f9478d963c8dd5227db9b2', '9c93dde6da604800b781a2f0270634b5', 'b6a6a12b71e74b7088cb44002988b45c', '5e1dec1431da4a17bb384bfbd4e3488d', 'b4437b636b5944eb9eadc1418f312b19',

'60e1d57d0bb1477d85e074c0428f47a0', '29345ac95fa74dd4a85ee6d881278610', 'd10cb1f6747848b7975254b02a4474ce', '721391c5fcb949bf953700f701cc9cc2', 'adb14d0b39be44928ed7d09fe13a969f', 'fbcb4b88fa7d44e89fe8f288e1cd1e2c',

'790a53b247f84004a7154d3a61ceabf7', '88c63f139d194d1795617d033f52bcd6', '432f87ac66554634a2b4f2759ec14837', '2c7bfa3ae96d4d078d11ce098893e6dd', '39e63fdb99ca4f0cab597e5967acc549', 'c6f057c2fc504c25b10a6ddeecec72b0',

'e8c66a6aa1b04622a13792b9946313d5', '9a4a52aac203462c86972620aeafd668', 'f5e9e7f78ae54187b75bafe16e0f74b6', 'd5bcc50ea05a4a90aec1801fd2b5f481', '38a385f3c4b040a48eb6f1bcf9508d7b', '93f1414790c24ca4b05eb0e90cd01f01',

'4d925dd8ade6466fab88761b7364997d', '63cb51c3ff5d4e8b91e82f310c7f746d', '608bc2225df540a2ac888138d53d312b', 'e27745df29b04556824ab32eaccf5a38', 'b517d913011e463aad4f31a29ae4fa14', '793d2c8ea75f41b5a5fd90c2c7c1bf5a',

'3bf4068d7cef4648bd6d03f1eb976dae', '317543f7e9ab4658bab1a911222408ec', '11f64bb411464dfebbe9a409f9b01ba9', '33ad71e3896f4ee48d81083038960fab', '374f8cbb4d244b89bef12df04dd472c5', '4510ccda515d4466a546d5236a652ed8',

'b4d6946fbbca4f3ebf30f3ea4869bea2', 'e334e1b017a845df9bf9a5cb71d0036d', '214a097fcb0e4d4faac9a2f8d0dda358']

Then fetch the detail-page data:

import requests

url = "http://125.35.6.84:81/xk/itownet/portalAction.do?method=getXkzsList"
# URL of the detail-page request
detail_url = "http://125.35.6.84:81/xk/itownet/portalAction.do?method=getXkzsById"

request_headers = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"
}

all_data_list = []

for i in range(1, 6):
    form_data = {
        "on": "true",
        "page": str(i),
        "pageSize": "15",
        "productName": "",
        "conditionType": "1",
        "applyname": "",
        "applysn": ""
    }
    # Send a request to the company list and collect the ID values
    page_json = requests.post(url=url, headers=request_headers, data=form_data).json()
    # Take the value of the "list" field from the JSON
    name_list = page_json["list"]
    for name in name_list:
        name_id = name["ID"]
        detail_data = {
            "id": name_id
        }
        # With the ID wrapped up, request the detail data for this company
        detail_json = requests.post(url=detail_url, headers=request_headers, data=detail_data).json()
        all_data_list.append(detail_json)

print(len(all_data_list), all_data_list)

Run it to get the full result.
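The script above only prints the collected data, so the persistence step of the workflow is still open. A minimal sketch of that step follows, assuming the result is a list of dictionaries; the record shape, the `save_records` and `load_records` helpers, and the file name are hypothetical, and the sample record reuses one ID and company name from the list response rather than real detail-page fields.

```python
import json
import tempfile
from pathlib import Path


def save_records(records, out_path):
    # ensure_ascii=False keeps Chinese text readable in the file.
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)


def load_records(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Hypothetical stand-in for all_data_list.
sample_records = [
    {"id": "ff83aff95c5541cdab5ca6e847514f88", "name": "广东天姿化妆品科技有限公司"}
]

out_file = Path(tempfile.mkdtemp()) / "details.json"
save_records(sample_records, out_file)
restored = load_records(out_file)
print(restored == sample_records)
```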

Reference: 老男孩教育, https://www.oldboyedu.com/