作业内容,完成作业便可熟悉如下内容:

cell 1 设置绘图默认参数

1 # Run some setup code for this notebook. 2 3 import random 4 import numpy as np 5 from cs231n.data_utils import load_CIFAR10 6 import matplotlib.pyplot as plt 7 8 # This is a bit of magic to make matplotlib figures appear inline in the 9 # notebook rather than in a new window. 10 %matplotlib inline 11 plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots 12 plt.rcParams['image.interpolation'] = 'nearest' 13 plt.rcParams['image.cmap'] = 'gray' 14 15 # Some more magic so that the notebook will reload external python modules; 16 # see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython 17 %load_ext autoreload 18 %autoreload 2

cell 2 读取数据得到数组(矩阵)

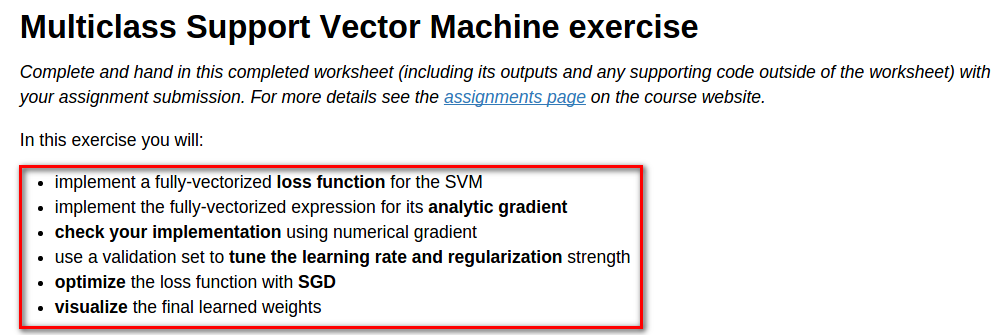

1 # Load the raw CIFAR-10 data. 2 cifar10_dir = 'cs231n/datasets/cifar-10-batches-py' 3 X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir) 4 5 # As a sanity check, we print out the size of the training and test data. 6 print 'Training data shape: ', X_train.shape 7 print 'Training labels shape: ', y_train.shape 8 print 'Test data shape: ', X_test.shape 9 print 'Test labels shape: ', y_test.shape

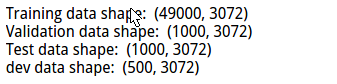

得到结果,之后会用到,方便矩阵运算:

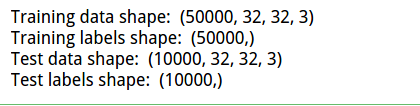

cell 3 对每一类随机选取对应的例子,并进行可视化:

1 # Visualize some examples from the dataset. 2 # We show a few examples of training images from each class. 3 classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck'] 4 num_classes = len(classes) 5 samples_per_class = 7 6 for y, cls in enumerate(classes): 7 idxs = np.flatnonzero(y_train == y) 8 idxs = np.random.choice(idxs, samples_per_class, replace=False) 9 for i, idx in enumerate(idxs): 10 plt_idx = i * num_classes + y + 1 11 plt.subplot(samples_per_class, num_classes, plt_idx) 12 plt.imshow(X_train[idx].astype('uint8')) 13 plt.axis('off') 14 if i == 0: 15 plt.title(cls) 16 plt.show()

可视化结果:

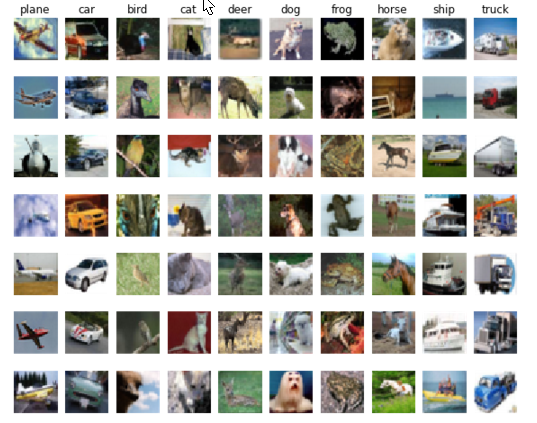

cell 4 将数据集划分为训练集,验证集。

1 # Split the data into train, val, and test sets. In addition we will 2 # create a small development set as a subset of the training data; 3 # we can use this for development so our code runs faster. 4 num_training = 49000 5 num_validation = 1000 6 num_test = 1000 7 num_dev = 500 8 9 # Our validation set will be num_validation points from the original 10 # training set. 11 mask = range(num_training, num_training + num_validation) 12 X_val = X_train[mask] 13 y_val = y_train[mask] 14 15 # Our training set will be the first num_train points from the original 16 # training set. 17 mask = range(num_training) 18 X_train = X_train[mask] 19 y_train = y_train[mask] 20 21 # We will also make a development set, which is a small subset of 22 # the training set. 23 mask = np.random.choice(num_training, num_dev, replace=False) 24 X_dev = X_train[mask] 25 y_dev = y_train[mask] 26 27 # We use the first num_test points of the original test set as our 28 # test set. 29 mask = range(num_test) 30 X_test = X_test[mask] 31 y_test = y_test[mask] 32 33 print 'Train data shape: ', X_train.shape 34 print 'Train labels shape: ', y_train.shape 35 print 'Validation data shape: ', X_val.shape 36 print 'Validation labels shape: ', y_val.shape 37 print 'Test data shape: ', X_test.shape 38 print 'Test labels shape: ', y_test.shape

得到各个数据集的尺寸:

cell 5 将数据reshape,方便计算

1 # Preprocessing: reshape the image data into rows 2 X_train = np.reshape(X_train, (X_train.shape[0], -1)) 3 X_val = np.reshape(X_val, (X_val.shape[0], -1)) 4 X_test = np.reshape(X_test, (X_test.shape[0], -1)) 5 X_dev = np.reshape(X_dev, (X_dev.shape[0], -1)) 6 7 # As a sanity check, print out the shapes of the data 8 print 'Training data shape: ', X_train.shape 9 print 'Validation data shape: ', X_val.shape 10 print 'Test data shape: ', X_test.shape 11 print 'dev data shape: ', X_dev.shape

reshape 后的结果

cell 6 计算训练集的均值

1 # Preprocessing: subtract the mean image 2 # first: compute the image mean based on the training data 3 mean_image = np.mean(X_train, axis=0) 4 print mean_image[:10] # print a few of the elements 5 plt.figure(figsize=(4,4)) 6 plt.imshow(mean_image.reshape((32,32,3)).astype('uint8')) # visualize the mean image 7 plt.show()

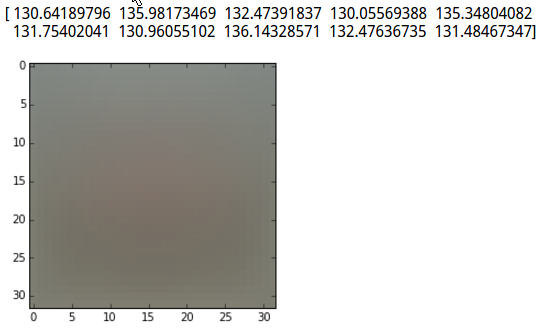

对均值可视化:

cell 7 训练集和测试集减去均值图像:

1 # second: subtract the mean image from train and test data 2 X_train -= mean_image 3 X_val -= mean_image 4 X_test -= mean_image 5 X_dev -= mean_image

cell 8 将截距项b添加到数据里,方便计算

1 # third: append the bias dimension of ones (i.e. bias trick) so that our SVM 2 # only has to worry about optimizing a single weight matrix W. 3 X_train = np.hstack([X_train, np.ones((X_train.shape[0], 1))]) 4 X_val = np.hstack([X_val, np.ones((X_val.shape[0], 1))]) 5 X_test = np.hstack([X_test, np.ones((X_test.shape[0], 1))]) 6 X_dev = np.hstack([X_dev, np.ones((X_dev.shape[0], 1))]) 7 8 print X_train.shape, X_val.shape, X_test.shape, X_dev.shape

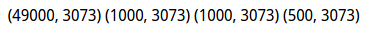

数据尺寸:

此前步骤为初始步骤,基本不需要修改,之后是作业核心。

cell 9 使用for循环计算loss

1 # Evaluate the naive implementation of the loss we provided for you: 2 from cs231n.classifiers.linear_svm import svm_loss_naive 3 import time 4 5 # generate a random SVM weight matrix of small numbers 6 W = np.random.randn(3073, 10) * 0.0001 7 8 loss, grad = svm_loss_naive(W, X_dev, y_dev, 0.00001) 9 print 'loss: %f' % (loss, )

svm_loss_naive()具体实现:

1 def svm_loss_naive(W, X, y, reg): 2 """ 3 Structured SVM loss function, naive implementation (with loops). 4 5 Inputs have dimension D, there are C classes, and we operate on minibatches 6 of N examples. 7 8 Inputs: 9 - W: A numpy array of shape (D, C) containing weights. 10 - X: A numpy array of shape (N, D) containing a minibatch of data. 11 - y: A numpy array of shape (N,) containing training labels; y[i] = c means 12 that X[i] has label c, where 0 <= c < C. 13 - reg: (float) regularization strength 14 15 Returns a tuple of: 16 - loss as single float 17 - gradient with respect to weights W; an array of same shape as W 18 """ 19 dW = np.zeros(W.shape) # initialize the gradient as zero 20 21 # compute the loss and the gradient 22 # 10 classes 23 num_classes = W.shape[1] 24 #train 49000 25 num_train = X.shape[0] 26 loss = 0.0 27 """ 28 for i ->49000: 29 for j ->10: 30 compute every train smaple about each class 31 """ 32 #L = np.zeros(num_train,nun_class) 33 for i in xrange(num_train): 34 scores = X[i].dot(W) 35 #1*3073 * 3073*10 >>1*10 36 correct_class_score = scores[y[i]] 37 for j in xrange(num_classes): 38 if j == y[i]: 39 continue 40 margin = scores[j] - correct_class_score + 1 # note delta = 1 41 if margin > 0: 42 loss += margin 43 #dW 3073*10 44 dW[:,j] += X[i].T 45 dW[:,y[i]] -= X[i].T 46 47 # Right now the loss is a sum over all training examples, but we want it 48 # to be an average instead so we divide by num_train. 49 loss /= num_train 50 dW /= num_train 51 # Add regularization to the loss. 52 # 1/2*w^2 w*w W*W = np.multiply(W,W) >>>>elementwise product 53 loss += reg * np.sum(W * W) 54 dW +=reg*W 55 ############################################################################# 56 # TODO: # 57 # Compute the gradient of the loss function and store it dW. # 58 # Rather that first computing the loss and then computing the derivative, # 59 # it may be simpler to compute the derivative at the same time that the # 60 # loss is being computed. As a result you may need to modify some of the # 61 # code above to compute the gradient. # 62 ############################################################################# 63 #computing coreect is differnt with others 64 return loss, dW

loss结果为8.97460

cell 10 完善svm_loss_naive(),梯度下降更新变量:

1 # Once you've implemented the gradient, recompute it with the code below 2 # and gradient check it with the function we provided for you 3 4 # Compute the loss and its gradient at W. 5 loss, grad = svm_loss_naive(W, X_dev, y_dev, 0.0) 6 7 # Numerically compute the gradient along several randomly chosen dimensions, and 8 # compare them with your analytically computed gradient. The numbers should match 9 # almost exactly along all dimensions. 10 from cs231n.gradient_check import grad_check_sparse 11 f = lambda w: svm_loss_naive(w, X_dev, y_dev, 0.0)[0] 12 grad_numerical = grad_check_sparse(f, W, grad) 13 14 # do the gradient check once again with regularization turned on 15 # you didn't forget the regularization gradient did you? 16 loss, grad = svm_loss_naive(W, X_dev, y_dev, 1e2) 17 f = lambda w: svm_loss_naive(w, X_dev, y_dev, 1e2)[0] 18 grad_numerical = grad_check_sparse(f, W, grad)

梯度更新代码已在cell9调用时给出。

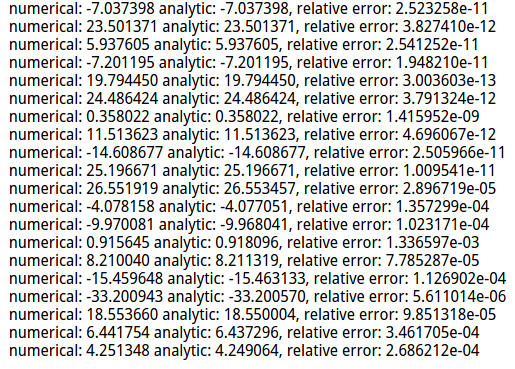

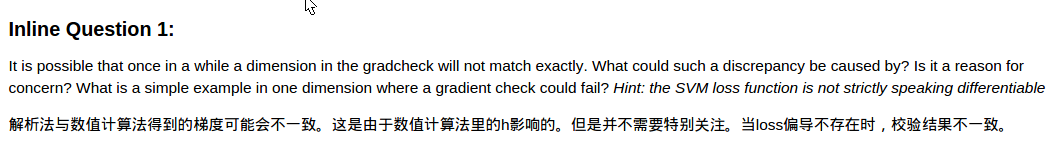

梯度校验》》》使用解析法得到的dw与数值计算法得到的结果比较:

问题:

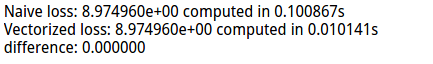

cell 11 使用向量(矩阵)的方式计算loss,并与之前的结果比较:

1 # Next implement the function svm_loss_vectorized; for now only compute the loss; 2 # we will implement the gradient in a moment. 3 tic = time.time() 4 loss_naive, grad_naive = svm_loss_naive(W, X_dev, y_dev, 0.00001) 5 toc = time.time() 6 print 'Naive loss: %e computed in %fs' % (loss_naive, toc - tic) 7 8 from cs231n.classifiers.linear_svm import svm_loss_vectorized 9 tic = time.time() 10 loss_vectorized, _ = svm_loss_vectorized(W, X_dev, y_dev, 0.00001) 11 toc = time.time() 12 print 'Vectorized loss: %e computed in %fs' % (loss_vectorized, toc - tic) 13 14 # The losses should match but your vectorized implementation should be much faster. 15 print 'difference: %f' % (loss_naive - loss_vectorized)

差异结果:

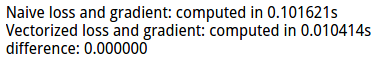

cell 12 使用向量(矩阵)的方式计算grad,并与之前的结果比较:

1 # Complete the implementation of svm_loss_vectorized, and compute the gradient 2 # of the loss function in a vectorized way. 3 4 # The naive implementation and the vectorized implementation should match, but 5 # the vectorized version should still be much faster. 6 tic = time.time() 7 _, grad_naive = svm_loss_naive(W, X_dev, y_dev, 0.00001) 8 toc = time.time() 9 print 'Naive loss and gradient: computed in %fs' % (toc - tic) 10 11 tic = time.time() 12 _, grad_vectorized = svm_loss_vectorized(W, X_dev, y_dev, 0.00001) 13 toc = time.time() 14 print 'Vectorized loss and gradient: computed in %fs' % (toc - tic) 15 16 # The loss is a single number, so it is easy to compare the values computed 17 # by the two implementations. The gradient on the other hand is a matrix, so 18 # we use the Frobenius norm to compare them. 19 difference = np.linalg.norm(grad_naive - grad_vectorized, ord='fro') 20 print 'difference: %f' % difference

结果比较:

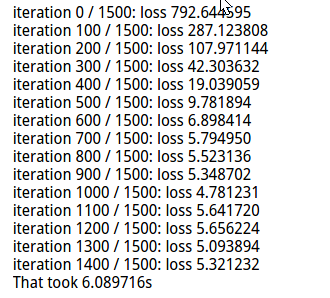

cell 13 随机梯度下降》》SGD

1 # In the file linear_classifier.py, implement SGD in the function 2 # LinearClassifier.train() and then run it with the code below. 3 from cs231n.classifiers import LinearSVM 4 svm = LinearSVM() 5 tic = time.time() 6 loss_hist = svm.train(X_train, y_train, learning_rate=1e-7, reg=5e4, 7 num_iters=1500, verbose=True) 8 toc = time.time() 9 print 'That took %fs' % (toc - tic)

loss 结果:

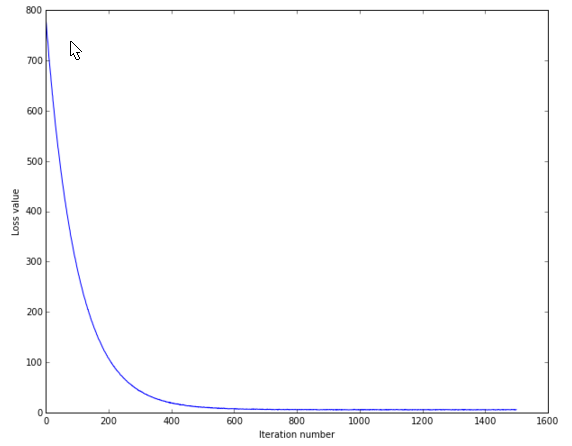

cell 14 画出loss变化曲线:

1 # A useful debugging strategy is to plot the loss as a function of 2 # iteration number: 3 plt.plot(loss_hist) 4 plt.xlabel('Iteration number') 5 plt.ylabel('Loss value') 6 plt.show()

结果:

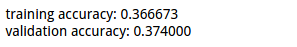

cell 15 用上述训练的参数,对验证集与训练集进行测试:

1 # Write the LinearSVM.predict function and evaluate the performance on both the 2 # training and validation set 3 y_train_pred = svm.predict(X_train) 4 print 'training accuracy: %f' % (np.mean(y_train == y_train_pred), ) 5 y_val_pred = svm.predict(X_val) 6 print 'validation accuracy: %f' % (np.mean(y_val == y_val_pred), )

结果:

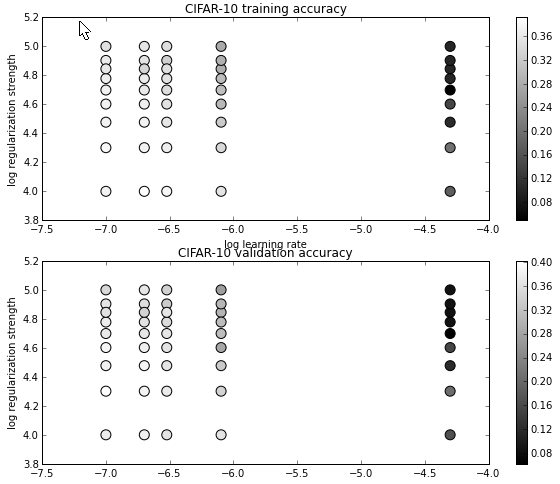

cell 16 对超参数》》学习率 正则化强度 使用训练集进行训练,使用验证集验证结果,选取最好的超参数。

1 # Use the validation set to tune hyperparameters (regularization strength and 2 # learning rate). You should experiment with different ranges for the learning 3 # rates and regularization strengths; if you are careful you should be able to 4 # get a classification accuracy of about 0.4 on the validation set. 5 learning_rates = [1e-7, 2e-7, 3e-7, 5e-5, 8e-7] 6 regularization_strengths = [1e4, 2e4, 3e4, 4e4, 5e4, 6e4, 7e4, 8e4, 1e5] 7 8 # results is dictionary mapping tuples of the form 9 # (learning_rate, regularization_strength) to tuples of the form 10 # (training_accuracy, validation_accuracy). The accuracy is simply the fraction 11 # of data points that are correctly classified. 12 results = {} 13 best_val = -1 # The highest validation accuracy that we have seen so far. 14 best_svm = None # The LinearSVM object that achieved the highest validation rate. 15 16 ################################################################################ 17 # TODO: # 18 # Write code that chooses the best hyperparameters by tuning on the validation # 19 # set. For each combination of hyperparameters, train a linear SVM on the # 20 # training set, compute its accuracy on the training and validation sets, and # 21 # store these numbers in the results dictionary. In addition, store the best # 22 # validation accuracy in best_val and the LinearSVM object that achieves this # 23 # accuracy in best_svm. # 24 # # 25 # Hint: You should use a small value for num_iters as you develop your # 26 # validation code so that the SVMs don't take much time to train; once you are # 27 # confident that your validation code works, you should rerun the validation # 28 # code with a larger value for num_iters. # 29 ################################################################################ 30 iters = 1500 31 for lr in learning_rates: 32 for rs in regularization_strengths: 33 svm = LinearSVM() 34 svm.train(X_train, y_train, learning_rate=lr, reg=rs, num_iters=iters) 35 y_train_pred = svm.predict(X_train) 36 accu_train = np.mean(y_train == y_train_pred) 37 y_val_pred = svm.predict(X_val) 38 accu_val = np.mean(y_val == y_val_pred) 39 40 results[(lr, rs)] = (accu_train, accu_val) 41 42 if best_val < accu_val: 43 best_val = accu_val 44 best_svm = svm 45 ################################################################################ 46 # END OF YOUR CODE # 47 ################################################################################ 48 49 # Print out results. 50 for lr, reg in sorted(results): 51 train_accuracy, val_accuracy = results[(lr, reg)] 52 print 'lr %e reg %e train accuracy: %f val accuracy: %f' % ( 53 lr, reg, train_accuracy, val_accuracy) 54 55 print 'best validation accuracy achieved during cross-validation: %f' % best_val

最终结果:

cell 17 对各组超参数的结果可视化:

1 # Visualize the cross-validation results 2 import math 3 x_scatter = [math.log10(x[0]) for x in results] 4 y_scatter = [math.log10(x[1]) for x in results] 5 6 # plot training accuracy 7 marker_size = 100 8 colors = [results[x][0] for x in results] 9 plt.subplot(2, 1, 1) 10 plt.scatter(x_scatter, y_scatter, marker_size, c=colors) 11 plt.colorbar() 12 plt.xlabel('log learning rate') 13 plt.ylabel('log regularization strength') 14 plt.title('CIFAR-10 training accuracy') 15 16 # plot validation accuracy 17 colors = [results[x][1] for x in results] # default size of markers is 20 18 plt.subplot(2, 1, 2) 19 plt.scatter(x_scatter, y_scatter, marker_size, c=colors) 20 plt.colorbar() 21 plt.xlabel('log learning rate') 22 plt.ylabel('log regularization strength') 23 plt.title('CIFAR-10 validation accuracy') 24 plt.show()

显示结果:

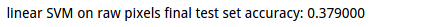

cell 18 使用得到最好超参数的模型对test集进行预测,比较预测值与真实值,计算准确率。

1 # Evaluate the best svm on test set 2 y_test_pred = best_svm.predict(X_test) 3 test_accuracy = np.mean(y_test == y_test_pred) 4 print 'linear SVM on raw pixels final test set accuracy: %f' % test_accuracy

结果:

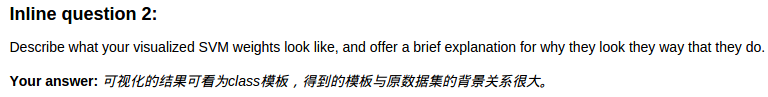

cell 19 对得到的w对应的class进行可视化:

1 # Visualize the learned weights for each class. 2 # Depending on your choice of learning rate and regularization strength, these may 3 # or may not be nice to look at. 4 w = best_svm.W[:-1,:] # strip out the bias 5 w = w.reshape(32, 32, 3, 10) 6 w_min, w_max = np.min(w), np.max(w) 7 classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck'] 8 for i in xrange(10): 9 plt.subplot(2, 5, i + 1) 10 11 # Rescale the weights to be between 0 and 255 12 wimg = 255.0 * (w[:, :, :, i].squeeze() - w_min) / (w_max - w_min) 13 plt.imshow(wimg.astype('uint8')) 14 plt.axis('off') 15 plt.title(classes[i])

结果:

问题:

注:在softmax 与svm的超参数选择时,使用了共同的类,以及类中不同的相应的方法。具体的文件内容与注释如下。

附:通关CS231n企鹅群:578975100 validation:DL-CS231n