Spark depends on Scala, so download both the Spark and Scala packages.

1. Extract the archives

    tar -zxf spark-1.3.0-bin-hadoop2.4.tgz -C /usr/local/
    ln -s /usr/local/spark-1.3.0-bin-hadoop2.4 /usr/local/spark
    tar -zxf scala-2.11.0.tgz -C /usr/local/
    ln -s /usr/local/scala-2.11.0 /usr/local/scala

2. Environment variables

Open ~/.bashrc, append the export lines, then reload the file:

    vi ~/.bashrc

    export SPARK_HOME=/usr/local/spark
    export PATH=$PATH:$SPARK_HOME/bin
    export SCALA_HOME=/usr/local/scala
    export PATH=$PATH:$SCALA_HOME/bin
    export SPARK_EXAMPLES_JAR=/usr/local/spark/lib/spark-examples-1.3.0-hadoop2.4.0.jar

    source ~/.bashrc
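A quick sanity check after reloading ~/.bashrc can catch a mistyped path early. The sketch below is only an illustration: `check_home` is a hypothetical helper name, not something shipped with Spark or Scala, and it assumes nothing beyond a POSIX shell.

```shell
# Hypothetical helper (not part of Spark): check that a *_HOME variable
# is set and points at an existing directory.
check_home() {
  # $1 = variable name, used only in the message; $2 = its value
  if [ -z "$2" ]; then
    echo "$1 is not set"
    return 1
  fi
  if [ ! -d "$2" ]; then
    echo "$1=$2 is not a directory"
    return 1
  fi
  echo "$1=$2 OK"
}
```

For example, `check_home SPARK_HOME "$SPARK_HOME"` and `check_home SCALA_HOME "$SCALA_HOME"` each print an OK line when the symlinks from step 1 are in place.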

3. Configuration file: spark/conf/slaves

slave1.ipieuvre.com # worker node hostname

slave2.ipieuvre.com
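To see which hostnames the file actually yields once comments are removed, something like the following can help. It is a rough sketch: `list_workers` is a hypothetical helper, and it assumes that `#` starts a comment and that every remaining non-blank line is one hostname.

```shell
# Hypothetical helper: print the hostnames in a slaves file, one per line,
# dropping "#" comments, trailing whitespace, and blank lines.
list_workers() {
  # $1 = path to the slaves file
  sed -e 's/#.*$//' -e 's/[[:space:]]*$//' -e '/^$/d' "$1"
}
```

Running `list_workers /usr/local/spark/conf/slaves` on the file above would print the two worker hostnames without the trailing comment.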

4. Copy and distribute

Copy the /usr/local/spark and /usr/local/scala directories to every worker node.

Copy the environment variables to every worker node as well, then reload them.

    scp -r /usr/local/spark slave1.ipieuvre.com:/usr/local/
    scp -r /usr/local/spark slave2.ipieuvre.com:/usr/local/
    scp -r /usr/local/scala slave1.ipieuvre.com:/usr/local/
    scp -r /usr/local/scala slave2.ipieuvre.com:/usr/local/
    scp /root/.bashrc slave1.ipieuvre.com:/root/
    scp /root/.bashrc slave2.ipieuvre.com:/root/
    ssh slave1.ipieuvre.com "source /root/.bashrc"
    ssh slave2.ipieuvre.com "source /root/.bashrc"
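With more than a couple of workers, repeating the scp lines by hand gets error-prone, so the same copies can be wrapped in a small function. This is a sketch under assumptions: `distribute` and the `DRY_RUN` switch are hypothetical names introduced here, and passwordless SSH as root to each worker is assumed.

```shell
# Hypothetical helper: copy Spark, Scala, and .bashrc to one worker.
# With DRY_RUN set to a non-empty value the commands are printed, not run.
distribute() {
  # $1 = worker hostname
  for dir in /usr/local/spark /usr/local/scala; do
    ${DRY_RUN:+echo} scp -r "$dir" "$1:/usr/local/"
  done
  ${DRY_RUN:+echo} scp /root/.bashrc "$1:/root/"
}
```

A loop such as `for host in slave1.ipieuvre.com slave2.ipieuvre.com; do distribute "$host"; done` then covers every worker; exporting `DRY_RUN=1` first previews the commands without copying anything.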

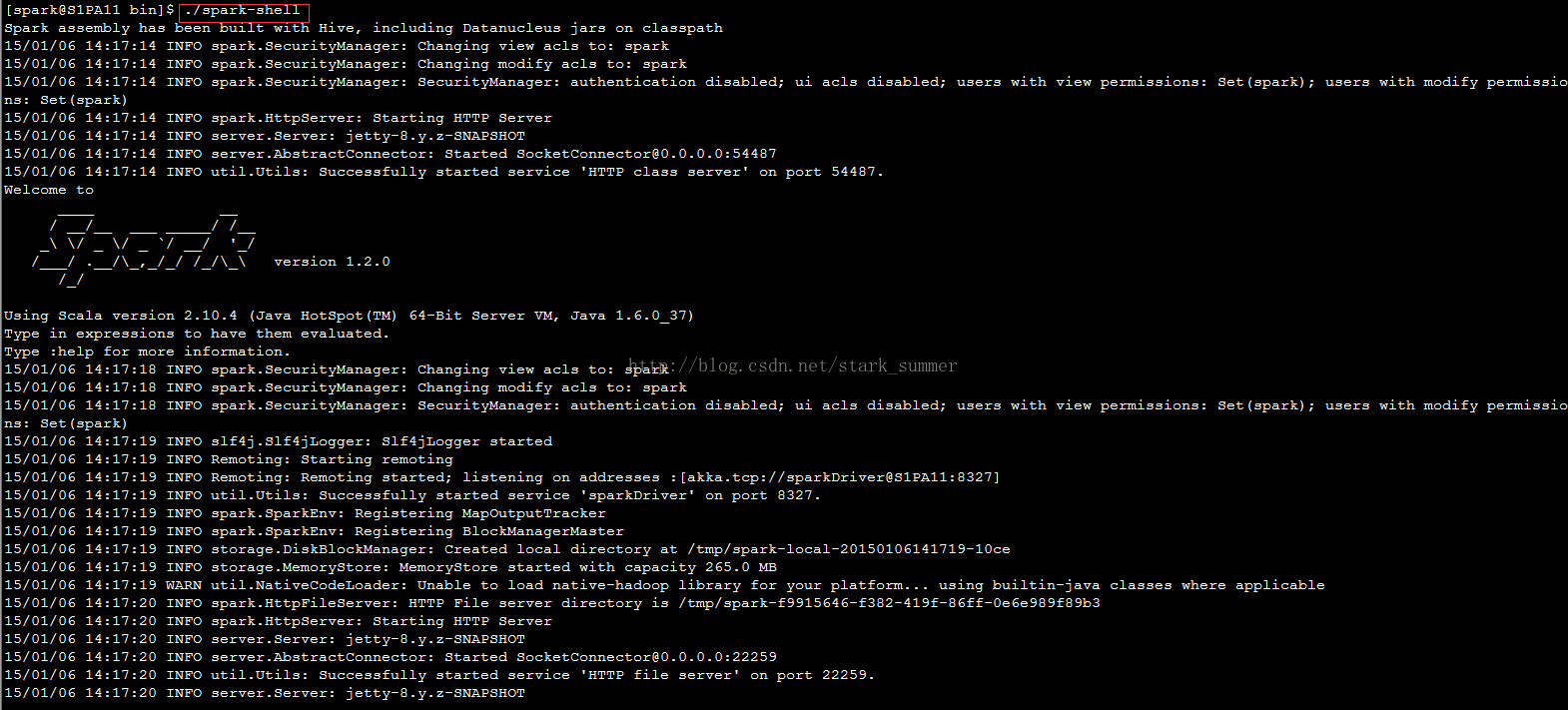

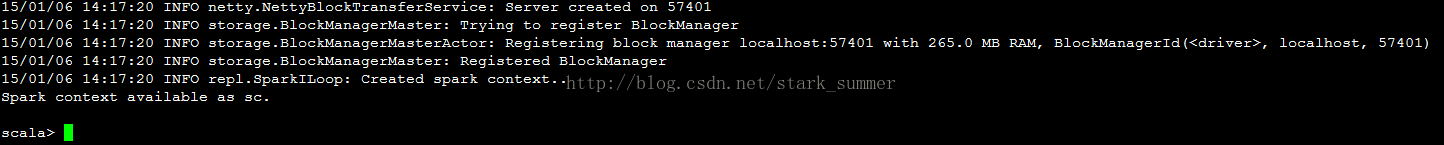

5. The installation can now be verified. Run /usr/local/spark/sbin/start-all.sh to start Spark, then run spark-shell to enter the Spark shell.
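Unless a master is configured elsewhere, spark-shell runs in local mode, so to exercise the cluster it needs the standalone master's URL via `--master`. The sketch below only builds that URL. `master_url` is a hypothetical helper, and the port 7077 is the standalone master's default, assumed unchanged here.

```shell
# Hypothetical helper: build the URL of a standalone master.
master_url() {
  # $1 = master hostname; $2 = port (optional, 7077 is the standalone default)
  echo "spark://$1:${2:-7077}"
}
```

It could be used as `spark-shell --master "$(master_url <master-address>)"`, with the master's real hostname substituted for the placeholder.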

Spark application web UI (available while spark-shell is running)

http://<master-address>:4040/

Spark cluster web management page

http://<master-address>:8080/