Prerequisite: a working Kafka environment.

Guide to setting up ZooKeeper + Kafka: https://www.cnblogs.com/weibanggang/p/12377055.html

1. Plain Java (no annotations)

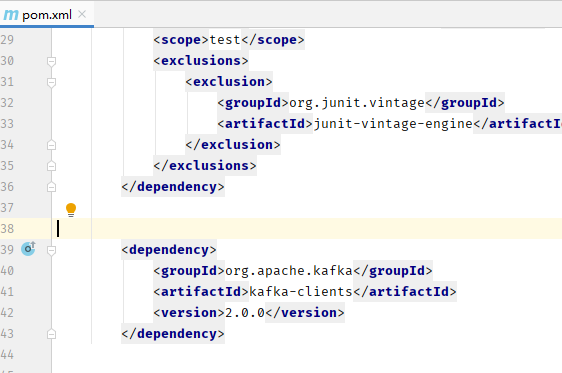

Add the Kafka client dependency:

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.0.0</version>
    </dependency>

Consumer code

    package com.wbg.springboot_kafka;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.common.serialization.IntegerDeserializer;
    import org.apache.kafka.common.serialization.StringDeserializer;

    import java.time.Duration;
    import java.util.Collections;
    import java.util.Properties;

    public class Consumer extends Thread {
        KafkaConsumer<Integer, String> consumer;
        String topic;

        public Consumer(String topic) {
            Properties properties = new Properties();
            properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.198.129:9092,192.168.198.130:9092,192.168.198.131:9092");
            properties.put(ConsumerConfig.CLIENT_ID_CONFIG, "consumer");
            properties.put(ConsumerConfig.GROUP_ID_CONFIG, "consumer");
            properties.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "30000");
            // Auto-commit interval: offsets are acknowledged in batches
            properties.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "1000");
            properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, IntegerDeserializer.class.getName());
            properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
            // Applies when a new consumer group starts consuming a topic: with
            // "earliest" it can also read data published before it started,
            // for example yesterday's messages.
            properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
            consumer = new KafkaConsumer<>(properties);
            this.topic = topic;
        }

        @Override
        public void run() {
            consumer.subscribe(Collections.singleton(this.topic));
            while (true) {
                // Wait up to one second for new records
                ConsumerRecords<Integer, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));
                consumerRecords.forEach(record ->
                        System.out.println(record.key() + "->" + record.value() + "->" + record.offset()));
            }
        }

        public static void main(String[] args) {
            new Consumer("test_partition").start();
        }
    }
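The `ConsumerConfig` constants used above are plain string keys, so the same settings can be kept as properties-format text and loaded with the JDK alone. The sketch below shows this. The class name `ConsumerSettings` and its `load` helper are hypothetical and not part of the original project; the resulting `Properties` object could be passed to `new KafkaConsumer<>(...)` in place of the hand-built one.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper (not in the original post): loads consumer settings
// from properties-format text using only the JDK.
final class ConsumerSettings {

    // The same settings as the Consumer constructor, written with the literal
    // key names that the ConsumerConfig constants stand for.
    static final String DEFAULTS = String.join("\n",
            "bootstrap.servers=192.168.198.129:9092,192.168.198.130:9092,192.168.198.131:9092",
            "client.id=consumer",
            "group.id=consumer",
            "session.timeout.ms=30000",
            "auto.commit.interval.ms=1000",
            "key.deserializer=org.apache.kafka.common.serialization.IntegerDeserializer",
            "value.deserializer=org.apache.kafka.common.serialization.StringDeserializer",
            "auto.offset.reset=earliest");

    // Parses properties-format text into a Properties object.
    static Properties load(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }

    public static void main(String[] args) {
        Properties properties = load(DEFAULTS);
        // Print the settings in a stable order for inspection
        properties.stringPropertyNames().stream().sorted()
                .forEach(name -> System.out.println(name + " = " + properties.getProperty(name)));
    }
}
```

In a real project the text would more likely come from a file on the classpath than from a string constant; the constant only keeps the sketch self-contained.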

Producer code

    package com.wbg.springboot_kafka;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerConfig;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.apache.kafka.common.serialization.IntegerSerializer;
    import org.apache.kafka.common.serialization.StringSerializer;

    import java.util.Properties;
    import java.util.concurrent.TimeUnit;

    public class Producer extends Thread {
        KafkaProducer<Integer, String> producer;
        String topic;

        public Producer(String topic) {
            Properties properties = new Properties();
            properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.198.129:9092,192.168.198.130:9092,192.168.198.131:9092");
            properties.put(ProducerConfig.CLIENT_ID_CONFIG, "producer");
            properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, IntegerSerializer.class.getName());
            properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
            producer = new KafkaProducer<>(properties);
            this.topic = topic;
        }

        @Override
        public void run() {
            int num = 0;
            while (num < 20) {
                try {
                    String msg = "kafka msg " + num;
                    // Asynchronous send with key 3; the callback runs once the broker responds
                    producer.send(new ProducerRecord<>(topic, 3, msg), (recordMetadata, e) -> {
                        if (e != null) {
                            // recordMetadata is not usable when the send failed
                            e.printStackTrace();
                            return;
                        }
                        System.out.println(recordMetadata.offset() + "->" + recordMetadata.partition());
                    });
                    num++; // without this increment the loop never ends
                    TimeUnit.SECONDS.sleep(2);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
            // Flush pending records and release resources
            producer.close();
        }

        public static void main(String[] args) {
            new Producer("test_partition").start();
        }
    }
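Because the producer sends every record with the same key (3), the callback will most likely print the same partition each time. As far as I know, when no partition is given the default partitioner in kafka-clients 2.0.0 hashes the serialized key with murmur2 and takes the positive value modulo the partition count. The sketch below re-implements that idea with the JDK only. The class `KeyPartitionSketch`, its method names, and the partition count of 3 are assumptions for illustration, and the hash function is written from memory of Kafka's utility code, so treat the result as illustrative rather than authoritative.

```java
import java.nio.ByteBuffer;

// Illustrative sketch only: approximates how a keyed record is mapped to a
// partition. Not taken from the original post.
final class KeyPartitionSketch {

    // IntegerSerializer writes the key as 4 big-endian bytes.
    static byte[] serializeKey(int key) {
        return ByteBuffer.allocate(4).putInt(key).array();
    }

    // 32-bit murmur2 hash, written to mirror Kafka's helper (assumption).
    static int murmur2(byte[] data) {
        int length = data.length;
        final int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff)
                    + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16)
                    + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the remaining 1 to 3 bytes; the cases fall through on purpose
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
            default:
                break;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Clear the sign bit, then take the remainder by the partition count.
    static int partitionFor(int key, int numPartitions) {
        return (murmur2(serializeKey(key)) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int numPartitions = 3; // assumed partition count of "test_partition"
        for (int key = 0; key < 5; key++) {
            System.out.println("key " + key + " -> partition " + partitionFor(key, numPartitions));
        }
    }
}
```

To spread the messages over several partitions, the producer would need to vary the key, for example by using `num` instead of the constant 3.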

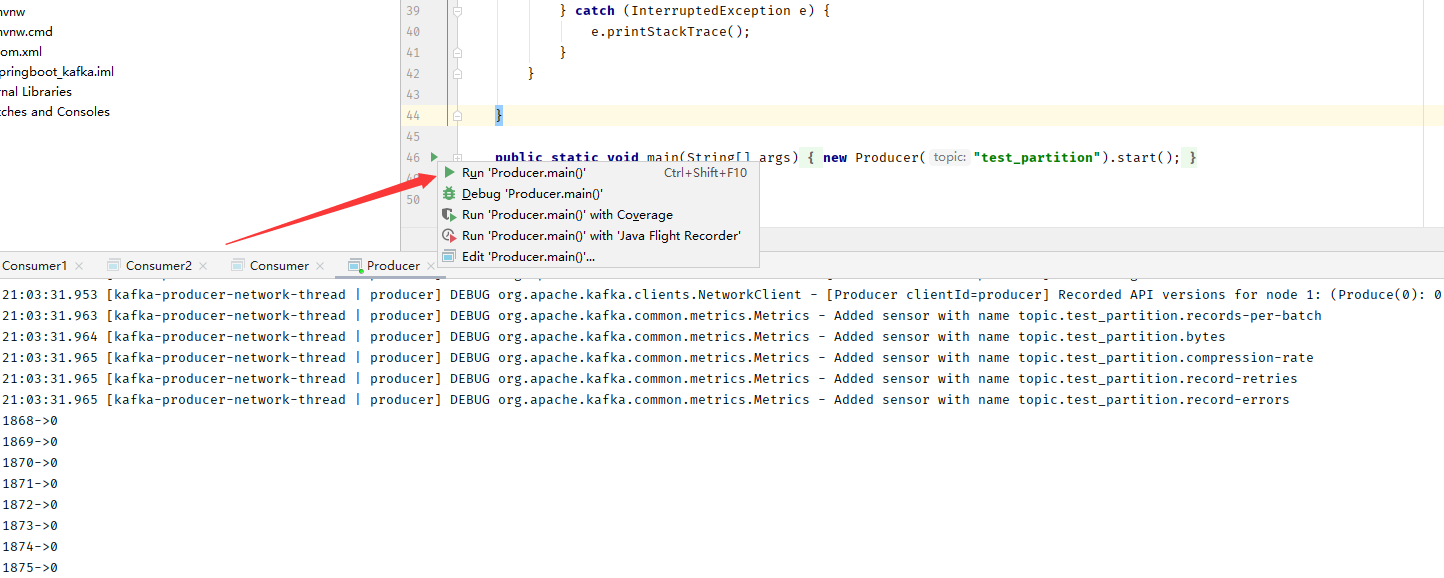

Start the producer

Start the consumer

2. Spring Boot with annotations

pom dependency:

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
        <version>2.2.0.RELEASE</version>
    </dependency>

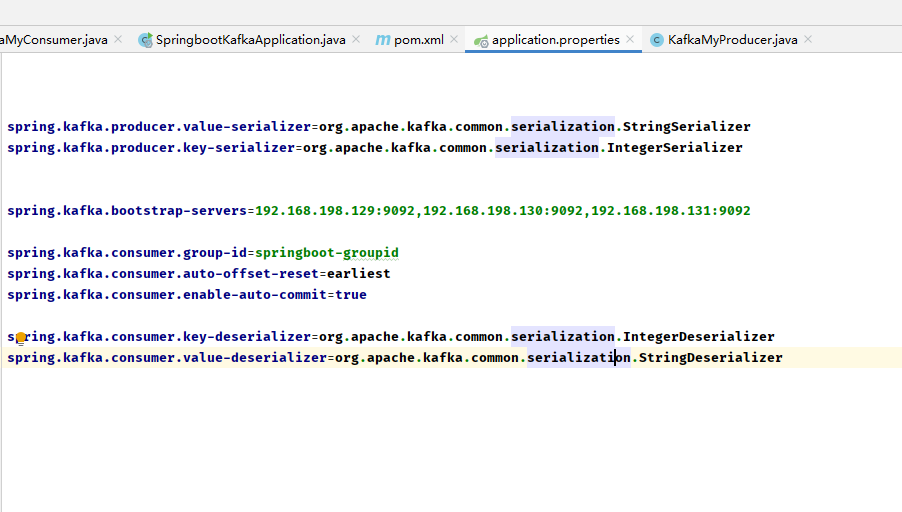

application.properties file

    spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
    spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.IntegerSerializer
    spring.kafka.bootstrap-servers=192.168.198.129:9092,192.168.198.130:9092,192.168.198.131:9092
    spring.kafka.consumer.group-id=springboot-groupid
    spring.kafka.consumer.auto-offset-reset=earliest
    spring.kafka.consumer.enable-auto-commit=true
    spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.IntegerDeserializer
    spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

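Consumer code

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Component;

    import java.util.Optional;

    @Component
    public class KafkaMyConsumer {

        // Called for each record received from the "test" topic
        @KafkaListener(topics = {"test"})
        public void listener(ConsumerRecord<Integer, String> record) {
            // The value may be null, so wrap it before use
            Optional<String> msg = Optional.ofNullable(record.value());
            if (msg.isPresent()) {
                System.out.println(msg.get());
            }
        }
    }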
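The listener wraps the record value in an `Optional` because a Kafka record can carry a null value. The JDK-only sketch below shows the same null-safe handling in a more compact form. The class `NullSafeValue` and the method `describe` are hypothetical names used for illustration and do not appear in the original project.

```java
import java.util.Optional;

// Illustrative sketch: null-safe handling of a record value, written without
// any Kafka dependency so it can be run on its own.
final class NullSafeValue {

    // Returns a printable line for a value that may be null.
    static String describe(String value) {
        return Optional.ofNullable(value)
                .map(v -> "received: " + v)
                .orElse("skipped record with null value");
    }

    public static void main(String[] args) {
        System.out.println(describe("msgData")); // prints "received: msgData"
        System.out.println(describe(null));      // prints "skipped record with null value"
    }
}
```

Inside the listener, the same effect could be achieved with `Optional.ofNullable(record.value()).ifPresent(System.out::println)`.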

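Producer code

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.stereotype.Component;

    @Component
    public class KafkaMyProducer {

        // Configured by Spring Boot from the spring.kafka.* properties
        @Autowired
        private KafkaTemplate<Integer, String> kafkaTemplate;

        public void send() {
            // Topic "test", key 1, value "msgData"
            kafkaTemplate.send("test", 1, "msgData");
        }
    }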

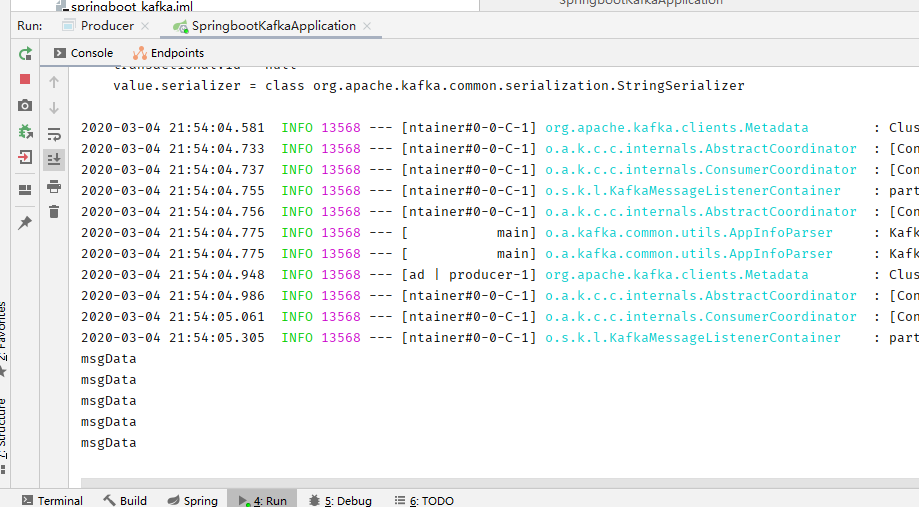

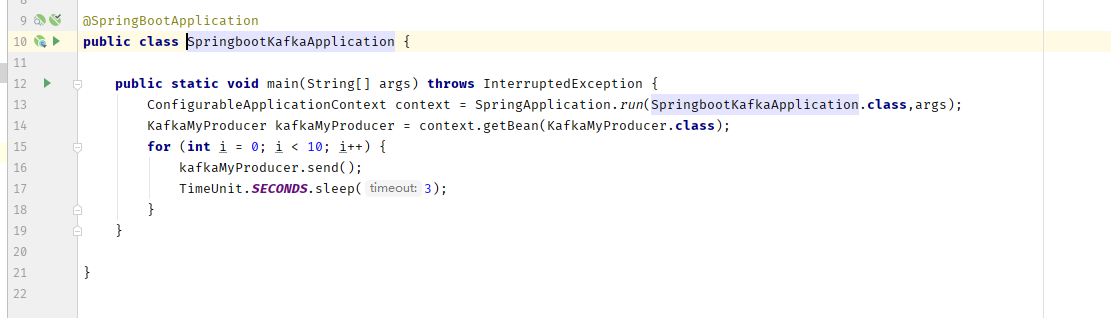

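Startup class

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.ConfigurableApplicationContext;

    import java.util.concurrent.TimeUnit;

    @SpringBootApplication
    public class SpringbootKafkaApplication {

        public static void main(String[] args) throws InterruptedException {
            ConfigurableApplicationContext context = SpringApplication.run(SpringbootKafkaApplication.class, args);
            KafkaMyProducer kafkaMyProducer = context.getBean(KafkaMyProducer.class);
            // Send ten messages, one every three seconds
            for (int i = 0; i < 10; i++) {
                kafkaMyProducer.send();
                TimeUnit.SECONDS.sleep(3);
            }
        }
    }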