------------------------------------------------------------------------------------------------------------------------------------------------------

The reference example for isolated-word recognition is Kaldi's yes/no recipe.

------------------------------------------------------------------------------------------------------------------------------------------------------

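Here we run a 10-word recognition experiment to get familiar with the whole pipeline.

Next, try some newer models to improve the recognition rate;

then test the model's robustness to speaking rate, intonation, and stationary noise, and try a front end built on existing denoising algorithms;

then expand the isolated-word vocabulary, prune the model, optimise efficiency, get familiar with the FST decoder, and bring isolated-word recognition on embedded hardware to a practical level.

Finally, move on to continuous speech recognition and get familiar with language models.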

------------------------------------------------------------------------------------------------------------------------------------------------------

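I. Description of the recorded corpus

Under egs/, create your own project directory coffee, then create s5 inside it.

Copy in the coffee speech corpus, together with the following folders and files from the yes/no recipe.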

.

├── conf

├── data

├── exp

├── input

├── local

├── mfcc

├── Nestle

├── path.sh

├── run.sh

├── steps -> ../../wsj/s5/steps

└── utils -> ../../wsj/s5/utils

The Nestle speech corpus has the following directory structure:

.

├── TestData

│ ├── 001_001.wav

│ ├── 001_002.wav

│ ├── 002_001.wav

│ ├── 002_002.wav

│ ├── 003_001.wav

│ ├── 003_002.wav

│ ├── 004_001.wav

│ ├── 004_002.wav

│ ├── 005_001.wav

│ ├── 005_002.wav

│ ├── 006_001.wav

│ ├── 006_002.wav

│ ├── 007_001.wav

│ ├── 007_02.wav

│ ├── 008_001.wav

│ ├── 008_002.wav

│ ├── 009_001.wav

│ ├── 009_002.wav

│ ├── 010_001.wav

│ └── 010_002.wav

└── TrainData

├── FastWord

│ ├── Model2_1

│ ├── Model2_10

│ ├── Model2_2

│ ├── Model2_3

│ ├── Model2_4

│ ├── Model2_5

│ ├── Model2_6

│ ├── Model2_7

│ ├── Model2_8

│ └── Model2_9

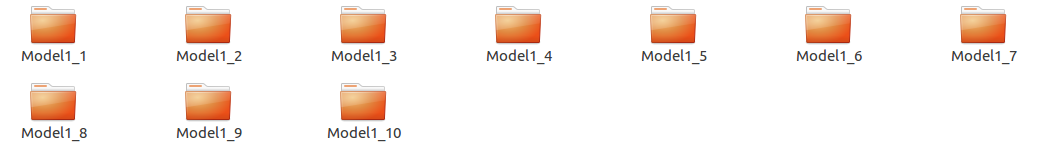

├── MiddWord

│ ├── Model1_1

│ ├── Model1_10

│ ├── Model1_2

│ ├── Model1_3

│ ├── Model1_4

│ ├── Model1_5

│ ├── Model1_6

│ ├── Model1_7

│ ├── Model1_8

│ └── Model1_9

└── SlowWord

├── Model3_1

├── Model3_10

├── Model3_2

├── Model3_3

├── Model3_4

├── Model3_5

├── Model3_6

├── Model3_7

├── Model3_8

└── Model3_9

First, the recorded corpus consists of coffee variety names, spoken at fast, medium, and slow speeds.

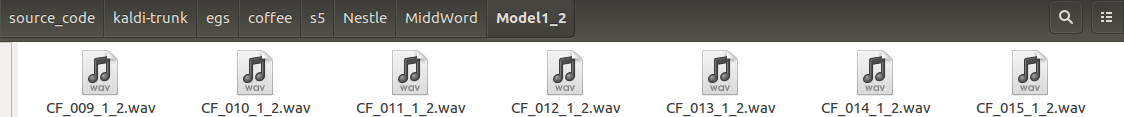

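1) Folder naming rule: Modelx_x

Model1 means medium speed

Model2 means fast speed

Model3 means slow speed

There are 10 words; the suffix after the underscore is the ID of the spoken word.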
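To make the folder naming rule concrete, here is a minimal Python 3 sketch that splits a folder name into its speed and word ID. The function name parse_model_dir and the speed labels are my own, chosen for illustration; they are not part of the recipe.

```python
import re

# Speed codes as described in the folder naming rule above.
SPEED_BY_MODEL = {1: "medium", 2: "fast", 3: "slow"}


def parse_model_dir(name):
    """Split a folder name such as 'Model2_10' into (speed, word_id)."""
    m = re.fullmatch(r"Model(\d)_(\d+)", name)
    if m is None:
        raise ValueError("unexpected folder name: %r" % name)
    return SPEED_BY_MODEL[int(m.group(1))], int(m.group(2))


if __name__ == "__main__":
    for folder in ("Model1_3", "Model2_10", "Model3_1"):
        print(folder, parse_model_dir(folder))
```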

2) File naming

CF_speaker-id_speed_utter-id.wav

CF: coffee, the abbreviation of the corpus name
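The file naming pattern can be unpacked in the same way. The Python 3 sketch below assumes that all three fields are purely numeric (which is what the grep pattern in prepare_data.sh expects); the class and function names, and the example file name, are hypothetical.

```python
import re
from typing import NamedTuple


class CoffeeUtterance(NamedTuple):
    speaker_id: str
    speed: str
    utter_id: str


def parse_wav_name(name):
    """Parse 'CF_<speaker-id>_<speed>_<utter-id>.wav' into its three fields."""
    m = re.fullmatch(r"CF_(\d+)_(\d+)_(\d+)\.wav", name)
    if m is None:
        raise ValueError("unexpected file name: %r" % name)
    return CoffeeUtterance(*m.groups())


if __name__ == "__main__":
    # Hypothetical file name, used only to show the field order.
    print(parse_wav_name("CF_001_002_003.wav"))
```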

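II. Script modifications

The existing corpus differs from yes/no in vocabulary, directory structure, audio file naming, lexicon, and language model, and I am not very familiar with Perl, so many of the scripts had to be rewritten. It was also a chance to practise Python regular expressions.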

The contents of run.sh are as follows:

#!/bin/bash

train_cmd="utils/run.pl"
decode_cmd="utils/run.pl"

#have data, not need to download
#if [ ! -d waves_yesno ]; then
#  wget http://www.openslr.org/resources/1/waves_yesno.tar.gz || exit 1;
#  # was:
#  # wget http://sourceforge.net/projects/kaldi/files/waves_yesno.tar.gz || exit 1;
#  tar -xvzf waves_yesno.tar.gz || exit 1;
#fi

train_yesno=waves_train
test_base_name=waves_test

#clear data exp mfcc filefolder
rm -rf data exp mfcc

# Data preparation
# we need to rewrite scripts below
local/prepare_data.sh Nestle   #structure of dir and file name is different
local/prepare_dict.sh          #dict contains 10 words, not 2.
utils/prepare_lang.sh --position-dependent-phones false data/local/dict "<SIL>" data/local/lang data/lang
local/prepare_lm.sh
echo "Data Prepraration finish!"

# Feature extraction
for x in waves_test waves_train; do
  steps/make_mfcc.sh --nj 1 data/$x exp/make_mfcc/$x mfcc
  steps/compute_cmvn_stats.sh data/$x exp/make_mfcc/$x mfcc
  utils/fix_data_dir.sh data/$x
done
echo "Feature extraction finish!"

# Mono training
steps/train_mono.sh --nj 1 --cmd "$train_cmd" --totgauss 400 data/waves_train data/lang exp/mono0
echo "Mono training finish!"

# Graph compilation
utils/mkgraph.sh data/lang_test_tg exp/mono0 exp/mono0/graph_tgpr
echo "Graph compilation finish!"

# Decoding
steps/decode.sh --nj 1 --cmd "$decode_cmd" exp/mono0/graph_tgpr data/waves_test exp/mono0/decode_waves_test

for x in exp/*/decode*; do [ -d $x ] && grep WER $x/wer_* | utils/best_wer.sh; done

prepare_data.sh

was modified as follows:

#!/bin/bash

mkdir -p data/local
local=`pwd`/local
scripts=`pwd`/scripts
export PATH=$PATH:`pwd`/../../../tools/irstlm/bin

echo "Preparing train and test data"

train_base_name=waves_train
test_base_name=waves_test
root_dir=$1
train_dir=$root_dir/TrainData/MiddWord
test_dir=$root_dir/TestData

echo "fix up incorrect file names"
#fix file name
for subdir in $(ls $train_dir)
do
  moddir=$train_dir/$subdir
  if [ -d $moddir ];then
    for wav_file in $(ls $moddir)
    do
      #filename=$moddir/$wav_file
      python ./local/FixUpName.py $moddir $wav_file
    done
  fi
done
echo "fix up files finish!"

# Walk through the folder of each word
# and build the file lists.
# Mind the spaces: each line has the form
# utter_id file_path
for subdir in $(ls $train_dir)
do
  moddir=$train_dir/$subdir
  if [ -d $moddir ];then
    echo "$moddir make file list and labels"
    #ls -l $moddir > ${moddir}.list
    #ls -l $moddir | grep -P 'CF.+.wav' -o > ${moddir}.list
    ls -l $moddir | grep -P 'CF(_[0-9]{1,3}){3}\.wav' -o >${moddir}.list
    if [ -f ${moddir}_waves_train.scp ];then
      rm ${moddir}_waves_train.scp
    fi
    python ./local/create_coffee_labels.py ${moddir}.list ${moddir} ${moddir}_waves_train.scp
  fi
done

cat $train_dir/*.list > data/local/waves_train
#sort -u data/local/waves_train -o data/local/waves_train
cat $train_dir/*.scp > data/local/waves_train_wav.scp
#sort -u data/local/waves_train_wav.scp -o data/local/waves_train_wav.scp

ls -l $test_dir | grep -P '[0-9]{1,3}_[0-9]{1,3}\.wav' -o >data/local/waves_test
python ./local/create_coffee_test_labels.py data/local/waves_test $test_dir data/local/waves_test_wav.scp
#sort -u data/local/waves_test -o data/local/waves_test
#sort -u data/local/waves_test_wav.scp -o data/local/waves_test_wav.scp
echo "$moddir make file list and labels finish!"

echo "$moddir make file utter"
cd data/local
# The test files are named differently from the training files
python ../../local/create_coffee_test_txt.py waves_test ../../input/trans.txt ${test_base_name}.txt
python ../../local/create_coffee_txt.py waves_train ../../input/trans.txt ${train_base_name}.txt
echo "make make file utter finish"
#sort -u ${test_base_name}.txt -o ${test_base_name}.txt
#sort -u ${train_base_name}.txt -o ${train_base_name}.txt

cp ../../input/task.arpabo lm_tg.arpa
cd ../..

# Speakers are not distinguished for now
# This stage was copied from WSJ example
for x in waves_train waves_test; do
  mkdir -p data/$x
  cp data/local/${x}_wav.scp data/$x/wav.scp
  echo "data/$x/wav.scp sort!"
  sort -u data/$x/wav.scp -o data/$x/wav.scp
  cp data/local/$x.txt data/$x/text
  echo "data/$x/text sort!"
  sort -u data/$x/text -o data/$x/text
  cat data/$x/text | awk '{printf("%s global\n", $1);}' >data/$x/utt2spk
  echo "data/$x/utt2spk sort!"
  sort -u data/$x/utt2spk -o data/$x/utt2spk
  #create_coffee_speaker.py
  utils/utt2spk_to_spk2utt.pl <data/$x/utt2spk >data/$x/spk2utt
done

FixUpName.py

#coding=utf-8
import re
import sys
import os

def addzeros(matched):
    # Left-pad a run of digits with zeros to a width of 3
    digits = matched.group('digits')
    n = len(digits)
    zeros = '0'*(3-n)
    return (zeros+digits)

moddir=sys.argv[1]
wav_file=sys.argv[2]
filename=os.path.join(moddir,wav_file)
out_file = re.sub(r'(?P<digits>\d+)',addzeros,wav_file)
#print wav_file
#print out_file
outname=os.path.join(moddir,out_file)
if os.path.exists(filename):
    os.rename(filename, outname)
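The renaming idea in FixUpName.py can be tried without touching any files. The Python 3 sketch below is a variant, not the script itself: it uses str.zfill instead of a hand-built zero string, and the function name pad_digits is my own.

```python
import re


def pad_digits(name, width=3):
    """Left-pad every run of digits in a file name with zeros to `width`."""
    return re.sub(r"\d+", lambda m: m.group(0).zfill(width), name)


if __name__ == "__main__":
    for name in ("CF_1_2_10.wav", "007_02.wav", "CF_001_001_001.wav"):
        print(name, "->", pad_digits(name))
```

With every numeric field padded to the same width, plain string order and numeric order agree, which is what later makes the sorted Kaldi files consistent.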

create_coffee_labels.py

#coding=utf-8
import re
import codecs
import sys
import os

list_file = sys.argv[1]#os.path.join(os.getcwd(),sys.argv[1])
wav_dir = sys.argv[2]
label_file = sys.argv[3]#os.path.join(os.getcwd(),sys.argv[3])
#print list_file, label_file

file_object = codecs.open(list_file, 'r', 'utf-8')
try:
    all_the_text = file_object.readlines()
finally:
    file_object.close()

# The utterance id is the file name without the "CF_" prefix and ".wav" suffix
pattern = r"CF_(.+)\.wav"
utf_file = codecs.open(label_file, 'w', 'utf-8')
try:
    for each_text in all_the_text:
        print(each_text)
        ret = re.findall(pattern, each_text)
        #print ret[0]
        if len(ret) > 0:
            # each_text still ends with a newline, so one wav.scp line is written per file
            content = ret[0] + " " + wav_dir +"/"+each_text
            #print content
            utf_file.write(content)
finally:
    utf_file.close()

create_coffee_test_labels.py

#coding=utf-8
import re
import codecs
import sys
import os

list_file = sys.argv[1]#os.path.join(os.getcwd(),sys.argv[1])
wav_dir = sys.argv[2]
label_file = sys.argv[3]#os.path.join(os.getcwd(),sys.argv[3])
#print list_file, label_file

file_object = codecs.open(list_file, 'r', 'utf-8')
try:
    all_the_text = file_object.readlines()
finally:
    file_object.close()

# Test files have no "CF_" prefix: the utterance id is the whole file stem
pattern = r"(.+)\.wav"
utf_file = codecs.open(label_file, 'w', 'utf-8')
try:
    for each_text in all_the_text:
        print(each_text)
        ret = re.findall(pattern, each_text)
        #print ret[0]
        if len(ret) > 0:
            content = ret[0] + " " + wav_dir +"/"+each_text
            #print content
            utf_file.write(content)
finally:
    utf_file.close()
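Both label scripts write wav.scp lines of the form "utter_id file_path". As a rough Python 3 sketch of that mapping, the helper below builds one such line; the name scp_line and its prefix parameter are assumptions of mine, and it strips the prefix with string operations rather than the regular expressions used in the scripts.

```python
import os


def scp_line(wav_dir, file_name, prefix=""):
    """Build one 'utter_id file_path' line for wav.scp.

    `prefix` is removed from the file stem to form the utterance id
    ("CF_" for training files, empty for test files).
    """
    stem, ext = os.path.splitext(file_name)
    if ext != ".wav":
        raise ValueError("not a wav file: %r" % file_name)
    if prefix and stem.startswith(prefix):
        stem = stem[len(prefix):]
    return "%s %s/%s" % (stem, wav_dir, file_name)


if __name__ == "__main__":
    print(scp_line("Nestle/TrainData/MiddWord/Model1_1", "CF_001_001_001.wav", "CF_"))
    print(scp_line("Nestle/TestData", "001_001.wav"))
```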

create_coffee_txt.py

#coding=utf-8
import re
import codecs
import sys
import os

filename = sys.argv[1]
#print filename
wordfile = sys.argv[2]
#print wordfile
outname = sys.argv[3]
#print wordfile

word_object = codecs.open(wordfile, 'r', 'utf-8')
try:
    all_the_words = word_object.readlines()
    #print all_the_words[0]
finally:
    word_object.close()

#print filename
file_object = codecs.open(filename, 'r', 'utf-8')
try:
    all_the_text = file_object.readlines()
finally:
    file_object.close()

# For training files the word index is the last numeric field of the name
pattern = r"_(\d+)\.wav"
out_file = codecs.open(outname, 'w', 'utf-8')
try:
    for each_text in all_the_text:
        #print each_text
        ret = re.findall(pattern, each_text)
        #print ret[0]
        if len(ret) > 0:
            index = int(ret[0])
            #print all_the_words[index-1]
            # [3:-5] drops the "CF_" prefix and the trailing ".wav" plus newline
            content = each_text[3:-5]+ " " + all_the_words[index-1]
            #print content
            out_file.write(content)
finally:
    out_file.close()

create_coffee_test_txt.py

#coding=utf-8
import re
import codecs
import sys
import os

filename = sys.argv[1]
print(filename)
wordfile = sys.argv[2]
print(wordfile)
outname = sys.argv[3]
#print wordfile

word_object = codecs.open(wordfile, 'r', 'utf-8')
try:
    all_the_words = word_object.readlines()
    print(all_the_words[0])
finally:
    word_object.close()

#print filename
file_object = codecs.open(filename, 'r', 'utf-8')
try:
    all_the_text = file_object.readlines()
finally:
    file_object.close()

# For test files the word index is the first numeric field of the name
pattern = r"(\d+)_.+\.wav"
out_file = codecs.open(outname, 'w', 'utf-8')
try:
    for each_text in all_the_text:
        print(each_text)
        ret = re.findall(pattern, each_text)
        if len(ret) > 0:
            print(ret[0])
            index = int(ret[0])
            print("test_data")
            print(all_the_words[index-1])
            # [:-5] drops the trailing ".wav" plus newline
            content = each_text[:-5]+ " " + all_the_words[index-1]
            #print content
            out_file.write(content)
finally:
    out_file.close()
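The two transcript scripts differ only in where they find the word index: the last field of a training file name, and the first field of a test file name. The Python 3 sketch below shows that lookup side by side. The function name and the three-word list are hypothetical stand-ins; the real word list comes from input/trans.txt.

```python
def transcript_line(file_name, words, is_test):
    """Return the '<uttid> <word>' line for one wav file.

    Training names look like CF_<speaker>_<speed>_<word-index>.wav,
    test names like <word-index>_<take>.wav. Indices are 1-based.
    """
    if not file_name.endswith(".wav"):
        raise ValueError("not a wav file: %r" % file_name)
    stem = file_name[:-len(".wav")]
    if not is_test:
        if not stem.startswith("CF_"):
            raise ValueError("training file without CF_ prefix: %r" % file_name)
        stem = stem[len("CF_"):]
    fields = stem.split("_")
    index = int(fields[0] if is_test else fields[-1])
    return "%s %s" % (stem, words[index - 1])


if __name__ == "__main__":
    words = ["LATTE", "MOCHA", "ESPRESSO"]  # hypothetical word list
    print(transcript_line("CF_001_001_002.wav", words, is_test=False))
    print(transcript_line("003_001.wav", words, is_test=True))
```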

III. Experiment workflow

1. Prepare the speech corpus

2. Prepare the script files

All the script files in local:

├── create_coffee_labels.py

├── create_coffee_speaker.py

├── create_coffee_test_labels.py

├── create_coffee_test_txt.py

├── create_coffee_txt.py

├── FixUpName.py

├── prepare_data.sh

├── prepare_dict.sh

├── prepare_lm.sh

└── score.sh

All the files in input:

.

├── lexicon_nosil.txt

├── lexicon.txt

├── phones.txt

├── task.arpabo

└── trans.txt

The conf configuration folder:

conf

├── mfcc.conf

└── topo_orig.proto

---------------------------------------------------------------------------------------------------------------------------------------------------------------------

1. Data preparation stage

When prepare_data.sh finishes, it produces the text, wav.scp, utt2spk, and spk2utt files. All of these files must be sorted.

text : <uttid> <word>

wav.scp : <uttid> <utter_file_path>

utt2spk : <uttid> <speakid>

spk2utt : <speakid> <uttid> [<uttid> ...]

word.txt : same format as text
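The relationship between utt2spk and spk2utt is a simple inversion. The recipe does this with utils/utt2spk_to_spk2utt.pl; the Python 3 sketch below only illustrates the same mapping, and the function name is my own.

```python
from collections import defaultdict


def utt2spk_to_spk2utt(utt2spk_lines):
    """Invert '<uttid> <speakid>' lines into '<speakid> <uttid> ...' lines."""
    spk2utt = defaultdict(list)
    for line in utt2spk_lines:
        if not line.strip():
            continue
        utt, spk = line.split()
        spk2utt[spk].append(utt)
    return ["%s %s" % (spk, " ".join(sorted(utts)))
            for spk, utts in sorted(spk2utt.items())]


if __name__ == "__main__":
    # Every utterance is assigned to the single speaker "global", as in prepare_data.sh.
    lines = ["001_001 global", "001_002 global", "002_001 global"]
    print("\n".join(utt2spk_to_spk2utt(lines)))
```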

2. Prepare the lexicon and generate the language model file

Under the input folder:

├── lexicon_nosil.txt

├── lexicon.txt

├── phones.txt

└── trans.txt

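lexicon.txt: the lexicon, containing the words used in the corpus and their pronunciations.

lexicon_nosil.txt: the lexicon without the silence entry.

phones.txt: all the pronunciation phones.

trans.txt: the list of all training words.

Generating the language model

task.arpabo is generated from trans.txt with ngram-count.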
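For an isolated-word task, the language model only needs to say that every word is equally likely. As an alternative to ngram-count (and not what ngram-count itself would output, since it applies its own smoothing), the Python 3 sketch below writes a uniform unigram model in ARPA format by hand. The function name and the three example words are assumptions for illustration.

```python
import math


def uniform_unigram_arpa(words):
    """Return the text of a unigram ARPA file with equal word probabilities."""
    vocab = sorted(set(words))
    # Probability mass is shared equally between the words and </s>.
    logp = math.log10(1.0 / (len(vocab) + 1))
    lines = ["\\data\\", "ngram 1=%d" % (len(vocab) + 2), "", "\\1-grams:"]
    lines.append("-99\t<s>")  # <s> is never predicted; -99 is the usual placeholder
    lines.append("%.6f\t</s>" % logp)
    lines.extend("%.6f\t%s" % (logp, w) for w in vocab)
    lines.extend(["", "\\end\\", ""])
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical three-word vocabulary.
    print(uniform_unigram_arpa(["LATTE", "MOCHA", "ESPRESSO"]))
```

A file written this way contains only a 1-grams section, so every word carries the same weight during decoding.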

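IV. Problems encountered

1. File names written by the shell scripts must not contain spaces.

2. A language model (the arpa file, generated with ngram-count) still has to be produced. For isolated words a language model carries no real meaning, but it is required, probably because of how the Kaldi architecture as a whole is designed.

3. File names must follow a consistent convention, and the data files must be sorted. Otherwise you may get the following error.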

echo "$0: file $1 is not in sorted order or has duplicates" && exit 1;

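Sometimes adding sort -u is enough to guarantee the order, but if the file names are irregular the error still appears, so I wrote a script, FixUpName.py, to rename the files in the speech corpus.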
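Before running the Kaldi scripts, the sort requirement can be checked by hand. The Python 3 sketch below is my own helper, not part of Kaldi. It compares keys as plain strings, which for ASCII names matches the byte-wise order Kaldi expects under LC_ALL=C.

```python
from collections import Counter


def check_sorted_unique(lines):
    """Return (is_sorted, duplicate_keys) for '<key> <value>' lines."""
    keys = [line.split()[0] for line in lines if line.strip()]
    duplicates = sorted(k for k, n in Counter(keys).items() if n > 1)
    return keys == sorted(keys), duplicates


if __name__ == "__main__":
    # Hypothetical wav.scp entries listed in numeric order, before and after zero-padding.
    unpadded = ["CF_1_1_2 a.wav", "CF_1_1_10 b.wav"]
    padded = ["CF_001_001_002 a.wav", "CF_001_001_010 b.wav"]
    print(check_sorted_unique(unpadded))
    print(check_sorted_unique(padded))
```

The unpadded list fails the check because "10" sorts before "2" as a string, while the zero-padded list passes.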

V. Output of the complete run

make make file utter finish
data/waves_train/wav.scp sort!
data/waves_train/text sort!
data/waves_train/utt2spk sort!
data/waves_test/wav.scp sort!
data/waves_test/text sort!
data/waves_test/utt2spk sort!
Dictionary preparation succeeded
utils/prepare_lang.sh --position-dependent-phones false data/local/dict <SIL> data/local/lang data/lang
Checking data/local/dict/silence_phones.txt ...
--> reading data/local/dict/silence_phones.txt
--> data/local/dict/silence_phones.txt is OK
Checking data/local/dict/optional_silence.txt ...
--> reading data/local/dict/optional_silence.txt
--> data/local/dict/optional_silence.txt is OK
Checking data/local/dict/nonsilence_phones.txt ...
--> reading data/local/dict/nonsilence_phones.txt
--> data/local/dict/nonsilence_phones.txt is OK
Checking disjoint: silence_phones.txt, nonsilence_phones.txt
--> disjoint property is OK.
Checking data/local/dict/lexicon.txt
--> reading data/local/dict/lexicon.txt
--> data/local/dict/lexicon.txt is OK
Checking data/local/dict/extra_questions.txt ...
--> data/local/dict/extra_questions.txt is empty (this is OK)
--> SUCCESS [validating dictionary directory data/local/dict]
**Creating data/local/dict/lexiconp.txt from data/local/dict/lexicon.txt
fstaddselfloops data/lang/phones/wdisambig_phones.int data/lang/phones/wdisambig_words.int
prepare_lang.sh: validating output directory
utils/validate_lang.pl data/lang
Checking data/lang/phones.txt ...
--> data/lang/phones.txt is OK
Checking words.txt: #0 ...
--> data/lang/words.txt is OK
Checking disjoint: silence.txt, nonsilence.txt, disambig.txt ...
--> silence.txt and nonsilence.txt are disjoint
--> silence.txt and disambig.txt are disjoint
--> disambig.txt and nonsilence.txt are disjoint
--> disjoint property is OK
Checking sumation: silence.txt, nonsilence.txt, disambig.txt ...
--> summation property is OK
Checking data/lang/phones/context_indep.{txt, int, csl} ...
--> 1 entry/entries in data/lang/phones/context_indep.txt
--> data/lang/phones/context_indep.int corresponds to data/lang/phones/context_indep.txt
--> data/lang/phones/context_indep.csl corresponds to data/lang/phones/context_indep.txt
--> data/lang/phones/context_indep.{txt, int, csl} are OK
Checking data/lang/phones/nonsilence.{txt, int, csl} ...
--> 10 entry/entries in data/lang/phones/nonsilence.txt
--> data/lang/phones/nonsilence.int corresponds to data/lang/phones/nonsilence.txt
--> data/lang/phones/nonsilence.csl corresponds to data/lang/phones/nonsilence.txt
--> data/lang/phones/nonsilence.{txt, int, csl} are OK
Checking data/lang/phones/silence.{txt, int, csl} ...
--> 1 entry/entries in data/lang/phones/silence.txt
--> data/lang/phones/silence.int corresponds to data/lang/phones/silence.txt
--> data/lang/phones/silence.csl corresponds to data/lang/phones/silence.txt
--> data/lang/phones/silence.{txt, int, csl} are OK
Checking data/lang/phones/optional_silence.{txt, int, csl} ...
--> 1 entry/entries in data/lang/phones/optional_silence.txt
--> data/lang/phones/optional_silence.int corresponds to data/lang/phones/optional_silence.txt
--> data/lang/phones/optional_silence.csl corresponds to data/lang/phones/optional_silence.txt
--> data/lang/phones/optional_silence.{txt, int, csl} are OK
Checking data/lang/phones/disambig.{txt, int, csl} ...
--> 2 entry/entries in data/lang/phones/disambig.txt
--> data/lang/phones/disambig.int corresponds to data/lang/phones/disambig.txt
--> data/lang/phones/disambig.csl corresponds to data/lang/phones/disambig.txt
--> data/lang/phones/disambig.{txt, int, csl} are OK
Checking data/lang/phones/roots.{txt, int} ...
--> 11 entry/entries in data/lang/phones/roots.txt
--> data/lang/phones/roots.int corresponds to data/lang/phones/roots.txt
--> data/lang/phones/roots.{txt, int} are OK
Checking data/lang/phones/sets.{txt, int} ...
--> 11 entry/entries in data/lang/phones/sets.txt
--> data/lang/phones/sets.int corresponds to data/lang/phones/sets.txt
--> data/lang/phones/sets.{txt, int} are OK
Checking data/lang/phones/extra_questions.{txt, int} ...
Checking optional_silence.txt ...
--> reading data/lang/phones/optional_silence.txt
--> data/lang/phones/optional_silence.txt is OK
Checking disambiguation symbols: #0 and #1
--> data/lang/phones/disambig.txt has "#0" and "#1"
--> data/lang/phones/disambig.txt is OK
Checking topo ...
Checking word-level disambiguation symbols...
--> data/lang/phones/wdisambig.txt exists (newer prepare_lang.sh)
Checking data/lang/oov.{txt, int} ...
--> 1 entry/entries in data/lang/oov.txt
--> data/lang/oov.int corresponds to data/lang/oov.txt
--> data/lang/oov.{txt, int} are OK
--> data/lang/L.fst is olabel sorted
--> data/lang/L_disambig.fst is olabel sorted
--> SUCCESS [validating lang directory data/lang]
Preparing language models for test
arpa2fst --disambig-symbol=#0 --read-symbol-table=data/lang_test_tg/words.txt input/task.arpabo data/lang_test_tg/G.fst
LOG (arpa2fst[5.2.124~1396-70748]:Read():arpa-file-parser.cc:98) Reading \data\ section.
LOG (arpa2fst[5.2.124~1396-70748]:Read():arpa-file-parser.cc:153) Reading \1-grams: section.
LOG (arpa2fst[5.2.124~1396-70748]:RemoveRedundantStates():arpa-lm-compiler.cc:359) Reduced num-states from 1 to 1
fstisstochastic data/lang_test_tg/G.fst
7.27531e-08 7.27531e-08
Succeeded in formatting data.
Data Prepraration finish!
steps/make_mfcc.sh --nj 1 data/waves_test exp/make_mfcc/waves_test mfcc
utils/validate_data_dir.sh: WARNING: you have only one speaker. This probably a bad idea.
Search for the word 'bold' in http://kaldi-asr.org/doc/data_prep.html
for more information.
utils/validate_data_dir.sh: Successfully validated data-directory data/waves_test
steps/make_mfcc.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.
Succeeded creating MFCC features for waves_test
steps/compute_cmvn_stats.sh data/waves_test exp/make_mfcc/waves_test mfcc
Succeeded creating CMVN stats for waves_test
fix_data_dir.sh: kept all 20 utterances.
fix_data_dir.sh: old files are kept in data/waves_test/.backup
steps/make_mfcc.sh --nj 1 data/waves_train exp/make_mfcc/waves_train mfcc
utils/validate_data_dir.sh: WARNING: you have only one speaker. This probably a bad idea.
Search for the word 'bold' in http://kaldi-asr.org/doc/data_prep.html
for more information.
utils/validate_data_dir.sh: Successfully validated data-directory data/waves_train
steps/make_mfcc.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.
Succeeded creating MFCC features for waves_train
steps/compute_cmvn_stats.sh data/waves_train exp/make_mfcc/waves_train mfcc
Succeeded creating CMVN stats for waves_train
fix_data_dir.sh: kept all 680 utterances.
fix_data_dir.sh: old files are kept in data/waves_train/.backup
Feature extraction finish!
steps/train_mono.sh --nj 1 --cmd utils/run.pl --totgauss 400 data/waves_train data/lang exp/mono0
steps/train_mono.sh: Initializing monophone system.
steps/train_mono.sh: Compiling training graphs
steps/train_mono.sh: Aligning data equally (pass 0)
steps/train_mono.sh: Pass 1
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 2
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 3
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 4
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 5
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 6
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 7
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 8
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 9
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 10
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 11
steps/train_mono.sh: Pass 12
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 13
steps/train_mono.sh: Pass 14
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 15
steps/train_mono.sh: Pass 16
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 17
steps/train_mono.sh: Pass 18
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 19
steps/train_mono.sh: Pass 20
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 21
steps/train_mono.sh: Pass 22
steps/train_mono.sh: Pass 23
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 24
steps/train_mono.sh: Pass 25
steps/train_mono.sh: Pass 26
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 27
steps/train_mono.sh: Pass 28
steps/train_mono.sh: Pass 29
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 30
steps/train_mono.sh: Pass 31
steps/train_mono.sh: Pass 32
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 33
steps/train_mono.sh: Pass 34
steps/train_mono.sh: Pass 35
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 36
steps/train_mono.sh: Pass 37
steps/train_mono.sh: Pass 38
steps/train_mono.sh: Aligning data
steps/train_mono.sh: Pass 39
steps/diagnostic/analyze_alignments.sh --cmd utils/run.pl data/lang exp/mono0
analyze_phone_length_stats.py: WARNING: optional-silence SIL is seen only 32.6470588235% of the time at utterance begin. This may not be optimal.
steps/diagnostic/analyze_alignments.sh: see stats in exp/mono0/log/analyze_alignments.log
1 warnings in exp/mono0/log/analyze_alignments.log
exp/mono0: nj=1 align prob=-99.75 over 0.14h [retry=0.0%, fail=0.0%] states=35 gauss=396
steps/train_mono.sh: Done training monophone system in exp/mono0
Mono training finish!
tree-info exp/mono0/tree
tree-info exp/mono0/tree
fstpushspecial
fsttablecompose data/lang_test_tg/L_disambig.fst data/lang_test_tg/G.fst
fstminimizeencoded
fstdeterminizestar --use-log=true
fstisstochastic data/lang_test_tg/tmp/LG.fst
0.000133727 0.000118745
fstcomposecontext --context-size=1 --central-position=0 --read-disambig-syms=data/lang_test_tg/phones/disambig.int --write-disambig-syms=data/lang_test_tg/tmp/disambig_ilabels_1_0.int data/lang_test_tg/tmp/ilabels_1_0.30709
fstisstochastic data/lang_test_tg/tmp/CLG_1_0.fst
0.000133727 0.000118745
make-h-transducer --disambig-syms-out=exp/mono0/graph_tgpr/disambig_tid.int --transition-scale=1.0 data/lang_test_tg/tmp/ilabels_1_0 exp/mono0/tree exp/mono0/final.mdl
fsttablecompose exp/mono0/graph_tgpr/Ha.fst data/lang_test_tg/tmp/CLG_1_0.fst
fstrmepslocal
fstrmsymbols exp/mono0/graph_tgpr/disambig_tid.int
fstminimizeencoded
fstdeterminizestar --use-log=true
fstisstochastic exp/mono0/graph_tgpr/HCLGa.fst
0.000286827 -0.000292581
add-self-loops --self-loop-scale=0.1 --reorder=true exp/mono0/final.mdl
Graph compilation finish!
steps/decode.sh --nj 1 --cmd utils/run.pl exp/mono0/graph_tgpr data/waves_test exp/mono0/decode_waves_test
decode.sh: feature type is delta
steps/diagnostic/analyze_lats.sh --cmd utils/run.pl exp/mono0/graph_tgpr exp/mono0/decode_waves_test
analyze_phone_length_stats.py: WARNING: optional-silence SIL is seen only 55.0% of the time at utterance begin. This may not be optimal.
steps/diagnostic/analyze_lats.sh: see stats in exp/mono0/decode_waves_test/log/analyze_alignments.log
Overall, lattice depth (10,50,90-percentile)=(3,8,31) and mean=13.9
steps/diagnostic/analyze_lats.sh: see stats in exp/mono0/decode_waves_test/log/analyze_lattice_depth_stats.log
%WER 65.00 [ 13 / 20, 11 ins, 0 del, 2 sub ] exp/mono0/decode_waves_test/wer_10
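The final %WER line packs the whole result into one row: the error count is insertions plus deletions plus substitutions, divided by the number of reference words. The Python 3 sketch below (the function name is my own) parses that line and re-checks the arithmetic.

```python
import re

WER_PATTERN = re.compile(
    r"%WER\s+([\d.]+)\s+\[\s*(\d+)\s*/\s*(\d+),"
    r"\s*(\d+)\s+ins,\s*(\d+)\s+del,\s*(\d+)\s+sub\s*\]"
)


def parse_wer_line(line):
    """Extract the numbers from a Kaldi '%WER ...' summary line."""
    m = WER_PATTERN.search(line)
    if m is None:
        raise ValueError("no %%WER summary in: %r" % line)
    wer = float(m.group(1))
    errors, total, ins, dele, sub = (int(g) for g in m.groups()[1:])
    return {"wer": wer, "errors": errors, "total": total,
            "ins": ins, "del": dele, "sub": sub}


if __name__ == "__main__":
    line = "%WER 65.00 [ 13 / 20, 11 ins, 0 del, 2 sub ] exp/mono0/decode_waves_test/wer_10"
    r = parse_wer_line(line)
    print(r)
    print("recomputed WER: %.2f" % (100.0 * (r["ins"] + r["del"] + r["sub"]) / r["total"]))
```

Here 11 + 0 + 2 = 13 errors over 20 reference words gives 65%. Most of the errors are insertions, which suggests the decoder is often emitting more than one word for a single-word utterance.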