1. Environment

OS version:

#cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

Kernel version:

#uname -a

Linux master.k8s.com 3.10.0-957.27.2.el7.x86_64 #1 SMP Mon Jul 29 17:46:05 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

| Hostname | IP address |
| --- | --- |
| master.k8s.com | 192.168.25.65 |
| node.k8s.com | 192.168.25.66 |

2. Edit the hosts file on master and node so the two host names resolve

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.25.65 master.k8s.com

192.168.25.66 node.k8s.com
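Appending the two entries by hand works once, but a scripted re-run would add duplicates. A minimal sketch, assuming bash and a hypothetical helper name add_host_entry, that appends a mapping only when the host name is not already present (comment lines are ignored):

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Append "IP NAME" to a hosts-format FILE unless NAME is already mapped there.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Skip comment lines; look for NAME as an exact field in column 2 or later.
  if ! awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

On each machine, as root: add_host_entry /etc/hosts 192.168.25.65 master.k8s.com, then the same for node.k8s.com.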

3. Install chrony to keep time in sync across all servers

# yum install chrony -y

# systemctl start chronyd

# sed -i -e '/^server/s/^/#/' -e '1a server ntp.aliyun.com iburst' /etc/chrony.conf

# systemctl restart chronyd

# timedatectl set-timezone Asia/Shanghai

# timedatectl

Local time: Fri 2020-11-27 16:06:42 CST

Universal time: Fri 2020-11-27 08:06:42 UTC

RTC time: Fri 2020-11-27 08:06:42

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: yes

RTC in local TZ: no

DST active: n/a
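The sed command above comments out every active server line in /etc/chrony.conf and inserts server ntp.aliyun.com iburst after line 1. Run a second time, it would also comment out the Aliyun line and add another copy. A sketch of the same edit wrapped in a hypothetical use_ntp_server function that returns early when the line is already there (assumes GNU sed, as shipped with CentOS):

```shell
#!/usr/bin/env bash
# use_ntp_server CONF SERVER  (hypothetical helper)
use_ntp_server() {
  local conf="$1" server="$2"
  # Already pointing at SERVER: leave the file alone so re-runs are harmless.
  if grep -qxF "server ${server} iburst" "$conf"; then
    return 0
  fi
  # Same edit as the one-liner above: comment out existing "server" lines,
  # then append our server after line 1 (GNU sed "1a text" syntax).
  sed -i -e '/^server/s/^/#/' -e "1a server ${server} iburst" "$conf"
}
```

Usage: use_ntp_server /etc/chrony.conf ntp.aliyun.com && systemctl restart chronyd; chronyc sources then lists the server chrony is using.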

4. Disable the firewall, SELinux and swap on master and node

# systemctl stop firewalld && systemctl disable firewalld

# sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Check that the file now says SELINUX=disabled; if it still says SELINUX=enforcing, change it to disabled

# setenforce 0

# getenforce    # prints Permissive now; Disabled after the next reboot

# Turn off swap (by default the kubelet refuses to start while swap is on)

# swapoff -a

# To disable swap permanently, open the following file and comment out the swap line

# vi /etc/fstab
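Instead of editing /etc/fstab in vi, the swap entry can be commented out with sed. A sketch, assuming the swap line contains swap as a whitespace-separated field (as in the default CentOS 7 line /dev/mapper/centos-swap swap swap defaults 0 0); the function name is hypothetical:

```shell
#!/usr/bin/env bash
# disable_swap_in_fstab [FSTAB]  (hypothetical helper; default /etc/fstab)
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Prefix "#" to every line that is not already a comment and contains
  # "swap" as a separate field; already-commented lines are left as they are.
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
}
```

After swapoff -a and disable_swap_in_fstab, free -h should report no swap, and it stays that way after a reboot.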

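5. Configure and tune kernel parameters

Configure the sysctl kernel parameters. The net.bridge.* keys only exist once the br_netfilter module is loaded, so load it first. Note that cat > replaces the whole /etc/sysctl.conf.

$ modprobe br_netfilter

$ cat > /etc/sysctl.conf <<EOF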

vm.max_map_count=262144

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

Apply the file

$ sysctl -p
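The net.bridge.* keys only exist while the br_netfilter module is loaded, and a module loaded by hand with modprobe is gone after a reboot. A minimal sketch, assuming a systemd host that reads /etc/modules-load.d and /etc/sysctl.d (CentOS 7 does), of a hypothetical write_k8s_kernel_conf function that writes both drop-in files under an optional root prefix and gathers the Kubernetes-related keys from this step and step 8 into one file:

```shell
#!/usr/bin/env bash
# write_k8s_kernel_conf [ROOT]  (hypothetical helper; ROOT defaults to /)
write_k8s_kernel_conf() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # systemd-modules-load reads this at boot and loads br_netfilter.
  echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # Bridged traffic goes through iptables; the node forwards IPv4; avoid swapping.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}
```

As root, run write_k8s_kernel_conf, then modprobe br_netfilter && sysctl --system to apply it without rebooting.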

Edit the Linux resource limits: raise the ulimit caps on open files and processes, and the default limits for services managed by systemd

$ echo "* soft nofile 655360" >> /etc/security/limits.conf

$ echo "* hard nofile 655360" >> /etc/security/limits.conf

$ echo "* soft nproc 655360" >> /etc/security/limits.conf

$ echo "* hard nproc 655360" >> /etc/security/limits.conf

$ echo "* soft memlock unlimited" >> /etc/security/limits.conf

$ echo "* hard memlock unlimited" >> /etc/security/limits.conf

$ echo "DefaultLimitNOFILE=1024000" >> /etc/systemd/system.conf

$ echo "DefaultLimitNPROC=1024000" >> /etc/systemd/system.conf
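Appending with echo ... >> adds duplicate lines every time the step is re-run. A sketch of the same limits applied idempotently, with hypothetical helper names append_once and apply_limits; the file paths are parameters so the sketch can be tried on copies first:

```shell
#!/usr/bin/env bash
# append_once FILE LINE: add LINE to FILE unless an identical line exists.
append_once() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# apply_limits [LIMITS_CONF] [SYSTEMD_CONF]  (hypothetical helper)
apply_limits() {
  local limits="${1:-/etc/security/limits.conf}"
  local sysconf="${2:-/etc/systemd/system.conf}"
  local item
  for item in "soft nofile 655360" "hard nofile 655360" \
              "soft nproc 655360" "hard nproc 655360" \
              "soft memlock unlimited" "hard memlock unlimited"; do
    append_once "$limits" "* $item"
  done
  append_once "$sysconf" "DefaultLimitNOFILE=1024000"
  append_once "$sysconf" "DefaultLimitNPROC=1024000"
}
```

limits.conf applies to new login sessions; the systemd defaults are picked up after systemctl daemon-reexec or a reboot.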

6. Install Docker on master and node

# Install dependencies

# yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker CE yum repository

# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Disable the edge and test channels so only stable releases are listed

# yum-config-manager --disable docker-ce-edge

# yum-config-manager --disable docker-ce-test

# Rebuild the yum package index

# yum makecache fast

# Install Docker CE: either the latest version

# yum install -y docker-ce

# or a specific version

# yum list docker-ce --showduplicates | sort -r    # find the version you need

# yum install docker-ce-18.06.0.ce -y

# Start Docker and enable it at boot

# systemctl start docker && systemctl enable docker

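# Configure Docker to use the Aliyun registry accelerator (the accelerator URL is tied to one Aliyun account; use the one from your own Aliyun console)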

#vi /etc/docker/daemon.json

{

"registry-mirrors": ["https://q2hy3fzi.mirror.aliyuncs.com"]

}

#systemctl daemon-reload && systemctl restart docker
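kubeadm's preflight checks warn when Docker uses the cgroupfs cgroup driver and recommend systemd instead. A sketch of a daemon.json that keeps the registry mirror from above and also switches Docker to the systemd driver; the helper name and the optional directory argument are assumptions, and the mirror URL should be replaced with your own accelerator address:

```shell
#!/usr/bin/env bash
# write_docker_daemon_json [DIR]  (hypothetical helper; DIR defaults to /etc/docker)
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  # registry-mirrors: pull Docker Hub images through the Aliyun accelerator.
  # exec-opts: make Docker use the systemd cgroup driver.
  cat > "$dir/daemon.json" <<'EOF'
{
  "registry-mirrors": ["https://q2hy3fzi.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Then run systemctl daemon-reload && systemctl restart docker; docker info | grep -i cgroup should report systemd.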

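7. Set up SSH trust between nodes

With SSH trust in place, the nodes can log in to each other without a password, which makes later automated deployment easier.

# ssh-keygen    # run on every machine and just press Enter at each prompt

# ssh-copy-id root@node.k8s.com    # on master, copy the public key to the other nodes; answer yes and enter the password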
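With more nodes, the key copy can be looped. A minimal sketch, assuming the root account is used on every host and the host names resolve through the hosts file from step 2; the function name is hypothetical, and DRY_RUN=1 only prints the commands:

```shell
#!/usr/bin/env bash
# copy_ssh_key_to HOST...  (hypothetical helper)
copy_ssh_key_to() {
  # With DRY_RUN set, prefix every command with echo instead of running it.
  local run="${DRY_RUN:+echo}" host
  # Create a passphrase-less RSA key once, at the default location.
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    $run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
  fi
  for host in "$@"; do
    # Prompts for "yes" and the root password once per host.
    $run ssh-copy-id "root@${host}"
  done
}
```

On master: copy_ssh_key_to node.k8s.com; afterwards ssh root@node.k8s.com hostname should return without asking for a password.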

8. Install the Kubernetes tools on master and node

Switch the yum repository to the Aliyun mirror

# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

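# Install the Kubernetes tools with yum (this installs the latest version)

# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# or pin a specific version; the rest of this guide uses 1.19.4

# yum install -y kubelet-1.19.4 kubeadm-1.19.4 kubectl-1.19.4 --disableexcludes=kubernetes

# Enable and start the kubelet. It keeps restarting until kubeadm init or kubeadm join gives it a configuration; that is expected.

# systemctl enable kubelet && systemctl start kubelet

# Check the version

# kubeadm version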

Let iptables see bridged traffic

# vi /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

# After saving, apply it

# sysctl --system

9. On master: list the required images and initialize the cluster

# kubeadm config images list

W1127 16:52:01.979405 19281 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

k8s.gcr.io/kube-apiserver:v1.19.4

k8s.gcr.io/kube-controller-manager:v1.19.4

k8s.gcr.io/kube-scheduler:v1.19.4

k8s.gcr.io/kube-proxy:v1.19.4

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

# Create a script that pulls each image from the Aliyun mirror and retags it with the k8s.gcr.io name kubeadm expects:

# vi docker.sh

#!/bin/bash

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.4

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.4 k8s.gcr.io/kube-apiserver:v1.19.4

docker rmi registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.4

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.19.4

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.19.4 k8s.gcr.io/kube-controller-manager:v1.19.4

docker rmi registry.aliyuncs.com/google_containers/kube-controller-manager:v1.19.4

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.4

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.4 k8s.gcr.io/kube-scheduler:v1.19.4

docker rmi registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.4

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.19.4

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.19.4 k8s.gcr.io/kube-proxy:v1.19.4

docker rmi registry.aliyuncs.com/google_containers/kube-proxy:v1.19.4

docker pull registry.aliyuncs.com/google_containers/etcd:3.4.13-0

docker tag registry.aliyuncs.com/google_containers/etcd:3.4.13-0 k8s.gcr.io/etcd:3.4.13-0

docker rmi registry.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.aliyuncs.com/google_containers/pause:3.2

docker tag registry.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

docker rmi registry.aliyuncs.com/google_containers/pause:3.2

docker pull coredns/coredns:1.7.0

docker tag coredns/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0

docker rmi coredns/coredns:1.7.0

# bash docker.sh

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.19.4 635b36f4d89f 2 weeks ago 118MB

k8s.gcr.io/kube-controller-manager v1.19.4 4830ab618586 2 weeks ago 111MB

k8s.gcr.io/kube-apiserver v1.19.4 b15c6247777d 2 weeks ago 119MB

k8s.gcr.io/kube-scheduler v1.19.4 14cd22f7abe7 2 weeks ago 45.7MB

k8s.gcr.io/etcd 3.4.13-0 0369cf4303ff 3 months ago 253MB

k8s.gcr.io/coredns 1.7.0 bfe3a36ebd25 5 months ago 45.2MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 9 months ago 683kB
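The script above repeats the same pull/tag/rmi triple for every image. A sketch of the same steps as a loop; the function names are hypothetical, DRY_RUN=1 prints the docker commands instead of running them, and the image list is the v1.19.4 one printed by kubeadm config images list:

```shell
#!/usr/bin/env bash
# retag SRC DST: pull SRC, tag it as DST, drop the SRC tag.
retag() {
  local run="${DRY_RUN:+echo}"
  $run docker pull "$1"
  $run docker tag "$1" "$2"
  $run docker rmi "$1"
}

# pull_k8s_images  (hypothetical helper)
pull_k8s_images() {
  local mirror=registry.aliyuncs.com/google_containers img
  for img in kube-apiserver:v1.19.4 kube-controller-manager:v1.19.4 \
             kube-scheduler:v1.19.4 kube-proxy:v1.19.4 \
             etcd:3.4.13-0 pause:3.2; do
    retag "${mirror}/${img}" "k8s.gcr.io/${img}"
  done
  # CoreDNS comes from Docker Hub, as in the script above.
  retag coredns/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
}
```

Alternatively, kubeadm can use the mirror directly without any retagging: pass --image-repository registry.aliyuncs.com/google_containers to kubeadm config images pull and to kubeadm init.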

# Initialize the master

# kubeadm init --kubernetes-version=1.19.4 \
    --apiserver-advertise-address=192.168.25.65 \
    --service-cidr=10.1.0.0/16 \
    --pod-network-cidr=10.244.0.0/16

When it finishes, the output tells you to run the following to start using the cluster:

# mkdir -p $HOME/.kube

# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# The control-plane node is up. Save the join command at the end of the output; every node needs it to join the cluster:

You can now join any number of machines by running the following on each node as root:

kubeadm join 192.168.25.65:6443 --token ygn0n5.6yutikspk9y64rdt \
    --discovery-token-ca-cert-hash sha256:602fc447acaa422ce73a1ec1b506e219d90ace3de1c23a4f563e7151cc7def50

# Configure kubectl credentials for root, either permanently:

#echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

#source ~/.bash_profile

or for the current shell only:

#export KUBECONFIG=/etc/kubernetes/admin.conf

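# Check the Pods in kube-system and confirm that every component is Running.

# CoreDNS stays Pending for now: until a Pod network add-on is installed (step 10), the master node carries a not-ready taint, so CoreDNS cannot be scheduled yet.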

[root@master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-f9fd979d6-24cl7 0/1 Pending 0 3m34s

coredns-f9fd979d6-fp8gh 0/1 Pending 0 3m34s

etcd-master.k8s.com 1/1 Running 0 3m44s

kube-apiserver-master.k8s.com 1/1 Running 0 3m44s

kube-controller-manager-master.k8s.com 1/1 Running 0 3m44s

kube-proxy-gxqxq 1/1 Running 0 3m34s

kube-scheduler-master.k8s.com 1/1 Running 0 3m44s
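The flags passed to kubeadm init can also be kept in a configuration file, which is easier to review and reuse. A sketch, assuming the kubeadm.k8s.io/v1beta2 configuration API that kubeadm 1.19 reads; the helper name and the output file name are illustrative:

```shell
#!/usr/bin/env bash
# write_kubeadm_config [FILE]  (hypothetical helper)
# Same settings as the kubeadm init flags used above.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.25.65
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.19.4
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}
```

Then initialize with kubeadm init --config kubeadm-config.yaml; kubeadm config print init-defaults lists every field the file can contain.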

10. Deploy the flannel network add-on

# Configure the flannel network

#mkdir -p /root/k8s/

#cd /root/k8s

#wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check which images the manifest uses

# cat kube-flannel.yml |grep image|uniq

image: quay.io/coreos/flannel:v0.13.1-rc1

Pull the image

# docker pull quay.io/coreos/flannel:v0.13.1-rc1

Deploy the add-on

# kubectl apply -f kube-flannel.yml

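Note: if https://raw.githubusercontent.com cannot be reached, try the following:

# Look up the IP address of raw.githubusercontent.com at https://site.ip138.com/raw.Githubusercontent.com/

# Add it to the hosts file; on Ubuntu, CentOS and macOS this is done directly from a terminal: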

# vi /etc/hosts

151.101.76.133 raw.githubusercontent.com

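If the "Network" value in the YAML ("Network": "10.244.0.0/16") differs from the --pod-network-cidr passed to kubeadm init, change it to match; otherwise Pods, and the Cluster IPs in front of them, may be unreachable across nodes.

The --pod-network-cidr used above is already 10.244.0.0/16, so this YAML file needs no changes.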
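The comparison can be scripted before running kubectl apply. A sketch that reads the "Network" value from the net-conf.json section of kube-flannel.yml with grep and sed (assuming it is written on one line in the form "Network": "<cidr>") and compares it with the CIDR given to kubeadm init; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# flannel_network MANIFEST: print the first "Network" value found in MANIFEST.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n1 \
    | sed 's/.*"Network": *"\([^"]*\)"/\1/'
}

# check_flannel_cidr MANIFEST CIDR: succeed only if both CIDRs are identical.
check_flannel_cidr() {
  local manifest="$1" expected="$2" actual
  actual=$(flannel_network "$manifest")
  if [ "$actual" = "$expected" ]; then
    echo "OK: flannel Network $actual matches --pod-network-cidr"
  else
    echo "MISMATCH: flannel uses '$actual', kubeadm init used '$expected'" >&2
    return 1
  fi
}
```

Usage: check_flannel_cidr kube-flannel.yml 10.244.0.0/16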

11. Enable kubectl command completion

# (master only)

#yum install -y bash-completion

#source <(kubectl completion bash)

#echo "source <(kubectl completion bash)" >> ~/.bashrc

#source ~/.bashrc

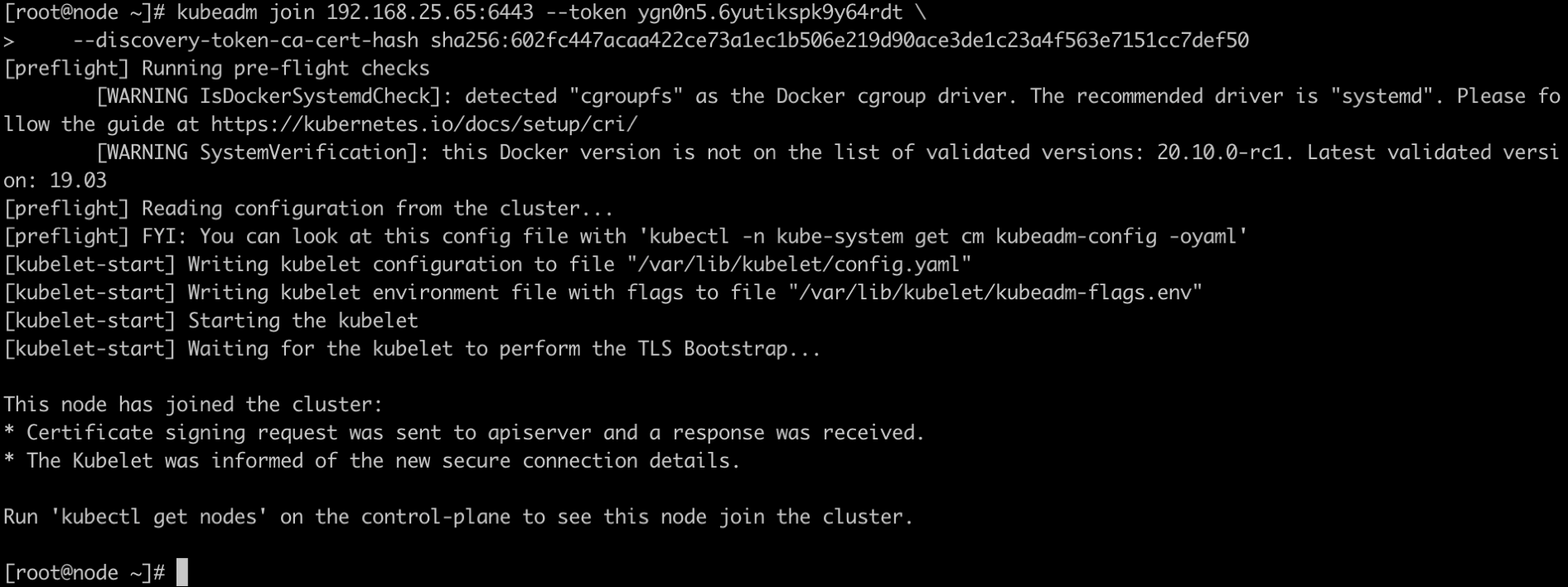

12. Join the node to the cluster

On node, run the join command saved from the kubeadm init output:

# kubeadm join 192.168.25.65:6443 --token ygn0n5.6yutikspk9y64rdt \
    --discovery-token-ca-cert-hash sha256:602fc447acaa422ce73a1ec1b506e219d90ace3de1c23a4f563e7151cc7def50

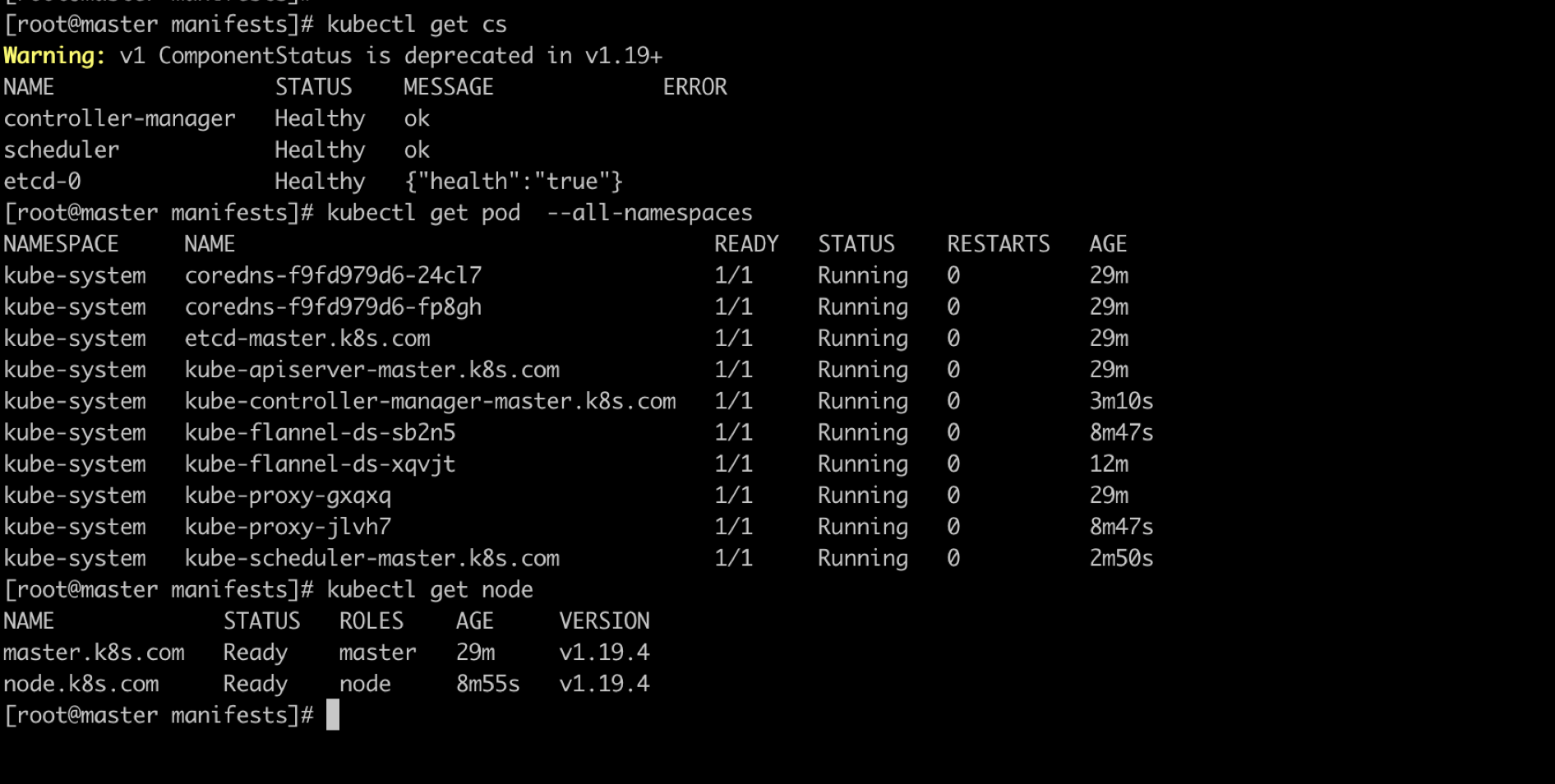

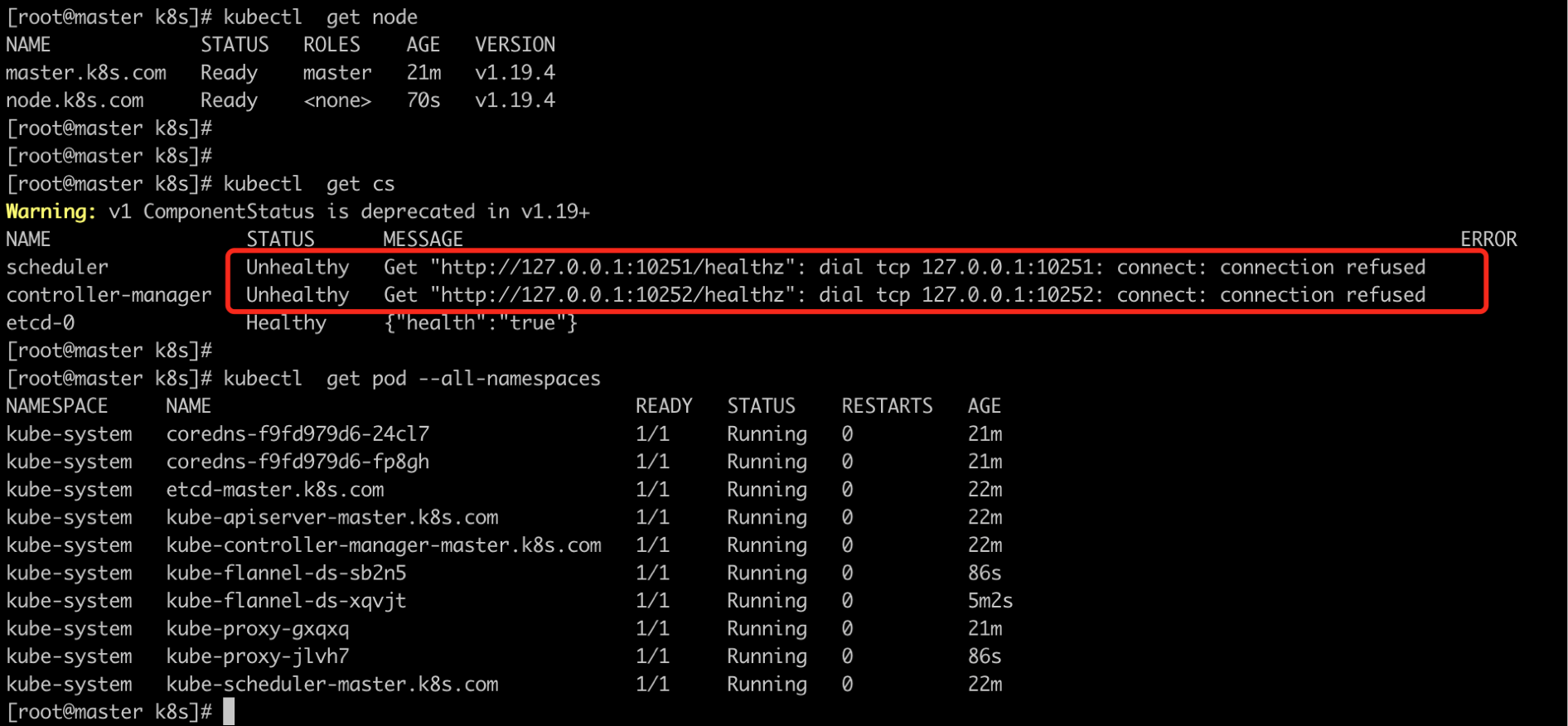
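The token printed by kubeadm init expires (after 24 hours by default); on master, kubeadm token create --print-join-command prints a fresh join command. The --discovery-token-ca-cert-hash value can also be recomputed from the cluster CA. The openssl pipeline below follows the one in the kubeadm documentation, wrapped in a hypothetical ca_cert_hash function:

```shell
#!/usr/bin/env bash
# ca_cert_hash [CA_CRT]  (hypothetical helper; default is kubeadm's CA path)
# Prints "sha256:<hex>" for use with kubeadm join --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}" digest
  # Extract the CA public key, convert it to DER and hash it with SHA-256;
  # sed keeps only the hex digest from openssl's "...= <hex>" output.
  digest=$(openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:${digest}"
}
```

On master, ca_cert_hash should print the same sha256:602fc447... value shown in the join command above.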

Check the cluster state:

# kubectl get cs

# kubectl get pod --all-namespaces

# kubectl get node

If these commands report errors, this blog post explains how to fix them: https://llovewxm1314.blog.csdn.net/article/details/108458197

13. Deploy the Dashboard add-on

Download the Dashboard manifest

# Pull the images on node

#docker pull kubernetesui/dashboard:v2.0.0

#docker pull kubernetesui/metrics-scraper:v1.0.4

# Download the manifest

#wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

Edit recommended.yaml and add type: NodePort to the kubernetes-dashboard Service so the Dashboard is reachable from outside the cluster:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

Create the resources:

# kubectl create -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Create a ServiceAccount and bind it to the built-in cluster-admin ClusterRole:

#kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

#kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

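Log in to kubernetes-dashboard: find the dashboard-admin token secret, then display it (the random suffix of the secret name differs on every cluster):

#kubectl get secret -n kubernetes-dashboard

#kubectl describe secret dashboard-admin-token-bwdjj -n kubernetes-dashboard

To look up the kubernetes-dashboard Service:

#kubectl --namespace=kubernetes-dashboard get service kubernetes-dashboard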

Fixing Google Chrome refusing to open the Kubernetes Dashboard

Run:

#mkdir key && cd key

Generate a certificate

#openssl genrsa -out dashboard.key 2048

#openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=192.168.25.65'

#openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt
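Recent Chrome versions ignore the CN and only match the host against the certificate's subjectAltName, so a certificate carrying only /CN=192.168.25.65 is still rejected as a name mismatch even after you import it as trusted. A sketch of the same three openssl steps with the IP also written into subjectAltName through an extension file (the -extfile option also exists in the OpenSSL 1.0.2 shipped with CentOS 7); the function name, the san.ext file name and the 365-day validity are assumptions:

```shell
#!/usr/bin/env bash
# make_dashboard_cert IP [DIR]  (hypothetical helper)
# Writes dashboard.key, dashboard.csr and dashboard.crt into DIR.
make_dashboard_cert() {
  local ip="$1" dir="${2:-.}"
  openssl genrsa -out "$dir/dashboard.key" 2048
  openssl req -new -key "$dir/dashboard.key" -out "$dir/dashboard.csr" \
    -subj "/CN=${ip}"
  # Extension file with no section header: x509 reads its default section.
  printf 'subjectAltName=IP:%s\n' "$ip" > "$dir/san.ext"
  # Self-sign the CSR and copy the subjectAltName into the certificate.
  openssl x509 -req -in "$dir/dashboard.csr" -signkey "$dir/dashboard.key" \
    -out "$dir/dashboard.crt" -days 365 -extfile "$dir/san.ext"
}
```

Run make_dashboard_cert 192.168.25.65 inside the key directory, then recreate the kubernetes-dashboard-certs secret as shown below.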

Delete the existing certificate secret

#kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard

Create a new certificate secret

#kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

List the Pods

#kubectl get pod -n kubernetes-dashboard

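Restart the Pod by deleting it; its Deployment recreates it with the new certificate (use the Pod name listed by the previous command)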

#kubectl delete pod kubernetes-dashboard-7b544877d5-d76kd -n kubernetes-dashboard

14. Deploy metrics-server

Download the manifest

#wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.1/components.yaml

# cat components.yaml | grep image

image: k8s.gcr.io/metrics-server/metrics-server:v0.4.1

#docker pull registry.aliyuncs.com/google_containers/metrics-server:v0.4.1

#docker tag registry.aliyuncs.com/google_containers/metrics-server:v0.4.1 k8s.gcr.io/metrics-server/metrics-server:v0.4.1

#docker rmi registry.aliyuncs.com/google_containers/metrics-server:v0.4.1

# Edit the file (lines marked "# added" are the changes; image is the v0.4.1 image pulled above)

# vi components.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      hostNetwork: true   # added
      containers:
      - name: metrics-server
        image: k8s.gcr.io/metrics-server/metrics-server:v0.4.1
        imagePullPolicy: IfNotPresent
        command:   # added
        - /metrics-server   # added
        - --metric-resolution=30s   # added
        - --requestheader-allowed-names=aggregator   # added
        - --kubelet-insecure-tls   # added
        - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalDNS,ExternalIP,Hostname   # added
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch

#kubectl create -f components.yaml

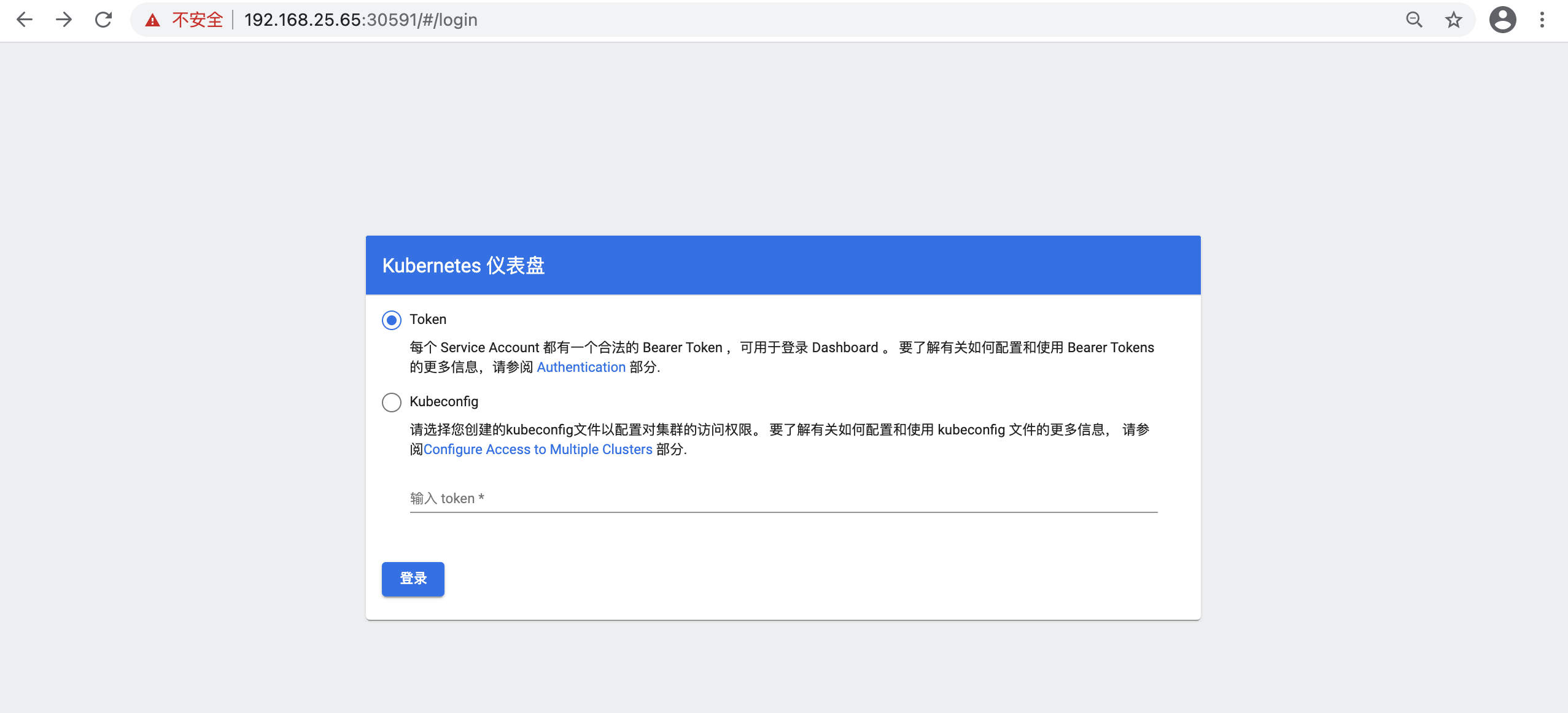

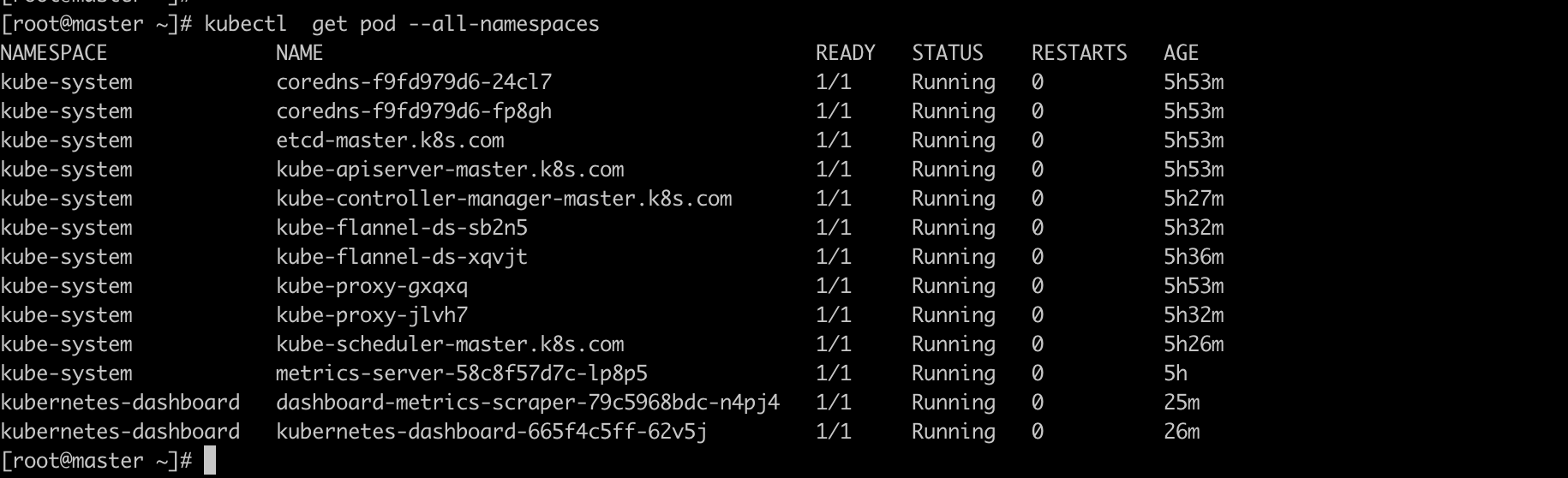

15. Log in to the cluster Dashboard

# Find the NodePort of the Dashboard Service

# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.1.131.57 <none> 8000/TCP 5h25m

kubernetes-dashboard NodePort 10.1.227.79 <none> 443:30591/TCP 5h25m

# Get the login token

#kubectl describe secret/$(kubectl get secret -n kubernetes-dashboard |grep admin|awk '{print $1}') -n kubernetes-dashboard

# Note down the token:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IktHT3cwbWpuUkZhc3BqTG5LamFtYzV3STc1SDRPaERGbWV6SG5WUW5KRkEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tamdncjYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMjU3NTRjYzQtNjNiNS00YTM0LThlYzgtNzIwNjE4MDE1MjE1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.7nTKEYPMw0mh1C53KfanP-fadtt9OEnmVbKpfaztJmBBDlKphuT4NBkqqEyQDZ0TyOF8naB9vHF4RmdNZWEBCGQh8YrsdfMyLOMnZSMIkMAxqdMv52zuSzxVOYjW5EzWl7YOhNKHglTp78LwQw7CvGuc8lNqp6Qij2iakxghq6yIs5deUonnCxvKXGfeoZ2NV2xu8A9UYffM6_gvNdpuf7DpUMXNsYglMOwY4uu5FGrH4rcPizdId6Il227wOs9PAJ4XOQEHJYY2xcbU7H1GbV297t16o95msXHkQD7SdhgkKNCeaL4NWJ67K5cIKJNr_i2ISNFWXZ6g9scejce-cg
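A service-account token is a JWT: three base64url segments separated by dots, the middle one being a JSON payload. Decoding that payload is a quick way to confirm that a copied token belongs to the intended account; for the token above, the sub field should read system:serviceaccount:kubernetes-dashboard:dashboard-admin. A sketch with a hypothetical jwt_payload function, assuming GNU base64:

```shell
#!/usr/bin/env bash
# jwt_payload TOKEN  (hypothetical helper): print the decoded JSON payload of a JWT.
jwt_payload() {
  local seg
  # Take the middle segment and map the base64url alphabet back to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing "=" padding; restore it to a multiple of 4.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Usage: jwt_payload "$TOKEN" prints the claims, including the namespace and service-account name.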

# Open https://master.k8s.com:30591 in a browser (30591 is the NodePort shown above) and log in with the token

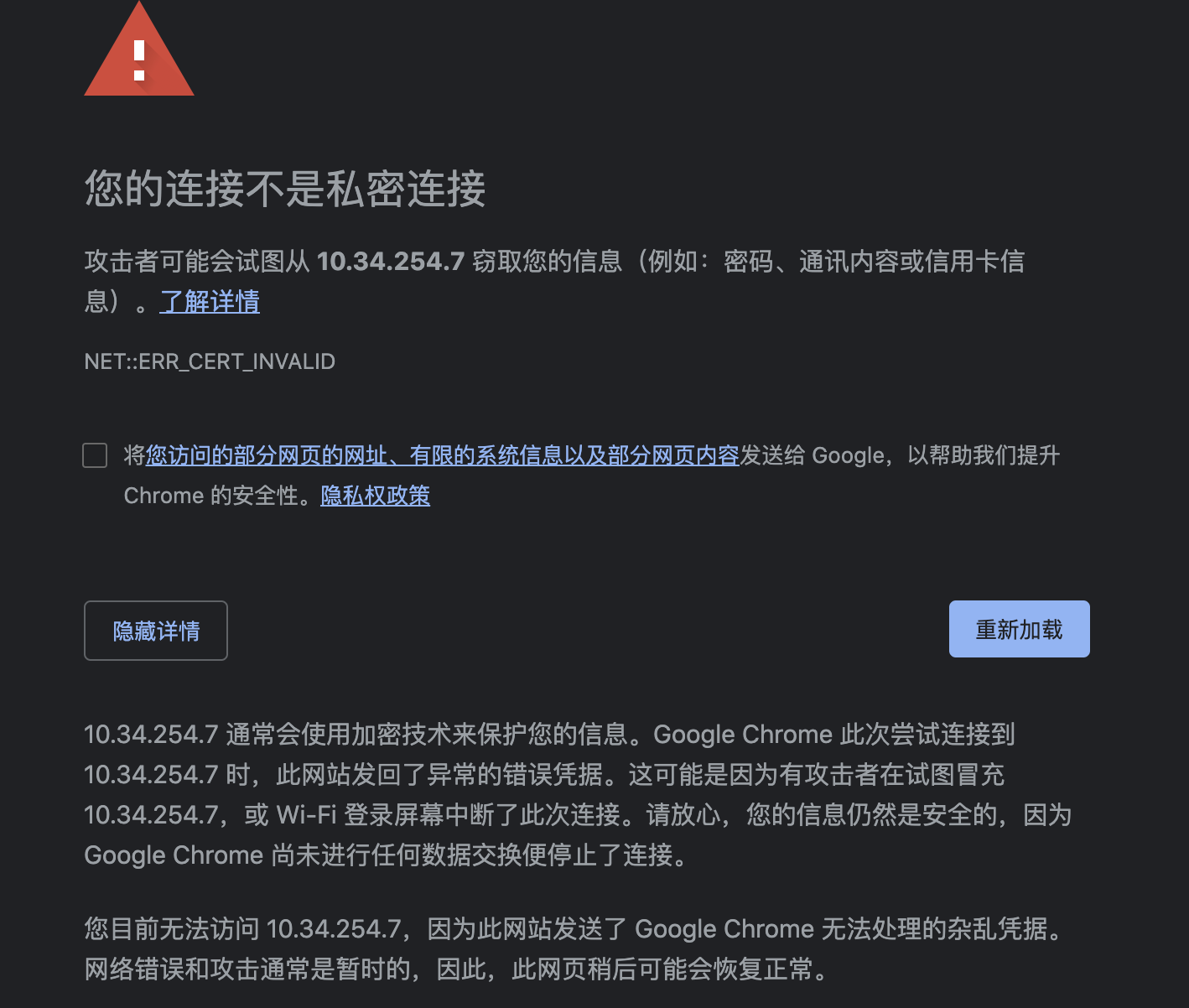

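# Note: if Chrome says the site sent garbled credentials and treats it as insecure (the message is quoted below), use this workaround:

On the error page, click anywhere and type thisisunsafe (nothing appears on screen while you type).

Symptom:

10.34.254.7 normally uses encryption to protect your information. When Google Chrome tried to connect to 10.34.254.7 this time, the website sent back unusual and incorrect credentials. This may happen when an attacker is trying to pretend to be 10.34.254.7, or a Wi-Fi sign-in screen has interrupted the connection. Your information is still secure because Google Chrome stopped the connection before any data was exchanged.

You cannot visit 10.34.254.7 right now because the website sent scrambled credentials that Google Chrome cannot process. Network errors and attacks are usually temporary, so this page will probably work later.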

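16. Wrap-up

# That completes the deployment of Kubernetes v1.19.4. Plenty of small details are not covered here; walking through the whole process yourself is the best way to really understand them.

That's all for this first post. Thanks for reading to the end. Here's a ✌️ to life!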

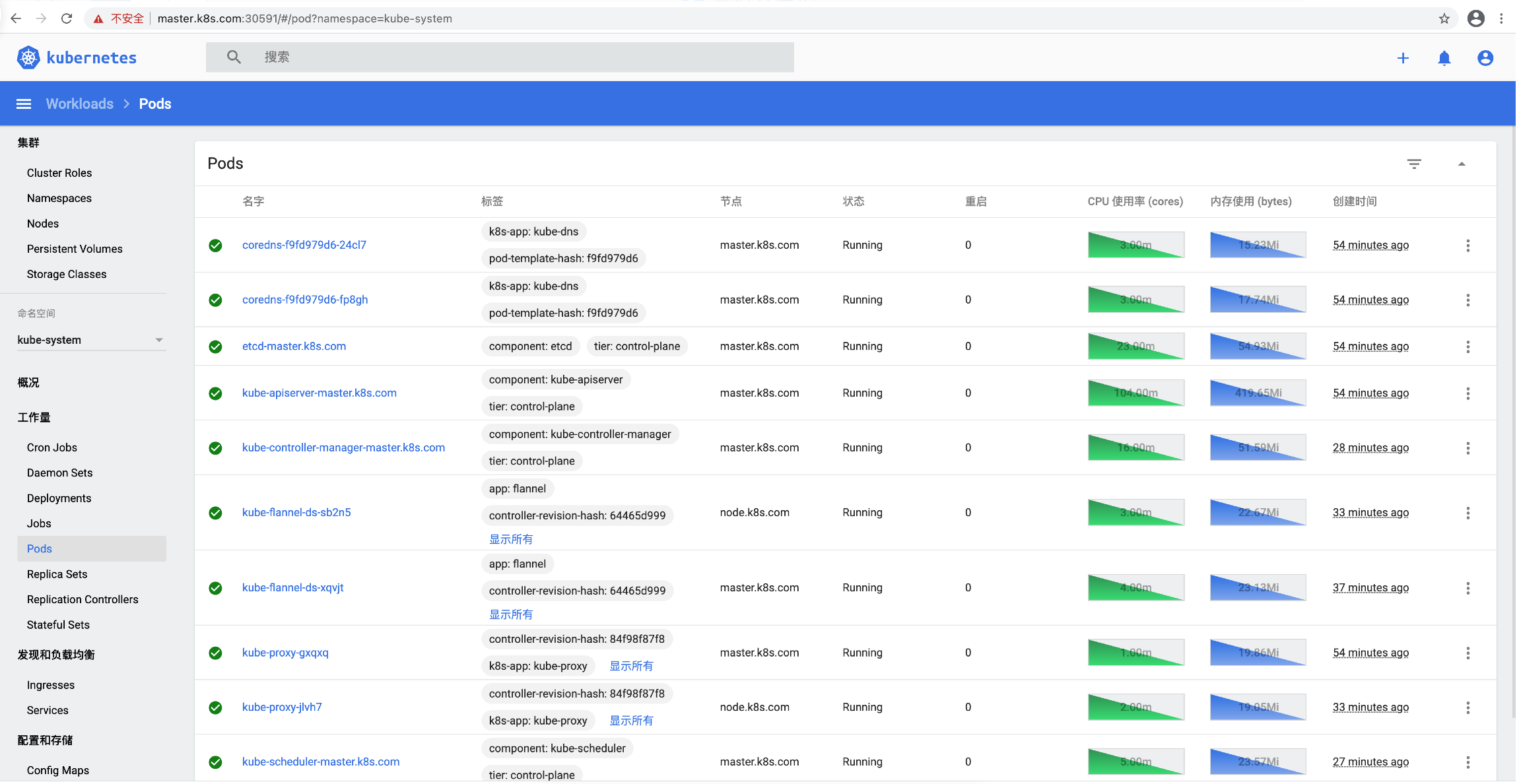

After logging in, the Dashboard looks like this: