yolo5.4, straight to the point.

After training the model, let's write a small PyTorch example that can be compared with the libtorch versions below:

```python
import cv2
import torch
import torch.backends.cudnn as cudnn
from numpy import random

from utils.datasets import LoadImages
from models.experimental import attempt_load

from utils.general import check_img_size, non_max_suppression, scale_coords, xyxy2xywh
from utils.plots import plot_one_box

# device
device = torch.device('cpu')  # cuda:0, cpu = FP32
# load model
model = attempt_load(['weights/yolov5s.pt'], map_location=device)
# model = torch.jit.load("weights/yolo-5S-face-wg.torchscript.pt")
# model = model.to(device)
# model.eval()

# inference settings
stride = int(model.stride.max())
imgsz = 638  # note: deliberately set to 638; check_img_size rounds it up to a stride multiple (640)
imgsz = check_img_size(imgsz, s=stride)
# read the image (see LoadImages in utils/datasets.py, around line 191)
dataset = LoadImages('D:/Data/yolo/4-320-314.jpg', img_size=imgsz, stride=stride)
# get class names
names = model.names
# one random box color per class
colors = [[random.randint(0, 255) for _ in range(3)] for _ in names]

# img:  letterboxed (resized + padded) network input
# im0s: original image as read by OpenCV
with torch.no_grad():  # inference only, no gradients needed
    for path, img, im0s, vid_cap in dataset:
        img = torch.from_numpy(img).to(device)
        img = img.float()
        img /= 255.0  # 0-255 -> 0.0-1.0
        if img.ndimension() == 3:
            img = img.unsqueeze(0)  # add batch dimension
        # img: torch.Size([1, 3, 256, 640])
        pred = model(img, augment=False)[0]  # default: no test-time augmentation
        # NMS
        pred = non_max_suppression(pred, conf_thres=0.25, iou_thres=0.45, classes=None, agnostic=False)

        # Process detections
        for i, det in enumerate(pred):
            # im0 (source image): (360, 900, 3)
            # gn: tensor([900, 360, 900, 360])
            im0 = im0s
            gn = torch.tensor(im0.shape)[[1, 0, 1, 0]]  # normalization gain (w, h, w, h)
            if len(det):  # number of detected boxes
                # Rescale boxes from img_size back to the original image
                det[:, :4] = scale_coords(img.shape[2:], det[:, :4], im0.shape).round()
                # conf: confidence score, cls: class index
                for *xyxy, conf, cls in reversed(det):
                    # box geometry conversion: xyxy -> normalized xywh
                    xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist()
                    label = f'{names[int(cls)]} {conf:.2f}'
                    plot_one_box(xyxy, im0, label=label, color=colors[int(cls)], line_thickness=3)
        cv2.namedWindow("dst_img", cv2.WINDOW_NORMAL)
        cv2.imshow("dst_img", im0)
        cv2.waitKey(0)
```
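The example above rounds the requested size to a stride multiple (638 becomes 640), letterboxes the image, and later uses `scale_coords` to map boxes back onto the original. A pure-Python sketch of that geometry, written from my reading of YOLOv5's `check_img_size`, `letterbox(auto=True)` and `scale_coords` rather than copied from them (the helper names `make_divisible`, `letterbox_shape` and `scale_box` are illustrative), reproduces the shapes noted in the comments:

```python
import math


def make_divisible(x: int, divisor: int) -> int:
    """Round x up to the nearest multiple of divisor (the rounding check_img_size applies)."""
    return math.ceil(x / divisor) * divisor


def letterbox_shape(h0: int, w0: int, new_size: int = 640, stride: int = 32) -> tuple:
    """(h, w) of the letterboxed input for an h0 x w0 image.

    Mimics letterbox(auto=True): scale so the image fits inside new_size x new_size,
    then pad the short side only up to the next multiple of stride.
    """
    r = min(new_size / h0, new_size / w0)
    new_w, new_h = int(round(w0 * r)), int(round(h0 * r))
    pad_w = (new_size - new_w) % stride
    pad_h = (new_size - new_h) % stride
    return new_h + pad_h, new_w + pad_w


def scale_box(box, img1_shape, img0_shape):
    """Map (x1, y1, x2, y2) from letterboxed-input pixels back to original-image pixels."""
    gain = min(img1_shape[0] / img0_shape[0], img1_shape[1] / img0_shape[1])
    pad_x = (img1_shape[1] - img0_shape[1] * gain) / 2  # left padding
    pad_y = (img1_shape[0] - img0_shape[0] * gain) / 2  # top padding
    h0, w0 = img0_shape[:2]
    x1, y1, x2, y2 = box
    # undo the padding, undo the scaling, then clip to the original image
    x1, x2 = [min(max((v - pad_x) / gain, 0.0), w0) for v in (x1, x2)]
    y1, y2 = [min(max((v - pad_y) / gain, 0.0), h0) for v in (y1, y2)]
    return x1, y1, x2, y2
```

For the 360 x 900 image in the comments, `letterbox_shape(360, 900)` gives `(256, 640)`, matching `torch.Size([1, 3, 256, 640])`: because padding only goes up to the next multiple of 32, wide images are not padded all the way to 640 x 640.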

I. libtorch + CPU version

Model export script for the CPU version, export-libtorch-cpu.py:

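Compared with the official export.py, only line 54 needs to change: `model.model[-1].export = False` (the official script sets it to `True`), so the traced `Detect()` layer keeps its box-decoding step.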

```python
"""Exports a YOLOv5 *.pt model to ONNX and TorchScript formats

Usage:
    $ export PYTHONPATH="$PWD" && python models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1
"""

import argparse
import sys
import time

sys.path.append('./')  # to run '$ python *.py' files in subdirectories

import torch
import torch.nn as nn

import models
from models.experimental import attempt_load
from utils.activations import Hardswish, SiLU
from utils.general import set_logging, check_img_size

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='./yolov5s.pt', help='weights path')  # from yolov5/models/
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='image size')  # height, width
    parser.add_argument('--dynamic', action='store_true', help='dynamic ONNX axes')
    parser.add_argument('--batch-size', type=int, default=1, help='batch size')
    opt = parser.parse_args()
    opt.img_size *= 2 if len(opt.img_size) == 1 else 1  # expand
    print(opt)
    set_logging()
    t = time.time()

    # Load PyTorch model
    model = attempt_load(opt.weights, map_location=torch.device('cpu'))  # load FP32 model
    labels = model.names

    # Checks
    gs = int(max(model.stride))  # grid size (max stride)
    opt.img_size = [check_img_size(x, gs) for x in opt.img_size]  # verify img_size are gs-multiples

    # Input
    img = torch.zeros(opt.batch_size, 3, *opt.img_size)  # image size(1,3,320,192) iDetection

    # Update model
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, models.common.Conv):  # assign export-friendly activations
            if isinstance(m.act, nn.Hardswish):
                m.act = Hardswish()
            elif isinstance(m.act, nn.SiLU):
                m.act = SiLU()
        # elif isinstance(m, models.yolo.Detect):
        #     m.forward = m.forward_export  # assign forward (optional)
    model.model[-1].export = False  # changed from True: keep Detect() box decoding in the traced graph
    y = model(img)  # dry run

    # TorchScript export
    try:
        print('\nStarting TorchScript export with torch %s...' % torch.__version__)
        f = opt.weights.replace('.pt', '.torchscript.pt')  # filename
        ts = torch.jit.trace(model, img)
        ts.save(f)
        print('TorchScript export success, saved as %s' % f)
    except Exception as e:
        print('TorchScript export failure: %s' % e)

    # ONNX export
    try:
        import onnx

        print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
        f = opt.weights.replace('.pt', '.onnx')  # filename
        torch.onnx.export(model, img, f, verbose=False, opset_version=12, input_names=['images'],
                          output_names=['classes', 'boxes'] if y is None else ['output'],
                          dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # size(1,3,640,640)
                                        'output': {0: 'batch', 2: 'y', 3: 'x'}} if opt.dynamic else None)

        # Checks
        onnx_model = onnx.load(f)  # load onnx model
        onnx.checker.check_model(onnx_model)  # check onnx model
        # print(onnx.helper.printable_graph(onnx_model.graph))  # print a human readable model
        print('ONNX export success, saved as %s' % f)
    except Exception as e:
        print('ONNX export failure: %s' % e)

    # CoreML export
    try:
        import coremltools as ct

        print('\nStarting CoreML export with coremltools %s...' % ct.__version__)
        # convert model from torchscript and apply pixel scaling as per detect.py
        model = ct.convert(ts, inputs=[ct.ImageType(name='image', shape=img.shape, scale=1 / 255.0, bias=[0, 0, 0])])
        f = opt.weights.replace('.pt', '.mlmodel')  # filename
        model.save(f)
        print('CoreML export success, saved as %s' % f)
    except Exception as e:
        print('CoreML export failure: %s' % e)

    # Finish
    print('\nExport complete (%.2fs). Visualize with https://github.com/lutzroeder/netron.' % (time.time() - t))
```
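The `export = False` change decides what the traced graph returns. Assuming the `Detect()` layer of the YOLOv5 release this script is based on, with `export = False` the first output is already decoded: one row per anchor per grid cell, `[cx, cy, w, h, obj, cls...]` in input-image pixels, so the libtorch side only needs confidence filtering, NMS and coordinate rescaling. The sketch below (helper names are illustrative) restates that decoding for a single cell, counts the output rows, and loads an exported file to check its shape. Feed it the size used for tracing, since the traced grids are fixed to that size.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_cell(raw, gx, gy, stride, anchor_w, anchor_h):
    """Decode one anchor's raw logits [tx, ty, tw, th, obj, cls...] at grid cell (gx, gy).

    Returns [cx, cy, w, h, obj, cls...] with the box in input-image pixels.
    """
    s = [sigmoid(v) for v in raw]
    cx = (s[0] * 2.0 - 0.5 + gx) * stride
    cy = (s[1] * 2.0 - 0.5 + gy) * stride
    w = (s[2] * 2.0) ** 2 * anchor_w
    h = (s[3] * 2.0) ** 2 * anchor_h
    return [cx, cy, w, h] + s[4:]


def num_predictions(h, w, strides=(8, 16, 32), anchors_per_level=3):
    """Row count N of the decoded [batch, N, 5 + num_classes] output for an h x w input."""
    return sum(anchors_per_level * (h // s) * (w // s) for s in strides)


def inspect_torchscript(path, h=640, w=640, device="cpu"):
    """Load an exported *.torchscript.pt and return the shape of its first output."""
    import torch  # lazy import: the helpers above do not need torch

    ts = torch.jit.load(path, map_location=device)
    ts.eval()
    img = torch.zeros(1, 3, h, w, device=device)  # same size as used for tracing
    with torch.no_grad():
        pred = ts(img)[0]
    return tuple(pred.shape)
```

For yolov5s traced at 640 x 640 with the 80 COCO classes, `inspect_torchscript('weights/yolov5s.torchscript.pt')` should report `(1, 25200, 85)`, since `num_predictions(640, 640)` is 3 x (80² + 40² + 20²) = 25200.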

II. libtorch + GPU version

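Building on the CPU script, two changes were made (both marked `# gpu`): 1) load the model onto CUDA, 2) create the dummy sample image on CUDA.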

```python
"""Exports a YOLOv5 *.pt model to ONNX and TorchScript formats

Usage:
    $ export PYTHONPATH="$PWD" && python models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1
"""

import argparse
import sys
import time

sys.path.append('./')  # to run '$ python *.py' files in subdirectories

import torch
import torch.nn as nn

import models
from models.experimental import attempt_load
from utils.activations import Hardswish, SiLU
from utils.general import set_logging, check_img_size

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='./yolov5s.pt', help='weights path')  # from yolov5/models/
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='image size')  # height, width
    parser.add_argument('--dynamic', action='store_true', help='dynamic ONNX axes')
    parser.add_argument('--batch-size', type=int, default=1, help='batch size')
    opt = parser.parse_args()
    opt.img_size *= 2 if len(opt.img_size) == 1 else 1  # expand
    print(opt)
    set_logging()
    t = time.time()

    # Load PyTorch model
    # gpu
    model = attempt_load(opt.weights, map_location=torch.device('cuda'))  # load FP32 model
    labels = model.names

    # Checks
    gs = int(max(model.stride))  # grid size (max stride)
    opt.img_size = [check_img_size(x, gs) for x in opt.img_size]  # verify img_size are gs-multiples

    # Input
    # gpu
    img = torch.zeros(opt.batch_size, 3, *opt.img_size).to(device='cuda')  # image size(1,3,320,192) iDetection

    # Update model
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, models.common.Conv):  # assign export-friendly activations
            if isinstance(m.act, nn.Hardswish):
                m.act = Hardswish()
            elif isinstance(m.act, nn.SiLU):
                m.act = SiLU()
        # elif isinstance(m, models.yolo.Detect):
        #     m.forward = m.forward_export  # assign forward (optional)
    model.model[-1].export = False  # changed from True: keep Detect() box decoding in the traced graph
    y = model(img)  # dry run

    # TorchScript export
    try:
        print('\nStarting TorchScript export with torch %s...' % torch.__version__)
        f = opt.weights.replace('.pt', '.torchscript.pt')  # filename
        ts = torch.jit.trace(model, img)
        ts.save(f)
        print('TorchScript export success, saved as %s' % f)
    except Exception as e:
        print('TorchScript export failure: %s' % e)

    # ONNX export
    try:
        import onnx

        print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
        f = opt.weights.replace('.pt', '.onnx')  # filename
        torch.onnx.export(model, img, f, verbose=False, opset_version=12, input_names=['images'],
                          output_names=['classes', 'boxes'] if y is None else ['output'],
                          dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # size(1,3,640,640)
                                        'output': {0: 'batch', 2: 'y', 3: 'x'}} if opt.dynamic else None)

        # Checks
        onnx_model = onnx.load(f)  # load onnx model
        onnx.checker.check_model(onnx_model)  # check onnx model
        # print(onnx.helper.printable_graph(onnx_model.graph))  # print a human readable model
        print('ONNX export success, saved as %s' % f)
    except Exception as e:
        print('ONNX export failure: %s' % e)

    # CoreML export
    try:
        import coremltools as ct

        print('\nStarting CoreML export with coremltools %s...' % ct.__version__)
        # convert model from torchscript and apply pixel scaling as per detect.py
        model = ct.convert(ts, inputs=[ct.ImageType(name='image', shape=img.shape, scale=1 / 255.0, bias=[0, 0, 0])])
        f = opt.weights.replace('.pt', '.mlmodel')  # filename
        model.save(f)
        print('CoreML export success, saved as %s' % f)
    except Exception as e:
        print('CoreML export failure: %s' % e)

    # Finish
    print('\nExport complete (%.2fs). Visualize with https://github.com/lutzroeder/netron.' % (time.time() - t))
```
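Both export scripts write to the same `*.torchscript.pt` file name, so running the GPU export after the CPU one overwrites the CPU model. One alternative, not what the author did, is a single script with a hypothetical `--device` flag and a device-tagged output name. The argument-parsing part could look like this (`parse_export_args`, `torchscript_name` and the `--device` flag are all illustrative):

```python
import argparse


def parse_export_args(argv=None):
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='./yolov5s.pt', help='weights path')
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='image size')
    parser.add_argument('--batch-size', type=int, default=1, help='batch size')
    # hypothetical flag (not in the author's scripts): device to trace on
    parser.add_argument('--device', type=str, default='cpu', choices=['cpu', 'cuda'])
    opt = parser.parse_args(argv)
    opt.img_size *= 2 if len(opt.img_size) == 1 else 1  # expand a single size to (h, w)
    return opt


def torchscript_name(weights, device):
    # hypothetical naming scheme: tag the file with the device it was traced on
    return weights.replace('.pt', f'.torchscript.{device}.pt')
```

The two `# gpu` lines would then become `attempt_load(opt.weights, map_location=torch.device(opt.device))` and `torch.zeros(opt.batch_size, 3, *opt.img_size, device=opt.device)`, and `f = torchscript_name(opt.weights, opt.device)` keeps the CPU and GPU files apart.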

III. Source code

The source code is on Baidu Netdisk; leave a comment if you need it.

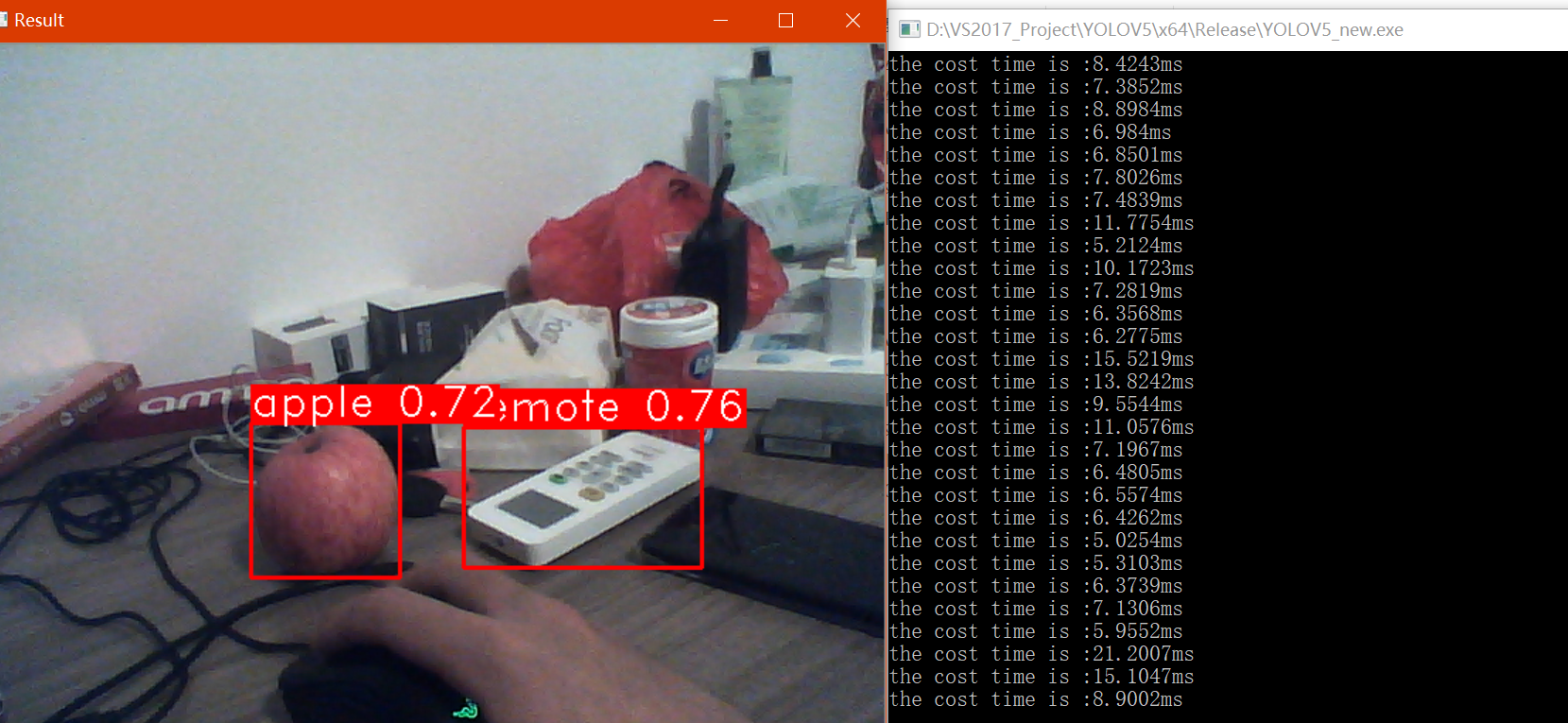

Desktop: i7-8700 + P4000, yolov5s: 5 ms

Laptop: i5-7200U + MX940, yolov5s: 5 ms

I can't quite make sense of this.
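When two very different GPUs report the same latency, it is worth ruling out the measurement itself (one possible explanation, not verified here). CUDA kernels launch asynchronously, so a timer stopped without synchronizing may capture mostly launch overhead rather than GPU execution, and the first few calls also carry one-time setup costs. A minimal timing sketch with warm-up runs and an optional sync callback (the `benchmark` helper is illustrative):

```python
import statistics
import time
from typing import Callable, Optional


def benchmark(fn: Callable[[], object], warmup: int = 10, iters: int = 100,
              sync: Optional[Callable[[], None]] = None) -> float:
    """Median wall-clock latency of fn() in milliseconds."""
    for _ in range(warmup):  # absorb one-time costs (lazy init, memory allocation)
        fn()
    if sync is not None:
        sync()  # make sure warm-up work has finished before timing starts
    times = []
    for _ in range(iters):
        t0 = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for queued GPU work so it is included in the measurement
        times.append((time.perf_counter() - t0) * 1000.0)
    return statistics.median(times)
```

With PyTorch on the GPU this would be called as `benchmark(lambda: model(img), sync=torch.cuda.synchronize)` inside `torch.no_grad()`; on the CPU the `sync` argument can be left out.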

The figure below shows the measurement on the laptop: