注释:已经看过有半年了,而且当时只是看了理论和opencv的函数调用,现在忘的九霄云外了,Ng的视频也没看SVM,现在准备系统的再学一遍吧。

之前记录的博客:http://www.cnblogs.com/wjy-lulu/p/6979436.html

一.SVM理论重点和难点剖析

注释:这里主要讲解公式推导和证明的重难点,不是按部就班的讲解SVM的求解过程,算是对推导过程的补充吧!

一点未接触过SVM的请看大神的博客:

http://blog.csdn.net/c406495762/article/details/78072313

http://www.cnblogs.com/jerrylead/archive/2011/03/13/1982684.html

http://blog.csdn.net/zouxy09/article/details/17291543

http://blog.pluskid.org/?p=685

http://blog.csdn.net/v_july_v/article/details/7624837

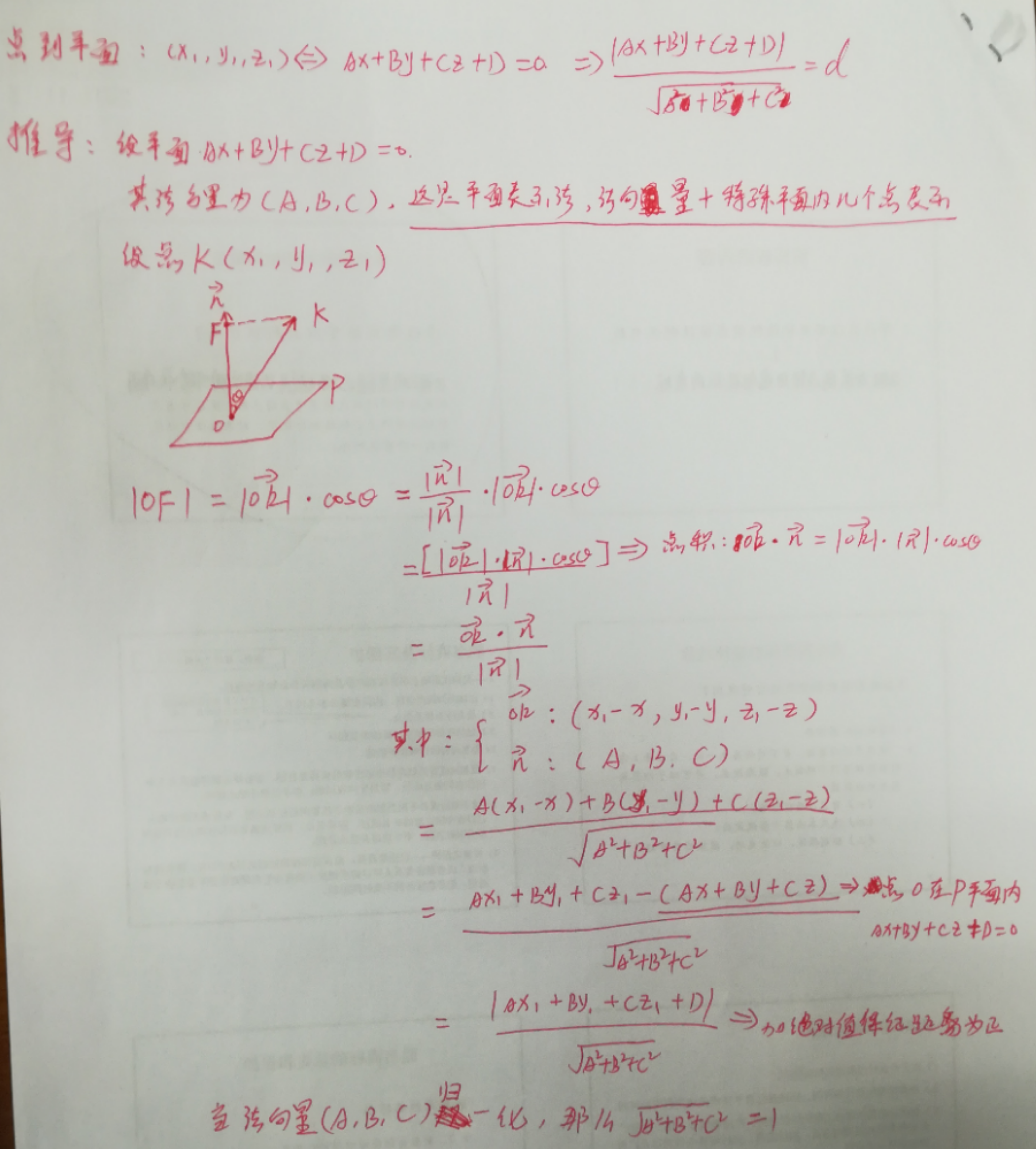

1.1点到直线距离的由来

我们先讨论点到平面的距离,由此推广到点到直线和点到超平面的距离公式。

点到平面公式推导

SVM公式推导一

SVM公式推导二

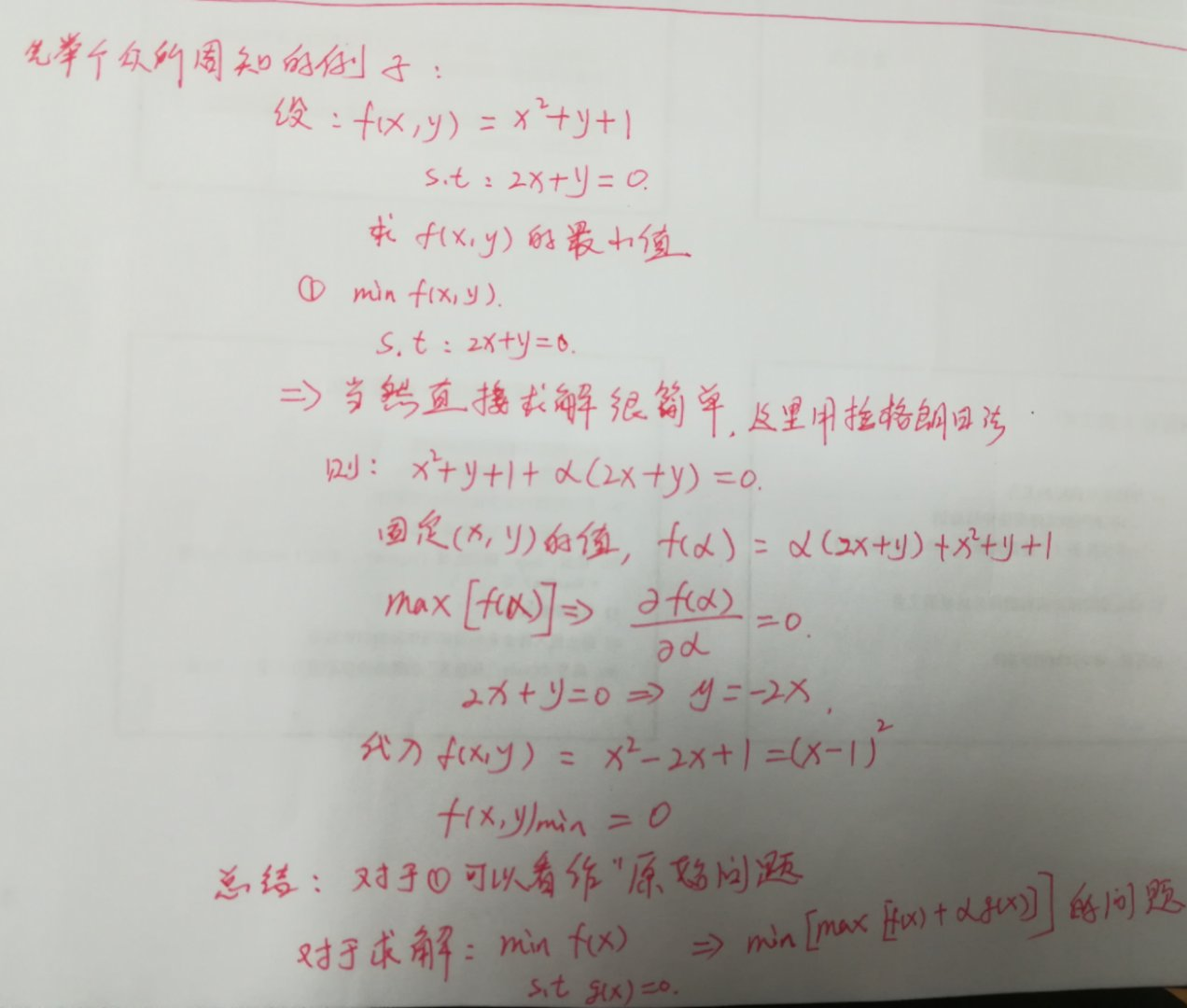

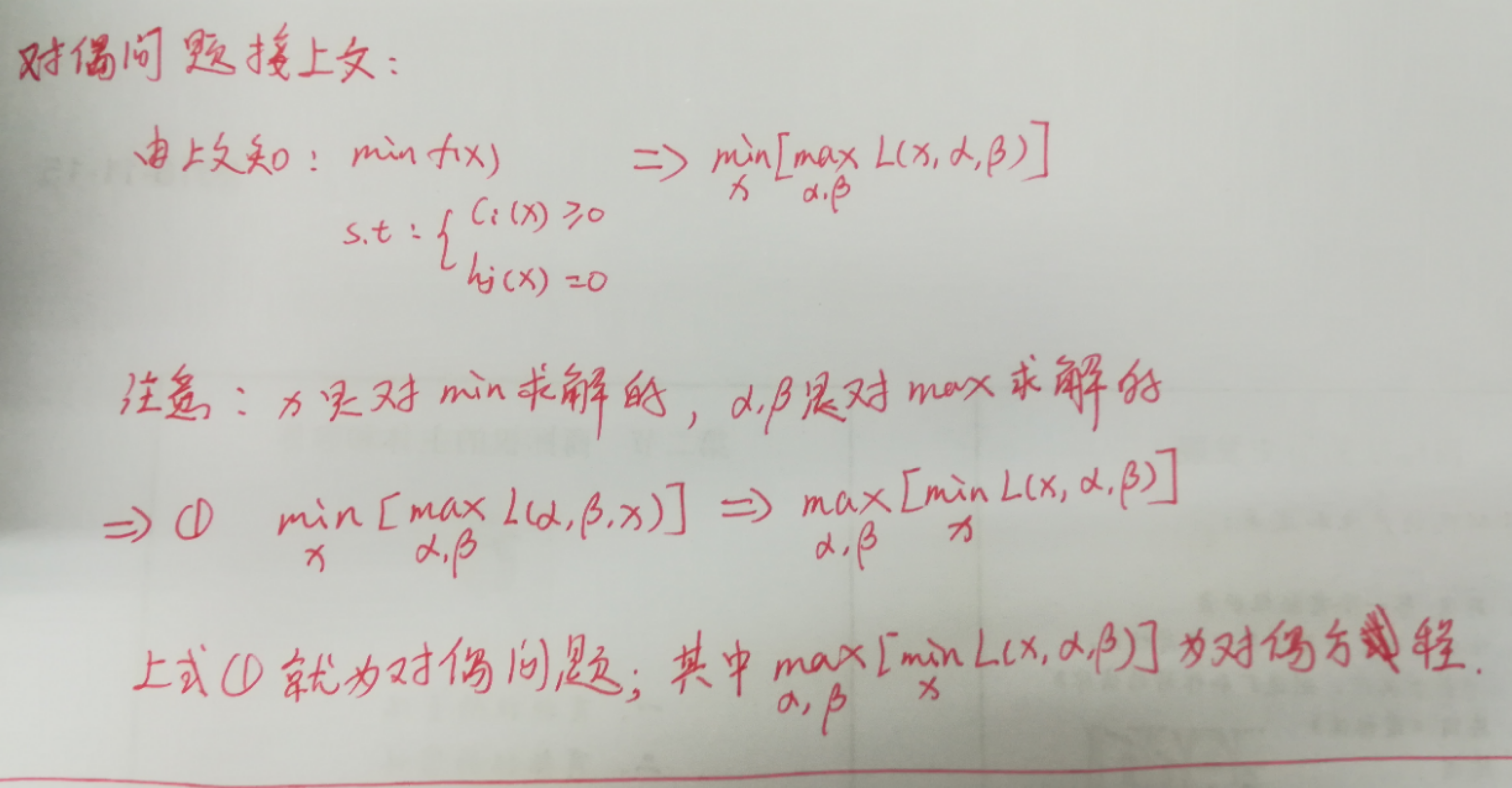

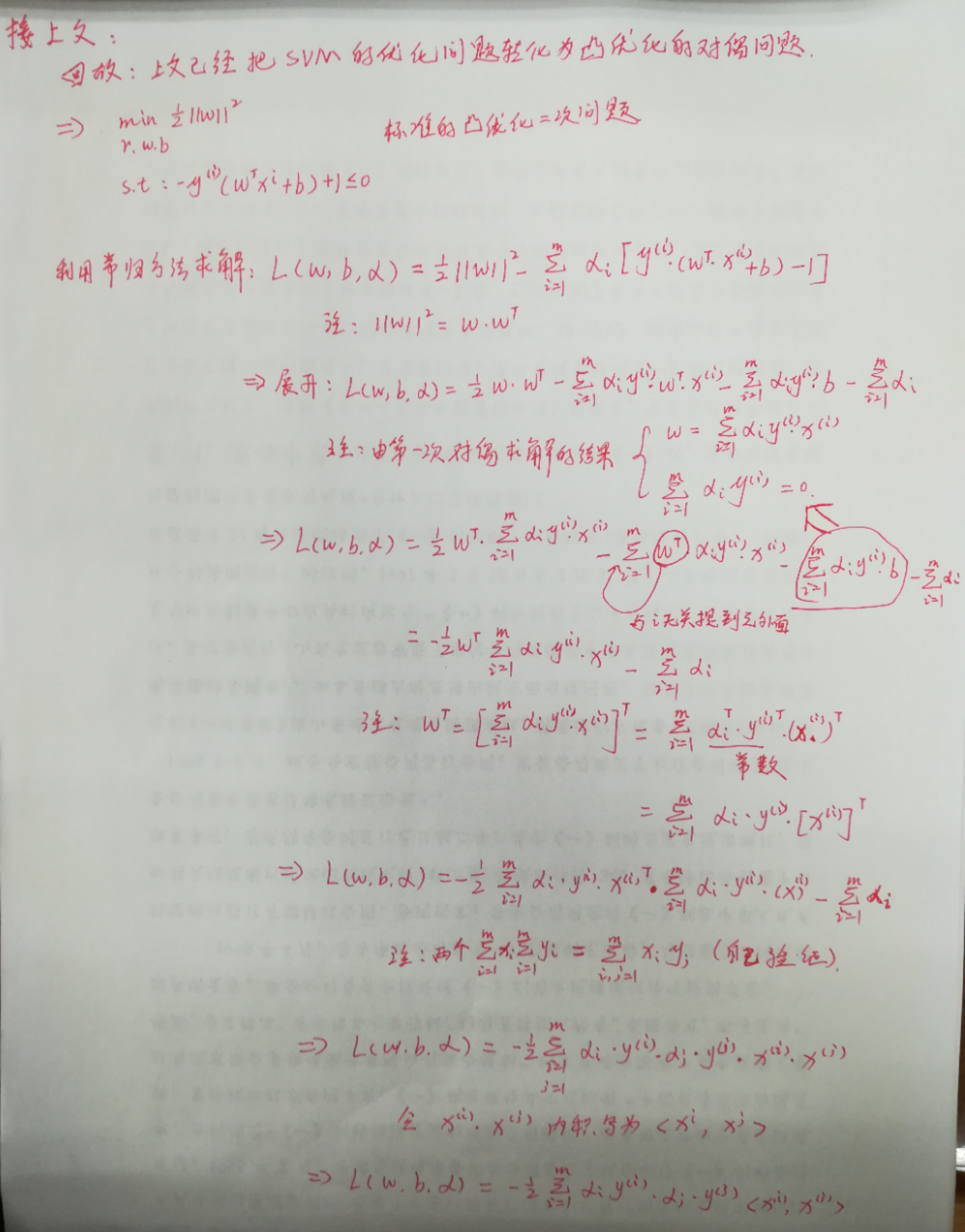

1.2拉格朗日对偶问题

用于求解带条件的最优化问题,其实到最后你就明白了SVM从头到尾最主要做的就是如何高效的求解目标值。而其它的学习算法做的都是对数据的求解优化问题,这点是SVM和其它算法根本的区别。

原始问题

对偶问题一

对偶问题2

原始问题和对偶问题的关系

KKT条件

SVM公式推导三

SVM公式推导四

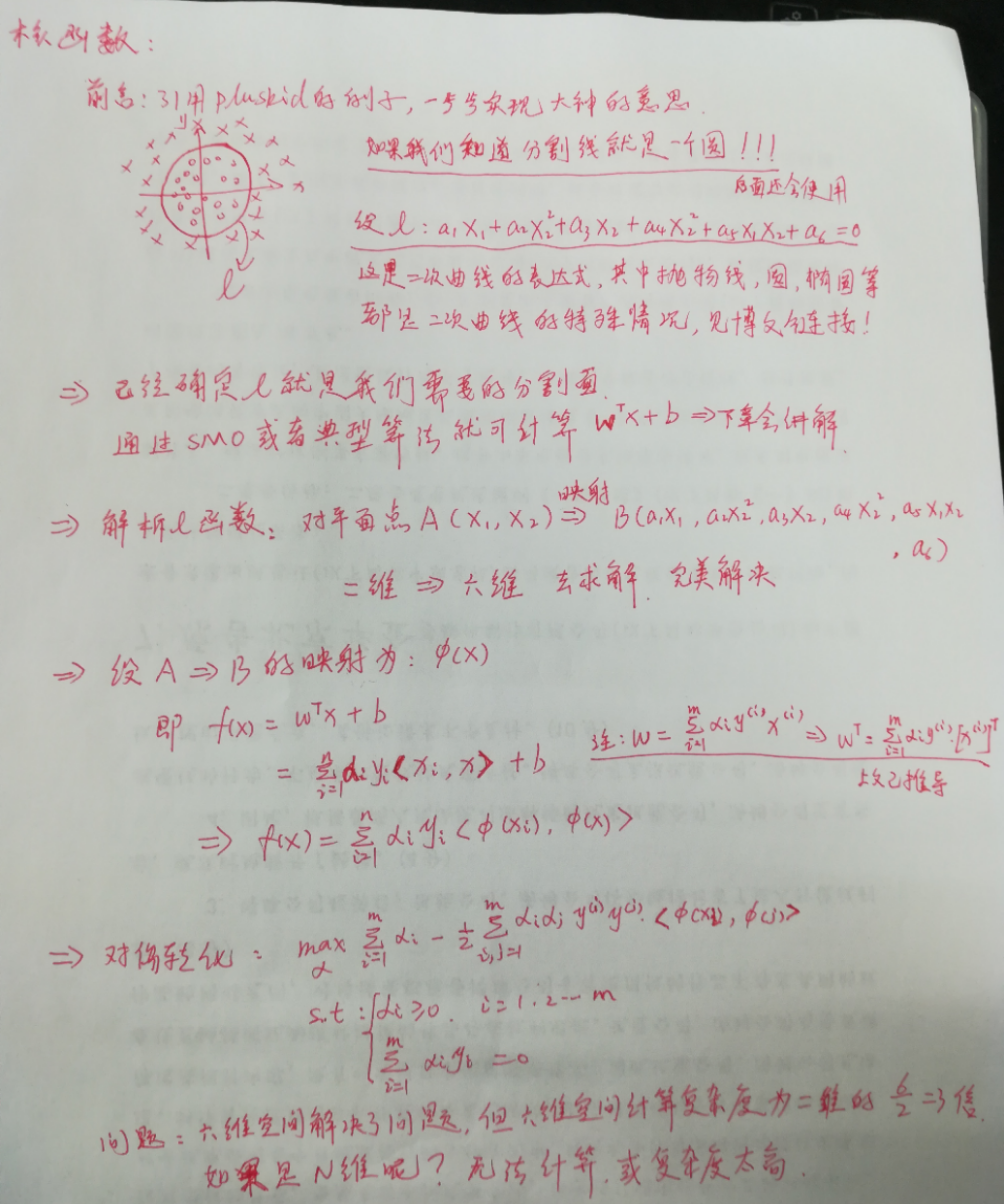

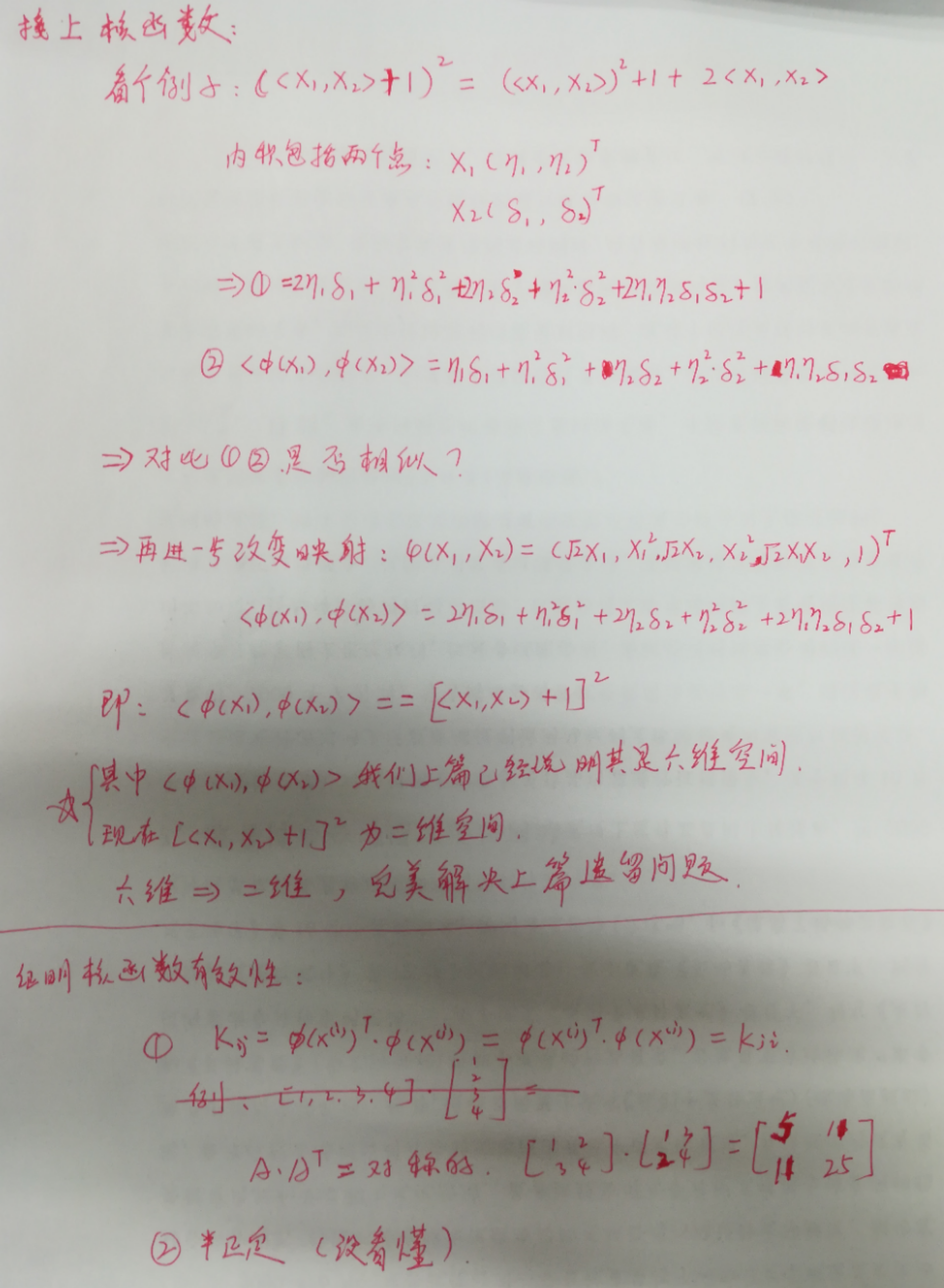

1.3核函数的推导

目的:1.处理线性不可分的情况。2.求解方便。

过程:二维情况的不可分割,就映射到三维、四维....等高维空间去分割。

通俗解释:https://www.zhihu.com/question/24627666 知乎大神开始装逼的时刻了。

理论部分:如下公式推导.......

核函数引出一

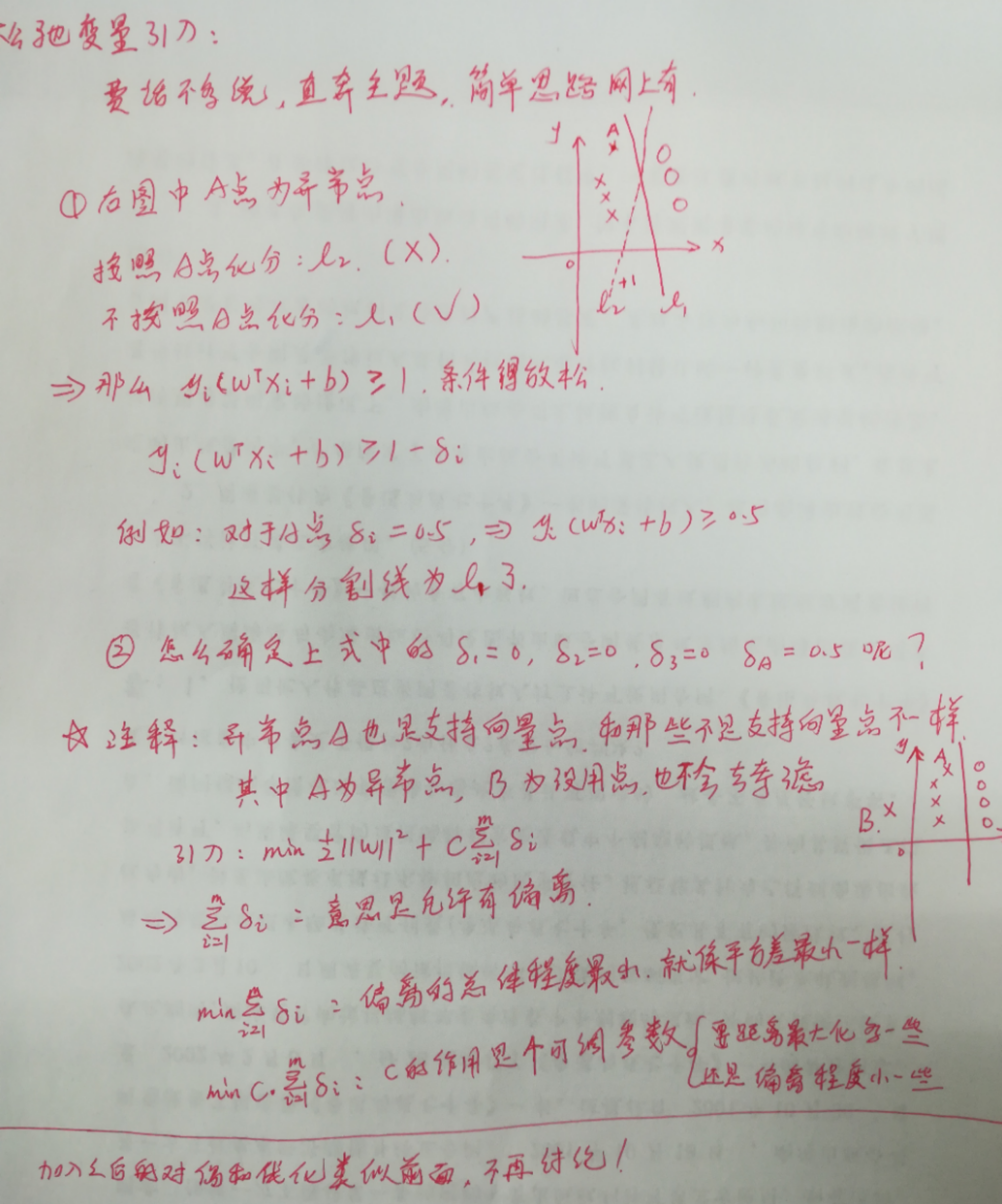

1.4松弛变量的引入

目的:防止部分异常点的干扰。

原理:和其它算法引入惩罚系数一样的,允许有异常点但是要接受惩罚。比如:异常的点肯定都是偏离群体的点,既然偏离群体,那么它的值就为负数且绝对值愈大惩罚程度越大。

具体推导:见下文......

松弛变量的引入

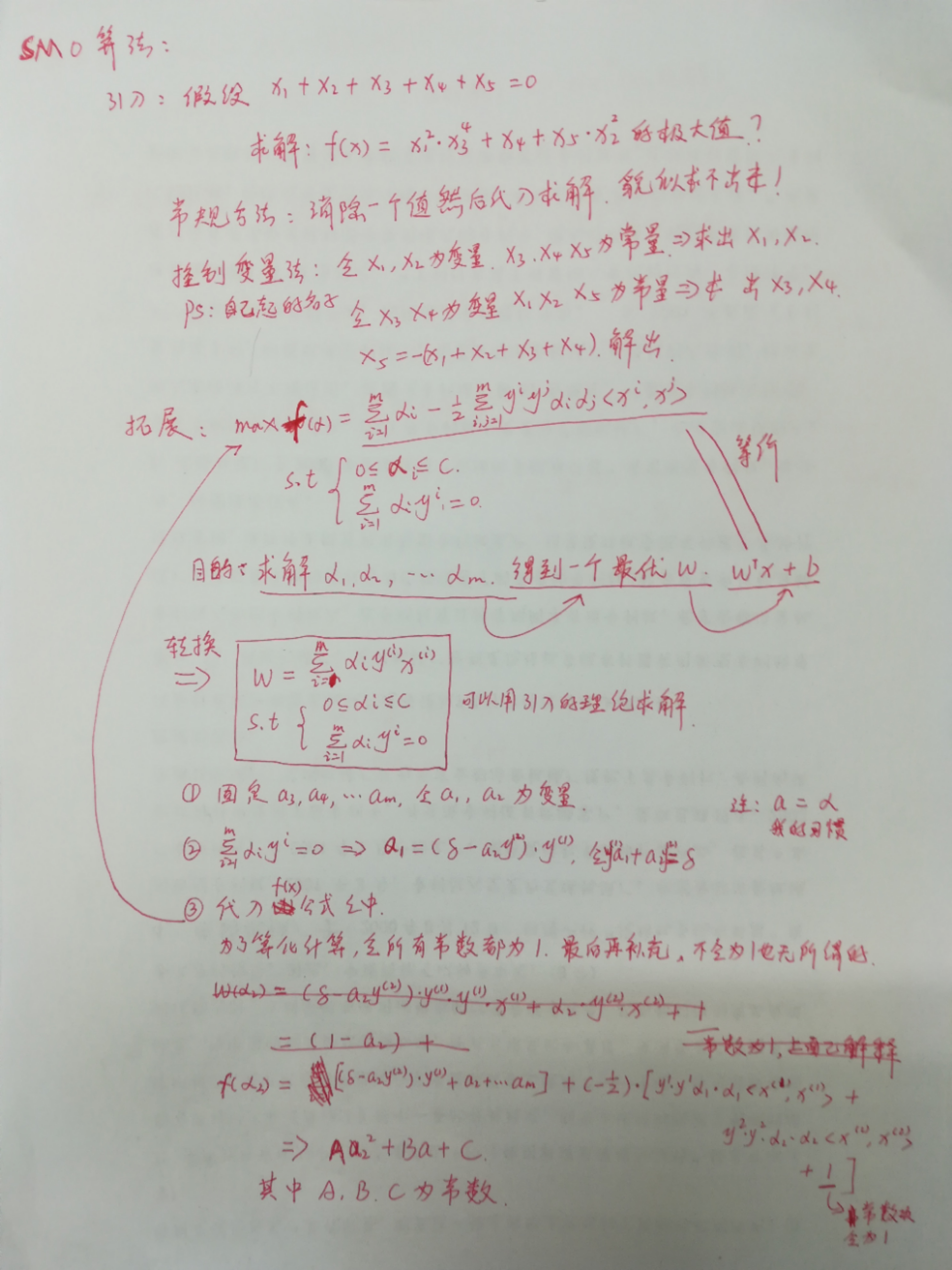

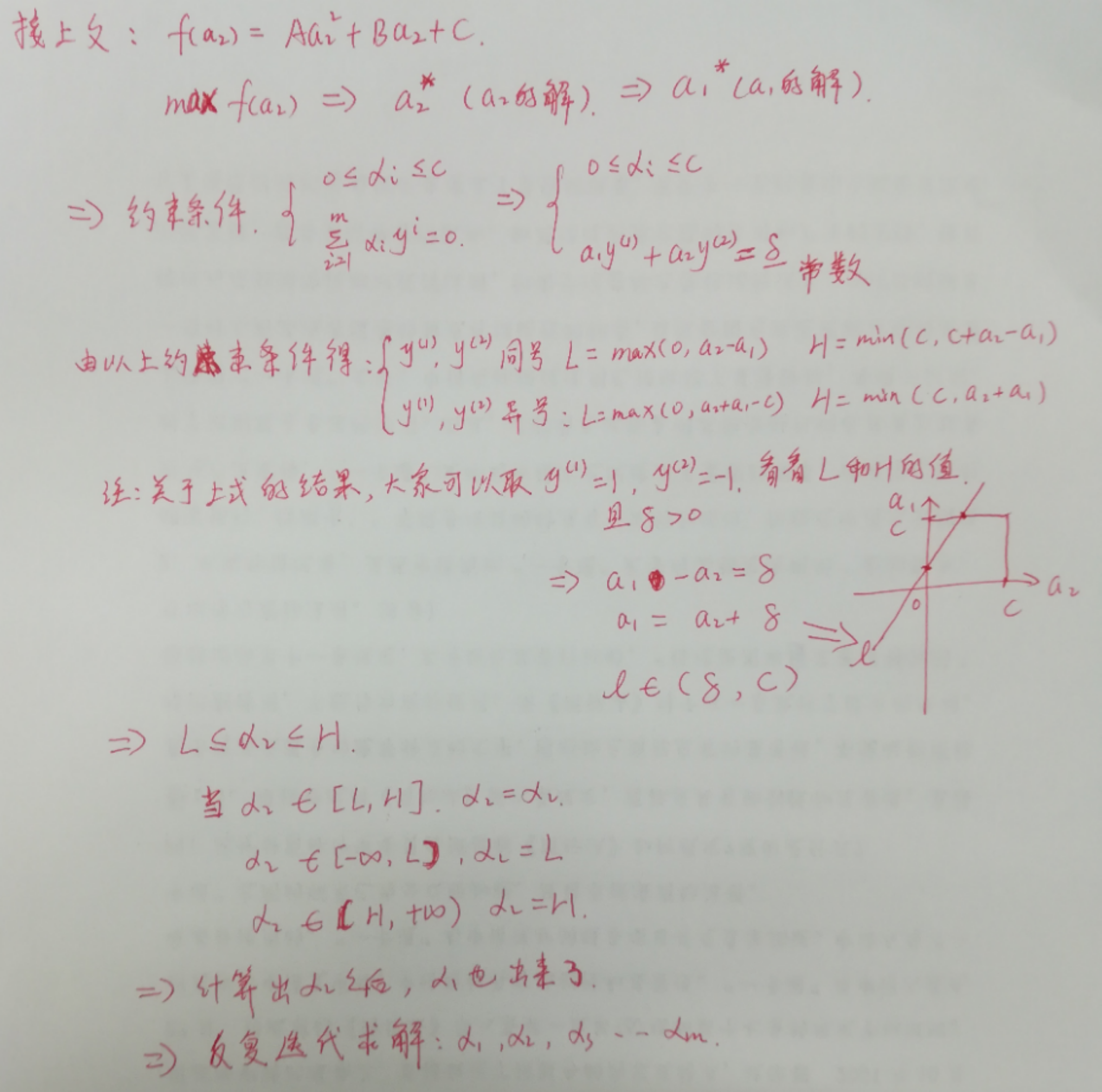

1.5.SMO算法

SMO算法一

SMO算法二

注释:后面还有参数如何最优选择,有点看不懂而且也有点不想看了,干脆从下面的代码去分析SMO的具体过程吧!

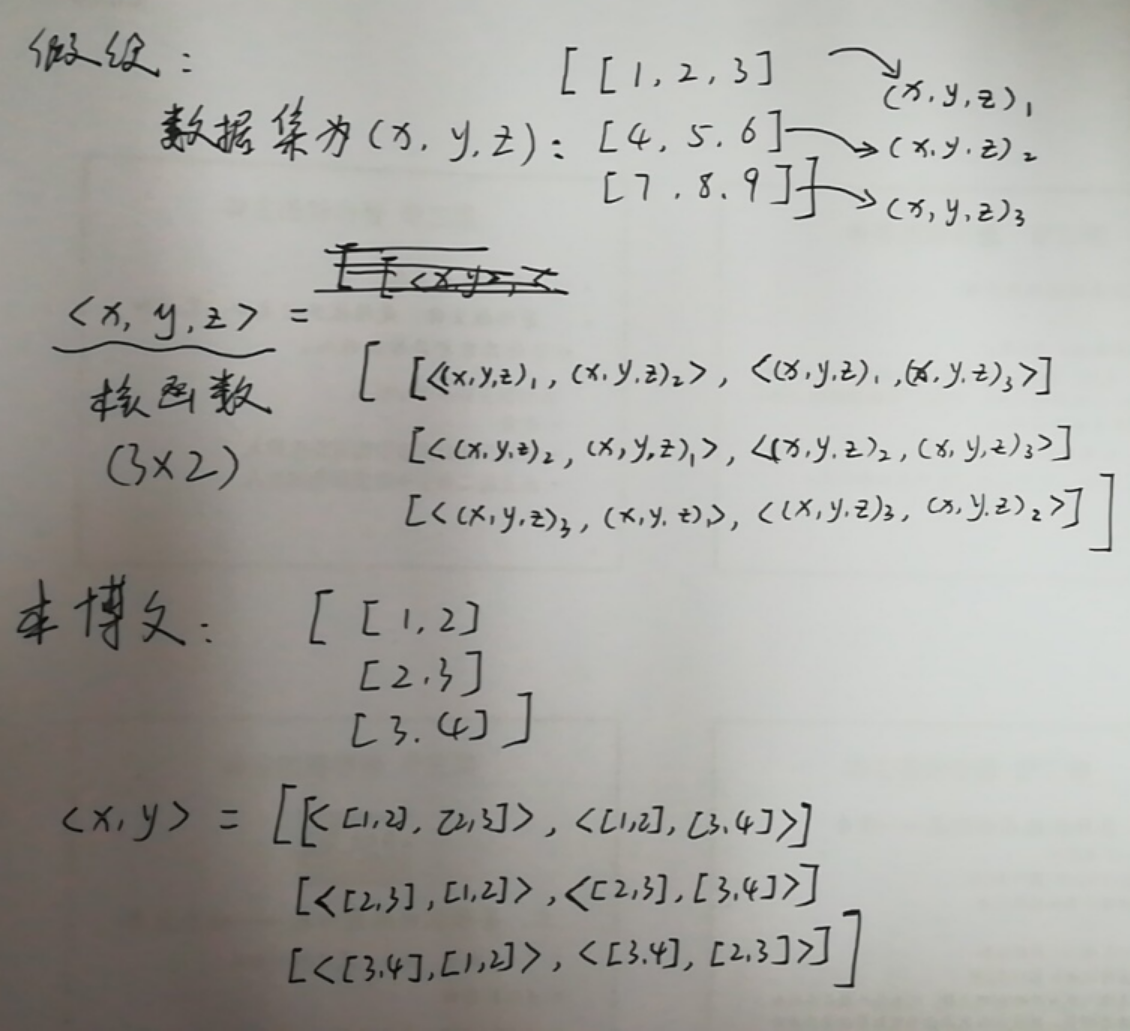

二.程序实现

代码实现强烈推荐:http://blog.csdn.net/c406495762/article/details/78072313

给了程序伪代码很详细,程序读起来很方便。

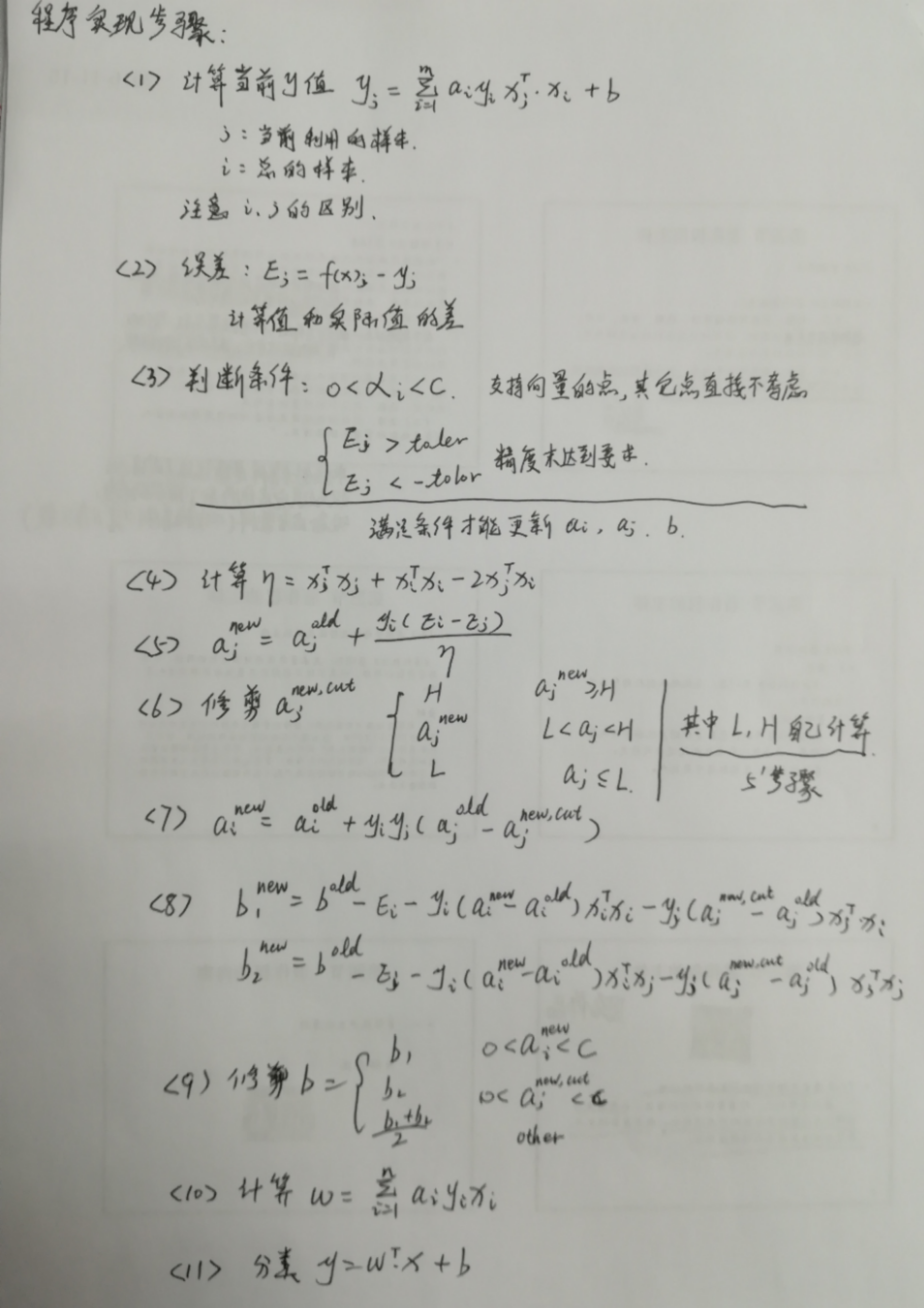

2.1.SMO实现

2.1.1简化版SMO

简化版:针对理论中“SMO”的最后一句话,最优选择的问题!简化版是随机选择,选择上不做优化。

1 import numpy as np 2 import matplotlib.pyplot as plt 3 4 #预处理数据 5 def loadDataSet(fileName): 6 dataMat = [] 7 labelMat = [] 8 fr = open(fileName,'r') 9 for line in fr.readlines(): 10 lineArr = line.strip().split(' ') 11 dataMat.append([float(lineArr[0]),float(lineArr[1])]) 12 labelMat.append(float(lineArr[2])) 13 a = np.mat(dataMat) 14 b = np.mat(labelMat).transpose() 15 DataLabel = np.array(np.hstack((a,b))) 16 return dataMat, labelMat, DataLabel 17 #随机 18 def selectJrand(i,m): 19 j = i 20 while(j==i): 21 j = int(np.random.uniform(0,m)) 22 return j 23 #约束之后的aj 24 def clipAlpha(aj,H,L): 25 if aj>H: 26 aj = H 27 elif aj<L: 28 aj = L 29 return aj 30 #C:松弛系数 31 #maxIter:迭代次数 32 def smoSimple(dataMatIn,classLabels,C,toler,maxIter): 33 dataMatraix = np.mat(dataMatIn) 34 labelMatraix = np.mat(classLabels).transpose() 35 b =0 36 m,n = dataMatraix.shape 37 alphas = np.mat(np.zeros((m,1)))#系数都初始化为0 38 iter = 0 39 while(iter<maxIter):#大循环是最大迭代次数 40 alphaPairsChange = 0 41 for i in range(m):#样本训练 42 #预测值,具体看博客手写图片 43 fXi = float(np.multiply(alphas,labelMatraix).T*(dataMatraix*dataMatraix[i,:].T))+b 44 #误差 45 Ei = fXi - float(labelMatraix[i]) 46 #满足条件才能更新a,b的值,感觉这里的labelMat[i]不影响结果 47 if(((labelMatraix[i]*Ei<-toler) and (alphas[i]<C)) 48 or ((labelMatraix[i]*Ei)>toler) and (alphas[i]>0)): 49 j = selectJrand(i,m)#随机选择一个不同于i的[0,m] 50 fXj = float(np.multiply(alphas,labelMatraix).transpose() 51 *(dataMatraix*dataMatraix[j,:].T))+b 52 Ej = fXj - float(labelMatraix[j]) 53 alphaIold = alphas[i].copy() 54 alphaJold = alphas[j].copy() 55 if (labelMatraix[i] != labelMatraix[j]): 56 L = max(0,alphas[j]-alphas[i]) 57 H = min(C,C+alphas[j]-alphas[i]) 58 else: 59 L = max(0,alphas[i]+alphas[j]-C) 60 H = min(C,alphas[i]+alphas[j]) 61 if (L==H): 62 print('L==H') 63 continue 64 #计算 65 eta = 2.0*dataMatraix[i,:]*dataMatraix[j,:].T 66 - dataMatraix[i,:]*dataMatraix[i,:].T 67 - dataMatraix[j,:]*dataMatraix[j,:].T 68 if (eta>0): 69 print("eta>0") 70 continue 71 #更新a的新值j 72 alphas[j] -= labelMatraix[j]*(Ei - Ej)/eta 73 #修剪aj 74 alphas[j] = clipAlpha(alphas[j],H,L) 75 if (abs(alphas[j] - alphaJold) < 0.00001): 76 print("aj not moving") 77 #更新ai 78 alphas[i] += labelMatraix[j]*labelMatraix[i]*(alphaJold - alphas[j]) 79 #更新b1,b2 80 b1 = b - Ei - labelMatraix[i]*(alphas[i]-alphaIold)*dataMatraix[i,:] 81 *dataMatraix[i,:].T - labelMatraix[j]*(alphas[j]-alphaJold)*dataMatraix[i,:]*dataMatraix[j,:].T 82 b2 = b - Ej - labelMatraix[i]*(alphas[i] - alphaIold)*dataMatraix[i,:] 83 * dataMatraix[j, :].T - labelMatraix[j]*(alphas[j] - alphaJold) * dataMatraix[j,:] * dataMatraix[j,:].T 84 #通过b1和b2计算b 85 if (0< alphas[i] <C): b = b1 86 elif (0<alphas[j]<C): b = b2 87 else: b = (b1+b2)/2 88 #计算更新次数,用来判断是否训练数据是否全部达标 89 alphaPairsChange += 1 90 print("iter: %d i:%d pairs:%d "%(iter,i,alphaPairsChange)) 91 #连续的达标次数 92 if(alphaPairsChange==0): iter +=1 93 else: iter = 0 94 print("iteration number: %d"%(iter)) 95 return b, alphas

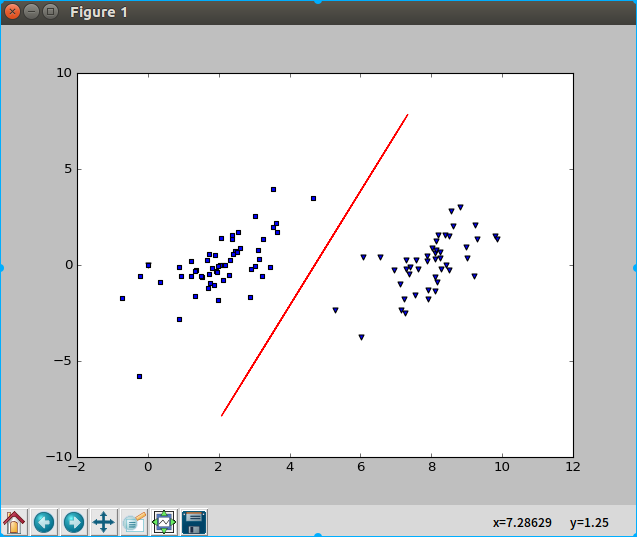

2.1.2效果图

main.py文件

1 import svm 2 import matplotlib.pyplot as plt 3 import numpy as np 4 5 if __name__ == '__main__': 6 fig = plt.figure() 7 axis = fig.add_subplot(111) 8 dataMat, labelMat,DataLabel= svm.loadDataSet("testSet.txt") 9 #b, alphas = svm.smoSimple(dataMat,labelMat,0.6,0.001,40) 10 #ws = svm.calsWs(alphas,dataMat,labelMat) 11 pData0 = [0,0,0] 12 pData1 = [0,0,0] 13 for hLData in DataLabel: 14 if (hLData[-1]==1):pData0 = np.vstack((pData0,hLData)) 15 elif(hLData[-1]==-1):pData1 = np.vstack((pData1,hLData)) 16 else:continue 17 vmax = np.max(pData0[:,0:1]) 18 vmin = np.min(pData0[:,0:1]) 19 axis.scatter(pData0[:,0:1],pData0[:,1:2],marker = 'v') 20 axis.scatter(pData1[:,0:1],pData1[:,1:2],marker = 's') 21 xdata = np.random.uniform(2.0,8.0,[1,20]) 22 ydata = xdata*(0.81445/0.27279) - (3.837/0.27279) 23 axis.plot(xdata.tolist()[0],ydata.tolist()[0],'r') 24 25 fig.show() 26 27 #print("alphas = ",alphas[alphas>0])

svm.py文件

1 import numpy as np 2 import matplotlib.pyplot as plt 3 4 #预处理数据 5 def loadDataSet(fileName): 6 dataMat = [] 7 labelMat = [] 8 fr = open(fileName,'r') 9 for line in fr.readlines(): 10 lineArr = line.strip().split(' ') 11 dataMat.append([float(lineArr[0]),float(lineArr[1])]) 12 labelMat.append(float(lineArr[2])) 13 a = np.mat(dataMat) 14 b = np.mat(labelMat).transpose() 15 DataLabel = np.array(np.hstack((a,b))) 16 return dataMat, labelMat, DataLabel 17 #随机 18 def selectJrand(i,m): 19 j = i 20 while(j==i): 21 j = int(np.random.uniform(0,m)) 22 return j 23 #约束之后的aj 24 def clipAlpha(aj,H,L): 25 if aj>H: 26 aj = H 27 elif aj<L: 28 aj = L 29 return aj 30 #C:松弛系数 31 #maxIter:迭代次数 32 def smoSimple(dataMatIn,classLabels,C,toler,maxIter): 33 dataMatraix = np.mat(dataMatIn) 34 labelMatraix = np.mat(classLabels).transpose() 35 b =0 36 m,n = dataMatraix.shape 37 alphas = np.mat(np.zeros((m,1)))#系数都初始化为0 38 iter = 0 39 while(iter<maxIter):#大循环是最大迭代次数 40 alphaPairsChange = 0 41 for i in range(m):#样本训练 42 #预测值,具体看博客手写图片 43 fXi = float(np.multiply(alphas,labelMatraix).T*(dataMatraix*dataMatraix[i,:].T))+b 44 #误差 45 Ei = fXi - float(labelMatraix[i]) 46 #满足条件才能更新a,b的值,感觉这里的labelMat[i]不影响结果 47 if(((labelMatraix[i]*Ei<-toler) and (alphas[i]<C)) 48 or ((labelMatraix[i]*Ei)>toler) and (alphas[i]>0)): 49 j = selectJrand(i,m)#随机选择一个不同于i的[0,m] 50 fXj = float(np.multiply(alphas,labelMatraix).transpose() 51 *(dataMatraix*dataMatraix[j,:].T))+b 52 Ej = fXj - float(labelMatraix[j]) 53 alphaIold = alphas[i].copy() 54 alphaJold = alphas[j].copy() 55 if (labelMatraix[i] != labelMatraix[j]): 56 L = max(0,alphas[j]-alphas[i]) 57 H = min(C,C+alphas[j]-alphas[i]) 58 else: 59 L = max(0,alphas[i]+alphas[j]-C) 60 H = min(C,alphas[i]+alphas[j]) 61 if (L==H): 62 print('L==H') 63 continue 64 #计算 65 eta = 2.0*dataMatraix[i,:]*dataMatraix[j,:].T 66 - dataMatraix[i,:]*dataMatraix[i,:].T 67 - dataMatraix[j,:]*dataMatraix[j,:].T 68 if (eta>0): 69 print("eta>0") 70 continue 71 #更新a的新值j 72 alphas[j] -= labelMatraix[j]*(Ei - Ej)/eta 73 #修剪aj 74 alphas[j] = clipAlpha(alphas[j],H,L) 75 if (abs(alphas[j] - alphaJold) < 0.00001): 76 print("aj not moving") 77 #更新ai 78 alphas[i] += labelMatraix[j]*labelMatraix[i]*(alphaJold - alphas[j]) 79 #更新b1,b2 80 b1 = b - Ei - labelMatraix[i]*(alphas[i]-alphaIold)*dataMatraix[i,:] 81 *dataMatraix[i,:].T - labelMatraix[j]*(alphas[j]-alphaJold)*dataMatraix[i,:]*dataMatraix[j,:].T 82 b2 = b - Ej - labelMatraix[i]*(alphas[i] - alphaIold)*dataMatraix[i,:] 83 * dataMatraix[j, :].T - labelMatraix[j]*(alphas[j] - alphaJold) * dataMatraix[j,:] * dataMatraix[j,:].T 84 #通过b1和b2计算b 85 if (0< alphas[i] <C): b = b1 86 elif (0<alphas[j]<C): b = b2 87 else: b = (b1+b2)/2 88 #计算更新次数,用来判断是否训练数据是否全部达标 89 alphaPairsChange += 1 90 print("iter: %d i:%d pairs:%d "%(iter,i,alphaPairsChange)) 91 #连续的达标次数 92 if(alphaPairsChange==0): iter +=1 93 else: iter = 0 94 print("iteration number: %d"%(iter)) 95 return b, alphas 96 97 #分类函数 98 def calsWs(alphas,dataArr,classLabels): 99 X = np.mat(dataArr) 100 labelMat = np.mat(classLabels).T 101 alphas = np.mat(np.array(alphas).reshape((1,100))).T 102 m, n = X.shape 103 w = np.zeros([n,1]) 104 for i in range(m): 105 w += np.multiply(alphas[i]*labelMat[i],X[i,:].T) 106 return w

2.1.3非线性分类(核向量)

程序代码:

1 import numpy as np 2 import matplotlib.pyplot as plt 3 4 #预处理数据 5 def loadDataSet(fileName): 6 dataMat = [] 7 labelMat = [] 8 fr = open(fileName,'r') 9 for line in fr.readlines(): 10 lineArr = line.strip().split(' ') 11 dataMat.append([float(lineArr[0]),float(lineArr[1])]) 12 labelMat.append(float(lineArr[2])) 13 a = np.mat(dataMat) 14 b = np.mat(labelMat).T 15 DataLabel = np.array(np.hstack((a,b))) 16 return dataMat, labelMat, DataLabel 17 #随机 18 def selectJrand(i,m): 19 j = i 20 while(j==i): 21 j = int(np.random.uniform(0,m)) 22 return j 23 #约束之后的aj 24 def clipAlpha(aj,H,L): 25 if aj>H: 26 aj = H 27 elif aj<L: 28 aj = L 29 return aj 30 #C:松弛系数 31 #maxIter:迭代次数 32 def smselfimple(dataMatIn,classLabels,C,toler,maxIter): 33 dataMatraix = np.mat(dataMatIn) 34 labelMatraix = np.mat(classLabels).transpselfe() 35 b =0 36 m,n = dataMatraix.shape 37 alphas = np.mat(np.zerself((m,1)))#系数都初始化为0 38 iter = 0 39 while(iter<maxIter):#大循环是最大迭代次数 40 alphaPairsChange = 0 41 for i in range(m):#样本训练 42 #预测值,具体看博客手写图片 43 fXi = float(np.multiply(alphas,labelMatraix).T*(dataMatraix*dataMatraix[i,:].T))+b 44 #误差 45 Ei = fXi - float(labelMatraix[i]) 46 #满足条件才能更新a,b的值,感觉这里的labelMat[i]不影响结果 47 if(((labelMatraix[i]*Ei<-toler) and (alphas[i]<C)) 48 or ((labelMatraix[i]*Ei)>toler) and (alphas[i]>0)): 49 j = selectJrand(i,m)#随机选择一个不同于i的[0,m] 50 fXj = float(np.multiply(alphas,labelMatraix).transpselfe() 51 *(dataMatraix*dataMatraix[j,:].T))+b 52 Ej = fXj - float(labelMatraix[j]) 53 alphaIold = alphas[i].copy() 54 alphaJold = alphas[j].copy() 55 if (labelMatraix[i] != labelMatraix[j]): 56 L = max(0,alphas[j]-alphas[i]) 57 H = min(C,C+alphas[j]-alphas[i]) 58 else: 59 L = max(0,alphas[i]+alphas[j]-C) 60 H = min(C,alphas[i]+alphas[j]) 61 if (L==H): 62 print('L==H') 63 continue 64 #计算 65 eta = 2.0*dataMatraix[i,:]*dataMatraix[j,:].T 66 - dataMatraix[i,:]*dataMatraix[i,:].T 67 - dataMatraix[j,:]*dataMatraix[j,:].T 68 if (eta>0): 69 print("eta>0") 70 continue 71 #更新a的新值j 72 alphas[j] -= labelMatraix[j]*(Ei - Ej)/eta 73 #修剪aj 74 alphas[j] = clipAlpha(alphas[j],H,L) 75 if (abs(alphas[j] - alphaJold) < 0.00001): 76 print("aj not moving") 77 #更新ai 78 alphas[i] += labelMatraix[j]*labelMatraix[i]*(alphaJold - alphas[j]) 79 #更新b1,b2 80 b1 = b - Ei - labelMatraix[i]*(alphas[i]-alphaIold)*dataMatraix[i,:] 81 *dataMatraix[i,:].T - labelMatraix[j]*(alphas[j]-alphaJold)*dataMatraix[i,:]*dataMatraix[j,:].T 82 b2 = b - Ej - labelMatraix[i]*(alphas[i] - alphaIold)*dataMatraix[i,:] 83 * dataMatraix[j, :].T - labelMatraix[j]*(alphas[j] - alphaJold) * dataMatraix[j,:] * dataMatraix[j,:].T 84 #通过b1和b2计算b 85 if (0< alphas[i] <C): b = b1 86 elif (0<alphas[j]<C): b = b2 87 else: b = (b1+b2)/2 88 #计算更新次数,用来判断是否训练数据是否全部达标 89 alphaPairsChange += 1 90 print("iter: %d i:%d pairs:%d "%(iter,i,alphaPairsChange)) 91 #连续的达标次数 92 if(alphaPairsChange==0): iter +=1 93 else: iter = 0 94 print("iteration number: %d"%(iter)) 95 return b, alphas 96 97 #分类函数 98 def calsWs(alphas,dataArr,classLabels): 99 X = np.mat(dataArr) 100 labelMat = np.mat(classLabels).T 101 alphas = np.mat(np.array(alphas).reshape((1,100))).T 102 w = np.sum(np.multiply(np.multiply(alphas,labelMat),X),axis=0) 103 return w 104 105 #完整版SMO 106 class optStruct: 107 def __init__(self,dataMatIn,classLabel,C,toler,kTup): 108 self.X = dataMatIn 109 self.labelMat = classLabel 110 self.C = C 111 self.tol = toler 112 self.m = np.shape(dataMatIn)[0] 113 self.alphas = np.mat(np.zeros((self.m,1))) 114 self.b = 0 115 self.eCache = np.mat(np.zeros((self.m,2)))#存储误差,用于启发式搜索 116 self.K = np.mat(np.zeros((self.m,self.m)))#存储核函数转化后的dataMatIn 117 for i in range(self.m): 118 self.K[:,i] = kernelTrans(self.X,self.X[i,:],kTup) 119 def clacEk(self,k): 120 #计算当前值 121 fXk = float(np.multiply(self.alphas,self.labelMat).T*self.K[:,k]+ self.b) 122 Ek = fXk - float(self.labelMat[k]) 123 return Ek 124 def selecJ(self,i,Ei): 125 maxK = -1 126 maxDeltaE = 0 127 Ej = 0 128 self.eCache[i] = [1,Ei]#存储偏差 129 #A代表mat转换成array类型,nonzero返回非零元素的下标 130 validEcacheList = np.nonzero(self.eCache[:,0].A)[0] 131 if (len(validEcacheList)>1):#启发式选择 132 for k in validEcacheList: 133 if (k==i):continue#k不能等于i 134 Ek = self.clacEk(k)#计算绝对偏差 135 deltaE = abs(Ei - Ek)#相对偏差 136 if (deltaE >maxDeltaE): 137 maxK = k 138 maxDeltaE = deltaE 139 Ej = Ek 140 return maxK, Ej 141 142 else: 143 j = selectJrand(i,self.m)#随机选择 144 Ej = self.clacEk(j)#随机绝对偏差 145 return j,Ej 146 def updataEk(self,k): 147 Ek = self.clacEk(k) 148 self.eCache[k] = [k,Ek] 149 def innerL(self,i): 150 Ei = self.clacEk(i) 151 if ((self.labelMat[i]*Ei<-self.tol and self.alphas[i]<self.C) 152 or(self.labelMat[i]*Ei>self.tol and self.alphas[i]>0)): 153 j,Ej = self.selecJ(i,Ei)#选择J 154 alphaIold = self.alphas[i].copy() 155 alphaJold = self.alphas[j].copy() 156 #计算L和H的值 157 if (self.labelMat[i] != self.labelMat[j]): 158 L = max(0,self.alphas[j]-self.alphas[i]) 159 H = min(self.C,self.C+self.alphas[j]-self.alphas[i]) 160 else: 161 L = max(0,self.alphas[j]+self.alphas[i]-self.C) 162 H = min(self.C,self.alphas[i] +self.alphas[j]) 163 if (L==H): return 0 164 #eta = 2.0* self.X[i,:]*self.X[j,:].T - self.X[i,:]*self.X[i,:].T - 165 # self.X[j,:]*self.X[j,:].T 166 eta = 2.0*self.K[i,j] - self.K[i,i] - self.K[j,j]#在此应用核函数 167 if (eta>=0): return 0 168 self.alphas[j] -= self.labelMat[j] * (Ei - Ej)/eta 169 self.alphas[j] = clipAlpha(self.alphas[j],H,L) 170 #更新新出现的aj 171 self.updataEk(j) 172 if (abs(self.alphas[j] - alphaJold)<0.00001): 173 print('J not move') 174 self.alphas[i] += self.labelMat[j]*self.labelMat[i]*(alphaJold-self.alphas[j]) 175 self.updataEk(i)#更新新出现的ai 176 b1 = self.b - Ei - self.labelMat[i] *(self.alphas[i] - alphaIold)* 177 self.K[i,i] - self.labelMat[j]*(self.alphas[j]-alphaJold)*self.K[i,j] 178 b2 = self.b - Ej - self.labelMat[i] *(self.alphas[i] - alphaIold)* 179 self.K[i,j] - self.labelMat[j]*(self.alphas[j]-alphaJold)*self.K[j,j] 180 if (self.alphas[i]>0 and self.alphas[i]<self.C): self.b = b1 181 elif (self.alphas[j]>0 and self.alphas[j]<self.C): self.b = b2 182 else:self.b = (b1+b2)/2.0 183 return 1 184 else: 185 return 0 186 187 def smoP(dataMatIn, classLabels, C, toler, maxIter, kTup): # full Platt SMO 188 self = optStruct(np.mat(dataMatIn), np.mat(classLabels).transpose(), C, toler, kTup) 189 iter = 0 190 entireSet = True; 191 alphaPairsChanged = 0 192 while (iter < maxIter) and ((alphaPairsChanged > 0) or (entireSet)): 193 alphaPairsChanged = 0 194 if entireSet: # go over all 195 for i in range(self.m): 196 alphaPairsChanged += self.innerL(i) 197 print('fullSet iter:%d, i=%d, pair change:%d' % (iter, i, alphaPairsChanged)) 198 iter += 1 199 else: # go over non-bound (railed) alphas 200 nonBoundIs = np.nonzero((self.alphas.A > 0) * (self.alphas.A < C))[0] 201 for i in nonBoundIs: 202 alphaPairsChanged += self.innerL(i) 203 print('nonBound iter:%d, i=%d, pair change:%d' % (iter, i, alphaPairsChanged)) 204 iter += 1 205 if entireSet: 206 entireSet = False # toggle entire set loop 207 elif (alphaPairsChanged == 0): 208 entireSet = True 209 print("iteration number: %d" % iter) 210 return self.b, self.alphas 211 #生成核函数,注意这里核函数的计算公式,见博文对其进行说明! 212 def kernelTrans(X,A,kTup): 213 m,n = X.shape 214 K = np.mat(np.zeros([m,1])) 215 if (kTup[0]=='lin'):K = X*A.T 216 elif(kTup[0]=='rbf'): 217 for j in range(m): 218 deltaRow = X[j,:] - A 219 K[j] = deltaRow * deltaRow.T 220 K = np.exp(K/(-1*kTup[1]**2)) 221 else:raise NameError('Houston We have a problem') 222 return K 223 def testRbf(k1 = 1.3): 224 dataArr, labelArr, dataLbel = loadDataSet('testSetRBF.txt') 225 b, alphas = smoP(dataArr,labelArr,200,0.0001,10000,('rbf',k1)) 226 dataMat = np.mat(dataArr) 227 labelMat = np.mat(labelArr).T 228 svInd = np.nonzero(alphas.A>0)[0]#支持向量a 229 sVs = dataMat[svInd]#支持向量X 230 labelSV = labelMat[svInd] 231 print("There are %d Support Vector"%(sVs.shape[0])) 232 m, n = dataMat.shape 233 errorCount = 0 234 for i in range(m): 235 kernelEval = kernelTrans(sVs,dataMat[i,:],('rbf',k1)) 236 predict = kernelEval.T * np.multiply(labelSV,alphas[svInd])+b 237 if(np.sign(predict)!=np.sign(labelArr[i])):errorCount+=1 238 print("The training error is: %f"%(float(errorCount/m))) 239 dataArr, labelArr, datalabel = loadDataSet('testSetRBF2.txt') 240 errorCount = 0 241 dataMat = np.mat(dataArr) 242 labelMat = np.mat(labelArr).T 243 m, n = dataMat.shape 244 for i in range(m): 245 kernelEval = kernelTrans(sVs, dataMat[i,:], ('rbf', k1)) 246 predict = kernelEval.T * np.multiply(labelSV, alphas[svInd]) + b 247 if (np.sign(predict) != np.sign(labelArr[i])): errorCount += 1 248 print("The test error is: %f" %(float(errorCount / m))) 249 250 if __name__ == '__main__': 251 252 testRbf(1.3)

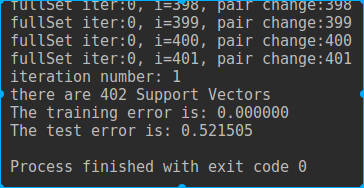

2.1.4手写数字识别测试

1 import numpy as np 2 import matplotlib.pyplot as plt 3 4 #预处理数据 5 def loadDataSet(fileName): 6 dataMat = [] 7 labelMat = [] 8 fr = open(fileName,'r') 9 for line in fr.readlines(): 10 lineArr = line.strip().split(' ') 11 dataMat.append([float(lineArr[0]),float(lineArr[1])]) 12 labelMat.append(float(lineArr[2])) 13 a = np.mat(dataMat) 14 b = np.mat(labelMat).T 15 DataLabel = np.array(np.hstack((a,b))) 16 return dataMat, labelMat, DataLabel 17 #随机 18 def selectJrand(i,m): 19 j = i 20 while(j==i): 21 j = int(np.random.uniform(0,m)) 22 return j 23 #约束之后的aj 24 def clipAlpha(aj,H,L): 25 if aj>H: 26 aj = H 27 elif aj<L: 28 aj = L 29 return aj 30 #C:松弛系数 31 #maxIter:迭代次数 32 def smselfimple(dataMatIn,classLabels,C,toler,maxIter): 33 dataMatraix = np.mat(dataMatIn) 34 labelMatraix = np.mat(classLabels).transpselfe() 35 b =0 36 m,n = dataMatraix.shape 37 alphas = np.mat(np.zerself((m,1)))#系数都初始化为0 38 iter = 0 39 while(iter<maxIter):#大循环是最大迭代次数 40 alphaPairsChange = 0 41 for i in range(m):#样本训练 42 #预测值,具体看博客手写图片 43 fXi = float(np.multiply(alphas,labelMatraix).T*(dataMatraix*dataMatraix[i,:].T))+b 44 #误差 45 Ei = fXi - float(labelMatraix[i]) 46 #满足条件才能更新a,b的值,感觉这里的labelMat[i]不影响结果 47 if(((labelMatraix[i]*Ei<-toler) and (alphas[i]<C)) 48 or ((labelMatraix[i]*Ei)>toler) and (alphas[i]>0)): 49 j = selectJrand(i,m)#随机选择一个不同于i的[0,m] 50 fXj = float(np.multiply(alphas,labelMatraix).transpselfe() 51 *(dataMatraix*dataMatraix[j,:].T))+b 52 Ej = fXj - float(labelMatraix[j]) 53 alphaIold = alphas[i].copy() 54 alphaJold = alphas[j].copy() 55 if (labelMatraix[i] != labelMatraix[j]): 56 L = max(0,alphas[j]-alphas[i]) 57 H = min(C,C+alphas[j]-alphas[i]) 58 else: 59 L = max(0,alphas[i]+alphas[j]-C) 60 H = min(C,alphas[i]+alphas[j]) 61 if (L==H): 62 print('L==H') 63 continue 64 #计算 65 eta = 2.0*dataMatraix[i,:]*dataMatraix[j,:].T 66 - dataMatraix[i,:]*dataMatraix[i,:].T 67 - dataMatraix[j,:]*dataMatraix[j,:].T 68 if (eta>0): 69 print("eta>0") 70 continue 71 #更新a的新值j 72 alphas[j] -= labelMatraix[j]*(Ei - Ej)/eta 73 #修剪aj 74 alphas[j] = clipAlpha(alphas[j],H,L) 75 if (abs(alphas[j] - alphaJold) < 0.00001): 76 print("aj not moving") 77 #更新ai 78 alphas[i] += labelMatraix[j]*labelMatraix[i]*(alphaJold - alphas[j]) 79 #更新b1,b2 80 b1 = b - Ei - labelMatraix[i]*(alphas[i]-alphaIold)*dataMatraix[i,:] 81 *dataMatraix[i,:].T - labelMatraix[j]*(alphas[j]-alphaJold)*dataMatraix[i,:]*dataMatraix[j,:].T 82 b2 = b - Ej - labelMatraix[i]*(alphas[i] - alphaIold)*dataMatraix[i,:] 83 * dataMatraix[j, :].T - labelMatraix[j]*(alphas[j] - alphaJold) * dataMatraix[j,:] * dataMatraix[j,:].T 84 #通过b1和b2计算b 85 if (0< alphas[i] <C): b = b1 86 elif (0<alphas[j]<C): b = b2 87 else: b = (b1+b2)/2 88 #计算更新次数,用来判断是否训练数据是否全部达标 89 alphaPairsChange += 1 90 print("iter: %d i:%d pairs:%d "%(iter,i,alphaPairsChange)) 91 #连续的达标次数 92 if(alphaPairsChange==0): iter +=1 93 else: iter = 0 94 print("iteration number: %d"%(iter)) 95 return b, alphas 96 97 #分类函数 98 def calsWs(alphas,dataArr,classLabels): 99 X = np.mat(dataArr) 100 labelMat = np.mat(classLabels).T 101 alphas = np.mat(np.array(alphas).reshape((1,100))).T 102 w = np.sum(np.multiply(np.multiply(alphas,labelMat),X),axis=0) 103 return w 104 #文本转化为int 105 def img2vector(filename): 106 returnVect = np.zeros((1,1024)) 107 fr = open(filename) 108 for i in range(32): 109 lineStr = fr.readline() 110 for j in range(32): 111 returnVect[0,32*i+j] = int(lineStr[j]) 112 return returnVect 113 #完整版SMO 114 class optStruct: 115 def __init__(self,dataMatIn,classLabel,C,toler,kTup): 116 self.X = dataMatIn 117 self.labelMat = classLabel 118 self.C = C 119 self.tol = toler 120 self.m = np.shape(dataMatIn)[0] 121 self.alphas = np.mat(np.zeros((self.m,1))) 122 self.b = 0 123 self.eCache = np.mat(np.zeros((self.m,2)))#存储误差,用于启发式搜索 124 self.K = np.mat(np.zeros((self.m,self.m)))#存储核函数转化后的dataMatIn 125 for i in range(self.m): 126 self.K[:,i] = kernelTrans(self.X,self.X[i,:],kTup) 127 def clacEk(self,k): 128 #计算当前值 129 fXk = float(np.multiply(self.alphas,self.labelMat).T*self.K[:,k]+ self.b) 130 Ek = fXk - float(self.labelMat[k]) 131 return Ek 132 def selecJ(self,i,Ei): 133 maxK = -1 134 maxDeltaE = 0 135 Ej = 0 136 self.eCache[i] = [1,Ei]#存储偏差 137 #A代表mat转换成array类型,nonzero返回非零元素的下标 138 validEcacheList = np.nonzero(self.eCache[:,0].A)[0] 139 if (len(validEcacheList)>1):#启发式选择 140 for k in validEcacheList: 141 if (k==i):continue#k不能等于i 142 Ek = self.clacEk(k)#计算绝对偏差 143 deltaE = abs(Ei - Ek)#相对偏差 144 if (deltaE >maxDeltaE): 145 maxK = k 146 maxDeltaE = deltaE 147 Ej = Ek 148 return maxK, Ej 149 150 else: 151 j = selectJrand(i,self.m)#随机选择 152 Ej = self.clacEk(j)#随机绝对偏差 153 return j,Ej 154 def updataEk(self,k): 155 Ek = self.clacEk(k) 156 self.eCache[k] = [k,Ek] 157 def innerL(self,i): 158 Ei = self.clacEk(i) 159 if ((self.labelMat[i]*Ei<-self.tol and self.alphas[i]<self.C) 160 or(self.labelMat[i]*Ei>self.tol and self.alphas[i]>0)): 161 j,Ej = self.selecJ(i,Ei)#选择J 162 alphaIold = self.alphas[i].copy() 163 alphaJold = self.alphas[j].copy() 164 #计算L和H的值 165 if (self.labelMat[i] != self.labelMat[j]): 166 L = max(0,self.alphas[j]-self.alphas[i]) 167 H = min(self.C,self.C+self.alphas[j]-self.alphas[i]) 168 else: 169 L = max(0,self.alphas[j]+self.alphas[i]-self.C) 170 H = min(self.C,self.alphas[i] +self.alphas[j]) 171 if (L==H): return 0 172 #eta = 2.0* self.X[i,:]*self.X[j,:].T - self.X[i,:]*self.X[i,:].T - 173 # self.X[j,:]*self.X[j,:].T 174 eta = 2.0*self.K[i,j] - self.K[i,i] - self.K[j,j]#在此应用核函数 175 if (eta>=0): return 0 176 self.alphas[j] -= self.labelMat[j] * (Ei - Ej)/eta 177 self.alphas[j] = clipAlpha(self.alphas[j],H,L) 178 #更新新出现的aj 179 self.updataEk(j) 180 if (abs(self.alphas[j] - alphaJold)<0.00001): 181 print('J not move') 182 self.alphas[i] += self.labelMat[j]*self.labelMat[i]*(alphaJold-self.alphas[j]) 183 self.updataEk(i)#更新新出现的ai 184 b1 = self.b - Ei - self.labelMat[i] *(self.alphas[i] - alphaIold)* 185 self.K[i,i] - self.labelMat[j]*(self.alphas[j]-alphaJold)*self.K[i,j] 186 b2 = self.b - Ej - self.labelMat[i] *(self.alphas[i] - alphaIold)* 187 self.K[i,j] - self.labelMat[j]*(self.alphas[j]-alphaJold)*self.K[j,j] 188 if (self.alphas[i]>0 and self.alphas[i]<self.C): self.b = b1 189 elif (self.alphas[j]>0 and self.alphas[j]<self.C): self.b = b2 190 else:self.b = (b1+b2)/2.0 191 return 1 192 else: 193 return 0 194 195 def smoP(dataMatIn, classLabels, C, toler, maxIter, kTup): # full Platt SMO 196 self = optStruct(np.mat(dataMatIn), np.mat(classLabels).transpose(), C, toler, kTup) 197 iter = 0 198 entireSet = True; 199 alphaPairsChanged = 0 200 while (iter < maxIter) and ((alphaPairsChanged > 0) or (entireSet)): 201 alphaPairsChanged = 0 202 if entireSet: # go over all 203 for i in range(self.m): 204 alphaPairsChanged += self.innerL(i) 205 print('fullSet iter:%d, i=%d, pair change:%d' % (iter, i, alphaPairsChanged)) 206 iter += 1 207 else: # go over non-bound (railed) alphas 208 nonBoundIs = np.nonzero((self.alphas.A > 0) * (self.alphas.A < C))[0] 209 for i in nonBoundIs: 210 alphaPairsChanged += self.innerL(i) 211 print('nonBound iter:%d, i=%d, pair change:%d' % (iter, i, alphaPairsChanged)) 212 iter += 1 213 if entireSet: 214 entireSet = False # toggle entire set loop 215 elif (alphaPairsChanged == 0): 216 entireSet = True 217 print("iteration number: %d" % iter) 218 return self.b, self.alphas 219 #生成核函数,注意这里核函数的计算公式,见博文对其进行说明! 220 def kernelTrans(X,A,kTup): 221 m,n = X.shape 222 K = np.mat(np.zeros([m,1])) 223 if (kTup[0]=='lin'):K = X*A.T 224 elif(kTup[0]=='rbf'): 225 for j in range(m): 226 deltaRow = X[j,:] - A 227 K[j] = deltaRow * deltaRow.T 228 K = np.exp(K/(-1*kTup[1]**2)) 229 else:raise NameError('Houston We have a problem') 230 return K 231 232 def testRbf(k1 = 1.3): 233 dataArr, labelArr, dataLbel = loadDataSet('testSetRBF.txt') 234 b, alphas = smoP(dataArr,labelArr,200,0.0001,10000,('rbf',k1)) 235 dataMat = np.mat(dataArr) 236 labelMat = np.mat(labelArr).T 237 svInd = np.nonzero(alphas.A>0)[0]#支持向量a 238 sVs = dataMat[svInd]#支持向量X 239 labelSV = labelMat[svInd] 240 print("There are %d Support Vector"%(sVs.shape[0])) 241 m, n = dataMat.shape 242 errorCount = 0 243 for i in range(m): 244 kernelEval = kernelTrans(sVs,dataMat[i,:],('rbf',k1)) 245 predict = kernelEval.T * np.multiply(labelSV,alphas[svInd])+b 246 if(np.sign(predict)!=np.sign(labelArr[i])):errorCount+=1 247 print("The training error is: %f"%(float(errorCount/m))) 248 dataArr, labelArr, datalabel = loadDataSet('testSetRBF2.txt') 249 errorCount = 0 250 dataMat = np.mat(dataArr) 251 labelMat = np.mat(labelArr).T 252 m, n = dataMat.shape 253 for i in range(m): 254 kernelEval = kernelTrans(sVs, dataMat[i,:], ('rbf', k1)) 255 predict = kernelEval.T * np.multiply(labelSV, alphas[svInd]) + b 256 if (np.sign(predict) != np.sign(labelArr[i])): errorCount += 1 257 print("The test error is: %f" %(float(errorCount / m))) 258 259 def loadImage(dirName): 260 from os import listdir 261 hwLabel = [] 262 trainingFileList = listdir(dirName) 263 m = len(trainingFileList) 264 trainingMat = np.zeros((m,1024)) 265 for i in range(m): 266 fileNameStr = trainingFileList[i] 267 fileStr = fileNameStr.split('.')[0] 268 classNumStr = int(fileStr.split('_')[0]) 269 if (classNumStr==9): 270 hwLabel.append(-1) 271 else:hwLabel.append(1) 272 trainingMat[i,:] = img2vector('%s/%s'%(dirName,fileNameStr)) 273 return trainingMat, np.array(hwLabel) 274 275 def testDigits(kTup = ('rbf',10)): 276 dataArr, labelArr = loadImage('trainingDigits') 277 b, alphas = smoP(dataArr,labelArr,200,0.0001,10000,kTup) 278 dataMat = np.mat(dataArr);labelMat = np.mat(labelArr).T 279 svInd = np.nonzero(alphas.A>0)[0] 280 sVs = dataMat[svInd] 281 labelSV = labelMat[svInd] 282 print("there are %d Support Vectors"%(int(sVs.shape[0]))) 283 m,n = dataMat.shape 284 errorCount = 0 285 for i in range(m): 286 kernelEval = kernelTrans(sVs,dataMat[i,:],kTup) 287 predict = kernelEval.T * np.multiply(labelSV,alphas[svInd]) + b 288 if (np.sign(predict)!=np.sign(labelArr[i])):errorCount+=1 289 print("The training error is: %f"%(float((errorCount)/m))) 290 dataArr, labelArr = loadImage('testDigits') 291 errorCount = 0 292 dataMat = np.mat(dataArr);labelMat = np.mat(labelArr).T 293 m, n = dataMat.shape 294 for i in range(m): 295 kernelEval = kernelTrans(sVs, dataMat[i, :], kTup) 296 predict = kernelEval.T * np.multiply(labelSV, alphas[svInd]) + b 297 if (np.sign(predict) != np.sign(labelArr[i])): errorCount += 1 298 print("The test error is: %f" % (float((errorCount) / m)))

三.参考文献

参考:

注释:以下是参考链接里面的内容,按以下中文描述排列!都是大神的结晶,没有主观次序。

点到平面距离、拉格朗日二次优化、二次曲线公式推导、SMO公式推导

https://www.cnblogs.com/graphics/archive/2010/07/10/1774809.html

http://www.cnblogs.com/90zeng/p/Lagrange_duality.html