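Sharding is the process of splitting a database and distributing it across multiple machines. Spreading the data out lets you store more data and handle a heavier load without needing a single powerful server. The basic idea is to cut a collection into small chunks and distribute those chunks over several shards, so that each shard is responsible for only part of the total data; a balancer then evens out the shards by migrating chunks between them. Clients do not talk to the shards directly but go through a routing process called mongos, which knows which data lives on which shard (it gets this mapping from the config servers). Most deployments adopt sharding to solve a disk-space problem. Write performance can actually get worse, and queries should avoid spanning shards wherever possible. Sharding is worth considering when: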

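1. The machine is running out of disk space. Sharding solves the disk-space problem.
2. A single mongod can no longer meet the write-performance requirements. Sharding spreads the write load over the shards, so each shard server uses its own resources.
3. You want to keep a large amount of data in memory to improve performance. As above, sharding lets each shard server contribute its own resources.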
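The balancing step mentioned above can be pictured with a small sketch. This is a deliberately simplified illustration written for Node.js, not MongoDB's actual balancer: the function name `balance`, the `threshold` parameter, and the starting chunk counts are all assumptions made for the example. It repeatedly moves one chunk from the shard holding the most chunks to the shard holding the fewest until the difference is within the threshold.

```javascript
// Simplified illustration of chunk balancing; NOT MongoDB's real algorithm.
// `chunkCounts` maps a shard name to the number of chunks it currently holds.
function balance(chunkCounts, threshold = 1) {
  const limit = Math.max(1, threshold); // a limit below 1 would never settle
  const counts = { ...chunkCounts };
  const migrations = [];
  for (;;) {
    // Order shard names from fewest chunks to most chunks.
    const shards = Object.keys(counts).sort((a, b) => counts[a] - counts[b]);
    const least = shards[0];
    const most = shards[shards.length - 1];
    if (counts[most] - counts[least] <= limit) break;
    // "Migrate" one chunk from the fullest shard to the emptiest one.
    counts[most] -= 1;
    counts[least] += 1;
    migrations.push({ from: most, to: least });
  }
  return { counts, migrations };
}

// Hypothetical starting point: every chunk sits on shard2.
const result = balance({ shard1: 0, shard2: 6, shard3: 0 });
console.log(result.counts);
console.log(result.migrations.length);
```

The real balancer also considers chunk size, zones, and migration cost, so treat this only as a mental model for why chunk counts even out over time.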

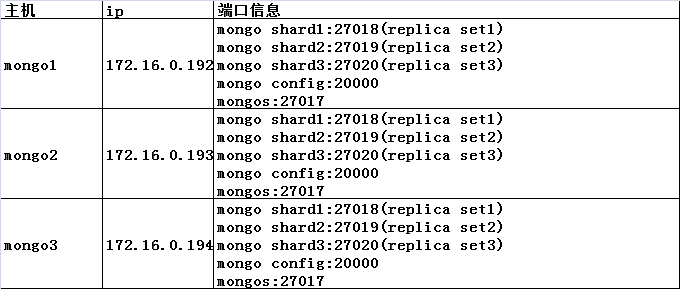

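A MongoDB sharded cluster needs three roles: shards, which store the data; config servers, which store the cluster metadata; and mongos routers, which route client requests to the right shard.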

1. Install MongoDB (on all three machines; this walkthrough uses version 4.0.3)

Download: https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-4.0.3.tgz

Extract the archive, then rename the extracted directory:

    [mongo@mongo1 ~]$ tar -zxvf mongodb-linux-x86_64-4.0.3.tgz
    [mongo@mongo1 ~]$ mv mongodb-linux-x86_64-4.0.3 mongodb

2. Create the directories the cluster needs (on each of the three machines, following the server plan above)

    [mongo@mongo1 ~]$ mkdir services
    [mongo@mongo1 ~]$ mv mongodb services/
    [mongo@mongo1 ~]$ mkdir -p services/shard1 services/shard1/db services/shard2 services/shard2/db services/shard3 services/shard3/db services/configsvr services/configsvr/db services/mongos

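3. Configure the replica sets

Change into the services directory:

    [mongo@mongo1 ~]$ cd services/

Run steps (1) to (4) on each of the three machines. Pay attention to the bindIp setting in the configuration files: it must be set to each machine's own address.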

(1) Configure and start shard1

    [mongo@mongo1 services]$ vi shard1/mongod.conf

    # shard1 mongod.conf
    storage:
      dbPath: /home/mongo/services/shard1/db
      journal:
        enabled: true
    systemLog:
      destination: file
      logAppend: true
      path: /home/mongo/services/shard1/mongod.log
    net:
      port: 27018
      bindIp: 172.16.0.192
    processManagement:
      fork: true
      pidFilePath: /home/mongo/services/shard1/pid
    replication:
      replSetName: shard1
    sharding:
      clusterRole: shardsvr

Start the mongod process and check that it comes up. If it fails, look at the configured mongod.log and correct the configuration file according to the errors logged there.

    [mongo@mongo1 services]$ mongodb/bin/mongod -f shard1/mongod.conf
    about to fork child process, waiting until server is ready for connections.
    forked process: 19834
    child process started successfully, parent exiting

(2) Configure and start shard2

    [mongo@mongo1 services]$ vi shard2/mongod.conf

    # shard2 mongod.conf
    storage:
      dbPath: /home/mongo/services/shard2/db
      journal:
        enabled: true
    systemLog:
      destination: file
      logAppend: true
      path: /home/mongo/services/shard2/mongod.log
    net:
      port: 27019
      bindIp: 172.16.0.192
    processManagement:
      fork: true
      pidFilePath: /home/mongo/services/shard2/pid
    replication:
      replSetName: shard2
    sharding:
      clusterRole: shardsvr

As for shard1, bindIp must be the machine's own address (172.16.0.192 on mongo1). With 127.0.0.1 the other replica set members and mongos could not connect to this instance.

Start the mongod process and check that it comes up. If it fails, look at the configured mongod.log and correct the configuration file according to the errors logged there.

    [mongo@mongo1 services]$ mongodb/bin/mongod -f shard2/mongod.conf
    about to fork child process, waiting until server is ready for connections.
    forked process: 27144
    child process started successfully, parent exiting

(3) Configure and start shard3

    [mongo@mongo1 services]$ vi shard3/mongod.conf

    # shard3 mongod.conf
    storage:
      dbPath: /home/mongo/services/shard3/db
      journal:
        enabled: true
    systemLog:
      destination: file
      logAppend: true
      path: /home/mongo/services/shard3/mongod.log
    net:
      port: 27020
      bindIp: 172.16.0.192
    processManagement:
      fork: true
      pidFilePath: /home/mongo/services/shard3/pid
    replication:
      replSetName: shard3
    sharding:
      clusterRole: shardsvr

Here too, bindIp is the machine's own address rather than 127.0.0.1, so that the other machines can reach the instance.

Start the mongod process:

    [mongo@mongo1 services]$ mongodb/bin/mongod -f shard3/mongod.conf
    about to fork child process, waiting until server is ready for connections.
    forked process: 1101
    child process started successfully, parent exiting

(4) Configure and start the config server

    [mongo@mongo1 services]$ vi configsvr/cfg.conf

    # configsvr cfg.conf
    storage:
      dbPath: /home/mongo/services/configsvr/db
      journal:
        enabled: true
    systemLog:
      destination: file
      logAppend: true
      path: /home/mongo/services/configsvr/mongod.log
    net:
      port: 20000
      bindIp: 172.16.0.192
    processManagement:
      fork: true
      pidFilePath: /home/mongo/services/configsvr/pid
    replication:
      replSetName: cfg
    sharding:
      clusterRole: configsvr

The config server also binds to the machine's own address rather than 127.0.0.1, because mongos and the other config servers connect to it over the network.

Start the mongod process:

    [mongo@mongo1 services]$ mongodb/bin/mongod -f configsvr/cfg.conf
    about to fork child process, waiting until server is ready for connections.
    forked process: 7940
    child process started successfully, parent exiting
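The four configuration files in steps (1) to (4) differ only in their directory, port, replica set name, and cluster role, plus the per-machine bindIp. A small generator can reduce copy-and-paste mistakes. The sketch below is written for Node.js; `renderMongodConf`, the `instances` table, and the `baseDir` default are names invented for this example, and the output simply mirrors the layout of the files shown above.

```javascript
// Hypothetical helper: renders a mongod config file in the layout used above.
function renderMongodConf({ dir, replSetName, port, role, bindIp, baseDir = "/home/mongo/services" }) {
  return [
    "storage:",
    `  dbPath: ${baseDir}/${dir}/db`,
    "  journal:",
    "    enabled: true",
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    `  path: ${baseDir}/${dir}/mongod.log`,
    "net:",
    `  port: ${port}`,
    `  bindIp: ${bindIp}`,
    "processManagement:",
    "  fork: true",
    `  pidFilePath: ${baseDir}/${dir}/pid`,
    "replication:",
    `  replSetName: ${replSetName}`,
    "sharding:",
    `  clusterRole: ${role}`,
  ].join("\n") + "\n";
}

// The instances every machine runs, taken from the steps above.
const instances = [
  { dir: "shard1", replSetName: "shard1", port: 27018, role: "shardsvr" },
  { dir: "shard2", replSetName: "shard2", port: 27019, role: "shardsvr" },
  { dir: "shard3", replSetName: "shard3", port: 27020, role: "shardsvr" },
  { dir: "configsvr", replSetName: "cfg", port: 20000, role: "configsvr" },
];

// Render the shard2 file for the machine whose address is 172.16.0.192.
const shard2Conf = renderMongodConf({ ...instances[1], bindIp: "172.16.0.192" });
console.log(shard2Conf);
```

Only `bindIp` changes from machine to machine, so the same table can be rendered once per host address.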

(5) Stop the firewall, or open the ports used above in the firewall

The command for stopping the firewall differs between Linux distributions; this setup uses CentOS 7.

    [mongo@mongo1 services]$ systemctl stop firewalld
    ==== AUTHENTICATING FOR org.freedesktop.systemd1.manage-units ===
    Authentication is required to manage system services or units.
    Authenticating as: root
    Password:
    ==== AUTHENTICATION COMPLETE ===

(6) Initialize the shard replica sets

Connect to one of the shard1 instances and configure the shard1 replica set:

    [mongo@mongo1 services]$ mongodb/bin/mongo 172.16.0.194:27018
    MongoDB shell version v4.0.3
    connecting to: mongodb://172.16.0.194:27018/test
    Implicit session: session { "id" : UUID("d24db7a9-132f-4db4-8b20-0a082f72486d") }
    MongoDB server version: 4.0.3
    Server has startup warnings:
    2018-10-30T23:41:10.942+0800 I CONTROL [initandlisten]
    2018-10-30T23:41:10.942+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
    2018-10-30T23:41:10.942+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
    2018-10-30T23:41:10.942+0800 I CONTROL [initandlisten]
    2018-10-30T23:41:10.942+0800 I CONTROL [initandlisten]
    2018-10-30T23:41:10.943+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
    2018-10-30T23:41:10.943+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2018-10-30T23:41:10.943+0800 I CONTROL [initandlisten]
    2018-10-30T23:41:10.943+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
    2018-10-30T23:41:10.943+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2018-10-30T23:41:10.943+0800 I CONTROL [initandlisten]
    >
    > rs.initiate({
    ...     "_id":"shard1",
    ...     "members":[
    ...         {
    ...             "_id":0,
    ...             "host":"172.16.0.192:27018"
    ...         },
    ...         {
    ...             "_id":1,
    ...             "host":"172.16.0.193:27018"
    ...         },
    ...         {
    ...             "_id":2,
    ...             "host":"172.16.0.194:27018"
    ...         }
    ...     ]
    ... })
    {
        "ok" : 1,
        "operationTime" : Timestamp(1540915476, 1),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540915476, 1),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }

Connect to one of the shard2 instances and configure the shard2 replica set. The replica set name and ports must match the shard2 configuration (shard2, port 27019):

    [mongo@mongo1 services]$ mongodb/bin/mongo 172.16.0.193:27019

    > rs.initiate({
    ...     "_id":"shard2",
    ...     "members":[
    ...         {
    ...             "_id":0,
    ...             "host":"172.16.0.192:27019"
    ...         },
    ...         {
    ...             "_id":1,
    ...             "host":"172.16.0.193:27019"
    ...         },
    ...         {
    ...             "_id":2,
    ...             "host":"172.16.0.194:27019"
    ...         }
    ...     ]
    ... })

Connect to one of the shard3 instances and configure the shard3 replica set:

    [mongo@mongo1 services]$ mongodb/bin/mongo 172.16.0.192:27020

    > rs.initiate({
    ...     "_id":"shard3",
    ...     "members":[
    ...         {
    ...             "_id":0,
    ...             "host":"172.16.0.192:27020"
    ...         },
    ...         {
    ...             "_id":1,
    ...             "host":"172.16.0.193:27020"
    ...         },
    ...         {
    ...             "_id":2,
    ...             "host":"172.16.0.194:27020"
    ...         }
    ...     ]
    ... })

(7) Initialize the config server replica set

Connect to one of the config server instances and configure the cfg replica set:

    [mongo@mongo1 services]$ mongodb/bin/mongo 172.16.0.193:20000

    > rs.initiate({
    ...     "_id":"cfg",
    ...     "members":[
    ...         {
    ...             "_id":0,
    ...             "host":"172.16.0.192:20000"
    ...         },
    ...         {
    ...             "_id":1,
    ...             "host":"172.16.0.193:20000"
    ...         },
    ...         {
    ...             "_id":2,
    ...             "host":"172.16.0.194:20000"
    ...         }
    ...     ]
    ... })
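The four rs.initiate() documents above share one shape: the replica set name as `_id`, and one member per machine on that set's port. Because they are easy to mix up when typed by hand, a tiny builder may help. This is a sketch for Node.js; `replicaSetConfig` and the `hosts` array are names made up for the example, while the addresses and ports are the ones used in this walkthrough.

```javascript
// Hypothetical helper: builds the document passed to rs.initiate().
function replicaSetConfig(setName, hosts, port) {
  return {
    _id: setName,
    // One member per machine; member _id values are simply 0, 1, 2, ...
    members: hosts.map((host, i) => ({ _id: i, host: `${host}:${port}` })),
  };
}

const hosts = ["172.16.0.192", "172.16.0.193", "172.16.0.194"];

const shard2Config = replicaSetConfig("shard2", hosts, 27019);
const cfgConfig = replicaSetConfig("cfg", hosts, 20000);

console.log(JSON.stringify(shard2Config, null, 2));
console.log(JSON.stringify(cfgConfig, null, 2));
```

The printed JSON can be pasted as the argument of rs.initiate() in a shell connected to a member of the matching replica set.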

4. Configure and start the routing process (on each of the three machines; mind the bindIp value)

    [mongo@mongo1 services]$ vi mongos/mongos.conf

    # mongos mongos.conf
    systemLog:
      destination: file
      logAppend: true
      path: /home/mongo/services/mongos/mongos.log
    net:
      port: 27017
      bindIp: 172.16.0.192
    processManagement:
      fork: true
      pidFilePath: /home/mongo/services/mongos/pid
    sharding:
      configDB: cfg/172.16.0.192:20000,172.16.0.193:20000,172.16.0.194:20000

Start the routing process:

    [mongo@mongo1 services]$ mongodb/bin/mongos -f mongos/mongos.conf
    about to fork child process, waiting until server is ready for connections.
    forked process: 27963
    child process started successfully, parent exiting

5. Add the shards to the cluster

(1) From any of the three machines, connect to mongos

    [mongo@mongo3 services]$ mongodb/bin/mongo 172.16.0.192:27017

(2) Add the three shards

    mongos> sh.addShard("shard1/172.16.0.192:27018,172.16.0.193:27018,172.16.0.194:27018")
    {
        "shardAdded" : "shard1",
        "ok" : 1,
        "operationTime" : Timestamp(1540981435, 6),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540981435, 6),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }
    mongos> sh.addShard("shard2/172.16.0.192:27019,172.16.0.193:27019,172.16.0.194:27019")
    {
        "shardAdded" : "shard2",
        "ok" : 1,
        "operationTime" : Timestamp(1540981449, 1),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540981449, 1),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }
    mongos> sh.addShard("shard3/172.16.0.192:27020,172.16.0.193:27020,172.16.0.194:27020")
    {
        "shardAdded" : "shard3",
        "ok" : 1,
        "operationTime" : Timestamp(1540981455, 1),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540981455, 1),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }
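Both the configDB setting of mongos and the argument of sh.addShard() use the same string format: the replica set name, a slash, and a comma-separated list of host:port members. The sketch below (Node.js) assembles those strings from the values used in this walkthrough; `replicaSetSeed` and the `shardPorts` table are hypothetical names chosen for the example.

```javascript
// Hypothetical helper: "<replSetName>/<host1:port>,<host2:port>,..."
function replicaSetSeed(setName, hosts, port) {
  return `${setName}/${hosts.map((h) => `${h}:${port}`).join(",")}`;
}

const hosts = ["172.16.0.192", "172.16.0.193", "172.16.0.194"];
const shardPorts = { shard1: 27018, shard2: 27019, shard3: 27020 };

// Value for sharding.configDB in mongos.conf.
const configDB = replicaSetSeed("cfg", hosts, 20000);

// One sh.addShard(...) command line per shard, ready to paste into the shell.
const addShardCommands = Object.keys(shardPorts).map(
  (name) => `sh.addShard("${replicaSetSeed(name, hosts, shardPorts[name])}")`
);

console.log(configDB);
addShardCommands.forEach((cmd) => console.log(cmd));
```

Generating the strings from one table keeps the shard names and ports consistent with the replica set definitions.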

(3) Use sh.status() to check that the configuration above is correct

    mongos> sh.status()
    --- Sharding Status ---
      sharding version: {
        "_id" : 1,
        "minCompatibleVersion" : 5,
        "currentVersion" : 6,
        "clusterId" : ObjectId("5bd8849513e9f7fb57277071")
      }
      shards:
        { "_id" : "shard1", "host" : "shard1/172.16.0.192:27018,172.16.0.193:27018,172.16.0.194:27018", "state" : 1 }
        { "_id" : "shard2", "host" : "shard2/172.16.0.192:27019,172.16.0.193:27019,172.16.0.194:27019", "state" : 1 }
        { "_id" : "shard3", "host" : "shard3/172.16.0.192:27020,172.16.0.193:27020,172.16.0.194:27020", "state" : 1 }
      active mongoses:
        "4.0.3" : 3
      autosplit:
        Currently enabled: yes
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
        { "_id" : "config", "primary" : "config", "partitioned" : true }
          config.system.sessions
            shard key: { "_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard1 1
            { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
    mongos>

(4) Look at the databases in the sharded cluster

    mongos> show dbs;
    admin   0.000GB
    config  0.001GB
    mongos> db
    test
    mongos> use config
    switched to db config
    mongos> show collections
    changelog
    chunks
    collections
    lockpings
    locks
    migrations
    mongos
    shards
    tags
    transactions
    version
    mongos>

At this point the MongoDB sharded cluster is deployed. A production deployment needs further parameter tuning; the available options can be listed with --help.

6. Test the sharded cluster

(1) Create a database and insert a document into a collection

    mongos> use testShard;
    switched to db testShard
    mongos> db.users.insert({userid:1,username:"ChavinKing",city:"beijing"})
    WriteResult({ "nInserted" : 1 })
    mongos> db.users.find()
    { "_id" : ObjectId("5bd99310e1a54b9bca661075"), "userid" : 1, "username" : "ChavinKing", "city" : "beijing" }
    mongos>

(2) Check the cluster status

    mongos> sh.status();
    --- Sharding Status ---
      sharding version: {
        "_id" : 1,
        "minCompatibleVersion" : 5,
        "currentVersion" : 6,
        "clusterId" : ObjectId("5bd8849513e9f7fb57277071")
      }
      shards:
        { "_id" : "shard1", "host" : "shard1/172.16.0.192:27018,172.16.0.193:27018,172.16.0.194:27018", "state" : 1 }
        { "_id" : "shard2", "host" : "shard2/172.16.0.192:27019,172.16.0.193:27019,172.16.0.194:27019", "state" : 1 }
        { "_id" : "shard3", "host" : "shard3/172.16.0.192:27020,172.16.0.193:27020,172.16.0.194:27020", "state" : 1 }
      active mongoses:
        "4.0.3" : 3
      autosplit:
        Currently enabled: yes
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
        { "_id" : "config", "primary" : "config", "partitioned" : true }
          config.system.sessions
            shard key: { "_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard1 1
            { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
        { "_id" : "testShard", "primary" : "shard2", "partitioned" : false, "version" : { "uuid" : UUID("842f5428-9e0a-49b4-9c18-ff1c95d1bfea"), "lastMod" : 1 } }

The testShard database is not yet enabled for sharding ("partitioned" : false), and its data is stored on shard2 ("primary" : "shard2").

(3) MongoDB shards data at the collection level. Before a collection can be sharded, sharding must first be enabled for its database, so enable it for testShard:

    mongos> sh.enableSharding("testShard")
    {
        "ok" : 1,
        "operationTime" : Timestamp(1540986138, 3),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540986138, 3),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }

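(4) Create an index on the shard key field and specify the shard key for the collection

Note that ensureIndex is a deprecated alias of createIndex.

    mongos> db.users.ensureIndex({city:1})
    {
        "raw" : {
            "shard3/172.16.0.192:27020,172.16.0.193:27020,172.16.0.194:27020" : {
                "createdCollectionAutomatically" : false,
                "numIndexesBefore" : 1,
                "numIndexesAfter" : 2,
                "ok" : 1
            }
        },
        "ok" : 1,
        "operationTime" : Timestamp(1540986455, 8),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540986455, 8),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }
    mongos> sh.shardCollection("testShard.users",{city:1})
    {
        "ok" : 0,
        "errmsg" : "Please create an index that starts with the proposed shard key before sharding the collection",
        "code" : 72,
        "codeName" : "InvalidOptions",
        "operationTime" : Timestamp(1540986499, 4),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1540986499, 4),
            "signature" : {
                "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
                "keyId" : NumberLong(0)
            }
        }
    }
    mongos>

The error means that testShard.users has no index starting with the shard key. The raw section of the ensureIndex result names shard3, which is the primary shard of the test database rather than of testShard (shard2), so the index was most likely created in test because the shell was not switched to testShard. Running use testShard, creating the index there, and then repeating sh.shardCollection should resolve it; the status output in the next step shows testShard.users sharded on { "city" : 1 }.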
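The rule behind the error message can be sketched in a few lines. The code below (Node.js) is an illustration only: `indexStartsWithShardKey`, `canShard`, and the `indexes` table are invented for the example, and the table is a guess at the state of the cluster at this point, assuming the index was created in the test database. It checks field names and their order only, ignoring hashed indexes and other details the server takes into account.

```javascript
// True when the index key pattern begins with the shard key fields, in order.
function indexStartsWithShardKey(indexKey, shardKey) {
  const indexFields = Object.keys(indexKey);
  const shardFields = Object.keys(shardKey);
  if (shardFields.length > indexFields.length) return false;
  return shardFields.every((field, i) => indexFields[i] === field);
}

// ASSUMED index state per namespace (database.collection) for illustration.
const indexes = {
  "test.users": [{ _id: 1 }, { city: 1 }],
  "testShard.users": [{ _id: 1 }],
};

// A non-empty collection can be sharded only if a suitable index exists on it.
function canShard(namespace, shardKey) {
  return (indexes[namespace] || []).some((ix) => indexStartsWithShardKey(ix, shardKey));
}

console.log(canShard("testShard.users", { city: 1 })); // index lives in the wrong database
indexes["testShard.users"].push({ city: 1, userid: 1 }); // a compound index with the key as prefix
console.log(canShard("testShard.users", { city: 1 }));
```

A compound index such as { city: 1, userid: 1 } also satisfies the requirement, because the shard key is its prefix.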

(5) Check the cluster status again

    mongos> sh.status()
    --- Sharding Status ---
      sharding version: {
        "_id" : 1,
        "minCompatibleVersion" : 5,
        "currentVersion" : 6,
        "clusterId" : ObjectId("5bd8849513e9f7fb57277071")
      }
      shards:
        { "_id" : "shard1", "host" : "shard1/172.16.0.192:27018,172.16.0.193:27018,172.16.0.194:27018", "state" : 1 }
        { "_id" : "shard2", "host" : "shard2/172.16.0.192:27019,172.16.0.193:27019,172.16.0.194:27019", "state" : 1 }
        { "_id" : "shard3", "host" : "shard3/172.16.0.192:27020,172.16.0.193:27020,172.16.0.194:27020", "state" : 1 }
      active mongoses:
        "4.0.3" : 3
      autosplit:
        Currently enabled: yes
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
        { "_id" : "config", "primary" : "config", "partitioned" : true }
          config.system.sessions
            shard key: { "_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard1 1
            { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
        { "_id" : "test", "primary" : "shard3", "partitioned" : false, "version" : { "uuid" : UUID("fabfdcc3-0757-4042-be35-39efe9691f86"), "lastMod" : 1 } }
        { "_id" : "testShard", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("842f5428-9e0a-49b4-9c18-ff1c95d1bfea"), "lastMod" : 1 } }
          testShard.users
            shard key: { "city" : 1 }
            unique: false
            balancing: true
            chunks:
              shard2 1
            { "city" : { "$minKey" : 1 } } -->> { "city" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 0)
    mongos>

The testShard database is now enabled for sharding ("partitioned" : true), and its data is still stored on shard2.

(6) Insert test data into the cluster

    mongos> for(var i=1;i<1000000;i++) db.users.insert({userid:i,username:"chavin"+i,city:"beijing"})
    mongos> for(var i=1;i<1000000;i++) db.users.insert({userid:i,username:"dbking"+i,city:"changsha"})
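Each loop above sends 999,999 single-document inserts (i runs from 1 up to, but not including, 1000000), which means one round trip per document. Grouping documents into batches is usually faster. The sketch below (Node.js) only builds the batches; `makeUsers`, `toBatches`, the sample size of 10,000, and the batch size of 1,000 are assumptions chosen for the example.

```javascript
// Build test documents with the same fields as the loops above.
function makeUsers(prefix, city, from, to) {
  const docs = [];
  for (let i = from; i < to; i++) {
    docs.push({ userid: i, username: prefix + i, city: city });
  }
  return docs;
}

// Split an array into consecutive batches of at most `size` elements.
function toBatches(docs, size) {
  const batches = [];
  for (let i = 0; i < docs.length; i += size) {
    batches.push(docs.slice(i, i + size));
  }
  return batches;
}

// Small sample (10,000 documents) to keep the example quick.
const sample = makeUsers("chavin", "beijing", 1, 10001);
const batches = toBatches(sample, 1000);
console.log(batches.length, batches[0].length);

// In the mongo shell, each batch could then be sent with one call, for example:
//   batches.forEach(function (b) { db.users.insertMany(b, { ordered: false }); });
```

With `ordered: false` the server may continue past an individual failed document instead of stopping the batch at the first error.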

(7) Check the cluster status once more

    mongos> sh.status()
    --- Sharding Status ---
      sharding version: {
        "_id" : 1,
        "minCompatibleVersion" : 5,
        "currentVersion" : 6,
        "clusterId" : ObjectId("5bd8849513e9f7fb57277071")
      }
      shards:
        { "_id" : "shard1", "host" : "shard1/172.16.0.192:27018,172.16.0.193:27018,172.16.0.194:27018", "state" : 1 }
        { "_id" : "shard2", "host" : "shard2/172.16.0.192:27019,172.16.0.193:27019,172.16.0.194:27019", "state" : 1 }
        { "_id" : "shard3", "host" : "shard3/172.16.0.192:27020,172.16.0.193:27020,172.16.0.194:27020", "state" : 1 }
      active mongoses:
        "4.0.3" : 3
      autosplit:
        Currently enabled: yes
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 5
        Last reported error: Could not find host matching read preference { mode: "primary" } for set shard2
        Time of Reported error: Thu Nov 01 2018 18:22:23 GMT+0800 (CST)
        Migration Results for the last 24 hours:
          2 : Success
      databases:
        { "_id" : "config", "primary" : "config", "partitioned" : true }
          config.system.sessions
            shard key: { "_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard1 1
            { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
        { "_id" : "test", "primary" : "shard3", "partitioned" : false, "version" : { "uuid" : UUID("fabfdcc3-0757-4042-be35-39efe9691f86"), "lastMod" : 1 } }
        { "_id" : "testShard", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("842f5428-9e0a-49b4-9c18-ff1c95d1bfea"), "lastMod" : 1 } }
          testShard.users
            shard key: { "city" : 1 }
            unique: false
            balancing: true
            chunks:
              shard1 1
              shard2 1
              shard3 1
            { "city" : { "$minKey" : 1 } } -->> { "city" : "beijing" } on : shard3 Timestamp(3, 0)
            { "city" : "beijing" } -->> { "city" : "shanghai" } on : shard2 Timestamp(3, 1)
            { "city" : "shanghai" } -->> { "city" : { "$maxKey" : 1 } } on : shard1 Timestamp(2, 0)

The testShard database is sharded ("partitioned" : true), and the chunks of testShard.users are now spread across shard1, shard2, and shard3.
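To see how mongos would use the chunk ranges printed by sh.status() above, the lookup can be imitated in a few lines. This is a sketch for Node.js, not mongos code: `shardFor`, the `chunks` table, and the `MIN_KEY` / `MAX_KEY` symbols are names chosen for the example, and plain JavaScript string comparison stands in for MongoDB's ordering, which is adequate for these lowercase ASCII city names. Each chunk covers the range from its lower bound (inclusive) to its upper bound (exclusive).

```javascript
// Stand-ins for MongoDB's $minKey / $maxKey sentinels.
const MIN_KEY = Symbol("MinKey");
const MAX_KEY = Symbol("MaxKey");

// Chunk table copied from the testShard.users section of sh.status().
const chunks = [
  { min: MIN_KEY, max: "beijing", shard: "shard3" },
  { min: "beijing", max: "shanghai", shard: "shard2" },
  { min: "shanghai", max: MAX_KEY, shard: "shard1" },
];

// Lower bound is inclusive; MIN_KEY sorts before every value.
function atOrAboveMin(value, min) {
  return min === MIN_KEY || (min !== MAX_KEY && value >= min);
}

// Upper bound is exclusive; MAX_KEY sorts after every value.
function belowMax(value, max) {
  return max === MAX_KEY || (max !== MIN_KEY && value < max);
}

// Find the shard whose chunk range contains the given shard key value.
function shardFor(city) {
  const chunk = chunks.find((c) => atOrAboveMin(city, c.min) && belowMax(city, c.max));
  return chunk.shard;
}

["beijing", "changsha", "shenzhen", "anhui"].forEach((city) => {
  console.log(city, "->", shardFor(city));
});
```

Under these ranges both test values, "beijing" and "changsha", fall into the chunk on shard2. A shard key with very few distinct values limits how evenly data can spread, because a chunk that holds a single key value cannot be split further; a key with more distinct values (or a compound key) generally distributes better.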

Testing is complete.